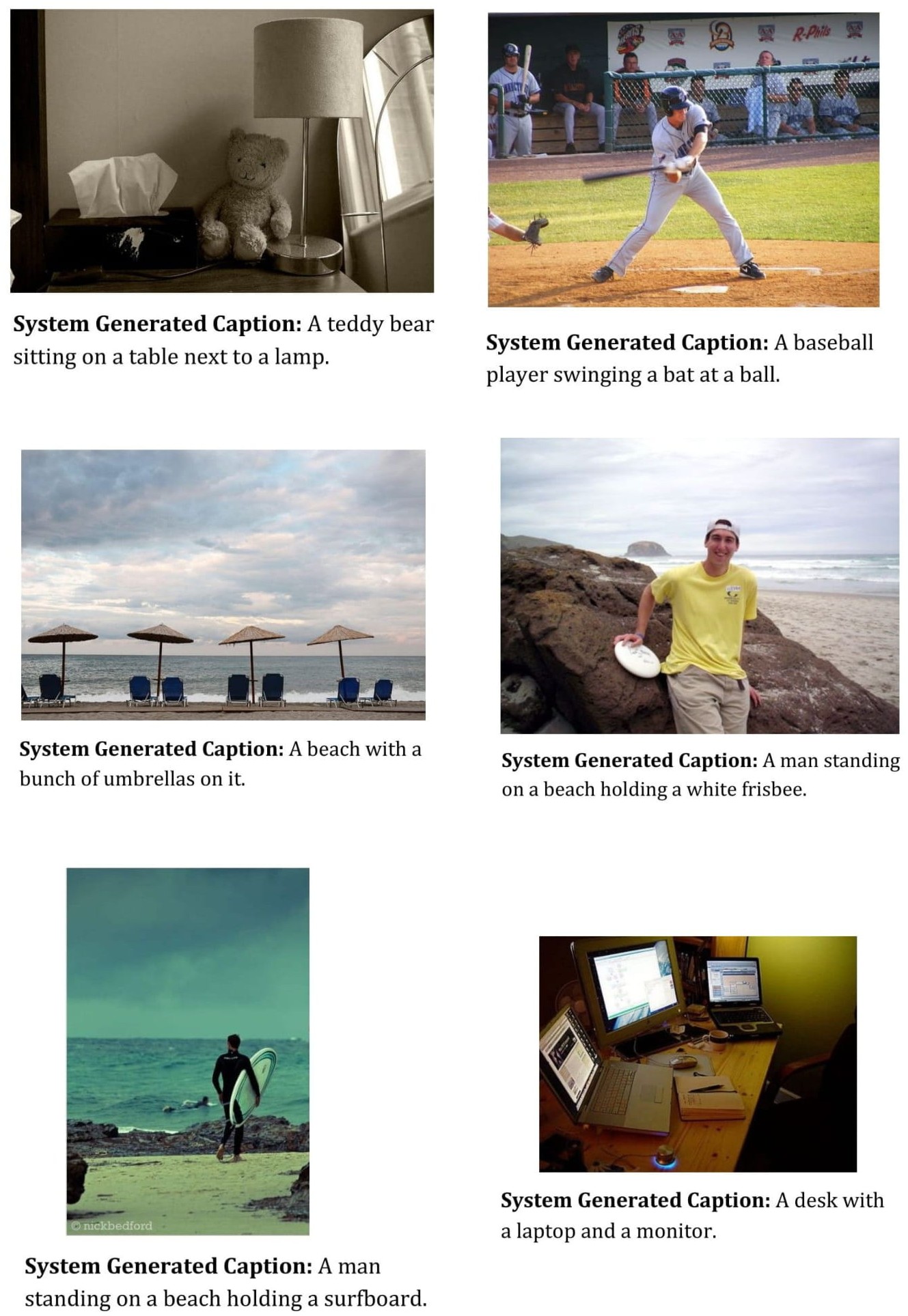

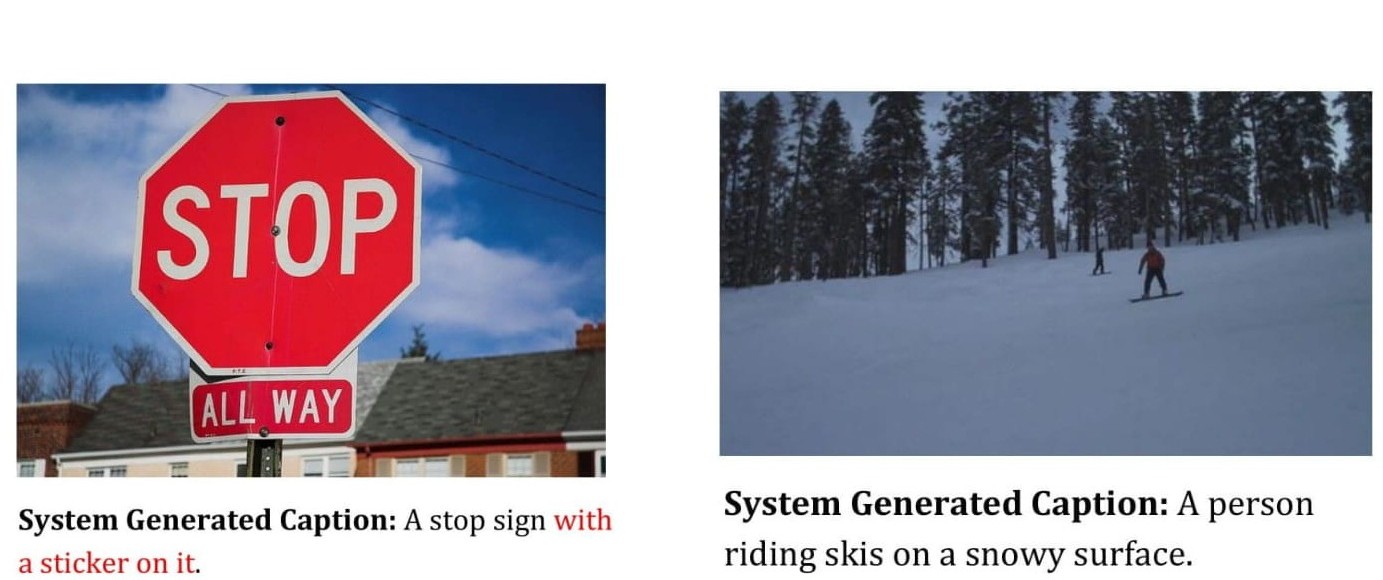

Describing an image efficiently requires extracting as much information from it as possible. Apart from detecting the objects present in an image, the topic of the image, which reflects the purpose those objects serve in the scene, is another vital piece of information that can be incorporated into the model to make the caption generation system more effective. The aim is to put extra emphasis on the context of the image, imitating the human approach. The model follows an encoder-decoder framework with an additional topic input. The method is compared with several state-of-the-art models using results obtained on the MSCOCO 2017 dataset. BLEU, CIDEr, ROUGE-L and METEOR scores are used to measure the efficacy of the model, and they show an improvement in the performance of the caption generation process.
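ROUGE-L, one of the metrics named above, rewards the longest in-order word overlap between a generated caption and its reference captions. The sketch below is illustrative only and is not the evaluation code this project uses: it tokenizes on whitespace (the COCO caption toolkit runs a PTB tokenizer first) and assumes the F-measure with β = 1.2, taking the best precision and the best recall over the references, which is our understanding of the coco-caption convention.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence of two token lists (rolling-row DP)."""
    dp = [0] * (len(b) + 1)  # dp[j] = LCS length of a[:i] and b[:j] for the current row i
    for i in range(1, len(a) + 1):
        prev_diag = 0  # value of the previous row at column j - 1
        for j in range(1, len(b) + 1):
            above = dp[j]
            if a[i - 1] == b[j - 1]:
                dp[j] = prev_diag + 1
            else:
                dp[j] = max(dp[j], dp[j - 1])
            prev_diag = above
    return dp[len(b)]


def rouge_l(candidate: str, references: List[str], beta: float = 1.2) -> float:
    """ROUGE-L F-score of one candidate against one or more references.

    Assumption: beta = 1.2 and max-over-references precision/recall, as we
    understand the coco-caption implementation. Requires at least one reference.
    """
    cand = candidate.lower().split()
    precisions, recalls = [], []
    for ref in references:
        ref_tokens = ref.lower().split()
        lcs = lcs_length(cand, ref_tokens)
        precisions.append(lcs / len(cand) if cand else 0.0)
        recalls.append(lcs / len(ref_tokens) if ref_tokens else 0.0)
    p, r = max(precisions), max(recalls)
    if p == 0 or r == 0:
        return 0.0
    return ((1 + beta ** 2) * p * r) / (r + beta ** 2 * p)
```

For example, `rouge_l("a dog", ["a cat"])` has one shared word out of two on each side, so precision and recall are both 0.5 and the score is 0.5.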

- Python 3.6
- TensorFlow
- NumPy
- NLTK
- OpenCV

- Install the packages listed in requirements.txt in a Python 3.6 environment.

- Install the stopwords package from NLTK:

$ python -m nltk.downloader stopwords

- Go to the dataset directory.

- Inside the directory, download the MSCOCO 2017 Train and Val images along with the Train/Val annotations from here, then extract them.

- Download the glove.6B.zip file from here. Then extract the file glove.6B.300d.txt from the downloaded file.
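Each line of glove.6B.300d.txt holds a word followed by its 300 space-separated vector components. A minimal loader, assuming that layout, might look like the following. load_glove and its vocab filter are hypothetical helpers, not part of this repository; the optional filter keeps memory down by retaining only words that actually occur in the captions.

```python
from typing import Dict, Iterable, List, Optional


def load_glove(path: str, vocab: Optional[Iterable[str]] = None,
               dim: int = 300) -> Dict[str, List[float]]:
    """Hypothetical helper: read a GloVe text file into {word: vector}.

    Assumes each line is '<word> <v1> ... <v_dim>' separated by single spaces.
    If vocab is given, only those words are kept.
    """
    wanted = set(vocab) if vocab is not None else None
    embeddings: Dict[str, List[float]] = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            parts = line.rstrip().split(" ")
            if len(parts) < dim + 1:
                continue  # skip malformed or empty lines
            word = " ".join(parts[:-dim])  # everything before the last `dim` fields
            if wanted is not None and word not in wanted:
                continue
            embeddings[word] = [float(x) for x in parts[-dim:]]
    return embeddings
```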

- Create a simplified version of the MSCOCO 2017 dataset:

$ python dataset/parse_coco.py
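The output format of parse_coco.py is not documented here. For orientation, the COCO caption annotation files (for example captions_train2017.json) contain an "images" list with "id" and "file_name" fields and an "annotations" list with "image_id" and "caption" fields. One plausible simplification, sketched below with hypothetical helper names, maps each image file to its reference captions.

```python
import json
from collections import defaultdict
from typing import Dict, List


def group_captions(coco: dict) -> Dict[str, List[str]]:
    """Hypothetical helper: map each image file name to its list of captions."""
    id_to_file = {img["id"]: img["file_name"] for img in coco["images"]}
    captions: Dict[str, List[str]] = defaultdict(list)
    for ann in coco["annotations"]:
        captions[id_to_file[ann["image_id"]]].append(ann["caption"].strip())
    return dict(captions)


def load_and_group(path: str) -> Dict[str, List[str]]:
    """Load a COCO captions JSON file from disk and group its captions by image."""
    with open(path, encoding="utf-8") as f:
        return group_captions(json.load(f))
```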

- Process the dataset for training the topic model:

$ python dataset/create_topic_dataset.py

- Train the LDA model:

$ python lda_model_train.py

- Train the topic model:

$ python topic_lda_model_train.py
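The steps above do not say how create_topic_dataset.py or lda_model_train.py work internally. Purely as an illustration of the technique, the sketch below turns captions into stopword-filtered bag-of-words documents and fits LDA with collapsed Gibbs sampling, returning a topic mixture per document. The small STOPWORDS set is a hypothetical stand-in for NLTK's list, and the repository may well use a library LDA implementation instead.

```python
import random
from typing import List, Sequence

# Hypothetical stand-in for NLTK's English stopword list.
STOPWORDS = {"a", "an", "the", "on", "in", "of", "with", "and", "is", "are", "at"}


def to_tokens(caption: str) -> List[str]:
    """Lowercase, replace punctuation with spaces, and drop stopwords."""
    cleaned = "".join(c if c.isalnum() or c.isspace() else " " for c in caption.lower())
    return [w for w in cleaned.split() if w not in STOPWORDS]


def lda_gibbs(docs: Sequence[List[str]], num_topics: int, iters: int = 200,
              alpha: float = 0.1, beta: float = 0.01, seed: int = 0) -> List[List[float]]:
    """Collapsed Gibbs sampling for LDA; returns one topic distribution per document."""
    rng = random.Random(seed)
    vocab = sorted({w for d in docs for w in d})
    w_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    doc_topic = [[0] * K for _ in docs]        # n_{d,k}
    topic_word = [[0] * V for _ in range(K)]   # n_{k,w}
    topic_total = [0] * K                      # n_k
    assignments = []
    for d, doc in enumerate(docs):             # random initial topic per token
        z_d = []
        for w in doc:
            k = rng.randrange(K)
            z_d.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_d)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k, v = assignments[d][i], w_id[w]
                # remove the current token's assignment from the counts
                doc_topic[d][k] -= 1
                topic_word[k][v] -= 1
                topic_total[k] -= 1
                # full conditional p(z = t | rest), up to a normalizing constant
                weights = [(doc_topic[d][t] + alpha) * (topic_word[t][v] + beta)
                           / (topic_total[t] + V * beta) for t in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][v] += 1
                topic_total[k] += 1
    # posterior mean estimate of each document's topic mixture theta_d
    return [[(doc_topic[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
            for d, doc in enumerate(docs)]
```

A per-image topic mixture like this is the kind of target a topic model could learn to predict from image features; whether topic_lda_model_train.py does exactly that is an assumption.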

- Process the dataset for training the caption model:

$ python dataset/create_caption_dataset.py --image_weights <path to the weights of the topic model>

- Train the caption model:

$ python caption_model_train.py --image_weights <path to the weights of the topic model>
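Apart from the --image_weights flag, the caption dataset format is not described above. A common way to prepare captions for an encoder-decoder model, shown here as an assumption rather than what create_caption_dataset.py does, is to wrap each caption in start/end markers, map words to integer ids, pad to a fixed length, and split it into (prefix, next word) pairs for teacher forcing. The special tokens and helper names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

START, END, PAD, UNK = "<start>", "<end>", "<pad>", "<unk>"  # hypothetical special tokens


def build_vocab(captions: Sequence[str], min_count: int = 1) -> Dict[str, int]:
    """Assign ids to special tokens first, then to words seen at least min_count times."""
    counts = Counter(w for c in captions for w in c.lower().split())
    vocab = {PAD: 0, START: 1, END: 2, UNK: 3}
    for w in sorted(w for w, n in counts.items() if n >= min_count):
        vocab[w] = len(vocab)
    return vocab


def encode(caption: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Wrap in start/end ids, map unknown words to UNK, then truncate and pad to max_len."""
    ids = [vocab[START]] + [vocab.get(w, vocab[UNK]) for w in caption.lower().split()]
    ids = (ids + [vocab[END]])[:max_len]  # note: truncation can drop the END id
    return ids + [vocab[PAD]] * (max_len - len(ids))


def teacher_forcing_pairs(ids: List[int], pad_id: int = 0) -> List[Tuple[List[int], int]]:
    """(prefix, next word) pairs for training a decoder one step at a time."""
    ids = [i for i in ids if i != pad_id]
    return [(ids[:t], ids[t]) for t in range(1, len(ids))]
```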

To evaluate without training, download the trained models and their weights from the releases page.

Generate model predictions

$ python evaluation/caption_model_predictions.py --model <path to the trained model>

The file generated by the above script is used to compute the evaluation scores below.
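The format of the predictions file is not specified here. The COCO caption evaluation code generally expects a JSON list with one {"image_id", "caption"} record per image, so a sketch of writing that format might look like this; write_coco_results is a hypothetical helper, and the project's own script may store extra fields.

```python
import json
from typing import Dict


def write_coco_results(predictions: Dict[int, str], path: str) -> None:
    """Hypothetical helper: save {image_id: caption} as a COCO-style results list."""
    results = [{"image_id": image_id, "caption": caption}
               for image_id, caption in sorted(predictions.items())]
    with open(path, "w", encoding="utf-8") as f:
        json.dump(results, f)
```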

Evaluation scores are generated using the code provided here.

- Clone the above-mentioned repository.

- Copy the directories pycocotools/ and pycocoevalcap/, and the file get_stanford_models.sh from the above repo into the evaluation/ directory.

- Copy the annotations file captions_train2017.json from the MSCOCO 2017 dataset into the evaluation/annotations/ directory.

- Create a new Python 2.7 virtual environment and activate it.

- Install the requirements:

$ pip install -r evaluation/requirements.txt

- Install Java:

$ sudo apt install default-jre

$ sudo apt install default-jdk

- Run the code in the notebook generate_evaluation_scores.ipynb to obtain the evaluation scores.

To generate a predicted caption for a single image:

$ python3 evaluation/one_step_inference.py --topic_model <path to trained topic model> --caption_model <path to trained caption model> --image <image path>
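Generating a caption for one image typically runs the decoder one word at a time. The sketch below shows greedy decoding with a hypothetical step function standing in for the trained caption model (which, in this project, would also be conditioned on the image features and the predicted topics); it is an illustration of the technique, not the code in one_step_inference.py.

```python
from typing import Callable, List, Sequence


def greedy_decode(step: Callable[[Sequence[int]], Sequence[float]],
                  start_id: int, end_id: int, max_len: int = 20) -> List[int]:
    """Repeatedly pick the most probable next word until END or max_len words.

    step(prefix) is a hypothetical model call returning a probability (or score)
    for every vocabulary id given the words generated so far.
    """
    tokens = [start_id]
    for _ in range(max_len):
        probs = step(tokens)
        next_id = max(range(len(probs)), key=probs.__getitem__)  # argmax
        if next_id == end_id:
            break
        tokens.append(next_id)
    return tokens[1:]  # drop the START id
```

Greedy decoding keeps only the single best word at each step; beam search, which keeps several partial captions, often gives better captions at a higher cost.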

| Metric | Score |
|---|---|
| BLEU-1 | 0.672 |
| BLEU-2 | 0.494 |
| BLEU-3 | 0.353 |
| BLEU-4 | 0.255 |
| CIDEr | 0.824 |
| ROUGE-L | 0.499 |
| METEOR | 0.232 |
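BLEU-n in the table combines clipped n-gram precisions for n = 1..N by a geometric mean and multiplies by a brevity penalty. The corpus-level sketch below illustrates that computation; it uses whitespace tokenization and a brevity penalty against the reference length closest to each candidate, which we believe matches the coco-caption default, so its numbers will not reproduce the table exactly (coco-caption also runs a PTB tokenizer first).

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all n-grams (as tuples) in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(candidates: List[str], references: List[List[str]], max_n: int = 4) -> float:
    """Corpus BLEU-max_n with clipped counts and a brevity penalty."""
    matches, totals = [0] * max_n, [0] * max_n
    cand_len = ref_len = 0
    for cand, refs in zip(candidates, references):
        c = cand.lower().split()
        rs = [r.lower().split() for r in refs]
        cand_len += len(c)
        # reference length closest to the candidate length (ties go to the shorter one)
        ref_len += min((abs(len(r) - len(c)), len(r)) for r in rs)[1]
        for n in range(1, max_n + 1):
            max_ref: Counter = Counter()
            for r in rs:
                max_ref |= ngrams(r, n)  # per-n-gram maximum count over references
            cand_counts = ngrams(c, n)
            matches[n - 1] += sum(min(cnt, max_ref[g]) for g, cnt in cand_counts.items())
            totals[n - 1] += max(len(c) - n + 1, 0)
    if min(matches) == 0:
        return 0.0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity = 1.0 if cand_len > ref_len else math.exp(1 - ref_len / cand_len)
    return brevity * math.exp(log_precision)
```

Clipping is what stops a degenerate caption from scoring well: "the the the" against the reference "the cat" gets a BLEU-1 of 1/3, because "the" is credited at most once.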

Publication link: https://link.springer.com/article/10.1007/s13369-019-04262-2