
- Work in Progress

- Community, discussion, contribution, and support

- License

- Getting Started

- Design

- Setting up Your Dev Environment

- Usage

- Testing

We are in the process of enabling this repo for community contribution. See wiki here.

Check the CONTRIBUTING Doc for how to contribute to the repo.

This project is licensed under the Apache License 2.0. A copy of the license can be found in LICENSE.

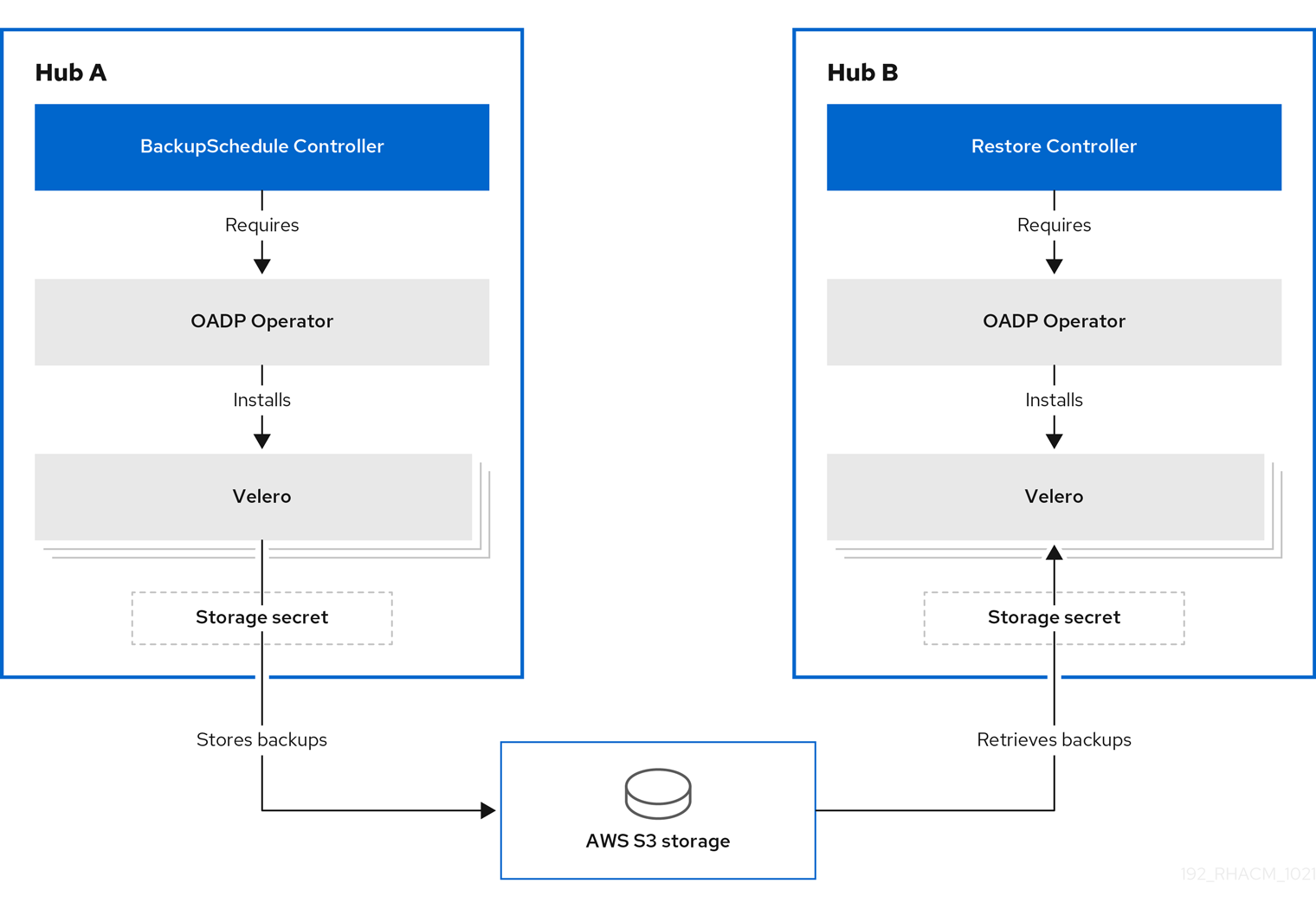

The Cluster Back up and Restore Operator provides disaster recovery solutions for the case when the Red Hat Advanced Cluster Management for Kubernetes hub goes down and needs to be recreated. Two scenarios are outside the scope of this component: disaster recovery for applications running on managed clusters, and scenarios where the managed clusters themselves go down.

The Cluster Back up and Restore Operator runs on the Red Hat Advanced Cluster Management for Kubernetes hub and depends on the OADP Operator to create a connection to a backup storage location on the hub, which is then used to back up and restore user-created hub resources.

The Cluster Back up and Restore Operator chart is installed automatically by the MultiClusterHub resource, when installing or upgrading to version 2.5 of the Red Hat Advanced Cluster Management operator. The OADP Operator will be installed automatically with the Cluster Back up and Restore Operator chart, as a chart hook.

Before you can use the Cluster Back up and Restore Operator, the OADP Operator must be configured with the connection to the storage location where backups will be saved. Follow the steps to create the secret for the cloud storage where the backups are going to be saved, then use that secret when creating the DataProtectionApplication resource to set up the connection to the storage location.
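A minimal sketch of such a DataProtectionApplication resource is shown below, assuming an S3 bucket on AWS. The resource name, namespace, bucket, prefix, region and secret name are all placeholders, and the field layout follows the OADP documentation as understood here, so verify it against the OADP version installed on your hub.

```yaml
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: dpa-hub                    # hypothetical name
  namespace: openshift-adp         # assumption: namespace where OADP is installed
spec:
  configuration:
    velero:
      defaultPlugins:
        - openshift
        - aws                      # assumption: AWS S3 storage
  backupLocations:
    - velero:
        provider: aws
        default: true
        objectStorage:
          bucket: my-hub-backups   # hypothetical bucket
          prefix: hub-backups      # hypothetical prefix inside the bucket
        config:
          region: us-east-1        # hypothetical region
        credential:
          name: cloud-credentials  # hypothetical: the secret created in the previous step
          key: cloud               # hypothetical: key inside that secret
```

Once the storage connection is valid, a BackupStorageLocation.velero.io resource should appear in the same namespace.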

Note: The Cluster Back up and Restore Operator chart installs the backup-restore-enabled Policy, used to inform on issues with the backup and restore component. The Policy templates check that the required pods are running, the storage location is available, backups are available at the defined location, and no error status is reported by the main resources. This Policy is intended to help notify the Hub admin of any backup issues as the hub is active and expected to produce backups.

The operator defines the BackupSchedule.cluster.open-cluster-management.io resource, used to set up Red Hat Advanced Cluster Management for Kubernetes backup schedules, and the Restore.cluster.open-cluster-management.io resource, used to process and restore these backups.

The operator sets the options needed to back up the identity of remote clusters and any other hub resources that need to be restored.

The Cluster Back up and Restore Operator solution provides backup and restore support for all Red Hat Advanced Cluster Management for Kubernetes hub resources, such as managed clusters, applications, policies, and bare metal assets. It also supports backing up any third-party resources that extend the basic hub installation. With this backup solution, you can define a cron-based backup schedule which runs at specified time intervals and continuously backs up the latest version of the hub content. When the hub needs to be replaced, or in a disaster scenario when the hub goes down, a new hub can be deployed and the backed up data moved to it, so that the new hub replaces the old one.

The steps below show how the Cluster Back up and Restore Operator finds the resources to be backed up. With this approach the backup includes all CRDs installed on the hub, including any extensions using third-party components.

- Exclude all resources in the MultiClusterHub namespace. This is to avoid backing up installation resources which are linked to the current Hub identity and should not be backed up.

- Backup all CRDs with an api version suffixed by `.open-cluster-management.io`. This will cover all Advanced Cluster Management resources.

- Additionally, backup all CRDs from these api groups: `argoproj.io`, `app.k8s.io`, `core.observatorium.io`, `hive.openshift.io`

- Exclude all CRDs from the following api groups: `admission.cluster.open-cluster-management.io`, `admission.work.open-cluster-management.io`, `internal.open-cluster-management.io`, `operator.open-cluster-management.io`, `work.open-cluster-management.io`, `search.open-cluster-management.io`, `admission.hive.openshift.io`, `velero.io`

- Exclude the following CRDs; they are part of the included api groups but are either not needed or they are being recreated by owner resources, which are also backed up: `clustermanagementaddon`, `observabilityaddon`, `applicationmanager`, `certpolicycontroller`, `iampolicycontroller`, `policycontroller`, `searchcollector`, `workmanager`, `backupschedule`, `restore`, `clusterclaim.cluster.open-cluster-management.io`

- Backup secrets and configmaps with one of the following label annotations: `cluster.open-cluster-management.io/type`, `hive.openshift.io/secret-type`, `cluster.open-cluster-management.io/backup`

- Use this label annotation for any other resources that should be backed up and are not included in the above criteria: `cluster.open-cluster-management.io/backup`

- Resources picked up by the above rules that should not be backed up can be explicitly excluded by setting this label annotation: `velero.io/exclude-from-backup=true`
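As an illustration of the last rule, the sketch below labels a Secret that would otherwise match the backup criteria so that it is left out of the backup. The Secret name and namespace are hypothetical; only the two label keys come from the rules above.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: scratch-credentials        # hypothetical name
  namespace: my-namespace          # hypothetical namespace
  labels:
    # Matches the secrets/configmaps rule, so this Secret would normally be backed up
    cluster.open-cluster-management.io/backup: ""
    # Explicitly exclude it from the backup
    velero.io/exclude-from-backup: "true"
type: Opaque
```

Label values are strings, which is why `true` is quoted.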

Third party components can choose to back up their resources with the ACM backup by adding the cluster.open-cluster-management.io/backup label to these resources. The value of the label can be any string, including an empty string. It is recommended, though, to set a value that can later be used to easily identify the component backing up this resource, for example cluster.open-cluster-management.io/backup: idp if the components are provided by an idp solution.

Note:

Use the cluster-activation value for the cluster.open-cluster-management.io/backup label if you want the resources to be restored when the managed clusters activation resources are restored. Restoring the managed clusters activation resources results in managed clusters being actively managed by the hub where the restore was executed.

As mentioned above, when you add the cluster.open-cluster-management.io/backup label to a resource, this resource is automatically backed up under the acm-resources-generic-schedule backup. If any of these resources must be restored only when the managed clusters are moved to the new hub, that is, when veleroManagedClustersBackupName: latest is set on the restore resource, set the label value to cluster-activation. This ensures the resource is not restored unless the managed cluster activation is called.

Example:

    apiVersion: my.group/v1alpha1
    kind: MyResource
    metadata:
      labels:
        cluster.open-cluster-management.io/backup: cluster-activation

A backup schedule is activated when creating the backupschedule.cluster.open-cluster-management.io resource, as shown here

After you create a backupschedule.cluster.open-cluster-management.io resource you should be able to run `oc get bsch -n <oadp-operator-ns>` and get the status of the scheduled cluster backups. The `<oadp-operator-ns>` is the namespace where BackupSchedule was created and it should be the same namespace where the OADP Operator was installed.

The backupschedule.cluster.open-cluster-management.io creates 6 schedule.velero.io resources, used to generate the backups.

Run `oc get schedules -A | grep acm` to view the list of scheduled backups.

Resources are backed up in 3 separate groups:

1. credentials backup (3 backup files, for hive, ACM and generic backups)

2. resources backup (2 backup files, one for the ACM resources and second for generic resources, labeled with `cluster.open-cluster-management.io/backup`)

3. managed clusters backup, schedule labeled with `cluster.open-cluster-management.io/backup-schedule-type: acm-managed-clusters` (one backup containing only resources which result in activating the managed cluster connection to the hub where the backup was restored on)

Note:

a. The backup file created in step 2 above contains managed cluster specific resources but does not contain the subset of resources that cause managed clusters to connect to this hub. These resources, also called activation resources, are contained in the managed clusters backup created in step 3. When you restore only the resources from steps 1 and 2 on a new hub, the new hub shows all managed clusters, but they are in a detached state. The managed clusters are still connected to the original hub that produced the backup files.

b. Only managed clusters created using the hive api will be automatically connected with the new hub when the acm-managed-clusters backup from step 3 is restored on another hub. All other managed clusters will show up as Pending Import and must be imported back on the new hub.

c. When restoring the acm-managed-clusters backup on a new hub by using the veleroManagedClustersBackupName: latest option on the restore resource, make sure the old hub where the backups were created is shut down. Otherwise, the old hub will try to reconnect with the managed clusters as soon as the managed cluster reconciliation addons find that the managed clusters are no longer available, and both hubs will try to manage the clusters at the same time.

As hubs change from passive to primary clusters and back, different clusters can back up data at the same storage location. This could result in backup collisions, which means the latest backups are generated by a hub that is no longer the designated primary hub. That hub produces backups because the BackupSchedule.cluster.open-cluster-management.io resource is still Enabled on this hub, but it should no longer write backup data since that hub is no longer a primary hub.

Situations when a backup collision could happen:

- Primary hub goes down unexpectedly:
  - 1.1) Primary hub, Hub1, goes down
  - 1.2) Hub1 backup data is restored on Hub2
  - 1.3) The admin creates the `BackupSchedule.cluster.open-cluster-management.io` resource on Hub2. Hub2 is now the primary hub and generates backup data to the common storage location.
  - 1.4) Hub1 comes back to life unexpectedly. Since the `BackupSchedule.cluster.open-cluster-management.io` resource is still enabled on Hub1, it will resume writing backups to the same storage location as Hub2. Both Hub1 and Hub2 are now writing backup data at the same storage location. Any cluster restoring the latest backups from this storage location could pick up Hub1 data instead of Hub2.
- The admin tests the disaster scenario by making Hub2 a primary hub:
  - 2.1) Hub1 is stopped
  - 2.2) Hub1 backup data is restored on Hub2
  - 2.3) The admin creates the `BackupSchedule.cluster.open-cluster-management.io` resource on Hub2. Hub2 is now the primary hub and generates backup data to the common storage location.
  - 2.4) After the disaster test is completed, the admin will revert to the previous state and make Hub1 the primary hub:
    - 2.4.1) Hub1 is started. Hub2 is still up though and the `BackupSchedule.cluster.open-cluster-management.io` resource is Enabled on Hub2. Until the Hub2 `BackupSchedule.cluster.open-cluster-management.io` resource is deleted or Hub2 is stopped, Hub2 could write backups at any time at the same storage location, corrupting the backup data. Any cluster restoring the latest backups from this location could pick up Hub2 data instead of Hub1. The right approach here would have been to first stop Hub2 or delete the `BackupSchedule.cluster.open-cluster-management.io` resource on Hub2, then start Hub1.

To avoid and report this type of backup collision, a BackupCollision state exists for a BackupSchedule.cluster.open-cluster-management.io resource. The controller checks regularly whether the latest backup in the storage location was generated by the current cluster. If not, another cluster has more recently written backup data to the storage location, so this hub is in collision with another hub.

In this case, the current hub BackupSchedule.cluster.open-cluster-management.io resource status is set to BackupCollision and the Schedule.velero.io resources created by this resource are deleted to avoid data corruption. The BackupCollision is reported by the backup Policy. The admin should verify which hub must be the one writing data to the storage location, then remove the BackupSchedule.cluster.open-cluster-management.io resource from the invalid hub and create a new BackupSchedule.cluster.open-cluster-management.io resource on the valid, primary hub, to resume the backup on this hub.

Example of a schedule in BackupCollision state:

    oc get backupschedule -A
    NAMESPACE       NAME             PHASE             MESSAGE
    openshift-adp   schedule-hub-1   BackupCollision   Backup acm-resources-schedule-20220301234625, from cluster with id [be97a9eb-60b8-4511-805c-298e7c0898b3] is using the same storage location. This is a backup collision with current cluster [1f30bfe5-0588-441c-889e-eaf0ae55f941] backup. Review and resolve the collision then create a new BackupSchedule resource to resume backups from this cluster.

In a usual restore scenario, the hub where the backups have been executed becomes unavailable and data backed up needs to be moved to a new hub. This is done by running the cluster restore operation on the hub where the backed up data needs to be moved to. In this case, the restore operation is executed on a different hub than the one where the backup was created.

There are also cases where you want to restore the data on the same hub where the backup was collected, in order to recover data from a previous snapshot. In this case both restore and backup operations are executed on the same hub.

A restore is executed when creating the restore.cluster.open-cluster-management.io resource on the hub. A few samples are available here:

- use the passive sample if you want to restore all resources on the new hub but you don't want the managed clusters to be managed by the new hub. You can use this restore configuration when the initial hub is still up and you want to prevent the managed clusters from changing ownership. You could use this restore option when you want to view the initial hub configuration on the new hub or to prepare the new hub to take over when needed; when the takeover is needed, restore only the managed clusters resources using the passive activation sample.

- use the passive activation sample when you want this hub to manage the clusters. In this case it is assumed that the other data has already been restored on this hub using the passive sample.

- use the restore sample if you want to restore all data at once and make this hub take over the managed clusters in one step.
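The two passive options can be sketched as the pair of Restore resources below. These are illustrations derived from the option descriptions above, not copies of the sample files; the resource names are hypothetical and the `cleanupBeforeRestore` values are assumptions.

```yaml
# Passive: restore credentials and hub resources, leave the managed clusters
# connected to the initial hub.
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: Restore
metadata:
  name: restore-acm-passive            # hypothetical name
spec:
  cleanupBeforeRestore: CleanupRestored # assumption
  veleroManagedClustersBackupName: skip
  veleroCredentialsBackupName: latest
  veleroResourcesBackupName: latest
---
# Passive activation: restore only the managed clusters activation data,
# assuming everything else was already restored with the passive option.
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: Restore
metadata:
  name: restore-acm-passive-activate   # hypothetical name
spec:
  cleanupBeforeRestore: None            # assumption
  veleroManagedClustersBackupName: latest
  veleroCredentialsBackupName: skip
  veleroResourcesBackupName: skip
```

Because a Restore resource is executed only once, each phase uses its own resource.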

After you create a restore.cluster.open-cluster-management.io resource on the hub, you should be able to run `oc get restore -n <oadp-operator-ns>` and get the status of the restore operation. You should also be able to verify on your hub that the backed up resources contained by the backup file have been created.

Note:

a. The restore.cluster.open-cluster-management.io resource is executed once. After the restore operation is completed, if you want to run another restore operation on the same hub, you have to create a new restore.cluster.open-cluster-management.io resource.

b. Although you can create multiple restore.cluster.open-cluster-management.io resources, only one is allowed to be executing at any moment in time.

c. The restore operation can restore all 3 backup types created by the backup operation, although you can choose to restore only a certain type (only managed clusters, only user credentials, or only hub resources).

The restore defines 3 required spec properties, defining the restore logic for the 3 types of backed up files.

- `veleroManagedClustersBackupName` is used to define the restore option for the managed clusters.
- `veleroCredentialsBackupName` is used to define the restore option for the user credentials.
- `veleroResourcesBackupName` is used to define the restore option for the hub resources (applications and policies).

The valid options for the above properties are:

- `latest` - restore the last available backup file for this type of backup
- `skip` - do not attempt to restore this type of backup with the current restore operation
- `<backup_name>` - restore the specified backup, pointing to it by name

Below you can see a sample available with the operator.

    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Restore
    metadata:
      name: restore-acm
    spec:
      cleanupBeforeRestore: CleanupRestored
      veleroManagedClustersBackupName: latest
      veleroCredentialsBackupName: latest
      veleroResourcesBackupName: latest

Velero currently skips backed up resources if they are already installed on the hub. This limits the scenarios that can be used when restoring hub data on a new hub. Unless the new hub is unused and the restore is applied only once, the hub cannot be reliably used as a passive configuration: the data on this hub does not reflect the data available with the restored resources.

Restore limitations examples:

- A Policy exists on the new hub, before the backup data is restored on this hub. After the restore of the backup resources, the new hub is not identical with the initial hub from where the data was restored. The Policy should not be running on the new hub since this is a Policy not available with the backup resources.

- A Policy exists on the new hub, before the backup data is restored on this hub. The backup data contains the same Policy but in an updated configuration. Since Velero skips existing resources, the Policy will stay unchanged on the new hub, so the Policy is not the same as the one backed up on the initial hub.

- A Policy is restored on the new hub. The primary hub keeps updating the content and the Policy content changes as well. The user reapplies the backup on the new hub, expecting to get the updated Policy. Since the Policy already exists on the hub - created by the previous restore - it will not be restored again. So the new hub has now a different configuration from the primary hub, even if the backup contains the expected updated content; that content is not updated by Velero on the new hub.

To address the above limitations, when a Restore.cluster.open-cluster-management.io resource is created, the Cluster Back up and Restore Operator runs a set of prepare-for-restore steps which clean up the hub before the Velero restore is called.

The cleanup uses the cleanupBeforeRestore property to identify the subset of objects to clean up. There are 3 options you can set for this clean up:

- `None`: no clean up necessary, just call Velero restore. This is to be used on a brand new hub.
- `CleanupRestored`: clean up all resources created by a previous acm restore. This should be the common usage for this property. It is less intrusive than `CleanupAll` and covers the scenario where you start with a clean hub and keep restoring resources on this hub (limitation sample 3 above).
- `CleanupAll`: clean up all resources on the hub which could be part of an acm backup, even if they were not created as a result of a restore operation. This is to be used when extra content has been created on this hub which requires clean up (limitation samples 1 and 2 above). Use this option with caution, as it will clean up resources on the hub created by the user rather than by a previous restore. It is strongly recommended to use the `CleanupRestored` option and to refrain from manually updating hub content when the hub is designated as a passive candidate for a disaster scenario. Avoid getting into a situation where you have to wipe the cluster using the `CleanupAll` option; it is provided as a last resort.
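As a sketch of the most intrusive option, the Restore below is the sample shown earlier with only the `cleanupBeforeRestore` value changed. The resource name is hypothetical, and this variant is meant for a hub that already holds content not created by a previous restore.

```yaml
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: Restore
metadata:
  name: restore-acm-cleanup-all   # hypothetical name
spec:
  # Removes every resource that could be part of an acm backup,
  # including resources created manually on this hub.
  cleanupBeforeRestore: CleanupAll
  veleroManagedClustersBackupName: latest
  veleroCredentialsBackupName: latest
  veleroResourcesBackupName: latest
```

Review what exists on the hub before applying a resource like this one, since user-created content matching the backup criteria is removed.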

The Cluster Back up and Restore Operator chart installs the backup-restore-enabled Policy, used to inform on issues with the backup and restore component.

The Policy has a set of templates which check for the following constraints and inform when any of them are violated.

- The following templates check the pod status for the backup component and dependencies:
  - `acm-backup-pod-running` template checks if the Backup and restore operator pod is running
  - `oadp-pod-running` template checks if the OADP operator pod is running
  - `velero-pod-running` template checks if the Velero pod is running
- `backup-storage-location-available` template checks if a `BackupStorageLocation.velero.io` resource was created and the status is `Available`. This implies that the connection to the backup storage is valid.
- `acm-backup-clusters-collision-report` template checks that if a `BackupSchedule.cluster.open-cluster-management.io` exists on the current hub, its state is not `BackupCollision`. This verifies that the current hub is not in collision with any other hub when writing backup data to the storage location. For a definition of the BackupCollision state read the Backup Collisions section.
- `acm-backup-phase-validation` template checks that if a `BackupSchedule.cluster.open-cluster-management.io` exists on the current cluster, the status is not in (Failed, or empty state). This ensures that if this cluster is the primary hub and is generating backups, the `BackupSchedule.cluster.open-cluster-management.io` status is healthy.
  - the same template checks that if a `Restore.cluster.open-cluster-management.io` exists on the current cluster, the status is not in (Failed, or empty state). This ensures that if this cluster is the secondary hub and is restoring backups, the `Restore.cluster.open-cluster-management.io` status is healthy.
- `acm-managed-clusters-schedule-backups-available` template checks if `Backup.velero.io` resources are available at the location specified by the `BackupStorageLocation.velero.io` and the backups were created by a `BackupSchedule.cluster.open-cluster-management.io` resource. This validates that the backups have been executed at least once, using the Backup and restore operator.
- `backup-schedule-cron-enabled` template checks that a `BackupSchedule.cluster.open-cluster-management.io` is actively running and saving new backups at the storage location. The template checks that there is a `Backup.velero.io` with a label `velero.io/schedule-name: acm-validation-policy-schedule` at the storage location. The `acm-validation-policy-schedule` backups are set to expire after the time set for the backups cron schedule. If no cron job is running anymore to create backups, the old `acm-validation-policy-schedule` backup is deleted because it expired and a new one is not created. So if no `acm-validation-policy-schedule` backups exist at any moment in time, it means that there is no active cron job generating acm backups.

This Policy is intended to help notify the Hub admin of any backup issues as the hub is active and expected to produce or restore backups.
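To give a sense of what one of these templates might look like, here is a hedged sketch of a standalone ConfigurationPolicy expressing the `backup-storage-location-available` check. It is not copied from the chart: the severity and namespace are assumptions, and in the chart such a template would be embedded in the backup-restore-enabled Policy rather than created on its own.

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: ConfigurationPolicy
metadata:
  name: backup-storage-location-available
spec:
  remediationAction: inform      # report violations only, never modify the hub
  severity: high                 # assumption
  namespaceSelector:
    include:
      - openshift-adp            # assumption: namespace where OADP is installed
  object-templates:
    # Compliant only if a BackupStorageLocation exists and reports Available
    - complianceType: musthave
      objectDefinition:
        apiVersion: velero.io/v1
        kind: BackupStorageLocation
        status:
          phase: Available
```

With `remediationAction: inform`, a missing or unavailable storage location is reported as a violation and nothing is changed on the hub.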

- Operator SDK

To install the Cluster Back up and Restore Operator, you can either run it outside the cluster, for faster iteration during development, or inside the cluster.

First we require installing the Operator CRD:

    make build
    make install

Then proceed to the installation method you prefer below.

If you would like to run the Cluster Back up and Restore Operator outside the cluster, execute:

    make run

If you would like to run the Operator inside the cluster, you'll need to build a container image. You can use a local private registry, or host it on a public registry service like quay.io.

- Build your image:

      make docker-build IMG=<registry>/<imagename>:<tag>

- Push the image:

      make docker-push IMG=<registry>/<imagename>:<tag>

- Deploy the Operator:

      make deploy IMG=<registry>/<imagename>:<tag>

Here you can find an example of a backupschedule.cluster.open-cluster-management.io resource definition:

    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: BackupSchedule
    metadata:
      name: schedule-acm
    spec:
      veleroSchedule: 0 */6 * * * # Create a backup every 6 hours
      veleroTtl: 72h # deletes scheduled backups after 72h; optional, if not specified, the maximum default value set by velero is used - 720h

- `veleroSchedule` is a required property and defines a cron job for scheduling the backups.

- `veleroTtl` is an optional property and defines the expiration time for a scheduled backup resource. If not specified, the maximum default value set by velero is used, which is 720h.

This is an example of a restore.cluster.open-cluster-management.io resource definition:

    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Restore
    metadata:
      name: restore-acm
    spec:
      cleanupBeforeRestore: CleanupRestored
      veleroManagedClustersBackupName: latest
      veleroCredentialsBackupName: latest
      veleroResourcesBackupName: latest

In order to create an instance of backupschedule.cluster.open-cluster-management.io or restore.cluster.open-cluster-management.io you can start from one of the sample configurations.

Replace the `<oadp-operator-ns>` with the namespace name used to install the OADP Operator.

    kubectl create -n <oadp-operator-ns> -f config/samples/cluster_v1beta1_backupschedule.yaml
    kubectl create -n <oadp-operator-ns> -f config/samples/cluster_v1beta1_restore.yaml

After you create a backupschedule.cluster.open-cluster-management.io resource you should be able to run `oc get bsch -n <oadp-operator-ns>` and get the status of the scheduled cluster backups.

In the example below, you have created a backupschedule.cluster.open-cluster-management.io resource named schedule-acm.

The resource status shows the definitions of the schedule.velero.io resources created by this cluster backup scheduler.

    $ oc get bsch -n <oadp-operator-ns>
    NAME           PHASE
    schedule-acm

After you create a restore.cluster.open-cluster-management.io resource on the new hub, you should be able to run `oc get restore -n <oadp-operator-ns>` and get the status of the restore operation. You should also be able to verify on the new hub that the backed up resources contained by the backup file have been created.

The restore defines 3 required spec properties, defining the restore logic for the 3 types of backed up files.

- `veleroManagedClustersBackupName` is used to define the restore option for the managed clusters.
- `veleroCredentialsBackupName` is used to define the restore option for the user credentials.
- `veleroResourcesBackupName` is used to define the restore option for the hub resources (applications and policies).

The valid options for the above properties are:

- `latest` - restore the last available backup file for this type of backup
- `skip` - do not attempt to restore this type of backup with the current restore operation
- `<backup_name>` - restore the specified backup, pointing to it by name

The cleanupBeforeRestore property is used to clean up resources before the restore is executed. More details about these options here.

Note: The restore.cluster.open-cluster-management.io resource is executed once. After the restore operation is completed, if you want to run another restore operation on the same hub, you have to create a new restore.cluster.open-cluster-management.io resource.

Below is an example of a restore.cluster.open-cluster-management.io resource, restoring all 3 types of backed up files, using the latest available backups:

    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Restore
    metadata:
      name: restore-acm
    spec:
      cleanupBeforeRestore: CleanupRestored
      veleroManagedClustersBackupName: latest
      veleroCredentialsBackupName: latest
      veleroResourcesBackupName: latest

You can define a restore operation where you only restore the managed clusters:

    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Restore
    metadata:
      name: restore-acm
    spec:
      cleanupBeforeRestore: None
      veleroManagedClustersBackupName: latest
      veleroCredentialsBackupName: skip
      veleroResourcesBackupName: skip

The sample below restores the managed clusters from backup acm-managed-clusters-schedule-20210902205438:

    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Restore
    metadata:
      name: restore-acm
    spec:
      cleanupBeforeRestore: None
      veleroManagedClustersBackupName: acm-managed-clusters-schedule-20210902205438
      veleroCredentialsBackupName: skip
      veleroResourcesBackupName: skip