This repo demonstrates an implementation of streaming Retrieval Augmented Generation (RAG) using Amazon Bedrock, AWS Lambda function URLs, and a LanceDB embedding store backed by Amazon S3.

Important: this application uses various AWS services and there are costs associated with these services after the Free Tier usage - please see the AWS Pricing page for details. You are responsible for any AWS costs incurred. No warranty is implied in this example.

- Create an AWS account if you do not already have one and log in. The IAM user that you use must have sufficient permissions to make necessary AWS service calls and manage AWS resources.

- AWS CLI installed and configured

- Git installed

- AWS Serverless Application Model (AWS SAM) installed

- Docker installed and running

Create a new directory, navigate to that directory in a terminal and clone the GitHub repository:

`git clone https://github.com/shafkevi/lambda-bedrock-s3-streaming-rag`

Change directory to the pattern directory:

`cd lambda-bedrock-s3-streaming-rag`

From the command line, use AWS SAM to deploy the AWS resources for the pattern as specified in the `template.yaml` file:

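`sam build -u`

`sam deploy --guided`

The `-u` flag (short for `--use-container`) builds the function inside a Docker container, which is why Docker must be installed and running.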

During the prompts:

- Enter a stack name

- Enter the desired AWS Region

- Allow SAM CLI to create IAM roles with the required permissions.

Once you have run `sam deploy --guided` and saved the arguments to a configuration file (`samconfig.toml`), you can run `sam deploy` on its own in future to reuse these defaults.

Note your stack name and outputs from the SAM deployment process. These contain the resource names and/or ARNs which are used for testing.

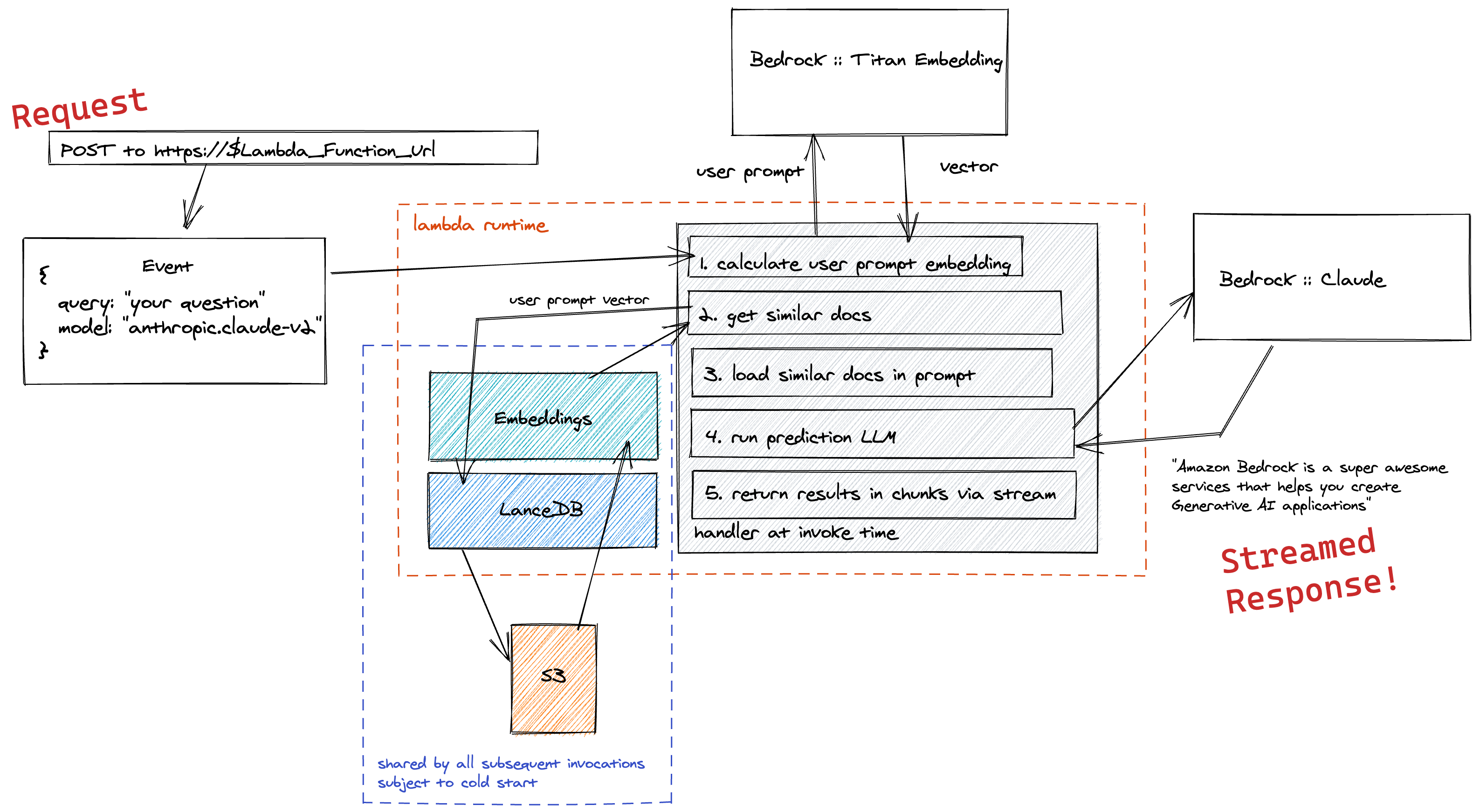

This pattern shows how to implement a streaming, serverless Retrieval Augmented Generation (RAG) architecture.

Customers asked for a way to quickly test RAG capabilities on a small number of documents without managing infrastructure for contextual knowledge and non-parametric memory.

In this pattern, we run a RAG workflow in a single Lambda function, so that customers only pay for the infrastructure they use, when they use it.

We use LanceDB with Amazon S3 as the storage backend for the embeddings.
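To make the retrieval step of the RAG workflow concrete, the sketch below ranks a few stored text chunks by cosine similarity to a query embedding and splices the best matches into a prompt. It is a minimal, stdlib-only illustration under stated assumptions: the three-dimensional vectors stand in for real Amazon Titan embeddings, and `top_k` and `build_prompt` are hypothetical helpers, not LanceDB's API (LanceDB performs this nearest-neighbour search against the table stored in S3).

```python
from __future__ import annotations

import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[str]:
    """Hypothetical helper: return the k stored chunks most similar to the query."""
    ranked = sorted(store, key=lambda text: cosine_similarity(query, store[text]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Hypothetical helper: splice retrieved chunks into the prompt for the chat model."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Toy embeddings standing in for vectors produced by Amazon Titan.
store = {
    "Lambda bills per millisecond of execution.": [0.9, 0.1, 0.0],
    "S3 stores objects in buckets.": [0.1, 0.9, 0.1],
    "LanceDB can read tables directly from S3.": [0.2, 0.8, 0.3],
}
query_embedding = [0.15, 0.85, 0.2]  # pretend embedding of the question below
context = top_k(query_embedding, store, k=2)
print(build_prompt("Where are the embeddings stored?", context))
```

Only the retrieved chunks, not the whole corpus, reach the LLM, which is what lets a single Lambda invocation answer questions over your documents.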

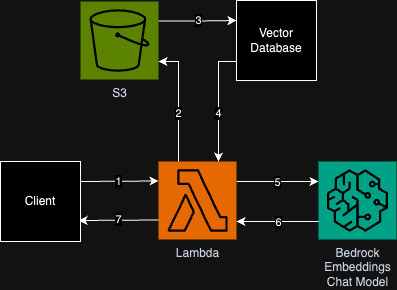

This pattern deploys one Lambda function and an S3 bucket in which to store your embeddings.

This pattern uses Amazon Bedrock both to calculate embeddings with Amazon Titan Embeddings and to generate answers with any Amazon Bedrock chat model as the prediction LLM.
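Computing an embedding with Amazon Titan through Bedrock comes down to one `invoke_model` call on the `bedrock-runtime` client: the request body carries an `inputText` field and the response body carries an `embedding` array. The Python sketch below assumes the `amazon.titan-embed-text-v1` model ID and takes the client as a parameter so it can run offline against a stub; in a deployment you would pass `boto3.client("bedrock-runtime")` instead. It illustrates the request shape and is not the code this repository ships.

```python
import io
import json


def embed_text(client, text: str, model_id: str = "amazon.titan-embed-text-v1") -> list:
    """Return the embedding vector Amazon Titan computes for `text`.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    """
    response = client.invoke_model(
        modelId=model_id,
        contentType="application/json",
        accept="application/json",
        body=json.dumps({"inputText": text}),
    )
    # The response body is a stream of JSON bytes; Titan returns the vector under "embedding".
    payload = json.loads(response["body"].read())
    return payload["embedding"]


class FakeBedrockRuntime:
    """Offline stand-in for the bedrock-runtime client, used only for this example."""

    def invoke_model(self, modelId, contentType, accept, body):
        request = json.loads(body)
        fake_vector = [float(len(request["inputText"])), 0.0, 1.0]
        return {"body": io.BytesIO(json.dumps({"embedding": fake_vector}).encode())}


print(embed_text(FakeBedrockRuntime(), "hello"))  # [5.0, 0.0, 1.0]
```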

Responses are streamed using Lambda function URL response streaming, which gives a faster time to first byte and a better user experience.
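On the model side, streaming usually starts with Bedrock's `invoke_model_with_response_stream`, whose response body is an event stream of `chunk` events carrying JSON bytes. The sketch below assumes an Anthropic Claude model called with the Messages API, where generated text arrives in `content_block_delta` events, and turns those events into text pieces that a handler could write to the function URL's response stream as soon as each one arrives. The event list is fabricated for illustration, error events are not handled, and how the deployed function forwards the pieces to the client is not shown.

```python
import json
from typing import Iterable, Iterator


def iter_text_deltas(events: Iterable[dict]) -> Iterator[str]:
    """Yield text fragments from a Bedrock response stream for a Claude Messages API call."""
    for event in events:
        chunk = event.get("chunk")
        if chunk is None:
            continue  # this sketch ignores non-chunk (e.g. error) events
        message = json.loads(chunk["bytes"])
        if message.get("type") == "content_block_delta":
            delta = message.get("delta", {})
            if delta.get("type") == "text_delta":
                yield delta["text"]


def fake_chunk(message: dict) -> dict:
    """Wrap a message the way a stream event wraps it: {"chunk": {"bytes": ...}}."""
    return {"chunk": {"bytes": json.dumps(message).encode()}}


# Fabricated events shaped like response["body"] from invoke_model_with_response_stream.
sample_events = [
    fake_chunk({"type": "message_start"}),
    fake_chunk({"type": "content_block_delta", "index": 0,
                "delta": {"type": "text_delta", "text": "Lance"}}),
    fake_chunk({"type": "content_block_delta", "index": 0,
                "delta": {"type": "text_delta", "text": "DB"}}),
    fake_chunk({"type": "message_stop"}),
]

for piece in iter_text_deltas(sample_events):
    print(piece, end="", flush=True)  # each piece can be sent to the caller immediately
print()
```

Because the generator yields each fragment as soon as it is parsed, the caller sees the first words of the answer before the model has finished generating the rest.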

We also provide a local pipeline to ingest your PDFs and upload them to S3.
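Before PDF text can be embedded, it is usually split into chunks small enough for the embedding model. The sketch below shows one common approach, fixed-size character windows with overlap so that sentences cut at a boundary still appear whole in one chunk. The `chunk_size` and `overlap` defaults are illustrative assumptions, and the pipeline in `./data-pipeline` may split text differently.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list:
    """Split text into windows of `chunk_size` characters that share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```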

The permissions defined in `template.yaml` restrict model access to the Amazon Titan, Anthropic Claude, and Mistral models, but you can update them to allow other models as you wish.
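Restricting model access in IAM is typically done by listing foundation-model ARNs, which take the form `arn:aws:bedrock:<region>::foundation-model/<model-id>` and accept wildcards. The Python sketch below builds such a policy document; the region and the prefixes (`amazon.titan-*`, `anthropic.claude-*`, `mistral.*`) are assumptions for illustration, so compare them with the actual statement in `template.yaml` before reusing anything.

```python
import json


def bedrock_invoke_policy(region: str, model_prefixes: list) -> dict:
    """Build an IAM policy that allows invoking (and streaming from) only the given models."""
    resources = [
        f"arn:aws:bedrock:{region}::foundation-model/{prefix}" for prefix in model_prefixes
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                "Resource": resources,
            }
        ],
    }


# Hypothetical region and model-family prefixes.
policy = bedrock_invoke_policy("us-east-1", ["amazon.titan-*", "anthropic.claude-*", "mistral.*"])
print(json.dumps(policy, indent=2))
```

Allowing another model family then amounts to adding its prefix to the list.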

Once your stack has been deployed, you can ingest PDF documents by following the instructions in `./data-pipeline/README.md`.

Once your stack has been deployed and you have loaded your documents, follow the testing instructions in `./testing/README.md`.

- Delete the stack:

`sam delete`

Copyright 2023 Amazon.com, Inc. or its affiliates. All Rights Reserved.

SPDX-License-Identifier: MIT-0