This was a research project for the Computer Vision and Machine Learning course I took during my B.Tech. The project investigated the Cocktail Party Problem and how computer vision can be used to solve it.

The cocktail party effect is the brain's ability to focus auditory attention on a particular stimulus while filtering out a range of other stimuli, as when a partygoer follows a single conversation in a noisy room. In this audio-visual setting, the cocktail party problem is the task of separating each speaker's speech signal from a mixture by using video of the speakers' faces while they talk.

The following was done as part of the project:

- Literature survey of the techniques used to solve this problem (Literature Survey).

- Implementing two state-of-the-art models, running them, and evaluating the results (Implementation_Details).

- Proposing potential improvements to the models (Proposed_Improvements).

The underlying repository contains one of the models we implemented, and the following potential improvements were tried on this model:

Documentation of the underlying repository:

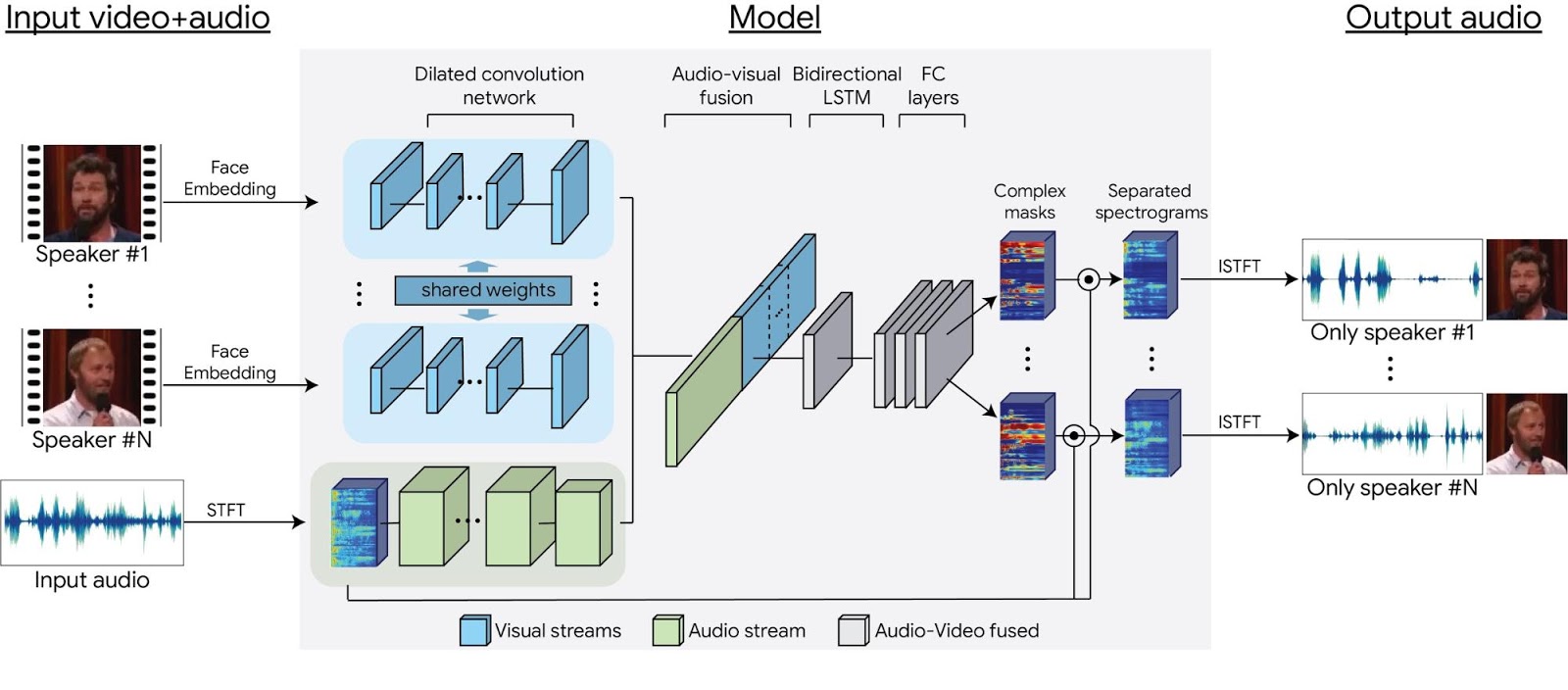

This project aims to improve speech separation. Audio-only and audio-visual deep learning separation models are modified based on the paper Looking to Listen at the Cocktail Party [1].

AVSpeech dataset: contains 4,700 hours of video segments from a total of 290k YouTube videos.

Customized video and audio downloaders (based on youtube-dl, sox, and ffmpeg) are provided in the audio and video folders.
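A minimal sketch of what such a downloader has to do for one AVSpeech segment is shown below. It only builds the command lines (it does not run them), so the youtube-dl, ffmpeg, and sox invocations can be inspected before use. The function names, file names, the `-f best` format choice, and the 16 kHz mono output are illustrative assumptions, not the repository's actual scripts or settings.

```python
import shlex
from typing import List

def download_cmd(video_id: str, out_path: str) -> List[str]:
    """youtube-dl command that downloads one source video (hypothetical helper)."""
    url = f"https://www.youtube.com/watch?v={video_id}"
    # -f best: best single-file format; -o: output filename template
    return ["youtube-dl", "-f", "best", "-o", out_path, url]

def cut_audio_cmd(video_path: str, wav_path: str, start: float, end: float,
                  sample_rate: int = 16000) -> List[str]:
    """ffmpeg command that extracts [start, end) seconds as a mono WAV file."""
    return ["ffmpeg", "-y",
            "-ss", f"{start:.3f}",          # seek to the segment start
            "-i", video_path,
            "-t", f"{end - start:.3f}",     # keep only the segment duration
            "-vn",                          # drop the video stream
            "-ac", "1",                     # mono
            "-ar", str(sample_rate),        # resample (16 kHz assumed)
            wav_path]

def mix_cmd(wav_a: str, wav_b: str, out_wav: str) -> List[str]:
    """sox command that mixes two clean clips into one mixture (-m = mix)."""
    return ["sox", "-m", wav_a, wav_b, out_wav]

if __name__ == "__main__":
    for cmd in (download_cmd("VIDEO_ID", "VIDEO_ID.mp4"),
                cut_audio_cmd("VIDEO_ID.mp4", "clip.wav", 90.0, 93.0),
                mix_cmd("clip_a.wav", "clip_b.wav", "mix.wav")):
        print(shlex.join(cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` once the paths are real. Note that `sox -m` scales the inputs when mixing, which keeps the mixture from clipping.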

Instructions for generating the data are under Data.

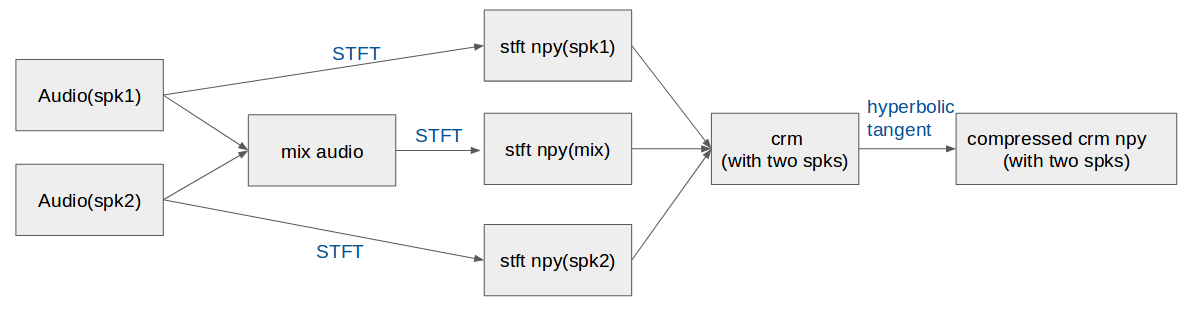

The utils module provides several preprocessing functions, including STFT, iSTFT, power-law compression, the complex mask, and a modified hyperbolic tangent [5]. Below is the preprocessing for audio data:

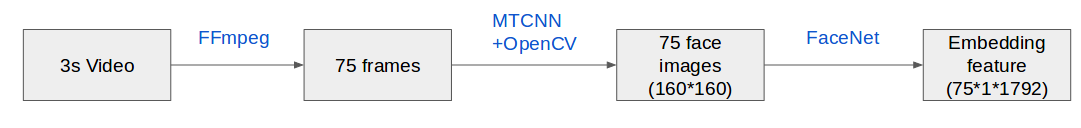
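The STFT and power-law compression steps can be sketched in plain NumPy as follows. This assumes the settings we understand [1] to use (16 kHz audio, 25 ms Hann window, 10 ms hop, 512-point FFT) and a compression exponent of 0.3 applied separately, with sign preserved, to the real and imaginary parts. The names `stft`, `power_law_compress`, and `power_law_decompress` are hypothetical, not the functions in utils.

```python
import numpy as np

def stft(signal, n_fft=512, win_length=400, hop=160):
    """Simple STFT: 400-sample (25 ms) Hann window, 160-sample (10 ms) hop at 16 kHz."""
    window = np.hanning(win_length)
    n_frames = 1 + (len(signal) - win_length) // hop
    frames = np.stack([signal[i * hop: i * hop + win_length] * window
                       for i in range(n_frames)])
    # rfft zero-pads each frame to n_fft samples -> n_fft // 2 + 1 = 257 bins
    return np.fft.rfft(frames, n=n_fft, axis=1)

def power_law_compress(spec, p=0.3):
    """Sign-preserving |x|**p on real and imaginary parts, stacked as channels."""
    parts = np.stack([spec.real, spec.imag], axis=-1)   # (frames, bins, 2)
    return np.sign(parts) * np.abs(parts) ** p

def power_law_decompress(parts, p=0.3):
    """Inverse of power_law_compress, returning a complex spectrogram."""
    parts = np.sign(parts) * np.abs(parts) ** (1.0 / p)
    return parts[..., 0] + 1j * parts[..., 1]
```

With these settings, a 3-second clip (48,000 samples) gives 298 frames of 257 frequency bins, so the compressed network input has shape (298, 257, 2). Compressing the real and imaginary parts separately keeps the phase information that the complex mask later relies on.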

For the visual part, MTCNN is applied to detect faces, and each detection is corrected by checking it against the face center provided with the dataset [2]. The visual frames are then converted into 1792-dimensional face embedding features, taken from the lowest layer of a pre-trained FaceNet model that is not spatially varying [3]. Below is the preprocessing for visual data:
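The face-correction step can be sketched as follows, as one plausible reading of "checking the provided face center". It assumes the dataset's face center is given as normalized (x, y) coordinates in [0, 1] and that the detector returns pixel boxes as (x1, y1, x2, y2). The rule (keep a box that contains the center, prefer the closest one, reject the frame otherwise) and the name `select_face` are hypothetical, not the repository's code.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, as from a face detector

def select_face(boxes: Sequence[Box], center: Tuple[float, float],
                frame_w: int, frame_h: int) -> Optional[Box]:
    """Return the detected box that contains the provided face center; if
    several do, the one whose own center is closest. Returns None when no
    box contains it, so the caller can reject the frame."""
    cx, cy = center[0] * frame_w, center[1] * frame_h   # normalized -> pixels
    containing = [b for b in boxes
                  if b[0] <= cx <= b[2] and b[1] <= cy <= b[3]]
    if not containing:
        return None

    def dist2(b: Box) -> float:
        # squared distance between the box center and the provided face center
        bx, by = (b[0] + b[2]) / 2, (b[1] + b[3]) / 2
        return (bx - cx) ** 2 + (by - cy) ** 2

    return min(containing, key=dist2)
```

In a full pipeline, `boxes` would come from an MTCNN implementation run on each frame, and the crop inside the selected box would be passed to FaceNet to produce the embedding for that frame.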

The audio-only model is provided in model_v1 and the audio-visual model in model_v2.

Following is the brief structure of the audio-visual model; some layers are revised to match our customized compression and dataset [1].
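One detail of the audio-visual architecture is that the two streams run at different frame rates: an assumed 3-second clip has 75 video frames (25 fps) but 298 STFT frames. The sketch below shows one way to fuse them, assuming, as we understand [1] to do, that the visual features are upsampled in time to the audio frame count and then concatenated with the audio features. Nearest-neighbour upsampling, the function names, and the feature sizes are illustrative assumptions; the real model passes each stream through its own convolutional layers before fusion, which this sketch omits.

```python
import numpy as np

def upsample_time(features: np.ndarray, target_len: int) -> np.ndarray:
    """Nearest-neighbour upsampling along axis 0 (time)."""
    src_len = features.shape[0]
    idx = (np.arange(target_len) * src_len) // target_len   # integer source frame index
    return features[idx]

def fuse(audio_feat: np.ndarray, visual_feats: list) -> np.ndarray:
    """Concatenate per-frame audio features with every speaker's visual
    features after bringing the visual stream to the audio frame count."""
    n_frames = audio_feat.shape[0]
    upsampled = [upsample_time(v, n_frames) for v in visual_feats]
    return np.concatenate([audio_feat] + upsampled, axis=-1)

audio = np.zeros((298, 514))                      # 298 STFT frames (illustrative width)
faces = [np.ones((75, 1792)) for _ in range(2)]   # 2 speakers, 75 video frames each
print(fuse(audio, faces).shape)                   # (298, 4098)
```

Concatenating one visual stream per speaker is what lets the network output one mask per speaker from a single mixture.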

Loss function: a modified discriminative loss function inspired by [4].
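A discriminative loss of this kind can be sketched as below, assuming it follows the common discriminative-training form: each speaker's estimate is pulled toward its own target (squared error) and pushed away from the other speakers' targets, with the penalty weighted by a factor gamma. The value gamma = 0.1, the mean-squared-error distance, and the function name are assumptions for illustration, not the exact loss used in model_v2.

```python
import numpy as np

def discriminative_loss(true: np.ndarray, pred: np.ndarray, gamma: float = 0.1) -> float:
    """true, pred: arrays of shape (num_speakers, ...) with each speaker's
    target and estimate (e.g. compressed spectrograms or masks)."""
    n = true.shape[0]
    loss = 0.0
    for i in range(n):
        # reward matching speaker i's own target
        loss += np.mean((true[i] - pred[i]) ** 2)
        for j in range(n):
            if j != i:
                # penalize similarity between target i and the estimate for speaker j
                loss -= gamma * np.mean((true[i] - pred[j]) ** 2)
    return float(loss)
```

A perfect separation gets a negative loss (the cross terms are subtracted), while swapping the two speakers' outputs is penalized heavily, which discourages the network from producing the same output for both speakers.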

Optimizer: Adam

Applying the complex ratio mask (cRM) to the STFT of the mixed speech produces the STFT of a single speaker's speech.
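The cRM relations can be sketched as follows. The ideal mask M satisfies M · Y = S element-wise, where Y is the mixture STFT and S the target speaker's STFT, so M = S / Y (computed here as S · conj(Y) / |Y|² with a small epsilon). Because ideal masks are unbounded, the sketch also shows a bounded compression K(1 - e^{-Cx}) / (1 + e^{-Cx}) on the real and imaginary parts, which we take to be the modified hyperbolic tangent of [5]; K = 10 and C = 0.1 are assumed values, and all function names are hypothetical.

```python
import numpy as np

K, C = 10.0, 0.1   # assumed compression constants

def ideal_crm(clean: np.ndarray, mixture: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Complex ratio mask M with M * Y ~= S, i.e. S / Y computed stably."""
    return clean * np.conj(mixture) / (np.abs(mixture) ** 2 + eps)

def compress_mask(mask: np.ndarray) -> np.ndarray:
    """K * tanh(C x / 2), identical to K * (1 - e^{-Cx}) / (1 + e^{-Cx}),
    applied to real and imaginary parts; output lies in [-K, K]."""
    f = lambda x: K * np.tanh(C * x / 2)
    return f(mask.real) + 1j * f(mask.imag)

def decompress_mask(cmask: np.ndarray) -> np.ndarray:
    """Inverse: x = -(1/C) ln((K - y) / (K + y)) = (2/C) artanh(y / K)."""
    g = lambda y: (2.0 / C) * np.arctanh(np.clip(y / K, -1 + 1e-7, 1 - 1e-7))
    return g(cmask.real) + 1j * g(cmask.imag)

def apply_mask(mask: np.ndarray, mixture: np.ndarray) -> np.ndarray:
    """Element-wise complex multiplication gives one speaker's estimated STFT."""
    return mask * mixture
```

At prediction time, the network output would be decompressed with `decompress_mask`, applied to the mixture STFT with `apply_mask`, and converted back to a waveform with an inverse STFT.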

Example code for running prediction is provided in the eval file in model_v1 and model_v2.