Imagine AI that is not just smart, but personal. Selfie turns your data into APIs for text generation and natural language search that can power chatbots, storytelling experiences, games, and more.

Selfie is a local-first, open-source project that runs on your device.

Selfie offers a more personalized interaction between you and the digital world via:

- Text Completions: Mix your data into text completions using any OpenAI-compatible tool (like OpenAI SDKs and SillyTavern) or the API.

- Simple Data Import: Quickly import any text file, with enhanced support for messaging platform exports.

- Use Any LLM: Use local (default) or hosted LLMs from OpenAI, Replicate, etc.

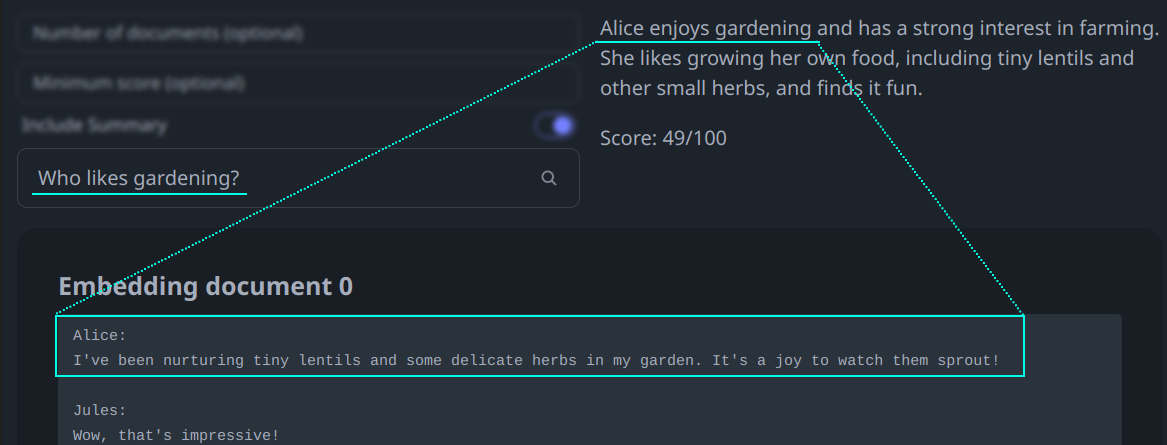

- Direct Queries: Search your data with natural language.

Selfie comes with a local UI for importing and interacting with your data.

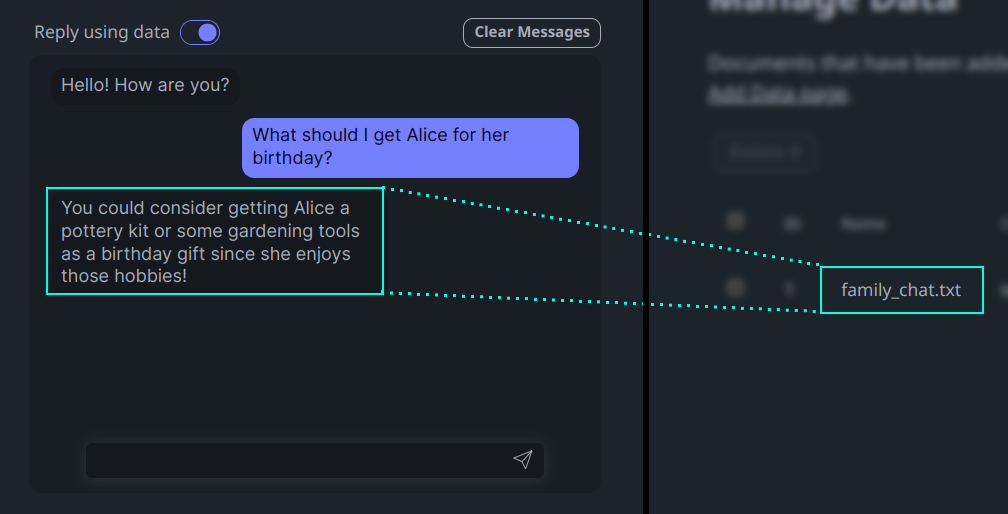

Personalized Chat

Semantic Search

Selfie provides a full API for OpenAI-style text completions and search.

    curl -X POST 'http://localhost:8181/v1/chat/completions' \
      -H 'Content-Type: application/json' \
      -d '{
        "messages": [{"role": "user", "content": "As Alice, what is your proudest garden achievement?"}]
      }' | jq '.choices[0].message.content'

    # "I grew a 10-pound tomato!"

Choose an installation method that best suits your operating system.

Important: The first time you run Selfie, it will download ~4GB of model weights and may appear to be unresponsive.

- Choose and download the latest executable for your MacOS based on the processor type:

- Unzip the downloaded file and run the executable to start Selfie.

- Right-click on the system tray icon and click Launch UI to open the Selfie UI in your default web browser.

To run Selfie using Python, follow these steps:

- Ensure Python (version 3.9 or later), Poetry, and Node.js are installed on your system.

- Clone the Selfie repository or download the source code for the latest release.

- Execute `start.sh` to run the application.

- Right-click on the system tray icon and click Launch UI to open the Selfie UI in your default web browser.

Tip: On MacOS, you can quickly install Poetry and Node.js using Homebrew with the following command: `brew install poetry nodejs`. Visit Homebrew's website for installation instructions.

If you encounter any issues, refer to the detailed manual installation instructions in the Installation section.

Windows users should refer to the detailed installation instructions in the Installation section.

Selfie's core feature is personalized text generation. You can think of it as middleware that intelligently mixes your data into a request.

A typical request:

Application --prompt--> LLM

A request through Selfie:

Application --prompt--> Selfie --prompt+data--> LLM

On the application side, Selfie exposes text generation APIs, including OpenAI-compatible endpoints.

On the LLM side, Selfie uses tools like LiteLLM and txtai to support forwarding data-augmented requests to your LLM of choice.
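To make the middleware idea concrete, here is a minimal, purely illustrative sketch of the "prompt + data" step. It is not Selfie's actual implementation: the `retrieve` and `augment_prompt` helpers and the keyword-overlap scoring are assumptions standing in for semantic search, and the step that forwards the result to an LLM is omitted.

```python
from typing import List


def retrieve(query: str, documents: List[str], limit: int = 2) -> List[str]:
    """Rank documents by shared words with the query (toy stand-in for semantic search)."""
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(doc.lower().split())), doc)
        for doc in documents
    ]
    # Keep only documents with at least one shared word, best matches first.
    relevant = sorted((s for s in scored if s[0] > 0), key=lambda s: -s[0])
    return [doc for _, doc in relevant[:limit]]


def augment_prompt(prompt: str, documents: List[str]) -> str:
    """Prepend retrieved context to the prompt before it would be sent to an LLM."""
    context = retrieve(prompt, documents)
    if not context:
        return prompt  # nothing relevant found: pass the prompt through unchanged
    context_block = "\n".join(f"- {doc}" for doc in context)
    return f"Context from your data:\n{context_block}\n\nPrompt: {prompt}"


# Hypothetical personal data, for illustration only.
my_data = [
    "I grew a 10-pound tomato in my garden last summer.",
    "Favorite snack: banh mi and Vietnamese coffee.",
]
augmented = augment_prompt("What is your proudest garden achievement?", my_data)
print(augmented)
```

Only the gardening note shares a word with the question, so it alone is mixed into the prompt; a prompt with no matching data passes through unchanged.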

For most users, the easiest way to install Selfie is to follow the Quick Start instructions. If that doesn't work, or if you just want to install Selfie manually, follow the detailed instructions below.

Tip: Python 3.11 is recommended.
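If you are unsure which interpreter you are running, a quick check like the following may help before installing. The `check_python` helper is hypothetical (it is not part of Selfie) and only encodes the version guidance stated in this README: 3.9 or later is required, and 3.11 is recommended.

```python
import sys
from typing import Tuple


def check_python(version: Tuple[int, int]) -> str:
    """Classify a (major, minor) Python version against this README's guidance."""
    if version < (3, 9):
        return "unsupported"   # the README asks for Python 3.9 or later
    if version == (3, 11):
        return "recommended"   # the README recommends Python 3.11
    return "supported"


current = (sys.version_info.major, sys.version_info.minor)
print(f"Python {current[0]}.{current[1]}: {check_python(current)}")
```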

Manual Installation

- Ensure that python 3.9+, poetry, and Node.js are installed.

- Clone or download the repository.

- In a terminal, navigate to the project directory.

- Run `./scripts/build-ui.sh` to build the UI and copy it to the server.

- Run `poetry install` to install required Python dependencies.

- Optional: Run `./scripts/llama-cpp-python-cublas.sh` to enable hardware acceleration (for details, see Scripts).

- Run `poetry run python -m selfie`. The first time you run this, it will download ~4GB of model weights.

  - On macOS, you may need to run `OMP_NUM_THREADS=1 KMP_DUPLICATE_LIB_OK=TRUE poetry run python -m selfie` to avoid OpenMP errors. Read more about OMP_NUM_THREADS here.

If you encounter any issues, you can try running in headless mode: poetry run python -m selfie --headless and opening http://localhost:8181 in your browser.
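Because the first run downloads model weights, it can be hard to tell when the server is actually ready. The sketch below polls an address until something answers over HTTP. The `is_server_up` and `wait_for_server` helpers, their timeout values, and the choice to treat any HTTP response (even an error status) as "up" are assumptions for illustration, not part of Selfie.

```python
import time
import urllib.error
import urllib.request


def is_server_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if anything answers HTTP at `url`, False if the connection fails."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True   # the server answered, even if with an error status
    except OSError:
        return False  # connection refused, timed out, DNS failure, ...


def wait_for_server(url: str, attempts: int = 30, delay: float = 2.0) -> bool:
    """Poll `url` up to `attempts` times, sleeping `delay` seconds after each failure."""
    for _ in range(attempts):
        if is_server_up(url):
            return True
        time.sleep(delay)
    return False
```

For example, `wait_for_server("http://localhost:8181")` returns `True` as soon as the server responds, or `False` if it stays unreachable for all attempts.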

Note: You can host Selfie at a publicly accessible URL with ngrok. Add your ngrok token (and optionally, ngrok domain) in `selfie/.env` and run `poetry run python -m selfie --share`.

You can also run Selfie using Docker. To do so, follow these steps:

- Ensure that Docker is installed.

- Clone or download the Selfie repository.

- In a terminal, navigate to the project directory.

- Run the following commands for the image you want to use (CPU, GPU, or ARM64).

This will start the server and the UI in your browser at http://localhost:8181.

Your data will be stored in the data directory.

This mounts your Hugging Face cache into the container, so you don't have to download the models again if you already have them.

CPU image:

    ./docker/build.sh cpu
    docker run -p 8181:8181 \
      --name selfie-cpu \
      -v $(pwd)/data:/selfie/data \
      -v $HOME/.cache/huggingface:/root/.cache/huggingface \
      selfie:cpu

GPU image:

    ./docker/build.sh gpu
    docker run -p 8181:8181 \
      --name selfie-gpu \
      -v $(pwd)/data:/selfie/data \
      -v $HOME/.cache/huggingface:/root/.cache/huggingface \
      --gpus all \
      --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 \
      selfie:gpu

Disclaimer:

This Docker container is designed to run on a wide range of architectures, including Apple's M1 and M2 chips. However, due to current limitations with Docker on macOS, direct access to Metal APIs is not available for containers. As a result, applications requiring intensive graphics processing may experience reduced performance compared to running natively on macOS. This setup is recommended for development and testing purposes only. Running it on a native machine is recommended for better performance.

We're continuously exploring ways to improve performance and compatibility.

ARM64 image:

    ./docker/build.sh arm64
    docker run -p 8181:8181 \
      --name selfie-arm64 \
      -v $(pwd)/data:/selfie/data \
      -v $HOME/.cache/huggingface:/root/.cache/huggingface \
      selfie:arm64

Selfie comes with a web-based UI that you can use to import your data and interact with it.

Selfie supports importing text data, with special processing for certain data formats, like chat logs from WhatsApp and ChatGPT.

Note: You can try the example files in the `example-chats` directory if you want to try a specific data format that you don't have ready for import.

To import data into Selfie:

- Open the Add Data Page: Access the UI and locate the Add Data section.

- Select Data Source: Choose the type of data you are uploading (e.g., WhatsApp, Text Files). Choose the type that most closely matches your data format.

- Configure and Submit: Complete the required fields and submit the form.

Support for new types of data can be added by creating new data connectors in selfie/connectors/ (instructions here, please contribute!).

Note: Ensure you obtain consent from participants in the chats you wish to export.

Now you are ready to interact with your data!

The Playground page includes a chat interface and a search feature. Write an LLM persona by entering a name and bio, and try interacting with your data through conversation. You can also search your data for specific topics under Search.

Now you are ready to use the Selfie API!

To quickly see your API in action, try viewing this link in your web browser:

http://localhost:8181/v1/index_documents/summary?topic=travel.
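The same request can be made from a script. This sketch builds the summary URL shown above with the standard library and, optionally, fetches it. The `build_summary_url` and `fetch_summary` names are illustrative, and because the shape of the response is not described here, the body is returned as raw text rather than parsed.

```python
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8181"  # default address used throughout this README


def build_summary_url(topic: str, base_url: str = BASE_URL) -> str:
    """Build the index_documents summary URL, URL-encoding the topic."""
    query = urllib.parse.urlencode({"topic": topic})
    return f"{base_url}/v1/index_documents/summary?{query}"


def fetch_summary(topic: str) -> str:
    """Fetch the summary for a topic as text; requires a running Selfie server."""
    with urllib.request.urlopen(build_summary_url(topic)) as response:
        return response.read().decode("utf-8")


print(build_summary_url("travel"))
# http://localhost:8181/v1/index_documents/summary?topic=travel
```

Encoding the topic with `urlencode` keeps multi-word topics valid in the query string.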

Detailed API documentation is available here.

By default, Selfie augments text completions with local models using llama.cpp and a local txtai embeddings database.

OpenAI-supported parameters like messages, temperature, max_tokens, etc., should all work out of the box, with some special considerations:

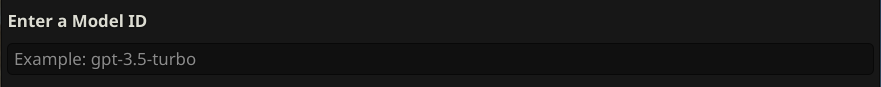

- `model` should be a local path, HuggingFace model name, or LiteLLM model name, depending on the method you choose.

You can also include special parameters to direct Selfie in how your request should be handled:

- `method`: The method to use for text generation. Options are `llama.cpp` for running local model files directly (e.g., `.gguf` files), and `litellm` for everything else.

- `api_base`: The base URL of an OpenAI-compatible API to use for text generation, e.g. if you want to host a local model with another tool.

- `api_key`: The API key required by your API at `api_base`.

- `disable_augmentation`: Set to `true` to generate text without data augmentation.

Examples and more details are provided in the next sections.
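As a rough illustration of how the standard and special parameters combine, the sketch below assembles a chat completion request against the endpoint used in the curl example earlier in this README, using only the standard library. The `build_chat_request` helper is hypothetical, and the request is constructed but not sent, so it can be inspected without a running server.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional

SELFIE_URL = "http://localhost:8181/v1/chat/completions"


def build_chat_request(
    messages: List[Dict[str, str]],
    method: Optional[str] = None,
    model: Optional[str] = None,
    api_base: Optional[str] = None,
    api_key: Optional[str] = None,
    disable_augmentation: bool = False,
    **openai_params: Any,
) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying Selfie's special parameters."""
    if method not in (None, "llama.cpp", "litellm"):
        raise ValueError(f"unknown method: {method!r}")
    body: Dict[str, Any] = {"messages": messages, **openai_params}
    # Only include special parameters that were explicitly provided.
    optional = {"method": method, "model": model, "api_base": api_base, "api_key": api_key}
    body.update({key: value for key, value in optional.items() if value is not None})
    if disable_augmentation:
        body["disable_augmentation"] = True
    return urllib.request.Request(
        SELFIE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request(
    [{"role": "user", "content": "As Alice, what is your proudest garden achievement?"}],
    temperature=0.7,
    max_tokens=100,
    disable_augmentation=True,
)
print(json.loads(request.data))
# To send it: urllib.request.urlopen(request)  (requires a running Selfie server)
```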

Selfie uses txtai to download local models and run them with llama.cpp. In completion requests, specify the llama.cpp method, or leave it off as the default, and ensure that your model is defined correctly, as a local path or HuggingFace model, according to txtai's documentation.

    {
      "prompt": "What is the meaning of life?",
      "method": "llama.cpp",
      "model": "~/models/dolphin-2.6-mistral-7b-dpo.Q5_K_M.gguf"
    }

or

    {
      "prompt": "What is the meaning of life?",
      "method": "llama.cpp",
      "model": "TheBloke/dolphin-2.6-mistral-7B-dpo-GGUF/dolphin-2.6-mistral-7b-dpo.Q5_K_M.gguf"
    }

You can even use a local OpenAI-compatible API (LiteLLM OpenAI-Compatible Endpoints docs).

    {
      "method": "litellm",
      "api_base": "http://localhost:5000/v1",
      "api_key": "none"
    }

Method is optional and defaults to litellm when api_base is specified.
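The defaulting rule can be summarized in a few lines. This is a sketch of the behavior as this README describes it (llama.cpp by default, litellm when `api_base` is given), not Selfie's actual code; `resolve_method` is a hypothetical helper.

```python
from typing import Any, Dict


def resolve_method(body: Dict[str, Any]) -> str:
    """Return the generation method a request body would use, per this README's rules."""
    if body.get("method"):
        return body["method"]  # an explicit method always wins
    if body.get("api_base"):
        return "litellm"       # api_base implies an OpenAI-compatible API via LiteLLM
    return "llama.cpp"         # default: run a local model directly


print(resolve_method({"api_base": "http://localhost:5000/v1", "api_key": "none"}))  # litellm
print(resolve_method({"prompt": "What is the meaning of life?"}))                   # llama.cpp
```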

Selfie can use hosted model providers through litellm. In completion requests, specify the litellm method (optional) and ensure that your model is prefixed correctly according to litellm's documentation for your provider.

    {
      "method": "litellm",
      "model": "replicate/llama-2-70b-chat:2796ee9483c3fd7aa2e171d38f4ca12251a30609463dcfd4cd76703f22e96cdf"
    }

In general, you need an API key for your provider loaded into your environment. A quick way to do that is to specify it when you start the server:

    REPLICATE_API_KEY=replicatekey python -m selfie

Add disable_augmentation: true to your request body to generate text without data augmentation.

    {
      "prompt": "What is the meaning of life?",
      "method": "llama.cpp",
      "model": "~/models/dolphin-2.6-mistral-7b-dpo.Q5_K_M.gguf",
      "disable_augmentation": true
    }

Selfie can be used to augment text generation in a variety of applications. Here are some examples.

The OpenAI SDK is a popular way to access OpenAI's text generation models. You can use Selfie to augment the text completions that the SDK generates simply by setting the baseURL and apiKey parameters.

import OpenAI from 'openai';

const openai = new OpenAI({

baseURL: 'http://localhost:8181/v1',

apiKey: ''

});

const chatCompletion = await openai.chat.completions.create({

messages: [

{ role: 'system', content: `Write Alice's next reply.` },

{ role: 'user', content: 'What are your favorite snacks?' },

]

});

console.log(chatCompletion.choices[0].message.content);

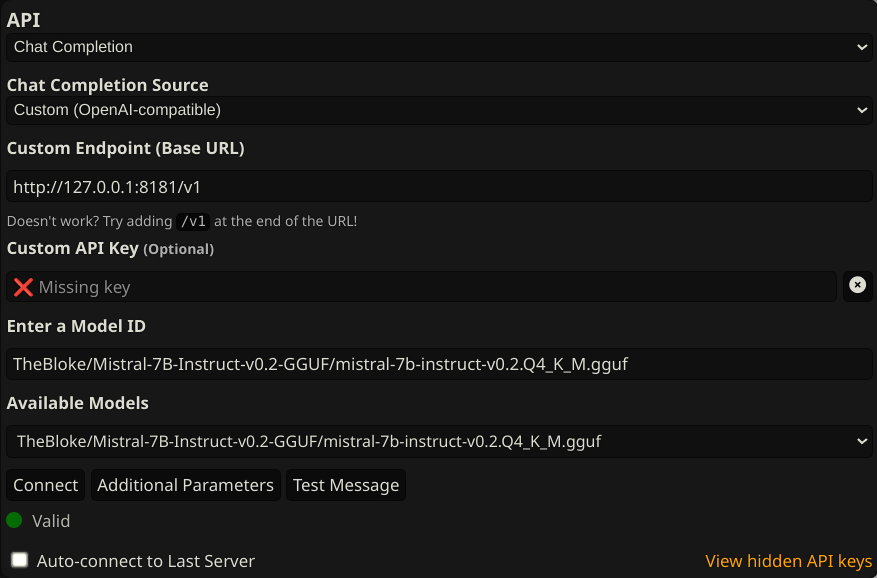

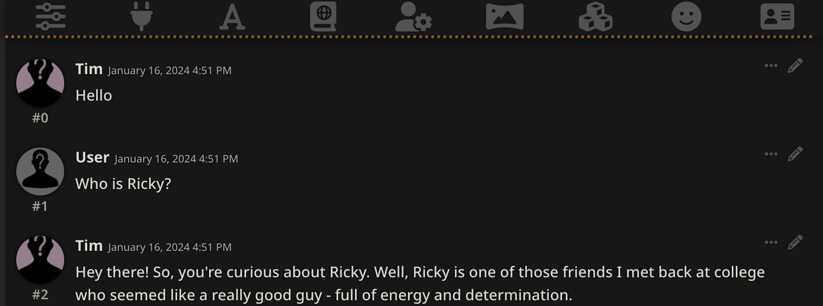

// "I enjoy Bahn Mi and Vietnamese coffee."SillyTavern is a self-hosted application that allows you to chat/roleplay with characters that you create. You can use Selfie to give SillyTavern characters some awareness of your data.

- Install and run SillyTavern.

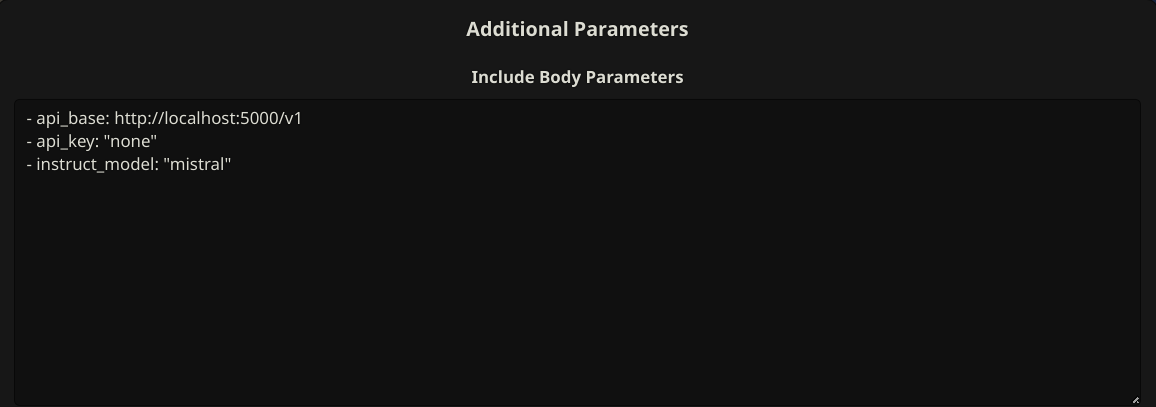

- Configure a custom chat completion source as `http://localhost:8181`. You can customize the model by setting API parameters for `method` and `model` in Additional Parameters.

- Create a character, customize the text generation settings, etc.

- Chat with your character to see that it is aware of your data:

You can even tell Selfie to use an OpenAI-compatible API for the LLM that it augments:

Note that model is empty:

We pass an extra parameter, instruct_mode, for text-generation-webui.

Note: some OpenAI-compatible APIs may not properly handle SillyTavern's use of multiple system messages and non-alternating user/assistant messages (like text-generation-webui). A text-generation-webui workaround is described here.
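One generic way to make a message list more palatable to stricter APIs is to fold all system messages into one and merge consecutive messages from the same role. The sketch below shows that idea only. It is not the text-generation-webui workaround referenced above, and `normalize_messages` is a hypothetical helper that is not part of Selfie or SillyTavern.

```python
from typing import Dict, List

Message = Dict[str, str]


def normalize_messages(messages: List[Message]) -> List[Message]:
    """Merge system messages into one and collapse consecutive same-role messages."""
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    normalized: List[Message] = []
    if system_parts:
        normalized.append({"role": "system", "content": "\n".join(system_parts)})
    for message in messages:
        if message["role"] == "system":
            continue  # already folded into the single leading system message
        if normalized and normalized[-1]["role"] == message["role"]:
            # Same speaker twice in a row: append to the previous message.
            normalized[-1]["content"] += "\n" + message["content"]
        else:
            normalized.append(dict(message))  # copy so the input is not mutated
    return normalized


chat = [
    {"role": "system", "content": "Write Alice's next reply."},
    {"role": "user", "content": "Hi"},
    {"role": "system", "content": "Stay in character."},
    {"role": "user", "content": "What are your favorite snacks?"},
]
for message in normalize_messages(chat):
    print(message)
```

Here the two system messages become one, and the two user turns (now adjacent) are merged, leaving a single system message followed by a single user message. The helper does not guarantee that a conversation starts with a user turn, so stricter back ends may need further adjustments.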

The scripts directory contains a variety of scripts for setting up Selfie. Here's a brief overview of each script:

To build the UI, run ./scripts/build-ui.sh.

To install llama.cpp with hardware acceleration for better performance, run ./scripts/llama-cpp-python-cublas.sh.

Alternatively, you can build llama-cpp-python manually with the flags of your choice by following the instructions.

Selfie is a work in progress. Here are some features that are not yet fully supported.

To build an executable for your platform:

- Run `poetry run pip install pyinstaller`. (pyinstaller is not compatible with Python >3.12 so it is not included by default)

- Run `poetry run pyinstaller selfie.spec --noconfirm`.

- Start the built service with `./dist/selfie/selfie`.

Selfie is a community project. We welcome contributions of all kinds, including bug reports, feature requests, and pull requests. Please see the contributing guide for more information.

Join the Vana Discord server to chat with the community and get help with Selfie.