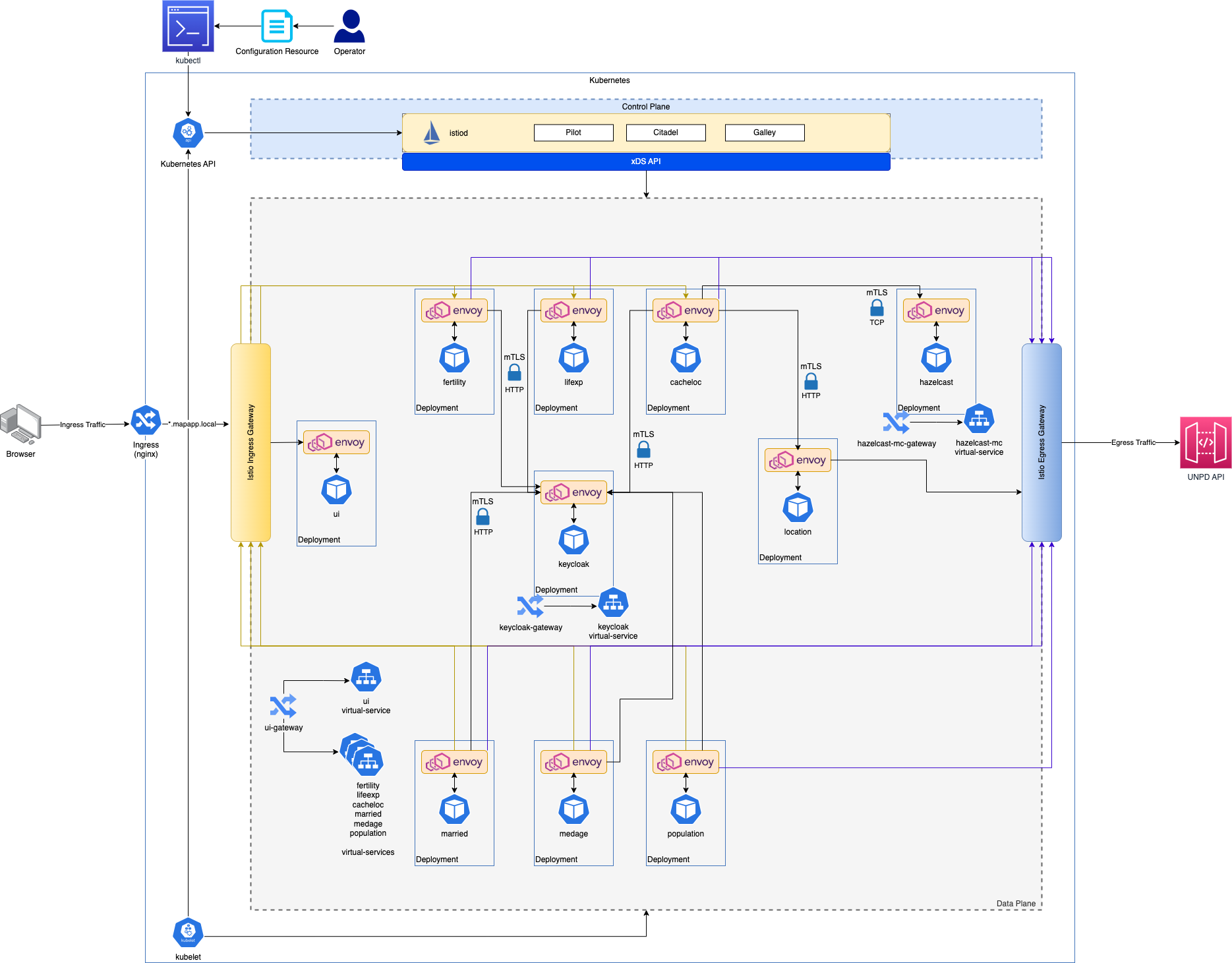

A project that displays population statistics on a map, based on UN Population Division data. The stack is deployable on an Istio service mesh and leverages Leaflet, Next.js, Quarkus and Hazelcast.

This documentation provides guidelines on installation, configuration and architectural details.

This section covers the installation of the underlying technologies required by the project. Development is done on a MacBook, so the guidelines are based on macOS instructions. For other platforms, please follow the installation guidelines of the respective technology vendors.

Add the following entries to the hosts file (e.g. `/etc/hosts` on macOS):

    127.0.0.1 ui.mapapp.local
    127.0.0.1 hazelcast.mapapp.local
    127.0.0.1 keycloak.mapapp.local
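The hosts entries above can also be added by script. The following is a minimal sketch, not part of the project: the helper name `add_host_entry` is hypothetical, and it assumes the target file already exists and is writable by the current user.

```shell
# add_host_entry FILE IP HOSTNAME (hypothetical helper, not part of the project)
# Appends "IP HOSTNAME" to FILE unless a non-comment line already lists HOSTNAME.
add_host_entry() {
  file=$1 ip=$2 host=$3
  # Look for HOSTNAME as a whole whitespace-delimited field after the address column.
  if awk -v h="$host" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }' "$file"; then
    echo "already present: $host"
  else
    printf '%s %s\n' "$ip" "$host" >> "$file"
    echo "added: $host"
  fi
}
```

Since `/etc/hosts` is owned by root, one way to use it is from a root shell, e.g. `add_host_entry /etc/hosts 127.0.0.1 ui.mapapp.local`, repeated for the other two host names. Running it twice leaves a single entry per host name.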

This project requires Java SE 21 to be installed and registered for Maven. Install Maven and set the `JAVA_HOME` environment variable to the path of the Java 21 installation.
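To confirm that the Java installation is the expected one, the major version can be extracted from the output of `java -version`. This is a small sketch under assumptions: the helper name `java_major_version` is hypothetical, and it relies on the usual `version "X.Y.Z"` format printed by current JDKs.

```shell
# java_major_version (hypothetical helper): reads `java -version` style text
# on stdin and prints the leading number of the first quoted version string.
java_major_version() {
  sed -n 's/.*version "\([0-9][0-9]*\).*/\1/p' | head -n 1
}
```

Because `java -version` writes to stderr, a typical invocation is `"$JAVA_HOME/bin/java" -version 2>&1 | java_major_version`, which should print `21` for this project.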

podman is used as the containerization engine:

- Install podman, podman-desktop and podman-compose:

      brew install podman
      brew install --cask podman-desktop
      brew install podman-compose

- Configure the podman VM as follows so that it can sustain the Istio service mesh:

      podman machine init --cpus 4 --memory 8192 --disk-size 100
      podman machine set --rootful
      podman machine start
      podman system connection default podman-machine-default-root

- Validate that the podman VM is up and running:

      podman machine list

  Output should resemble:

      NAME                    VM TYPE  CREATED        LAST UP            CPUS  MEMORY   DISK SIZE
      podman-machine-default  qemu     3 minutes ago  Currently running  4     5.369GB  64.42GB

kind is used as a convenient way to run a Kubernetes (k8s) cluster for local development.

- Install kind using brew:

      brew install kind

- Install `kubectl` using brew:

      brew install kubectl

- Add the following entry to `~/.zshrc` to enable kubectl completion in your terminal:

      source <(kubectl completion zsh)

- Add the following entry to `~/.zshrc` to enable the podman provider for kind:

      export KIND_EXPERIMENTAL_PROVIDER=podman

- Open a new terminal window and create a new k8s cluster on kind using the custom configuration cluster-config.yml, which sets up one control-plane node and two worker nodes. It also maps ports from the host to an ingress controller running on a node:

      kind create cluster --config=./k8s/kind/cluster-config.yml

- Check whether the cluster is available:

      kind get clusters

  Output:

      enabling experimental podman provider
      kind

      kubectl cluster-info --context kind-kind

  Output:

      Kubernetes control plane is running at https://127.0.0.1:51040
      CoreDNS is running at https://127.0.0.1:51040/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

      To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
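For orientation, a kind configuration with one control-plane node, two worker nodes and host port mappings for an ingress controller could look like the sketch below. It is an assumption about the general shape of such a file, not a copy of the project's cluster-config.yml: the port numbers and the `ingress-ready=true` node label are illustrative, and the script writes to a scratch file so that the real configuration is left untouched.

```shell
# Write a hypothetical kind configuration to a scratch file
# (illustrative only; the project's real file is ./k8s/kind/cluster-config.yml).
config_file="$(mktemp)"
cat > "$config_file" <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  # Control-plane node, labelled so an ingress controller can be pinned to it.
  - role: control-plane
    kubeadmConfigPatches:
      - |
        kind: InitConfiguration
        nodeRegistration:
          kubeletExtraArgs:
            node-labels: "ingress-ready=true"
    # Forward HTTP and HTTPS from the host to this node (assumed ports).
    extraPortMappings:
      - containerPort: 80
        hostPort: 80
        protocol: TCP
      - containerPort: 443
        hostPort: 443
        protocol: TCP
  # Two worker nodes.
  - role: worker
  - role: worker
EOF
echo "wrote $config_file"
```

Such a file would be passed to `kind create cluster --config "$config_file"`. The node label matters only if the ingress controller is later scheduled with a matching node selector.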

After a restart or reboot of your machine, the containers of your kind setup must be started manually, because kind does not configure them to restart automatically (kind, short for "k8s in Docker", runs each k8s node as a container):

    podman start kind-control-plane kind-worker

helm is used as the package manager for k8s:

    brew install helm

Istio is used as the service mesh:

- Install the binaries of the latest version with the following commands:

      cd /usr/local/opt/
      curl -L https://istio.io/downloadIstio | sh -
      mkdir -p ~/completions && istioctl collateral --zsh -o ~/completions
      ISTIO_VERSION="$(curl -sL https://github.com/istio/istio/releases | \
        grep -o 'releases/[0-9]*.[0-9]*.[0-9]*/' | sort -V | \
        tail -1 | awk -F'/' '{ print $2}')"
      echo -n "\nISTIO_HOME=/usr/local/opt/istio-${ISTIO_VERSION}" >> ~/.zshrc

- Add the following entries to `~/.zshrc`:

      export PATH="$PATH:${ISTIO_HOME}/bin"
      source ~/completions/_istioctl

- In a new terminal session, validate the Istio installation:

      istioctl x precheck

  Output should be (for v1.21.1):

      ✔ No issues found when checking the cluster. Istio is safe to install or upgrade!
        To get started, check out https://istio.io/latest/docs/setup/getting-started/

- Install the Istio components with the demo profile (see available profiles for details) into the k8s cluster:

      istioctl install --set profile=demo -y

  Output should be (for v1.21.1):

      ✔ Istio core installed
      ✔ Istiod installed
      ✔ Egress gateways installed
      ✔ Ingress gateways installed
      ✔ Installation complete

- Add a new namespace for the project:

      kubectl create namespace mapapp

  Output:

      namespace/mapapp created

- To enable automatic sidecar proxy injection by Istio, add the following label to the namespace used for the project:

      kubectl label namespace mapapp istio-injection=enabled

  Output:

      namespace/mapapp labeled

- Verify the Istio installation:

      istioctl verify-install

  The end of the output should be:

      ✔ Istio is installed and verified successfully
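The version lookup in the installation step relies on `sort -V`, which orders version strings numerically per component rather than character by character. The sketch below isolates that pipeline so it can be tried offline. The function name `latest_release_version` is hypothetical, the sample input is made up rather than real page content, and the pattern escapes the dots, which is slightly stricter than the one used in the installation step.

```shell
# latest_release_version (hypothetical helper): reads text on stdin, extracts
# every "releases/X.Y.Z/" fragment and prints the highest X.Y.Z.
latest_release_version() {
  grep -oE 'releases/[0-9]+\.[0-9]+\.[0-9]+/' \
    | sort -V \
    | tail -n 1 \
    | awk -F'/' '{ print $2 }'
}

# Made-up sample input, only to show the ordering.
printf '%s\n' \
  'href="/releases/1.9.0/"' \
  'href="/releases/1.21.1/"' \
  'href="/releases/1.10.2/"' \
  | latest_release_version
# prints 1.21.1
```

A plain lexical sort would rank 1.9.0 above 1.21.1 because "9" sorts after "2", which is why the version-aware sort is needed here.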

To launch deployments on Istio, an ingress controller needs to be deployed on the cluster. This setup leverages the Nginx Ingress Controller:

- Apply the Nginx ingress controller objects via the official Helm chart:

      kubectl create ns ingress-nginx
      kubectl taint node kind-control-plane node-role.kubernetes.io/control-plane:NoSchedule-
      helm install --namespace ingress-nginx nginx-ingress ingress-nginx \
        --set controller.hostPort.enabled=true \
        --set controller.service.type=NodePort \
        --set-string controller.nodeSelector.ingress-ready=true \
        --repo https://kubernetes.github.io/ingress-nginx

- Validate that the ingress controller is ready to accept requests:

      kubectl wait --namespace ingress-nginx \
        --for=condition=ready pod \
        --selector=app.kubernetes.io/component=controller \
        --timeout=90s

  Output should resemble:

      pod/ingress-nginx-controller-5bb6b499dc-mclk6 condition met

- Apply the ingress object to route requests from the host machine to Istio's `ingress-controller` service:

      kubectl apply -f ./k8s/kind/ingress.yml

To test this ingress setup:

- Add a new namespace and enable automatic Istio sidecar proxy injection for it:

      kubectl create namespace testingress
      kubectl label namespace testingress istio-injection=enabled

- Apply the sample deployment that ships with the Istio installation (2 different versions of the helloworld endpoint):

      kubectl config set-context --current --namespace=testingress
      kubectl apply -f $ISTIO_HOME/samples/helloworld/helloworld-gateway.yaml
      kubectl apply -f $ISTIO_HOME/samples/helloworld/helloworld.yaml

- Validate that the pods are up and running:

      kubectl get pods

  Output should resemble:

      NAME                             READY   STATUS    RESTARTS   AGE
      helloworld-v1-b6c45f55-7c9xh     1/1     Running   0          55s
      helloworld-v2-79d5467d55-khmfg   1/1     Running   0          55s

- Check that requests are routed to the different versions of the helloworld service in a round-robin fashion:

  HTTP:

      for i in {0..4}
      do
        curl -XGET http://ui.mapapp.local/hello
      done

  Output should resemble:

      Hello version: v2, instance: helloworld-v2-79d5467d55-khmfg
      Hello version: v1, instance: helloworld-v1-b6c45f55-7c9xh
      Hello version: v1, instance: helloworld-v1-b6c45f55-7c9xh
      Hello version: v2, instance: helloworld-v2-79d5467d55-khmfg
      Hello version: v2, instance: helloworld-v2-79d5467d55-khmfg

  HTTPS:

      for i in {0..4}
      do
        curl --insecure -XGET https://ui.mapapp.local/hello
      done

  Output should resemble:

      Hello version: v2, instance: helloworld-v2-79d5467d55-khmfg
      Hello version: v1, instance: helloworld-v1-b6c45f55-7c9xh
      Hello version: v2, instance: helloworld-v2-79d5467d55-khmfg
      Hello version: v1, instance: helloworld-v1-b6c45f55-7c9xh
      Hello version: v2, instance: helloworld-v2-79d5467d55-khmfg

- It is also possible to verify that traffic passes through the Envoy sidecar proxy:

      curl --head -XGET http://ui.mapapp.local/hello

  The output should contain the `x-envoy-upstream-service-time` header, which gives the time in milliseconds spent by the upstream host processing the request plus the network latency between Envoy and the upstream host:

      HTTP/1.1 200 OK
      Date: Sun, 11 Jun 2023 13:28:07 GMT
      Content-Type: text/html; charset=utf-8
      Content-Length: 58
      Connection: keep-alive
      x-envoy-upstream-service-time: 192

- To clean up resources:

      kubectl -n testingress delete all --all
      kubectl delete ns testingress
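The two checks in the ingress test above (distribution across helloworld versions and presence of the Envoy header) can be evaluated by script instead of by eye. The helpers below are a sketch; the names `count_versions` and `envoy_upstream_time` are hypothetical, and they only parse text in the formats shown in the sample outputs.

```shell
# count_versions (hypothetical helper): reads "Hello version: vN, instance: ..."
# lines on stdin and prints "<version> <count>" per version, sorted by version.
count_versions() {
  awk '/^Hello version:/ { sub(/,$/, "", $3); n[$3]++ }
       END { for (v in n) print v, n[v] }' | sort
}

# envoy_upstream_time (hypothetical helper): reads HTTP response headers on
# stdin and prints the value of x-envoy-upstream-service-time, if present.
# Header names are compared case-insensitively; carriage returns are stripped.
envoy_upstream_time() {
  tr -d '\r' | awk -F': *' 'tolower($1) == "x-envoy-upstream-service-time" { print $2 }'
}
```

For example, `for i in 0 1 2 3 4; do curl -s http://ui.mapapp.local/hello; done | count_versions` summarises the distribution, and `curl -s --head http://ui.mapapp.local/hello | envoy_upstream_time` prints the reported time. An empty result from the second command suggests the response did not pass through the sidecar proxy.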

The project consists of several backend microservices, a UI and a Hazelcast data grid. This section provides details on how to deploy and activate them.

Invoke the custom script build_and_load.sh to build the custom images and load them into the kind k8s cluster:

    zsh ./k8s/deploy/build_and_load.sh

Output should resemble:

    Loading image to kind: localhost/mapapp/population:1.0
    using podman due to KIND_EXPERIMENTAL_PROVIDER
    enabling experimental podman provider
    ...
    Loading image to kind: localhost/mapapp/ui:latest
    using podman due to KIND_EXPERIMENTAL_PROVIDER
    enabling experimental podman provider
    ...
    > Loaded images into kind:
    localhost/mapapp/cacheloc 1.0 5e1000b564af4 194MB
    ...
    localhost/mapapp/ui latest 3ed85b82e22b2 58.6MB

Invoke the following script to deploy the project to the service mesh on the kind k8s cluster:

    zsh ./k8s/deploy/apply.sh

Invoke the following script to remove the deployment:

    zsh ./k8s/deploy/clean.sh

The project uses Keycloak as its identity management (IdM) solution with OAuth2 and OIDC support. The file keycloak.env contains the password of the admin user for the administration portal at keycloak.mapapp.local. The setup automatically deploys a designated realm.json, exported from the server (see Importing and Exporting Realms), with the following users for testing purposes:

| username | password | realm | role |
|---|---|---|---|
| jsmith | Mnbuyt765 | mapapp | user |
| awhite | Abc123def | mapapp | admin |