| page_type | languages | products | name | description |
|---|---|---|---|---|
| sample | | | Azure OpenAI Service Load Balancing with Azure API Management | This sample demonstrates how to load balance requests between multiple Azure OpenAI Services using Azure API Management. |

This sample demonstrates how to use Azure API Management to load balance requests to multiple instances of the Azure OpenAI Service.

This approach uses a static, round-robin load balancing technique implemented with policies in Azure API Management. It provides the following advantages:

- Support for multiple Azure OpenAI Service deployments behind a single Azure API Management endpoint.

- Reduced complexity in application code, because Azure OpenAI Service instance and API key management is moved into Azure API Management using policies and named values backed by Azure Key Vault.

- Retry logic for failed requests between Azure OpenAI Service instances.

For more information on topics covered in this sample, refer to the following documentation:

- Understand policies in Azure API Management

- Error handling in Azure API Management policies

- Manage secrets using named values in Azure API Management policies

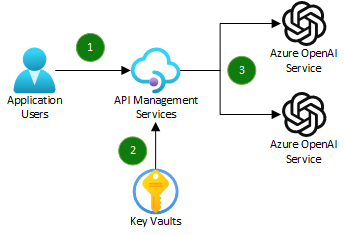

The diagram above illustrates the simplified user flow of the sample:

- A user makes a request to a deployed Azure API Management API that is configured using the Azure OpenAI API specification.

- The API Management API is configured with a policy that uses a static, round-robin load balancing technique to route requests to one of the Azure OpenAI Service instances.

- Based on the selected Azure OpenAI Service instance, the API key for the instance is retrieved from Azure Key Vault.

- The original request, including its headers and body, is forwarded to the selected Azure OpenAI Service instance, along with the API key header for that instance.

If the request fails, the policy will retry the request with the next Azure OpenAI Service instance, and repeat in a round-robin fashion until the request succeeds or a maximum of 3 attempts is reached.
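The flow above can be modeled outside of API Management to make the selection and retry behavior easier to reason about. The following Python sketch is only a simulation, not the policy used by the sample: the `Backend`, `RoundRobinGateway`, and `fake_send` names, the example URLs, and the in-memory `SECRETS` dictionary standing in for Azure Key Vault are all hypothetical. It also assumes that a "failed" request means an HTTP 429 or 5xx response, which the sample may define differently.

```python
from dataclasses import dataclass
from itertools import count
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Backend:
    url: str       # hypothetical Azure OpenAI Service instance endpoint
    key_name: str  # hypothetical name of the secret holding its API key


# A sender takes (url, headers, body) and returns (status, response text).
Send = Callable[[str, Dict[str, str], str], Tuple[int, str]]


class RoundRobinGateway:
    """Simulates static round-robin routing with a bounded number of retries."""

    def __init__(self, backends: List[Backend], secrets: Dict[str, str],
                 send: Send, max_attempts: int = 3) -> None:
        self.backends = backends
        self.secrets = secrets          # stands in for Azure Key Vault
        self.send = send
        self.max_attempts = max_attempts
        self._counter = count()         # shared across requests

    def handle(self, headers: Dict[str, str], body: str) -> Tuple[str, int, str]:
        status = None
        for _ in range(self.max_attempts):
            # Pick the next instance in rotation.
            backend = self.backends[next(self._counter) % len(self.backends)]
            # Look up the key for the selected instance and add it as a header.
            api_key = self.secrets[backend.key_name]
            status, response = self.send(
                backend.url, {**headers, "api-key": api_key}, body)
            # Assumption: throttling (429) and server errors (5xx) are failures.
            if not (status == 429 or status >= 500):
                return backend.url, status, response
        raise RuntimeError(
            f"all {self.max_attempts} attempts failed; last status {status}")


# Hypothetical test data: two instances, the first of which is throttled.
BACKENDS = [
    Backend("https://aoai-1.example.com", "aoai-1-key"),
    Backend("https://aoai-2.example.com", "aoai-2-key"),
]
SECRETS = {"aoai-1-key": "key-one", "aoai-2-key": "key-two"}


def fake_send(url: str, headers: Dict[str, str], body: str) -> Tuple[int, str]:
    if url == BACKENDS[0].url:
        return 429, "throttled"
    return 200, f"served by {url} using {headers['api-key']}"
```

With this setup, `RoundRobinGateway(BACKENDS, SECRETS, fake_send).handle({}, "{}")` first tries the throttled instance, then moves on to the second one and returns its response. Because the counter is shared across requests, successive calls keep rotating through the instances rather than always starting from the first.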

- Azure OpenAI Service, a managed service for OpenAI GPT models that exposes a REST API.

- Azure API Management, a managed service that provides a gateway to the backend Azure OpenAI Service instances.

- Azure Key Vault, a managed service that stores the API keys for the Azure OpenAI Service instances as secrets used by Azure API Management.

- Azure Managed Identity, a user-assigned managed identity for Azure API Management to access Azure Key Vault.

- Azure Bicep, used to create a repeatable infrastructure deployment for the Azure resources.

To deploy the infrastructure and test load balancing using Azure API Management, you need to:

- Install the latest .NET SDK.

- Install PowerShell Core.

- Install the Azure CLI.

- Install Visual Studio Code with the Polyglot Notebooks extension.

- Apply for access to the Azure OpenAI Service.

The Sample.ipynb notebook contains all the necessary steps to deploy the infrastructure using Azure Bicep, and make requests to the deployed Azure API Management API to test load balancing between two Azure OpenAI Service instances.

Note: The sample uses the Azure CLI to deploy the infrastructure from the main.bicep file, and PowerShell commands to test the deployed APIs.

The notebook is split into multiple parts including:

- Log in to Azure and set the default subscription.

- Deploy the Azure resources using Azure Bicep.

- Test load balancing using Azure API Management.

- Clean up the Azure resources.

Each step is documented in the notebook with additional information and links to relevant documentation.
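The notebook tests the deployed API with PowerShell. For readers who want to see the shape of such a request in another language, here is a minimal Python sketch that builds (but does not send) a chat completions request against the gateway. Everything specific in it is an assumption: the `build_chat_request` helper is hypothetical, the gateway URL, deployment name, and API version are placeholders you would take from your own deployment, the path assumes the API is exposed at the gateway root with the standard Azure OpenAI route, and the subscription key is assumed to be sent in API Management's default `Ocp-Apim-Subscription-Key` header, which the sample may configure differently.

```python
import json
import urllib.request


def build_chat_request(gateway_url: str, deployment: str, subscription_key: str,
                       prompt: str, api_version: str) -> urllib.request.Request:
    """Build a POST request for the chat completions operation behind the gateway."""
    # Assumption: the gateway exposes the standard Azure OpenAI route at its root.
    url = (f"{gateway_url.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = json.dumps(
        {"messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: default API Management subscription key header name.
            "Ocp-Apim-Subscription-Key": subscription_key,
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. Repeating the call several times and comparing which instance answered is one way to observe the round-robin behavior, provided the response exposes that information.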