Megatron on AWS EC2 UltraCluster

Megatron on AWS EC2 UltraCluster provides steps, code, and configuration samples to deploy and train a GPT-type Natural Language Understanding (NLU) model on an AWS EC2 UltraCluster of P4d instances using the NVIDIA Megatron-LM framework.

Megatron is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA. Refer to Megatron's original GitHub repository for more information.

Repository Structure

This repository contains configuration files for AWS ParallelCluster in the configs folder.

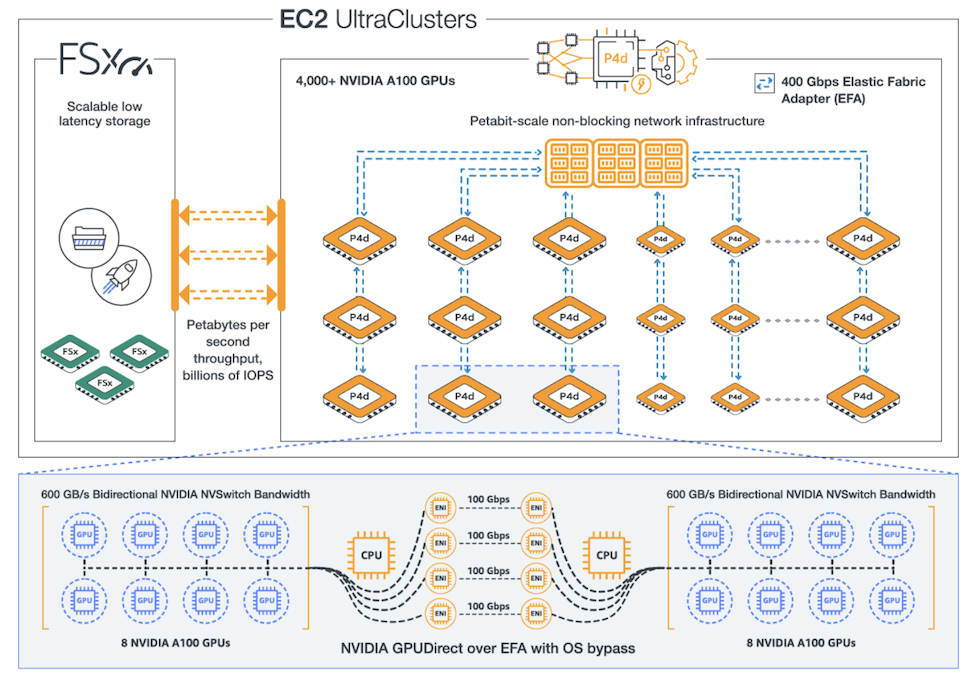

The configurations implement a tightly coupled cluster of p4d.24xlarge EC2 instances, leveraging AWS Elastic Fabric Adapter (EFA) and Amazon FSx for Lustre in an EC2 UltraCluster of P4d configuration.

The scripts folder contains scripts to build a custom Deep Learning AMI with Megatron-LM and its dependencies.

It also contains scripts to train an 8 billion parameter GPT-2 model in an 8-way model parallel configuration, using the SLURM scheduler available through ParallelCluster.
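To make the parallel layout concrete, the following minimal sketch works out how many nodes and model replicas such a run implies. It assumes the 128 GPUs mentioned in the tutorial outline and the 8 NVIDIA A100 GPUs that each p4d.24xlarge instance provides. The function name `parallel_layout` and its parameters are hypothetical and do not come from the repository scripts.

```python
# Illustrative arithmetic only; parallel_layout is a hypothetical helper,
# not part of this repository or of Megatron-LM.
GPUS_PER_P4D_NODE = 8  # a p4d.24xlarge instance has 8 NVIDIA A100 GPUs


def parallel_layout(total_gpus, model_parallel_size,
                    gpus_per_node=GPUS_PER_P4D_NODE):
    """Return the node count and data parallel size for a training run."""
    if total_gpus % model_parallel_size != 0:
        raise ValueError("total_gpus must be divisible by model_parallel_size")
    if total_gpus % gpus_per_node != 0:
        raise ValueError("total_gpus must be divisible by gpus_per_node")
    return {
        # Number of p4d.24xlarge instances needed to supply the GPUs.
        "nodes": total_gpus // gpus_per_node,
        # Number of model replicas, each split across model_parallel_size GPUs.
        "data_parallel_size": total_gpus // model_parallel_size,
    }


layout = parallel_layout(total_gpus=128, model_parallel_size=8)
print(layout)  # {'nodes': 16, 'data_parallel_size': 16}
```

Under these assumptions, an 8-way model parallel group fits exactly within one p4d.24xlarge instance, so each of the 16 nodes would hold one full replica of the model.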

The TUTORIAL.md describes how to:

- Set up a cluster management environment with AWS ParallelCluster.

- Build a custom Deep Learning AMI using the pcluster CLI.

- Configure and deploy a multi-queue cluster with CPU and GPU instances.

- Preprocess the latest English Wikipedia data dump from Wikimedia on a large CPU instance.

- Train the 8B parameter version of GPT-2 using the preprocessed data across 128 GPUs.
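As a rough illustration of the preprocessing step, Megatron-LM's data tooling reads a loose JSON format: one JSON object per line, with the document text stored under a key such as `text`. The sketch below shows that shape on made-up sample strings. The helper `to_loose_json_lines` and the sample articles are hypothetical; the tutorial's own scripts remain the reference for the exact pipeline.

```python
import json


def to_loose_json_lines(documents):
    """Serialize each non-empty document as one JSON object per line."""
    lines = []
    for doc in documents:
        text = doc.strip()
        if text:  # skip empty or whitespace-only documents
            lines.append(json.dumps({"text": text}, ensure_ascii=False))
    return lines


# Made-up sample documents standing in for extracted Wikipedia articles.
sample_articles = [
    "Anarchism is a political philosophy.",
    "   ",
    "Albedo is the fraction of sunlight reflected by a surface.",
]

lines = to_loose_json_lines(sample_articles)
for line in lines:
    print(line)
```

Each printed line is an independent JSON object, so a large dump can be streamed and tokenized one document at a time without loading the whole file into memory.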

The Advanced section of the tutorial also describes how to monitor training using TensorBoard.

Amazon EC2 UltraCluster

For an overview of Amazon EC2 UltraClusters of P4d instances, follow this link.

Contribution guidelines

If you want to contribute to models, please review the contribution guidelines.

License

This project is licensed under the MIT License; see the LICENSE file.