Quan Sun1*, Qiying Yu2,1*, Yufeng Cui1*, Fan Zhang1*, Xiaosong Zhang1*, Yueze Wang1, Hongcheng Gao1, Jingjing Liu2, Tiejun Huang1,3, Xinlong Wang1

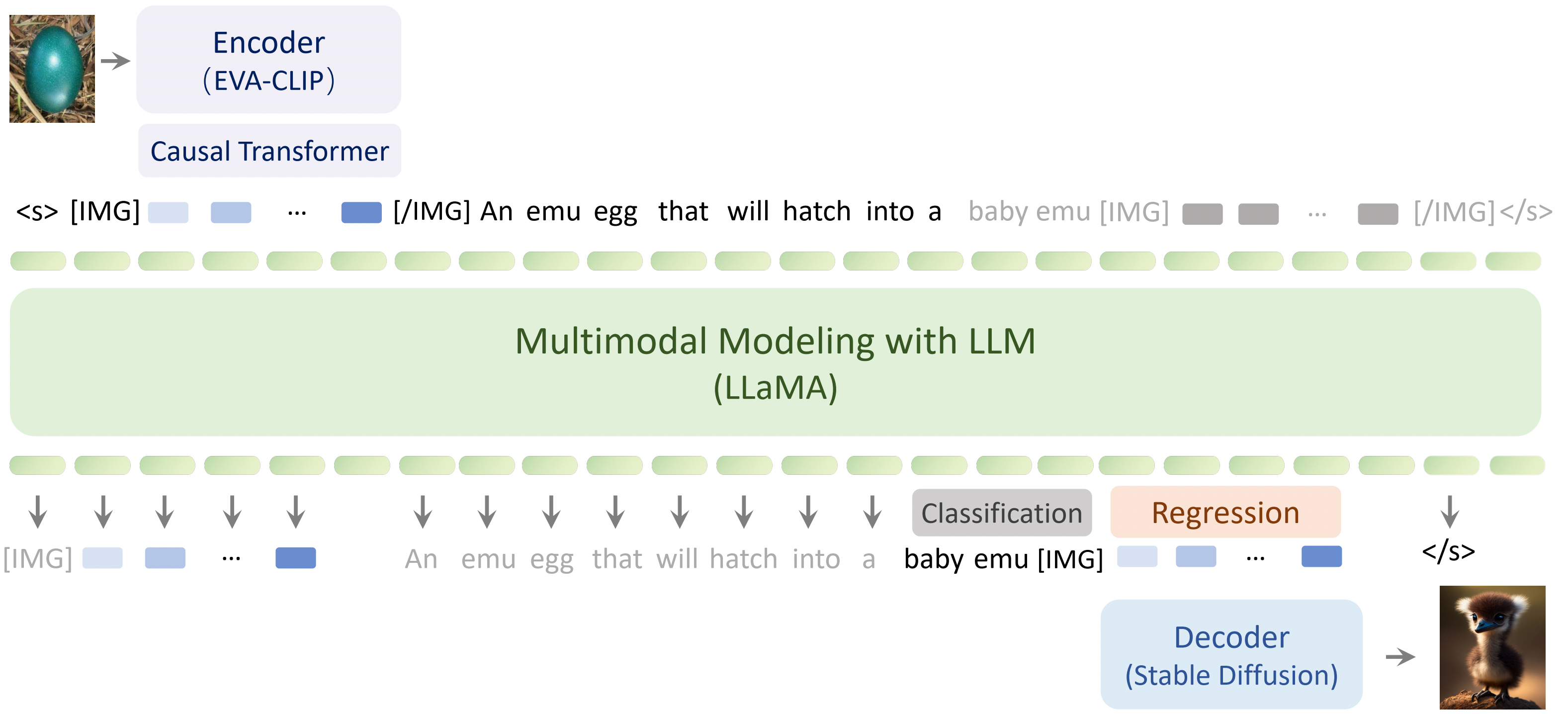

Emu is a Large Multimodal Model (LMM) trained with a unified autoregressive objective, i.e., predict-the-next-element, where the next element can be either a visual embedding or a textual token. Trained under this objective, Emu can serve as a generalist interface for both image-to-text and text-to-image tasks.
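To make the predict-the-next-element objective more concrete, here is a minimal pure-Python sketch of a loss over an interleaved sequence of text tokens and visual embeddings. It assumes that text positions are scored with cross-entropy over vocabulary logits and visual positions with a squared L2 regression to the target embedding, and that `targets` is already shifted by one relative to the model inputs. The names `next_element_loss`, `log_softmax`, `Element`, and the `visual_weight` parameter are hypothetical and for illustration only; this is not Emu's training code.

```python
import math
from typing import List, Sequence, Union

# Hypothetical element type for an interleaved multimodal sequence:
# an int is a discrete text token id, a list of floats is a visual embedding.
Element = Union[int, List[float]]


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def next_element_loss(
    targets: Sequence[Element],
    predictions: Sequence[List[float]],
    visual_weight: float = 1.0,
) -> float:
    """Average next-element loss over an interleaved sequence (illustrative).

    targets[t] is the element to predict at step t (inputs already shifted
    by one); predictions[t] is the model output at step t, read as
    vocabulary logits for a text target and as an embedding for a visual one.
    Assumed losses: cross-entropy for text, squared L2 for visual embeddings.
    """
    if not targets or len(targets) != len(predictions):
        raise ValueError("need equally long, non-empty targets and predictions")
    total = 0.0
    for target, pred in zip(targets, predictions):
        if isinstance(target, int):
            # Text position: negative log-likelihood of the true token id.
            total += -log_softmax(pred)[target]
        else:
            # Visual position: squared L2 distance to the target embedding.
            if len(pred) != len(target):
                raise ValueError("embedding dimension mismatch")
            total += visual_weight * sum((p - y) ** 2 for p, y in zip(pred, target))
    return total / len(targets)


# Usage: one text token followed by one 2-d visual embedding.
loss = next_element_loss([2, [0.5, -1.0]], [[0.0, 0.0, 0.0], [0.5, -1.0]])
print(round(loss, 4))  # prints 0.5493
```

In the usage line, a uniform prediction over three tokens contributes log 3 for the text position and an exact embedding match contributes 0, so the average is log(3)/2. Treating both modalities in a single loop reflects the idea of one unified objective; the relative weighting of the two terms is an assumption here.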

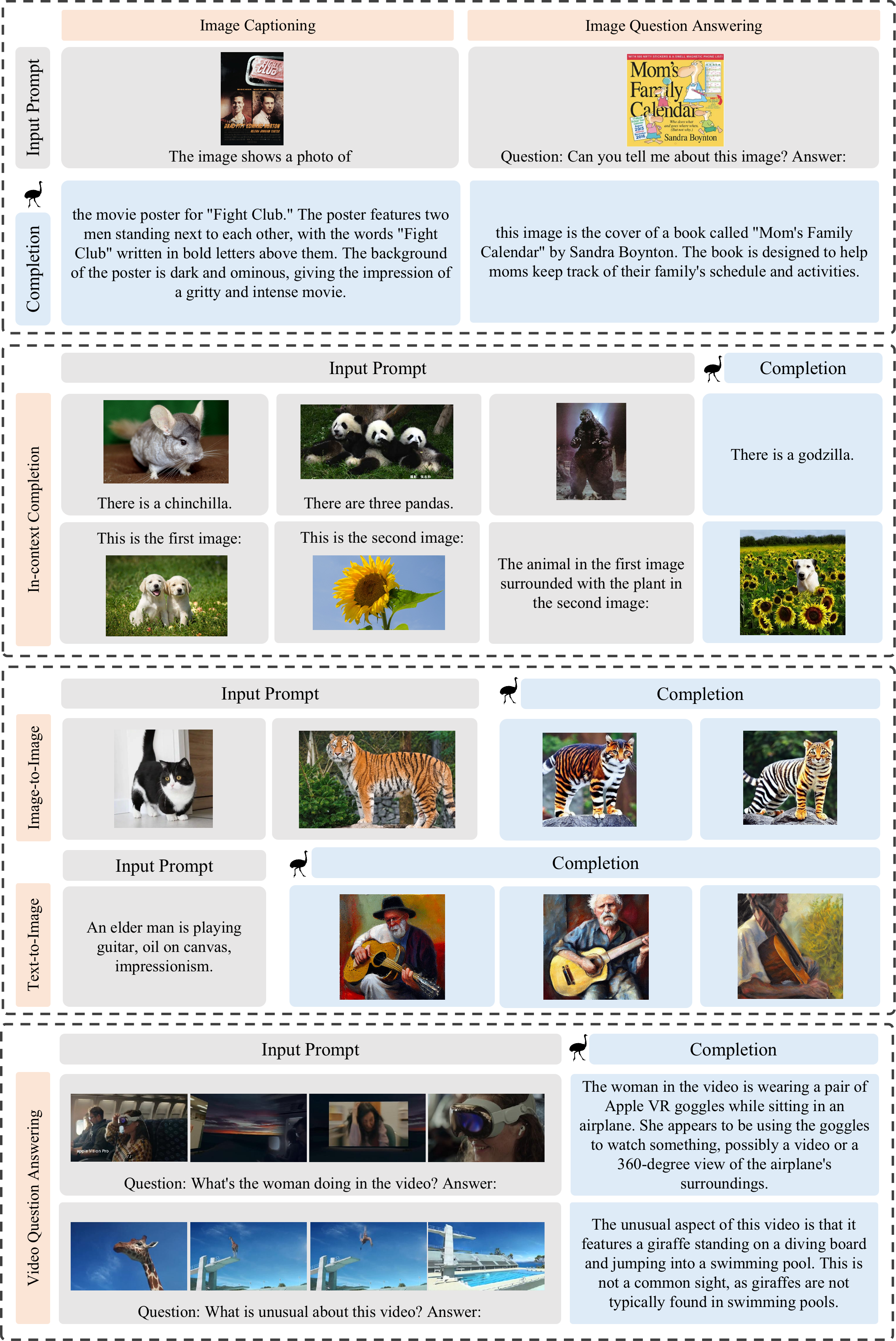

Emu serves as a generalist interface capable of diverse multimodal tasks, such as image captioning, image/video question answering, and text-to-image generation, as well as new abilities such as in-context text and image generation and image blending.

Clone this repository and install the required packages:

```shell
git clone https://github.com/baaivision/Emu
cd Emu
pip install -r requirements.txt
```

We release the pretrained and instruction-tuned weights of Emu. Our weights are subject to LLaMA's license.

| Model name | Weight |
|---|---|
| Emu | 🤗 HF link (27GB) |
| Emu-I | 🤗 HF link (27GB) |

At present, we provide inference code for image captioning and visual question answering:

```shell
python inference.py --instruct --ckpt-path $Instruct_CKPT_PATH
```

We thank the authors of LLaMA, BLIP-2, Stable Diffusion, and FastChat for their great work.

If you find Emu useful for your research and applications, please consider starring this repository and citing:

```bibtex
@article{Emu,
  title={Generative Pretraining in Multimodality},
  author={Sun, Quan and Yu, Qiying and Cui, Yufeng and Zhang, Fan and Zhang, Xiaosong and Wang, Yueze and Gao, Hongcheng and Liu, Jingjing and Huang, Tiejun and Wang, Xinlong},
  publisher={arXiv:2307.05222},
  year={2023},
}
```