The Singularity Data Lake Add-On for Splunk provides integration with Singularity Data Lake and DataSet by SentinelOne. The key functions allow two-way integration:

- SPL custom command to query directly from the Splunk UI.

- Inputs to index alerts as CIM-compliant, or any user-defined query results.

- Alert action to send events from Splunk.

The add-on can be installed manually via the .tgz file in the release directory. Reference Splunk documentation for installing add-ons. For Splunk Cloud customers, reference Splunk documentation for private app installation on Classic Experience or Victoria Experience.

For those looking to customize, the package subdirectory contains all artifacts. To compile, reference Splunk's UCC Framework instructions to use ucc-gen and slim package.

The add-on uses Splunk encrypted secrets storage, so admins require admin_all_objects to create secret storage objects and users require list_storage_passwords capability to retrieve secrets.

| Splunk component | Required | Comments |
|---|---|---|
| Search heads | Yes | Required to use the custom search command. |
| Indexers | No | Parsing is performed during data collection. |
| Forwarders | Optional | For distributed deployments, if the modular inputs are used, this add-on is installed on heavy forwarders. |

| Splunk component | Required | Comments |
|---|---|---|
| Search heads | Yes | Required to use the custom search command. Splunk Cloud Victoria Experience also handles modular inputs on the search heads. |
| Indexers | No | Parsing is performed during data collection. |
| Inputs Data Manager | Optional | For Splunk Cloud Classic Experience, if the modular inputs are used, this add-on is installed on an IDM. |

- From the SentinelOne console, ensure Enhanced Deep Visibility is enabled by clicking your name > My User > Change Deep Visibility Mode > Enhanced.

- Open Enhanced Deep Visibility.

- In the top left, ensure an account is selected (not Global).

- Continue following the DataSet instructions below.

- Make note of the URL (e.g. `https://app.scalyr.com`, `https://xdr.us1.sentinelone.net` or `https://xdr.eu1.sentinelone.net`). For SentinelOne users, note this differs from the core SentinelOne console URL.

- Navigate to API Keys.

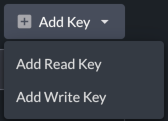

- Under Log Access Keys, click Add Key > Add Read Key (required for search command and inputs).

- Under Log Access Keys, click Add Key > Add Write Key (required for alert action).

- Optionally, click the pencil icon to rename the keys.

To get the AuthN API token, follow these steps:

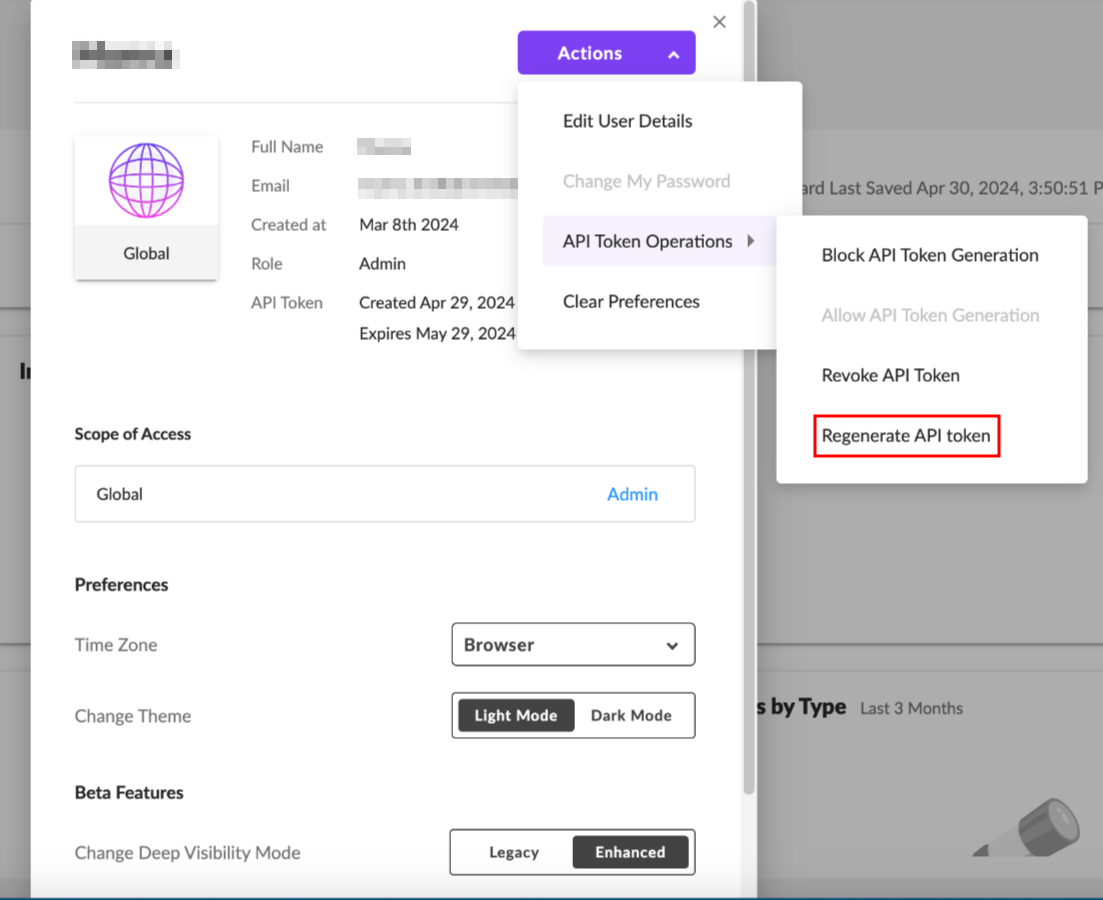

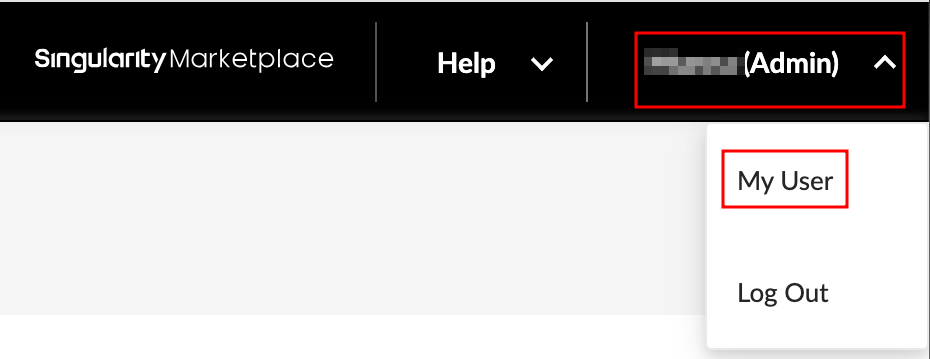

- Log in to the SentinelOne console and click your user name > My User.

- Click Actions > API Token Operations > Generate / Regenerate API token.

- Copy the API Token and save it for configuration.

- In Splunk, open the Add-on

- On the configuration > account tab:

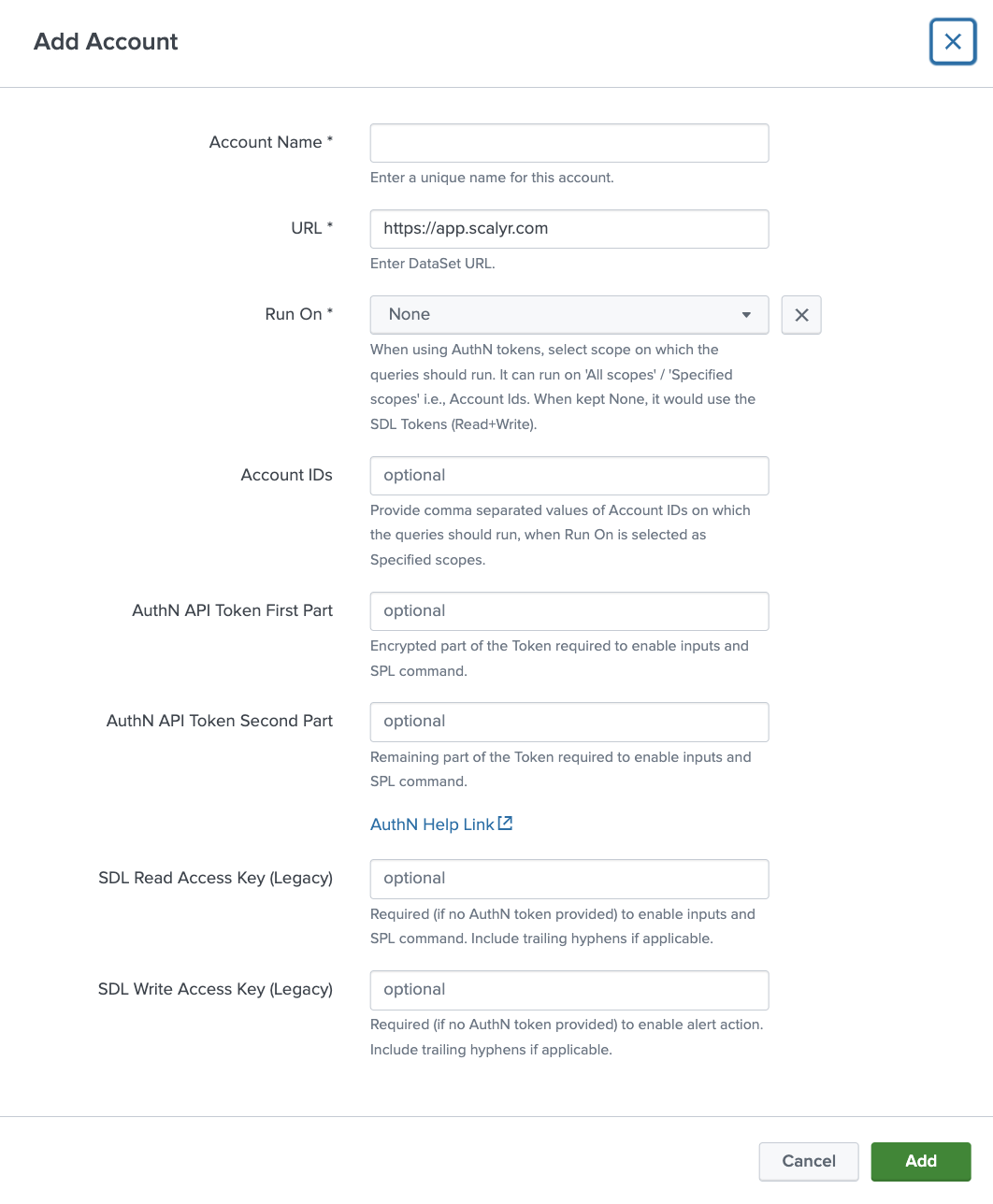

- Click Add

- Enter a user-friendly account name. For multiple accounts, the account name can be used in queries (more details below).

- Enter the full URL noted above (e.g. `https://app.scalyr.com`, `https://xdr.us1.sentinelone.net` or `https://xdr.eu1.sentinelone.net`).

- Enter the Tenant value, which can be True, False or blank. If set to True, queries run across the entire tenant. If set to False, provide Account IDs as comma-separated values to run searches in those specific accounts. Leave it blank if you are not using tenant-level searches.

- Provide the comma-separated Account IDs if Tenant is False, e.g. `1234567890,9876543210`.

- Enter the first part of the AuthN API token, which is its first 220 characters.

- Enter the second part of the AuthN API token, which is the remaining characters.

- Use this command to prepare both parts of the AuthN API token: `read -p "Enter Token: " input_string && echo "Part1: $(echo "$input_string" | cut -c 1-220)"; echo "Part2: $(echo "$input_string" | cut -c 221-)"`

- Reason for splitting the AuthN token into two parts: the Splunk storage manager can store only 256 characters of encrypted data from inputs, and the AuthN token can be longer than 256 characters. The token is therefore split in two: the first part (the first 220 characters) is encrypted and the second part is not. As most of the token is encrypted, its use is safe.

- Enter the DataSet read key from above (required for searching). Ignore this field if an AuthN token value is provided.

- Enter the DataSet write key from above (only required for alert actions).

- Click Save

- Optionally, configure logging level and proxy information on the associated tabs.

- Click Save.

- The included Singularity Data Lake by Example dashboard can be used to confirm connectivity and also shows example searches to get started.
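The token-splitting step in the account setup above can also be sketched in Python, for example on a workstation without a Bash shell. This is only an illustration and not part of the add-on: the function name `split_authn_token` is hypothetical, and the 220-character boundary is taken from the setup steps.

```python
from typing import Tuple


def split_authn_token(token: str, first_len: int = 220) -> Tuple[str, str]:
    """Split a token into the two parts requested by the account form.

    Hypothetical helper: the first part holds the first `first_len`
    characters, the second part holds whatever remains (possibly empty).
    """
    token = token.strip()  # drop stray whitespace from copy and paste
    return token[:first_len], token[first_len:]


# Demonstration with a dummy 300-character value, not a real token.
part1, part2 = split_authn_token("x" * 300)
print(f"Part1 length: {len(part1)}")
print(f"Part2 length: {len(part2)}")
```

Joining the two parts back together should reproduce the original token, which is a quick way to confirm nothing was lost before pasting them into the form.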

The | dataset command allows queries against the DataSet APIs directly from Splunk's search bar.

Optional parameters are supported:

- account - If multiple accounts are used, the account name as configured in setup can be specified (`emea` in the screenshot above). If multiple accounts are configured but not specified in search, the first result (by alphanumeric name) is used. To search across all accounts, `account=*` can be used.

- method - Define `query`, `powerquery`, `facet` or `timeseries` to call the appropriate REST endpoint. Default is `query`.

- query - The DataSet query filter used to select events. Default is no filter (return all events limited by time and maxCount).

- starttime - The Splunk time picker can be used (not "All Time"), but if starttime is defined it will take precedence to define the start time for DataSet events to return. Use epoch time or relative shorthand in the form of a number followed by d, h, m or s (for days, hours, minutes or seconds), e.g.: `24h`. Default is `24h`.

- endtime - The Splunk time picker can be used (not "All Time"), but if endtime is defined it will take precedence to define the end time for DataSet events to return. Use epoch time or relative shorthand in the form of a number followed by d, h, m or s (for days, hours, minutes or seconds), e.g.: `5m`. Default is current time at search.
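To make the `starttime` and `endtime` shorthand concrete, the sketch below shows one way such values could be resolved to epoch seconds. It is an assumption-laden illustration, not the add-on's actual parser: the name `to_epoch_seconds` is hypothetical, a relative value is read as "that long before now", and an all-digit value is treated as an epoch time in seconds.

```python
import re
import time
from typing import Optional

# Seconds per unit for the d/h/m/s shorthand described above.
_UNIT_SECONDS = {"d": 86400, "h": 3600, "m": 60, "s": 1}


def to_epoch_seconds(value: str, now: Optional[float] = None) -> int:
    """Resolve an epoch value or relative shorthand to epoch seconds."""
    now = time.time() if now is None else now
    value = value.strip()
    if value.isdigit():
        # Assumption: a bare number is already an epoch time in seconds.
        return int(value)
    match = re.fullmatch(r"(\d+)([dhms])", value)
    if match is None:
        raise ValueError(f"unsupported time value: {value!r}")
    amount, unit = match.groups()
    # Relative shorthand counts backwards from the reference time.
    return int(now - int(amount) * _UNIT_SECONDS[unit])


# Example: a window from 10 minutes ago to 1 minute ago.
reference = 1_700_000_000
print(to_epoch_seconds("10m", now=reference), to_epoch_seconds("1m", now=reference))
```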

For query and powerquery:

- maxcount - Number of events to return.

- columns - Specified fields to return from DataSet query (or powerquery, analogous to using `| columns` in a powerquery). Yields performance gains for high-volume queries instead of returning and merging all fields.

For facet:

- field - Define field to get most frequent values of. Default is logfile.

For timeseries:

- function - Define value to compute from matching events. Default is rate.

- buckets - The number of numeric values to return by dividing time range into equal slices. Default is 1.

- createsummaries - Specify whether to create summaries that update automatically in the ingestion pipeline. Default is true; setting it to false is recommended for one-off queries or while testing new queries.

- useonlysummaries - Specify whether to only use preexisting timeseries for fastest speed.
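As a rough illustration of what `buckets` means, the sketch below divides a time range into equal slices. It only shows the arithmetic; how DataSet aligns or rounds its slices is not described here, and the function name `bucket_edges` is hypothetical.

```python
from typing import List, Tuple


def bucket_edges(start: int, end: int, buckets: int = 1) -> List[Tuple[float, float]]:
    """Return (slice_start, slice_end) pairs covering start..end in equal slices."""
    if buckets < 1:
        raise ValueError("buckets must be at least 1")
    if end <= start:
        raise ValueError("end must be after start")
    width = (end - start) / buckets
    return [(start + i * width, start + (i + 1) * width) for i in range(buckets)]


# A 24-hour range with buckets=24 yields one-hour slices.
slices = bucket_edges(0, 86400, buckets=24)
print(len(slices), slices[0], slices[-1])
```

With the default of `buckets=1`, the whole time range is a single slice, so one value is returned for the entire search window.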

For all queries, wrap the entire query in double quotes and use single quotes inside it (`"serverHost = * AND Action = 'allow'"`), or use inner double quotes escaped with a backslash (`"dataset = \"accesslog\""`), as shown in the examples below.
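When search strings are generated by a script, the quoting rule can be applied mechanically. The helper below is a minimal sketch under stated assumptions: `build_dataset_search` is a hypothetical name, it uses the `search=` argument seen in most examples in this document, and it only escapes inner double quotes.

```python
def build_dataset_search(query: str, method: str = "query", **options: str) -> str:
    """Build a `| dataset` search string with the query safely double-quoted."""
    # Inner double quotes must be backslash-escaped; single quotes pass through.
    escaped = query.replace('"', '\\"')
    parts = [f"| dataset method={method}", f'search="{escaped}"']
    # Remaining options (maxcount, starttime, ...) are appended as key=value.
    parts += [f"{key}={value}" for key, value in options.items()]
    return " ".join(parts)


print(build_dataset_search("serverHost = * AND Action = 'allow'", maxcount="50"))
print(build_dataset_search('dataset = "accesslog"', method="powerquery"))
```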

For powerqueries using timebucket functions, return the time field as `timestamp`. This field is used to timestamp events in Splunk as `_time`.

Query Example:

| dataset method=query search="serverHost = * AND Action = 'allow'" maxcount=50 starttime=10m endtime=1m

Power Query Example 1:

| dataset method=powerquery search="dataset = \"accesslog\" | group requests = count(), errors = count(status == 404) by uriPath | let rate = errors / requests | filter rate > 0.01 | sort -rate"

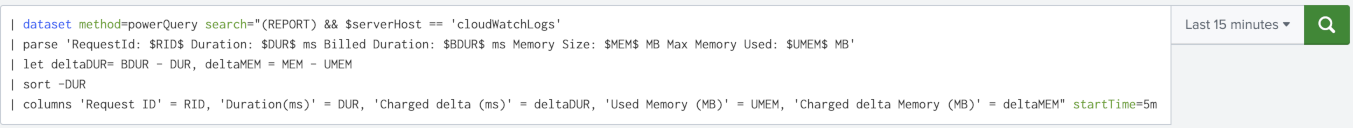

Power Query Example 2:

| dataset account=emea method=powerQuery search="$serverHost == 'cloudWatchLogs' | parse 'RequestId: $RID$ Duration: $DUR$ ms Billed Duration: $BDUR$ ms Memory Size: $MEM$ MB Max Memory Used: $UMEM$ MB' | let deltaDUR= BDUR - DUR, deltaMEM = MEM - UMEM | sort -DUR | columns 'Request ID' = RID, 'Duration(ms)' = DUR, 'Charged delta (ms)' = deltaDUR, 'Used Memory (MB)' = UMEM, 'Charged delta Memory (MB)' = deltaMEM" starttime=5m

Facet Query Example:

| dataset account=* method=facet search="serverHost = *" field=serverHost maxcount=25 | spath | table value, count

Timeseries Query Example:

| dataset method=timeseries search="serverHost='scalyr-metalog'" function="p90(delayMedian)" starttime="24h" buckets=24 createsummaries=false onlyusesummaries=false

Since events are returned in JSON format, the Splunk spath command is useful. Additionally, the Splunk collect command can be used to add the events to a summary index:

| dataset query="serverHost = * AND Action = 'allow'" maxcount=50 starttime=10m endtime=1m | spath | collect index=dataset

For use cases requiring data indexed in Splunk, optional inputs are provided utilizing time-based checkpointing to prevent reindexing the same data:

| Source Type | Description | CIM Data Model |
|---|---|---|
| dataset:alerts | Predefined Power Query API call to index alert state change records | Alerts |
| dataset:query | User-defined standard query API call to index events | - |
| dataset:powerquery | User-defined PowerQuery API call to index events | - |
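The idea behind time-based checkpointing can be sketched as follows: each run starts where the previous run ended, so the same time window is not collected twice. This is a simplified illustration and not the add-on's implementation. The function names are hypothetical, and a local JSON file stands in for whatever checkpoint storage the inputs actually use.

```python
import json
import os
import tempfile
from typing import Tuple


def next_window(checkpoint_path: str, now: int, default_start: int) -> Tuple[int, int]:
    """Return the (start, end) window to collect on this run."""
    start = default_start  # used only on the first run
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as handle:
            start = json.load(handle)["last_end"]
    return start, now


def save_checkpoint(checkpoint_path: str, end: int) -> None:
    """Record where this run stopped so the next run can resume there."""
    with open(checkpoint_path, "w") as handle:
        json.dump({"last_end": end}, handle)


# Two consecutive runs: the second window begins where the first one ended.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "dataset_alerts.json")
    first = next_window(path, now=1000, default_start=700)
    save_checkpoint(path, first[1])
    second = next_window(path, now=1300, default_start=1000)
    print(first, second)
```

Saving the checkpoint only after a window has been indexed successfully means a failed run is retried from the same start time rather than skipped.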

-

On the inputs page, click Create New Input and select the desired input

-

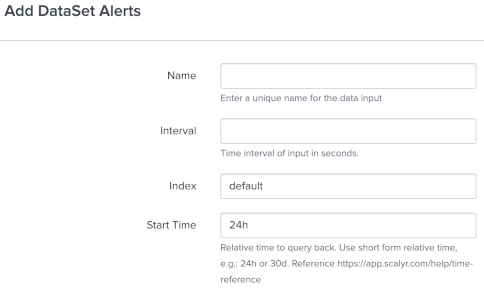

For DataSet alerts, enter:

  - A name for the input.

  - Interval, in seconds. A good starting point is `300` seconds to collect every five minutes.

  - Splunk index name.

  - Start time, in relative shorthand form, e.g.: `24h` for 24 hours before input execution time.

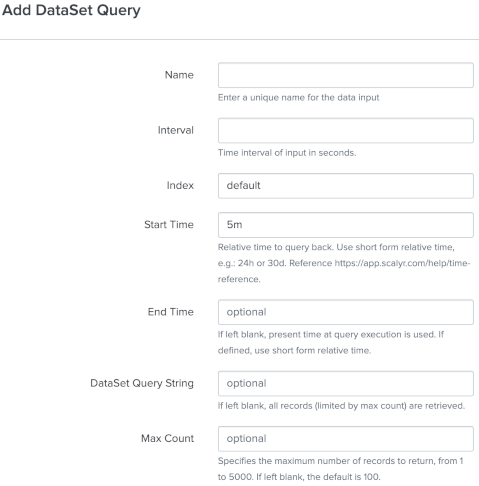

- For DataSet queries, enter:

  - A name for the input.

  - Interval, in seconds. A good starting point is `300` seconds to collect every five minutes.

  - Splunk index name.

  - Start time, in relative shorthand form, e.g.: `24h` for 24 hours before input execution time.

  - (optional) End time, in relative shorthand form, e.g.: `5m` for 5 minutes before input execution time.

  - (optional) Query string used to return matching events.

  - (optional) Maximum number of events to return.

- For DataSet Power Queries, enter:

  - A name for the input.

  - Interval, in seconds. A good starting point is `300` seconds to collect every five minutes.

  - Splunk index name.

  - Start time, in relative shorthand form, e.g.: `24h` for 24 hours before input execution time.

  - (optional) End time, in relative shorthand form, e.g.: `5m` for 5 minutes before input execution time.

  - Query string used to return matching events, including commands such as `| columns`, `| limit`, etc.

An alert action allows sending an event to the DataSet addEvents API.

SentinelOne Data Lake users are able to see meta logs, such as search actions, but no endpoint data in Splunk - Ensure the read API token was provisioned from an account, not Global.

Error saving configuration "CSRF validation failed" - This is a Splunk browser issue; try reloading the page, using a private window or clearing cache and cookies then retrying.

Search errors `Account token error, review search log for details` or `Splunk configuration error, see search log for details` - The API token could not be retrieved. Common causes include a user role missing the `list_storage_passwords` capability, an API token that is not set, or an account name that has not been configured. Review the Job Inspector search log for errors returned by Splunk. `Error retrieving account settings, error = UrlEncoded('broken')` indicates a likely misconfigured or incorrect account name; `splunklib.binding.HTTPError: HTTP 403 Forbidden -- You (user=username) do not have permission to perform this operation (requires capability: list_storage_passwords OR admin_all_objects)` indicates missing Splunk user permissions (`list_storage_passwords`).

To troubleshoot the custom command, check the Job Inspector search log, also available in the internal index: index=_internal app="TA_dataset" sourcetype=splunk_search_messages.

For support, open a ticket with SentinelOne or DataSet support, including any logged errors.

Though not typically an issue for users, DataSet does have API rate limiting. If issues are encountered, open a case with support to review and potentially increase limits.

DataSet API PowerQueries limit search filters to 5,000 characters.

If Splunk events all show the same time, ensure results are returning a timestamp field. This is used to timestamp events as _time in Splunk.

This add-on was built with the Splunk Add-on UCC framework and uses the Splunk Enterprise Python SDK. Splunk is a trademark or registered trademark of Splunk Inc. in the United States and other countries.

For information on development and contributing, please see CONTRIBUTING.md.

For information on how to report security vulnerabilities, please see SECURITY.md.

Copyright 2023 SentinelOne, Inc.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this work except in compliance with the License. You may obtain a copy of the License in the LICENSE file, or at: