Adaptive Context-Aware Object Detection for Resource-Constrained Embedded Devices

Convolutional Neural Networks achieve state-of-the-art accuracy in object detection tasks. However, they have large compute and memory footprints that challenge their deployment on resource-constrained edge devices. In AdaCon, we leverage the prior knowledge about the probabilities that different object categories can occur jointly to increase the efficiency of object detection models. In particular, our technique clusters the object categories based on their spatial co-occurrence probability. We use those clusters to design a hierarchical adaptive network. During runtime, a branch controller chooses which part(s) of the network to execute based on the spatial context of the input frame.

Available on IEEE Xplore and arXiv.

AdaCon consists of two main steps:

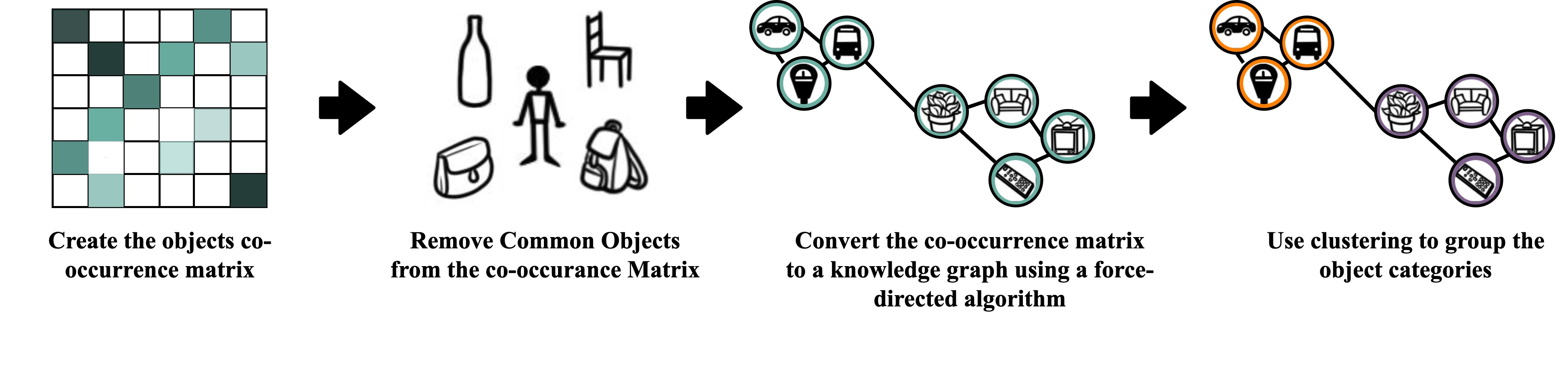

Spatial-context-based clustering has five steps. First, we construct the co-occurrence matrix of the object categories, where each value is the number of times two object categories appear in the same scene across the entire training dataset. Second, we remove the common objects. Third, we convert the frequency-based co-occurrence matrix to a correlation matrix. Fourth, we use the correlation matrix to build a knowledge graph with the Fruchterman-Reingold force-directed algorithm. Finally, we cluster the objects based on their location in the knowledge graph.
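The first three steps can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the code used in this repo: the function names, the `max_fraction` rule for deciding which objects count as "common", and the normalization `n_ij / sqrt(n_ii * n_jj)` used in place of the correlation step are all hypothetical choices.

```python
from itertools import combinations
from math import sqrt


def cooccurrence(scenes, categories):
    """Count how often each pair of categories appears in the same scene."""
    index = {c: i for i, c in enumerate(categories)}
    n = len(categories)
    counts = [[0] * n for _ in range(n)]
    for scene in scenes:
        present = sorted({index[c] for c in scene if c in index})
        for i in present:
            counts[i][i] += 1  # diagonal: number of scenes containing category i
        for i, j in combinations(present, 2):
            counts[i][j] += 1
            counts[j][i] += 1
    return counts


def remove_common(counts, categories, num_scenes, max_fraction=0.5):
    """Drop categories found in more than max_fraction of scenes (assumed rule)."""
    keep = [i for i in range(len(categories))
            if counts[i][i] / num_scenes <= max_fraction]
    kept_names = [categories[i] for i in keep]
    sub = [[counts[i][j] for j in keep] for i in keep]
    return sub, kept_names


def normalize(counts):
    """Scale counts to [0, 1] as n_ij / sqrt(n_ii * n_jj) (assumed normalization)."""
    n = len(counts)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            denom = sqrt(counts[i][i] * counts[j][j])
            out[i][j] = counts[i][j] / denom if denom else 0.0
    return out


# Toy dataset: each scene is the set of categories annotated in one image.
scenes = [
    {"person", "car", "traffic light"},
    {"person", "fork", "knife"},
    {"person", "car"},
    {"fork", "knife"},
]
categories = ["person", "car", "traffic light", "fork", "knife"]

counts = cooccurrence(scenes, categories)
sub_counts, kept = remove_common(counts, categories, len(scenes))
similarity = normalize(sub_counts)
```

In this toy dataset `person` appears in three of the four scenes and is removed as a common object, after which `fork` and `knife` are strongly related while `car` and `fork` are unrelated. For the remaining two steps, one option is to feed the resulting weights into a force-directed layout (for example, `networkx.spring_layout`, which implements Fruchterman-Reingold) and then cluster the node positions.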

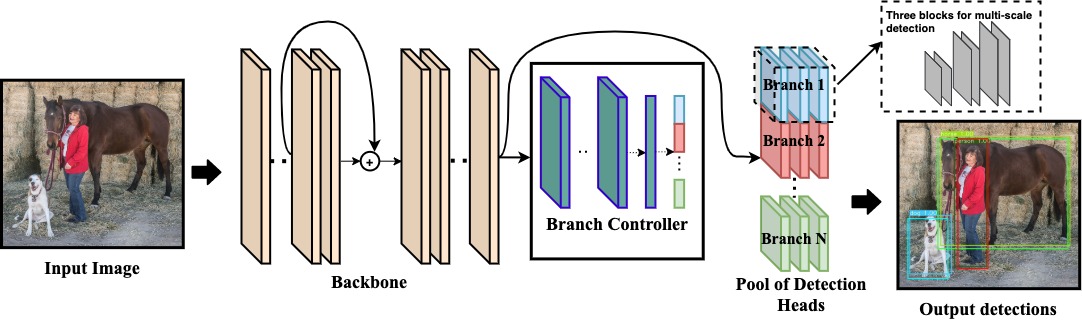

Our adaptive object detection model consists of three components: a backbone, a branch controller, and a pool of specialized detection heads (branches). The backbone is executed first to extract the common features of the input image. The branch controller then takes the extracted features and routes them to one or more of the downstream specialized branches. Only the chosen branches are executed to produce the detected object categories and their bounding boxes. At runtime, the model has two modes of operation: single-branch execution mode, where only the branch with the highest confidence score is executed, and multi-branch execution mode, where every branch with a confidence score above a given threshold is executed.
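The two execution modes amount to a small selection rule over the branch controller's confidence scores. The sketch below is a minimal illustration, not the repo's implementation: `select_branches` is a hypothetical helper, and falling back to the best branch when no score exceeds the threshold is an assumption. The default threshold of 0.4 matches the documented `--bc-thres` default.

```python
def select_branches(scores, mode="multi", threshold=0.4):
    """Return the indices of the branches to execute.

    scores: one confidence score per branch, as produced by a branch controller.
    mode: "single" runs only the top-scoring branch; "multi" runs every branch
          whose score is strictly above the threshold.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    best = max(range(len(scores)), key=scores.__getitem__)
    if mode == "single":
        return [best]
    if mode == "multi":
        chosen = [i for i, s in enumerate(scores) if s > threshold]
        # Assumption: if no branch clears the threshold, still run the best one.
        return chosen or [best]
    raise ValueError(f"unknown mode: {mode!r}")


single = select_branches([0.1, 0.7, 0.5], mode="single")
multi = select_branches([0.1, 0.7, 0.5], mode="multi")
fallback = select_branches([0.1, 0.2, 0.3], mode="multi")
```

With scores `[0.1, 0.7, 0.5]`, single-branch mode runs only branch 1, while multi-branch mode runs branches 1 and 2 because both exceed 0.4.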

- Clone the repo:

  `git clone https://github.com/scale-lab/AdaCon.git ; cd AdaCon`

- Create a virtual environment with Python 3.7 or later:

  `python -m venv env ; source env/bin/activate`

- Install the requirements:

  `pip install -r requirements.txt`

- Download the pretrained AdaCon model:

  `./weights/download_adacon_weights.sh`

- (Optional) Download the pretrained YOLO models:

  `./weights/download_yolov3_weights.sh`

- Run inference:

  `python detect.py --model model.args --adaptive --source 0`

`detect.py` runs inference on a variety of sources and saves the results to `runs/detect`.

`python detect.py --model {MODEL.args} --source {SRC} [--adaptive] [--single] [--multi] [--bc-thres {THRES}] [--img-size {IMG_SIZE}]`

- `MODEL.args` is the path of the desired model description; see `model.args` for an example.
- `SRC` is the input source. Set it to:
  - `0` for webcam.
  - `file.jpg` for an image.
  - `file.mp4` for a video.
  - `path` for a directory.
- `--adaptive` enables the adaptive AdaCon model; otherwise the static model is executed.
- `--single` enables single-branch execution of AdaCon.
- `--multi` enables multi-branch execution of AdaCon (default).
- `THRES` is the confidence threshold for AdaCon's branch controller (default: 0.4).
- `IMG_SIZE` is the resolution of the input image.
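When inference is launched from another script, it can help to assemble the `detect.py` command programmatically. The helper below is a hypothetical convenience wrapper, not part of this repo; it only emits the flags documented above and does not validate them against `detect.py` itself.

```python
import sys


def build_detect_command(model, source, adaptive=False, mode=None,
                         bc_thres=None, img_size=None):
    """Build an argument list for detect.py using only the documented flags."""
    cmd = [sys.executable, "detect.py", "--model", model, "--source", str(source)]
    if adaptive:
        cmd.append("--adaptive")
    if mode is not None:
        if mode not in ("single", "multi"):
            raise ValueError("mode must be 'single' or 'multi'")
        cmd.append("--" + mode)
    if bc_thres is not None:
        cmd += ["--bc-thres", str(bc_thres)]
    if img_size is not None:
        cmd += ["--img-size", str(img_size)]
    return cmd


# Adaptive model, webcam input, single-branch mode, custom controller threshold.
cmd = build_detect_command("model.args", 0, adaptive=True,
                           mode="single", bc_thres=0.5)
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root.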

- To download the COCO dataset, run `cd data ; ./get_coco2014.sh` or `cd data ; ./get_coco2017.sh` for COCO 2014 or COCO 2017, respectively.
- To use a custom dataset, follow this tutorial.

`test.py` evaluates a model on a dataset.

`python test.py --model {MODEL.args} --data {DATA.data} [--adaptive] [--single] [--multi] [--bc-thres {THRES}] [--img-size {IMG_SIZE}]`

- `MODEL.args` is the path of the desired model description; see `model.args` for an example.
- `DATA.data` is the path of the desired data description; use `data/coco2014.data` or `data/coco2017.data`, or follow the same format for your custom dataset.
- `--adaptive` enables the adaptive AdaCon model; otherwise the static model is executed.
- `--single` enables single-branch execution of AdaCon.
- `--multi` enables multi-branch execution of AdaCon (default).
- `THRES` is the confidence threshold for AdaCon's branch controller (default: 0.4).
- `IMG_SIZE` is the resolution of the input image.

`train.py` trains the AdaCon model and also supports training a static model. The script takes a pretrained backbone (part of the static model) and then trains the branch controller and the branches.

`python train.py --model {MODEL.args} --data {DATA.data} [--adaptive]`

- `MODEL.args` is the path of the desired model description; see `model.args` for an example.
- `DATA.data` is the path of the desired data description; use `data/coco2014.data` or `data/coco2017.data`, or follow the same format for your custom dataset.
- `--adaptive` enables training the adaptive AdaCon model; otherwise the static model is trained.

This code is forked from the Ultralytics implementation of YOLOv3.