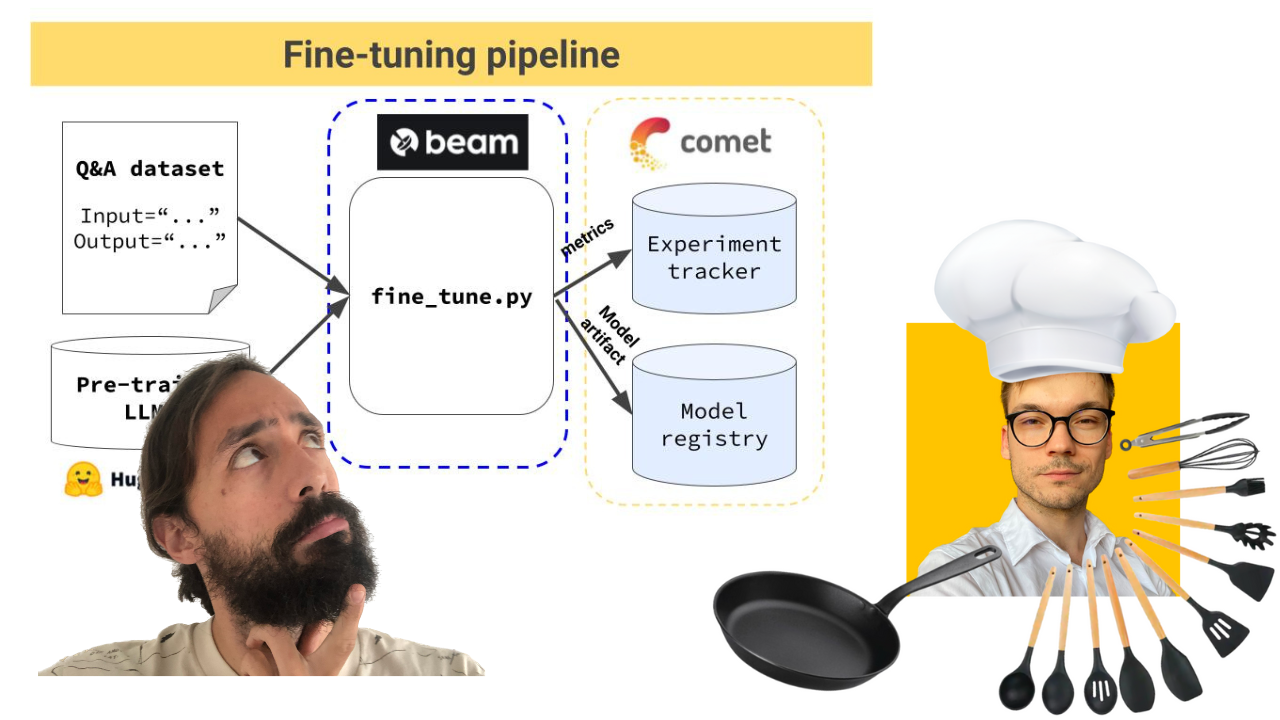

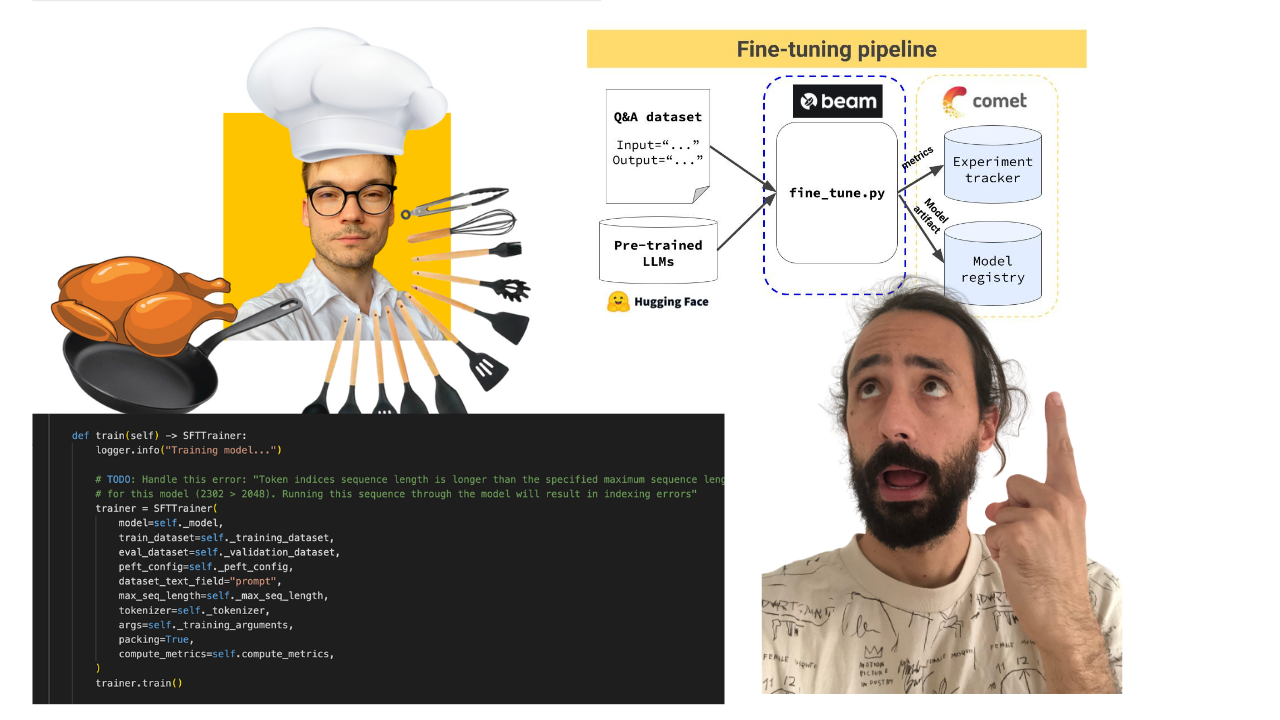

- Fine-tune Falcon 7B on our own generated Q&A dataset of investing questions and answers based on Alpaca News.

- A single GPU seems to be enough if we use Lit-Parrot.
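A quick way to see why one GPU can be plausible is to estimate how much memory the model weights alone need. The sketch below assumes a nominal 7e9 parameters (the real Falcon 7B count differs slightly) and deliberately ignores activations, the KV cache, gradients and optimizer state, so treat the numbers as a lower bound rather than a hardware requirement.

```python
# Weights-only memory estimate for a nominal 7B-parameter model (assumption: 7e9 params).
# Activations, KV cache, gradients and optimizer state are NOT included.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weights_gib(num_params: float, dtype: str) -> float:
    """Return the size of the model weights in GiB for the given storage dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weights_gib(7e9, dtype):.1f} GiB")
```

In bf16 the weights alone come to roughly 13 GiB, and 8-bit or 4-bit quantization shrinks that further. Parameter-efficient fine-tuning (for example adapter or LoRA methods) keeps the number of trainable parameters small, but the actual headroom still depends on batch size, sequence length and precision.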

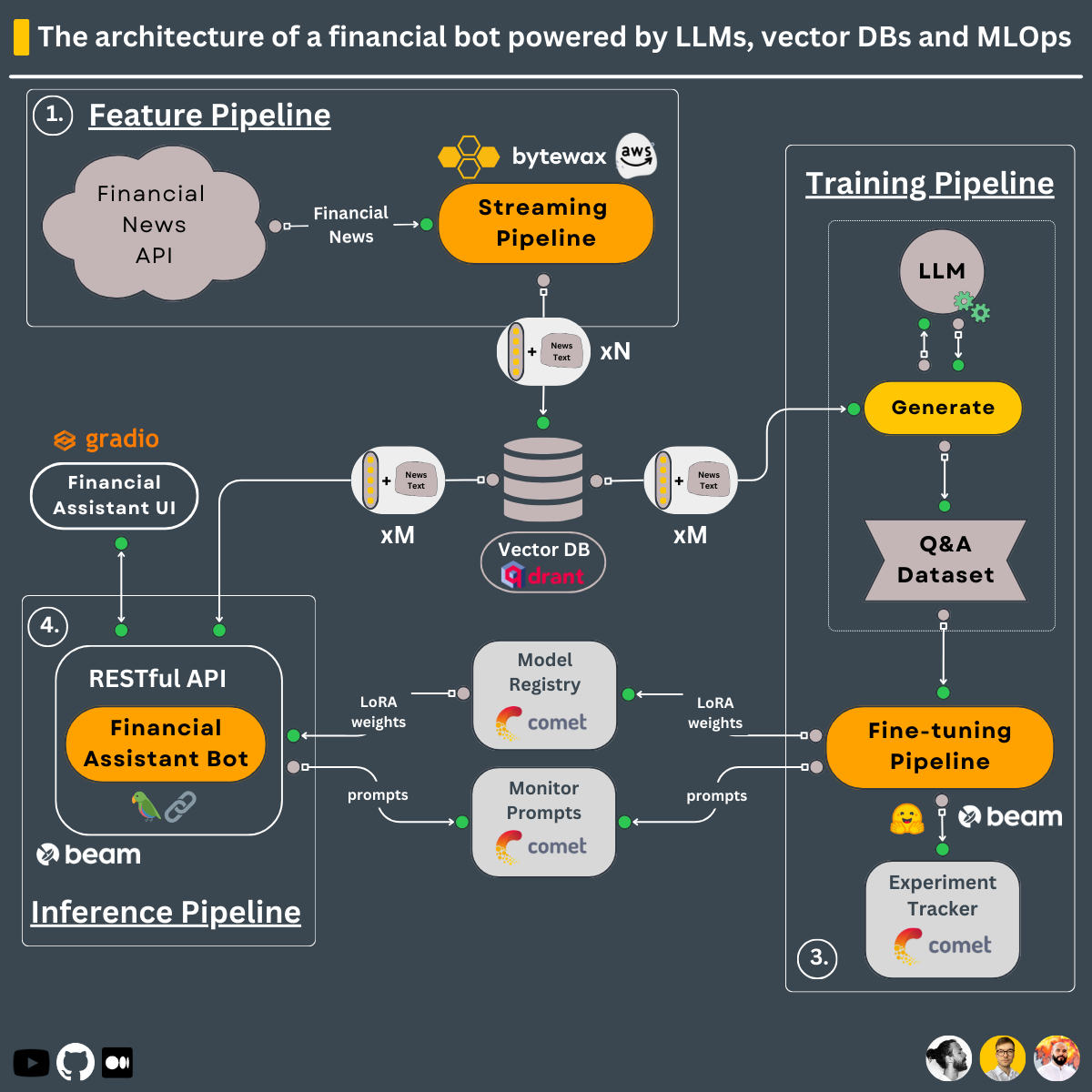

- Build a real-time feature pipeline that ingests data from Alpaca, computes embeddings, and stores them in a serverless vector DB.

- REST API for inference that:

  - receives a question (e.g., "Is it a good time to invest in renewable energy?"),

  - finds the most relevant documents in the vector DB (aka the context),

  - sends a prompt with the question and context to our fine-tuned Falcon and returns the response.
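The feature pipeline and the inference API described above share one idea: documents are turned into vectors, stored, and later ranked by similarity to the question, whose top matches become the prompt's context. The sketch below shows that flow end to end under heavy simplifications: `embed` is a normalised bag-of-words stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for the serverless vector DB, and `build_prompt` is one possible prompt layout, not the course's actual template.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy embedding: L2-normalised bag-of-words counts (stand-in for a real model)."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity of two unit-length sparse vectors (their dot product)."""
    return sum(weight * b.get(token, 0.0) for token, weight in a.items())


class InMemoryVectorStore:
    """Hypothetical stand-in for the serverless vector DB."""

    def __init__(self) -> None:
        self._points: dict[str, tuple[dict[str, float], str]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Feature pipeline step: embed the document and store it under its id.
        self._points[doc_id] = (embed(text), text)

    def search(self, query: str, top_k: int = 3) -> list[str]:
        # Inference step: rank stored documents by similarity to the query.
        q = embed(query)
        ranked = sorted(
            self._points.values(),
            key=lambda point: cosine(q, point[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:top_k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved context and the question into a single prompt string."""
    context_block = "\n".join(f"- {doc}" for doc in context)
    return f"Context:\n{context_block}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    store = InMemoryVectorStore()
    news = {  # made-up headlines, not real Alpaca data
        "n1": "Solar and wind companies report record renewable energy investment.",
        "n2": "Central bank keeps interest rates unchanged.",
        "n3": "Tech stocks rally after strong earnings.",
    }
    for doc_id, text in news.items():
        store.upsert(doc_id, text)

    question = "Is it a good time to invest in renewable energy?"
    prompt = build_prompt(question, store.search(question, top_k=1))
    print(prompt)  # this string is what would be sent to the fine-tuned LLM
```

Swapping the toy pieces for a real embedding model and a vector DB client changes the components, not the shape of the flow: upsert on the ingestion side, search plus prompt assembly on the inference side.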

Before diving into the modules, you have to set up a couple of additional tools for the course.

Alpaca: financial news data source

Follow this document, which shows you how to create a FREE account, generate the API keys, and store them somewhere safe.

Qdrant: vector DB

Go to Qdrant, create a FREE account, and follow this document to generate the API keys.

Comet ML: ML platform

Go to Comet ML and create a FREE account, a project, and an API key. We will show you in each module how to add these credentials.
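A common pattern for the Alpaca, Qdrant and Comet ML keys, assumed here rather than taken from the course, is to keep them in environment variables (or an untracked `.env` file) and fail fast when one is missing. Comet's SDK does read `COMET_API_KEY`, `COMET_WORKSPACE` and `COMET_PROJECT_NAME` from the environment; the Alpaca and Qdrant variable names below are hypothetical, so check each module's README for the names it actually expects.

```python
import os
from typing import Dict, Iterable, Mapping, Optional

# Alpaca and Qdrant names are hypothetical; the COMET_* names are read by Comet's SDK.
REQUIRED_VARS = (
    "ALPACA_API_KEY",
    "ALPACA_API_SECRET",
    "QDRANT_URL",
    "QDRANT_API_KEY",
    "COMET_API_KEY",
    "COMET_WORKSPACE",
    "COMET_PROJECT_NAME",
)


def load_credentials(
    names: Iterable[str] = REQUIRED_VARS,
    env: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Return the requested variables, raising once with every missing or empty name."""
    env = os.environ if env is None else env
    names = list(names)
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing credentials: {', '.join(missing)}")
    return {name: env[name] for name in names}
```

Reporting all missing names at once, instead of failing on the first, saves a few round trips when setting up a new machine.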

Beam: cloud compute

Go to Beam and follow their quick setup/get-started tutorial. You must install their CLI and configure your credentials on your local machine.

When using Poetry, we had issues locating the Beam CLI from inside the Poetry virtual environment. To fix this, create a symlink with the following commands, replacing `<your-poetry-env-name>` with the name of your Poetry environment:

```shell
# List your Poetry environments to find the name with `poetry env list`
export POETRY_ENV_NAME=<your-poetry-env-name>
ln -s /usr/local/bin/beam ~/.cache/pypoetry/virtualenvs/${POETRY_ENV_NAME}/bin/beam
```

AWS: cloud compute

Go to AWS, create an account, and generate a pair of credentials (an access key ID and a secret access key).

Then download and install the AWS CLI and configure it with your credentials.
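`aws configure` stores the key pair in the shared credentials file (by default `~/.aws/credentials`), one INI section per profile. The sketch below is an optional local sanity check, not part of the course: it only confirms that a profile defines both keys, not that the keys are valid (`aws sts get-caller-identity` checks that against AWS).

```python
import configparser
from pathlib import Path
from typing import Optional


def has_aws_profile(profile: str = "default", path: Optional[Path] = None) -> bool:
    """Return True if the shared credentials file defines a key pair for `profile`."""
    path = path or Path.home() / ".aws" / "credentials"
    parser = configparser.ConfigParser()
    if not parser.read(path):  # read() returns the list of files it managed to parse
        return False
    # has_option() returns False when the section itself does not exist.
    return parser.has_option(profile, "aws_access_key_id") and parser.has_option(
        profile, "aws_secret_access_key"
    )


if __name__ == "__main__":
    print("default AWS profile configured:", has_aws_profile())
```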

Every module has its own dependencies and scripts. In a production setup, every module would live in its own repository, but for learning purposes we put everything in one place.

Thus, check out each module's README to see how to install and use it.

If you want to run a notebook server inside a virtual environment, follow these steps.

First, expose the virtual environment as a notebook kernel:

```shell
python -m ipykernel install --user --name hands-on-llms --display-name "hands-on-llms"
```

Now, run the notebook server:

```shell
jupyter notebook notebooks/ --ip 0.0.0.0 --port 8888
```
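To confirm that a notebook really runs on the `hands-on-llms` kernel rather than a system interpreter, you can run a cell like the one below after selecting that kernel. It is a small sketch based on the standard-library convention that `sys.prefix` differs from `sys.base_prefix` inside a venv or modern virtualenv (very old virtualenv releases used `sys.real_prefix` instead).

```python
import sys


def running_in_virtualenv() -> bool:
    """True when the interpreter runs inside a venv/virtualenv environment."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("interpreter:", sys.executable)
print("inside a virtual environment:", running_in_virtualenv())
```

If the interpreter path does not point into your Poetry environment, the kernel was probably registered from a different Python.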