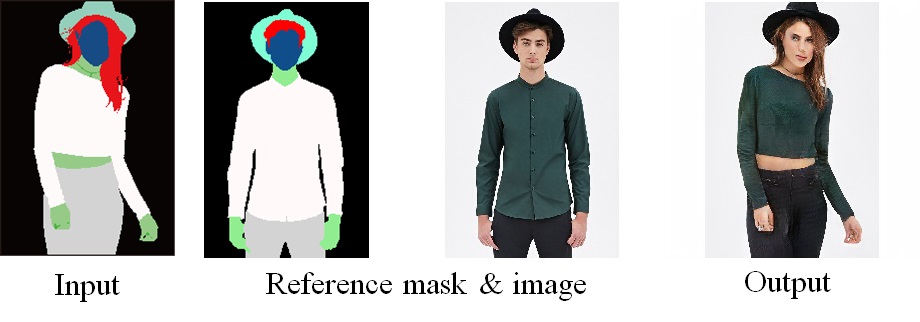

Results of multimodal semantic image synthesis and editing using our method. Our method yields highly diverse images from a single semantic mask (top), and also enables appearance editing for specific semantic objects, e.g., the clothes in the fashion images (bottom).

This code is an implementation of the following paper:

Yuki Endo and Yoshihiro Kanamori: "Diversifying Semantic Image Synthesis and Editing via Class- and Layer-wise VAEs," Computer Graphics Forum (Proc. of Pacific Graphics 2020), 2020. [Project][PDF][Supp(183MB)]

- Python3

- PyTorch (>=1.2.0)
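A quick way to confirm that the environment meets these requirements is sketched below. The helper `meets_minimum` is hypothetical and not part of this repository; it compares only the leading numeric components of a version string such as `torch.__version__` and ignores suffixes like `+cu117`.

```python
import re
import sys


def meets_minimum(version, minimum=(1, 2, 0)):
    """Return True if the leading numeric part of `version` is >= `minimum`."""
    # Keep only the leading "X.Y[.Z]" digits; build suffixes are ignored.
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError("unrecognized version string: %r" % version)
    parts = tuple(int(p) if p is not None else 0 for p in match.groups())
    return parts >= minimum


if __name__ == "__main__":
    print("Python 3:", sys.version_info[0] >= 3)
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        print("PyTorch >= 1.2.0:", meets_minimum(torch.__version__))
```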

This code also requires the Synchronized-BatchNorm-PyTorch repository, which can be set up as follows:

cd models/networks/

git clone https://github.com/vacancy/Synchronized-BatchNorm-PyTorch

cp -rf Synchronized-BatchNorm-PyTorch/sync_batchnorm .

cd ../../
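After the commands above, the `sync_batchnorm` directory should sit inside `models/networks/`. A minimal check is sketched here, assuming it is run from the repository root; `sync_batchnorm_present` is a hypothetical helper, not part of this repository.

```python
import os


def sync_batchnorm_present(repo_root="."):
    """Return True if models/networks/sync_batchnorm exists and is not empty."""
    target = os.path.join(repo_root, "models", "networks", "sync_batchnorm")
    return os.path.isdir(target) and len(os.listdir(target)) > 0


if __name__ == "__main__":
    if sync_batchnorm_present():
        print("sync_batchnorm found")
    else:
        print("sync_batchnorm missing: repeat the clone and copy steps")
```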

- Download and decompress our pre-trained models.

- Make a "checkpoints" directory in the parent directory and put the decompressed "ade20k", "deepfashion", and "gta5" directories in the "checkpoints" directory.

- Run the following commands for each dataset:

- ADE20K

python test.py --name ade20k --dataset_mode ade20k --dataroot ./datasets/ade20k/ --use_vae

- DeepFashion

python test.py --name deepfashion --dataset_mode deepfashion --dataroot ./datasets/deepfashion/ --use_vae

- GTA5

python test.py --name gta5 --dataset_mode gta5 --dataroot ./datasets/gta5/ --use_vae

- Style-guided synthesis

You can also specify a style ID (the ID of a style image in the test set) for style-guided synthesis as follows:

python test.py --name deepfashion --dataset_mode deepfashion --dataroot ./datasets/deepfashion/ --use_vae --style_id 1
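To run several of the test commands above in one go, a small wrapper can assemble them. This is only a sketch: `build_test_command` is a hypothetical helper, not part of this repository. It uses only the flags shown above and assumes, as in those commands, that the dataset name doubles as the checkpoint name and the dataset directory name.

```python
import subprocess
import sys

DATASETS = ("ade20k", "deepfashion", "gta5")


def build_test_command(dataset, style_id=None):
    """Assemble the test.py argument list for one dataset."""
    if dataset not in DATASETS:
        raise ValueError("unknown dataset: %s" % dataset)
    cmd = [
        "python", "test.py",
        "--name", dataset,
        "--dataset_mode", dataset,
        "--dataroot", "./datasets/%s/" % dataset,
        "--use_vae",
    ]
    # Style-guided synthesis: append the style image ID only when requested.
    if style_id is not None:
        cmd += ["--style_id", str(style_id)]
    return cmd


if __name__ == "__main__":
    # Dry run by default: print each command. Pass --run to execute them.
    for name in DATASETS:
        cmd = build_test_command(name)
        print(" ".join(cmd))
        if "--run" in sys.argv[1:]:
            subprocess.run(cmd, check=True)
```

For example, `build_test_command("deepfashion", style_id=1)` reproduces the style-guided command shown above as an argument list.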

To train the networks on the full training sets, first download the datasets and put them in the appropriate directories under ./datasets. Then run the training command for the dataset you want:

python train.py --name [checkpoint_name] --dataset_mode ade20k --dataroot ./datasets/ade20k/ --use_vae --batchSize 4

python train.py --name [checkpoint_name] --dataset_mode deepfashion --dataroot ./datasets/deepfashion/ --use_vae --batchSize 4

- Download the rarity bins and masks for GTA5 (https://github.com/zth667/Diverse-Image-Synthesis-from-Semantic-Layout).

- Put the downloaded files in ./datasets/gta5/rarity.

- Run the following command.

python train.py --name [checkpoint_name] --dataset_mode gta5 --dataroot ./datasets/gta5/ --use_vae --batchSize 4
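Because GTA5 training depends on the rarity files from the previous steps, it can help to check for them before launching a long run. The sketch below uses a hypothetical helper, `rarity_files_ready`, which is not part of this repository. It only checks that the directory exists and contains at least one file, since the exact file names are not listed here.

```python
import os


def rarity_files_ready(dataroot="./datasets/gta5/"):
    """Return True if <dataroot>/rarity exists and holds at least one file."""
    rarity_dir = os.path.join(dataroot, "rarity")
    if not os.path.isdir(rarity_dir):
        return False
    return any(
        os.path.isfile(os.path.join(rarity_dir, name))
        for name in os.listdir(rarity_dir)
    )


if __name__ == "__main__":
    if rarity_files_ready():
        print("rarity files found; GTA5 training can start")
    else:
        print("no files in ./datasets/gta5/rarity; download them first")
```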

Please cite our paper if you find the code useful:

@article{endoPG20,
  author  = {Yuki Endo and Yoshihiro Kanamori},
  title   = {Diversifying Semantic Image Synthesis and Editing via Class- and Layer-wise VAEs},
  journal = {Comput. Graph. Forum},
  volume  = {39},
  number  = {7},
  pages   = {519--530},
  year    = {2020},
}

This code heavily borrows from the SPADE repository.