A Deep Learning Convolutional Neural Network (CNN) using PyTorch that analyses images of students in a classroom or online meeting setting and categorizes them into distinct states or activities.

- Facial Expression Recognition: Convolutional Neural Networks (CNNs) for facial expression recognition. The dataset for this project combines images from several publicly available datasets containing numerous human facial expressions.

- Classroom Activity Recognition: A Deep Learning CNN built with PyTorch that analyzes images of students in a classroom or online meeting setting and categorizes them into distinct states or activities.

- Angry

- Neutral

- Engaged

- Bored

Here is a summary of the datasets used in the project:

| Dataset | Total Images | Images/Class | Features | Source |
|---|---|---|---|---|
| IMDB-WIKI | ~500,000 | ~10,000 | Age / Gender labels | https://data.vision.ee.ethz.ch/cvl/rrothe/imdb-wiki/ |
| MMA FACIAL EXPRESSION | ~128,000 | ~6,500 | Compact images, Only frontal faces, RGB images | https://www.kaggle.com/datasets/mahmoudima/mma-facial-expression |
| UTKFace | ~20,000 | Unknown | Diverse images, Only frontal faces, Duplicate-free | https://susanqq.github.io/UTKFace/ |
| Real and Fake Face Detection | ~2,000 | ~1,000 | High resolution, Only frontal faces, Duplicate-free | https://www.kaggle.com/ciplab/real-and-fake-face-detection |
| Flickr-Faces-HQ Dataset (FFHQ) | 70,000 | ~7,000 | High quality images, Only frontal faces, Duplicate-free | https://github.com/NVlabs/ffhq-dataset |

| Class | Training | Test |
|---|---|---|
| Angry | 600 | 60 |
| Bored | 800 | 50 |
| Engaged | 850 | 70 |
| Neutral | 750 | 65 |

All images are resized to a standard dimension to ensure consistency across the dataset.

    from keras.preprocessing.image import ImageDataGenerator

    # Assuming images are in a directory 'data/train'
    datagen = ImageDataGenerator(rescale=1./255)

    # Standard dimensions for all images
    standard_size = (150, 150)

    # Generator will resize images automatically
    train_generator = datagen.flow_from_directory(
        'data/train',
        target_size=standard_size,
        color_mode='grayscale',  # Convert images to grayscale
        batch_size=32,
        class_mode='categorical'
    )

Images are converted to grayscale to focus on the important features without the distraction of color.

Uniform lighting conditions are applied to images to mitigate the effects of varying illumination.

    # Additional configuration for ImageDataGenerator to adjust brightness
    datagen = ImageDataGenerator(
        rescale=1./255,
        brightness_range=[0.8, 1.2]  # Adjust brightness
    )

Images are cropped to remove background noise and focus on the face, the most important part for emotion detection.

- Input: 48x48 RGB images.

- Convolutional Layers: Multiple layers with 4x4 kernels, batch normalization, leaky ReLU activation.

- Pooling Layers: Max pooling layers with 2x2 kernels.

- Fully Connected Layers: 2 fully connected layers with dropout and softmax activation.

- Parallel Processing: nn.DataParallel for multi-GPU support.

- Training Monitoring: Tracks validation set performance to optimize model parameters.
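
The architecture listed above can be sketched in PyTorch roughly as follows. This is an illustration under stated assumptions, not the project's actual model: the number of convolutional blocks, the channel counts, the hidden layer size, the dropout rate and the leaky ReLU slope are not given in this README, and the names `EmotionCNN` and `wrap_for_multi_gpu` are hypothetical. The network returns raw logits, and softmax is applied separately when probabilities are needed, which is the usual arrangement when training with `nn.CrossEntropyLoss`.

```python
import torch
import torch.nn as nn

NUM_CLASSES = 4  # Angry, Neutral, Engaged, Bored


class EmotionCNN(nn.Module):
    """Hypothetical sketch: 48x48 RGB input, 4x4 kernels, 2x2 max pooling."""

    def __init__(self, num_classes=NUM_CLASSES):
        super().__init__()
        # Channel counts (32, 64) and slope 0.01 are assumptions.
        self.features = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=4, padding=1),   # 48x48 -> 47x47
            nn.BatchNorm2d(32),
            nn.LeakyReLU(0.01),
            nn.MaxPool2d(kernel_size=2),                  # 47x47 -> 23x23
            nn.Conv2d(32, 64, kernel_size=4, padding=1),  # 23x23 -> 22x22
            nn.BatchNorm2d(64),
            nn.LeakyReLU(0.01),
            nn.MaxPool2d(kernel_size=2),                  # 22x22 -> 11x11
        )
        # Two fully connected layers with dropout in between.
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(64 * 11 * 11, 128),
            nn.LeakyReLU(0.01),
            nn.Dropout(0.5),
            nn.Linear(128, num_classes),
        )

    def forward(self, x):
        # Returns logits; apply softmax to obtain class probabilities.
        return self.classifier(self.features(x))


def predict_proba(model, images):
    """Class probabilities via softmax over the logits."""
    model.eval()
    with torch.no_grad():
        return torch.softmax(model(images), dim=1)


def wrap_for_multi_gpu(model):
    """Wrap the model in nn.DataParallel when more than one GPU is visible."""
    if torch.cuda.device_count() > 1:
        return nn.DataParallel(model)
    return model
```

Padding of 1 is chosen here only so the spatial sizes in the comments work out; a different padding changes the input size of the first fully connected layer.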

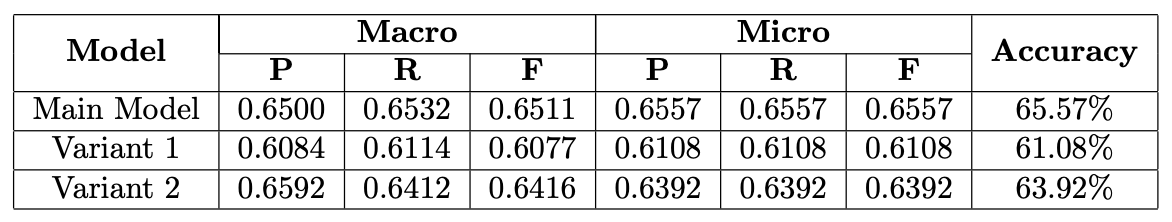

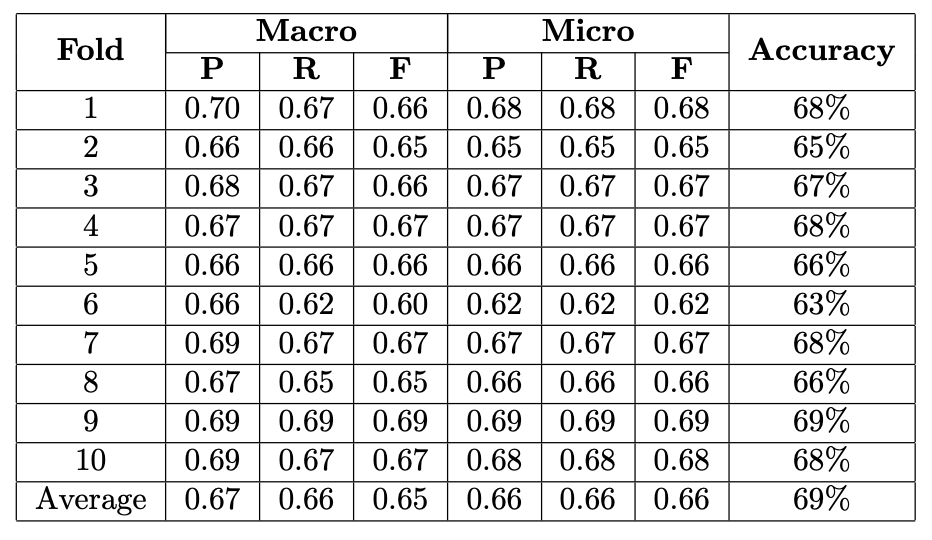

- Main Model beats Variant 1 in accuracy and macro metrics.

- Main Model slightly more accurate than Variant 2.

- Variant 2 has better macro precision, fewer false positives.

- Trade-off: Higher recall can mean more false positives; higher precision means fewer false alarms.
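
To make the macro metrics in this comparison concrete, here is a small sketch of how macro precision and macro recall can be derived from a confusion matrix. The function name `macro_precision_recall` and the layout convention (rows are true classes, columns are predicted classes) are assumptions for illustration; the project scripts may compute these values differently, for example through a library.

```python
def macro_precision_recall(confusion):
    """Return (macro_precision, macro_recall).

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j (assumed layout).
    """
    n = len(confusion)
    precisions, recalls = [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # TP + FP
        actual_k = sum(confusion[k])                          # TP + FN
        # A class that is never predicted (or never present) scores 0 here.
        precisions.append(tp / predicted_k if predicted_k else 0.0)
        recalls.append(tp / actual_k if actual_k else 0.0)
    # Macro averaging: every class counts equally, regardless of its size.
    return sum(precisions) / n, sum(recalls) / n
```

Because every class carries equal weight in a macro average, a class with few test images influences the score as much as a large one.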

- There’s a consistent trend across models where Engaged (Class 3) and Bored (Class 2) are often confused. This could be due to similarities in facial expressions or insufficient distinguishing features.

- The Neutral and Angry classes seem to be well-recognized across all models, suggesting that these facial expressions are more consistent and universally recognizable.

- Overall, distinguishing between expressions Class 3 (Engaged) and Class 2 (Bored) poses a challenge for these models. This might be due to the lack of diversity and quality of data.

- Class 2 (Bored) is often confused with Class 3 (Engaged).

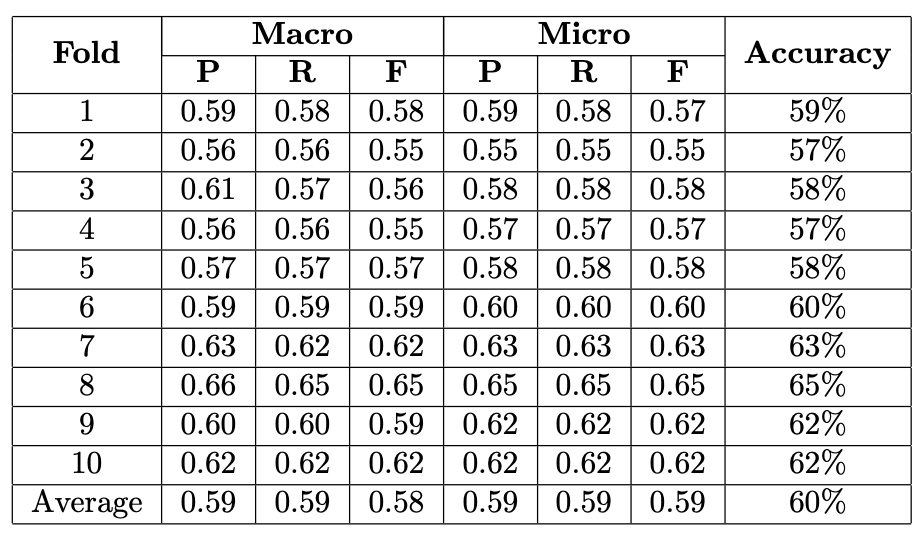

- Similar patterns of confusion as the Main Model, with Class 3 (Engaged) and Class 2 (Bored) having notable misclassifications.

- Adding layers in Variant 2 did not improve performance consistently.

- More layers could capture complex features but risk overfitting.

- Decreased accuracy in Variant 2 might stem from overfitting.

- The Main Model's moderate kernel size balances detection of fine and broad features, yielding the highest accuracy.

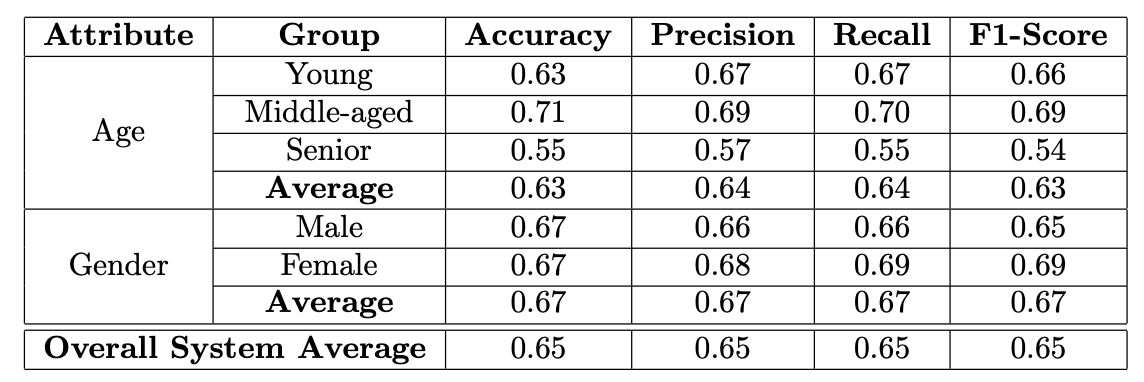

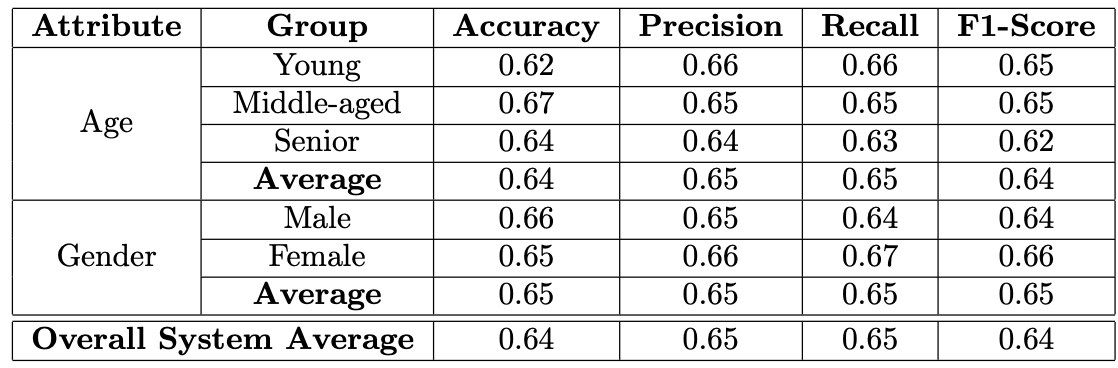

The bias attributes of Age and Gender were analysed to check for disparities in performance across different subgroups. This included the following steps:

- The dataset was split into subgroups based on two attributes: Gender (Male and Female) and Age (Young, Mid and Senior).

- The performance metrics of the CNN model were evaluated for each subgroup to check for the presence of biases.

- The dataset was augmented to mitigate the bias.

The system was biased against people in the Senior age category. For bias mitigation, the dataset was rebalanced by increasing the number of images in the Senior age category and reducing the number of images in the Mid and Young age brackets. The subgroups of the Gender attribute did not show any biases.
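
The per-subgroup evaluation described above can be sketched as follows. This is an illustrative helper, not the project's evaluation code: the function name `accuracy_by_group` and the idea of passing one group label per image are assumptions, and the real analysis also looked at other metrics besides accuracy.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup label.

    y_true, y_pred: class labels per image.
    groups: subgroup label per image, e.g. "Young", "Mid" or "Senior".
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}
```

A large gap between the resulting values for two subgroups is the kind of disparity this analysis looks for; the same function can be run once with age labels and once with gender labels.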

- Accuracy graph displays fluctuations between 0.63 and 0.69 over 10 measurements.

- Notable accuracy dips occur at the 4th, 6th, and 9th measurements, with the 6th showing the sharpest drop.

- Precision is relatively stable; both Recall and F1 Score significantly drop at the 5th fold.

- The metrics reveal inconsistent model performance, particularly poor at the 5th fold.

- Accuracy across the 10 folds varies from just above 0.57 to just under 0.65.

- Accuracy significantly improves at fold 7, peaking near 0.65 before declining again.

- Macro Precision and Recall exhibit significant volatility, while the F1 Score remains more consistent.

- All three metrics peak at fold 8, with Macro Recall showing the greatest increase.

- K-fold cross-validation yields a slightly higher average accuracy than the single train/test split, likely because the average smooths out variation in performance between data subsets.

- It evaluates the model over multiple folds for a comprehensive performance assessment.

- Consistent performance across k-folds suggests better model generalization and can influence model selection.
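
For readers unfamiliar with the procedure, the following sketch shows how a dataset can be divided into 10 folds so that each image is used for validation exactly once. It is a standard-library illustration only: the function names are hypothetical, and `cnn_training_kfold.py` may use a library splitter and a different shuffling scheme.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)       # fixed seed for repeatability
    folds = [indices[i::k] for i in range(k)]  # k nearly equal, disjoint folds
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


def mean_score(fold_scores):
    """Average of a metric over all folds, e.g. accuracy per fold."""
    return sum(fold_scores) / len(fold_scores)
```

In each iteration a fresh model is trained on `train` and evaluated on `val`; the per-fold scores are then averaged with `mean_score` and compared across folds for consistency.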

- Python3

- Pip3

- Download and unzip the Facial Expression Dataset.

- Download and unzip the Classroom Activity Dataset in the parent folder.

`pip3 install --upgrade pip`

`pip3 install virtualenv`

`python3 -m venv venv`

`source ./venv/bin/activate`

`pip3 install -r requirements.txt`

- Preprocess and Visualize: `python3 preprocessor.py`

- Train/Validate and Test Main Model: `python3 cnn_training_early_stop.py; python3 cnn_testing.py`

- Variants Training and Testing: `python3 cnn_training_variant1.py; python3 cnn_training_variant2.py; python3 cnn_testing_variant_1.py; python3 cnn_testing_variant_2.py`

- K-fold Cross Validation: `python3 cnn_training_kfold.py`

- Classification of images into respective classes.

- Display of training/testing dataset classification.

- Data visualizations using Matplotlib.

- Training over epochs with accuracy and loss metrics.

- Saved models under Model folder.

- K-fold analysis with training and validation metrics.

For further details on methodology, datasets, and findings, refer to the complete project reports.