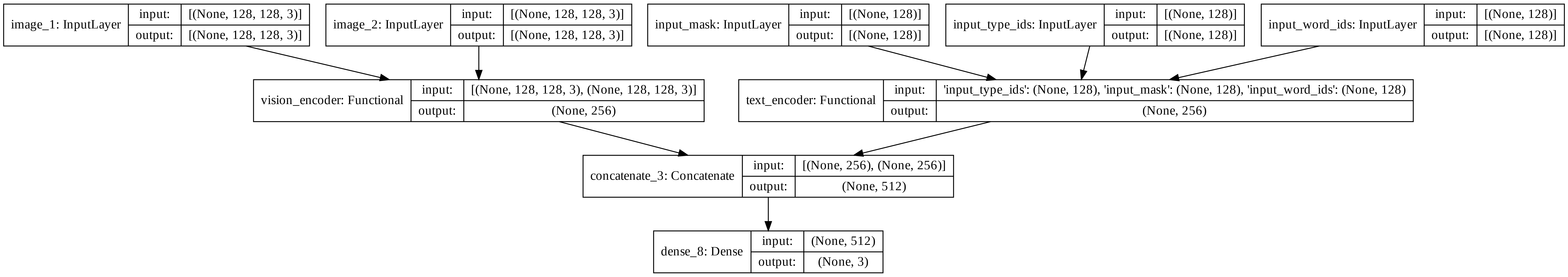

This repository shows how to implement baseline models for multimodal entailment. One of these models is shown in the figure above.

High-resolution version is available here.

These models use the multimodal entailment dataset introduced here. This repository is best followed along with this blog post on keras.io: Multimodal entailment. The blog post goes over additional details, thought experiments, notes, etc.

The accompanying blog post marks the 100th example on keras.io.

* Multimodal entailment.ipynb: Shows how to train the model shown in the figure above.
* multimodal_entailment_attn.ipynb: Shows how to train a similar model with cross-attention (Luong style).
* text_entailment.ipynb: Uses only text inputs to train a BERT-based model for the entailment problem.
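For readers unfamiliar with the term, Luong-style cross-attention is dot-product attention in which one modality supplies the queries and the other supplies the values. The snippet below is a minimal, dependency-free sketch of that idea, not the code from the notebook. The function names (`softmax`, `luong_dot_attention`), the toy feature vectors, and the choice of text as queries and image as values are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def luong_dot_attention(queries, values):
    """Dot-product (Luong-style) attention, using the values as keys.

    queries: list of query vectors (assumed here: projected text features)
    values:  list of value vectors (assumed here: projected image features)
    Returns (contexts, weights), with one row per query.
    """
    dim = len(values[0])
    contexts, all_weights = [], []
    for q in queries:
        # Score each value vector by its dot product with the query.
        scores = [sum(qi * vi for qi, vi in zip(q, v)) for v in values]
        weights = softmax(scores)
        # The context vector is the attention-weighted sum of the values.
        context = [
            sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)
        ]
        contexts.append(context)
        all_weights.append(weights)
    return contexts, all_weights


# Hypothetical toy features: 2 text vectors attending over 3 image vectors.
text_features = [[1.0, 0.0], [0.0, 1.0]]
image_features = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]

contexts, weights = luong_dot_attention(text_features, image_features)
```

Each row of `weights` sums to 1 and shows how strongly a text vector attends to each image vector. In a Keras model, the built-in `keras.layers.Attention` layer implements this same dot-product scoring.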

Thanks to the ML-GDE program for providing GCP credits.

Thanks to Nilabhra Roy Chowdhury who worked on preparing the image data.