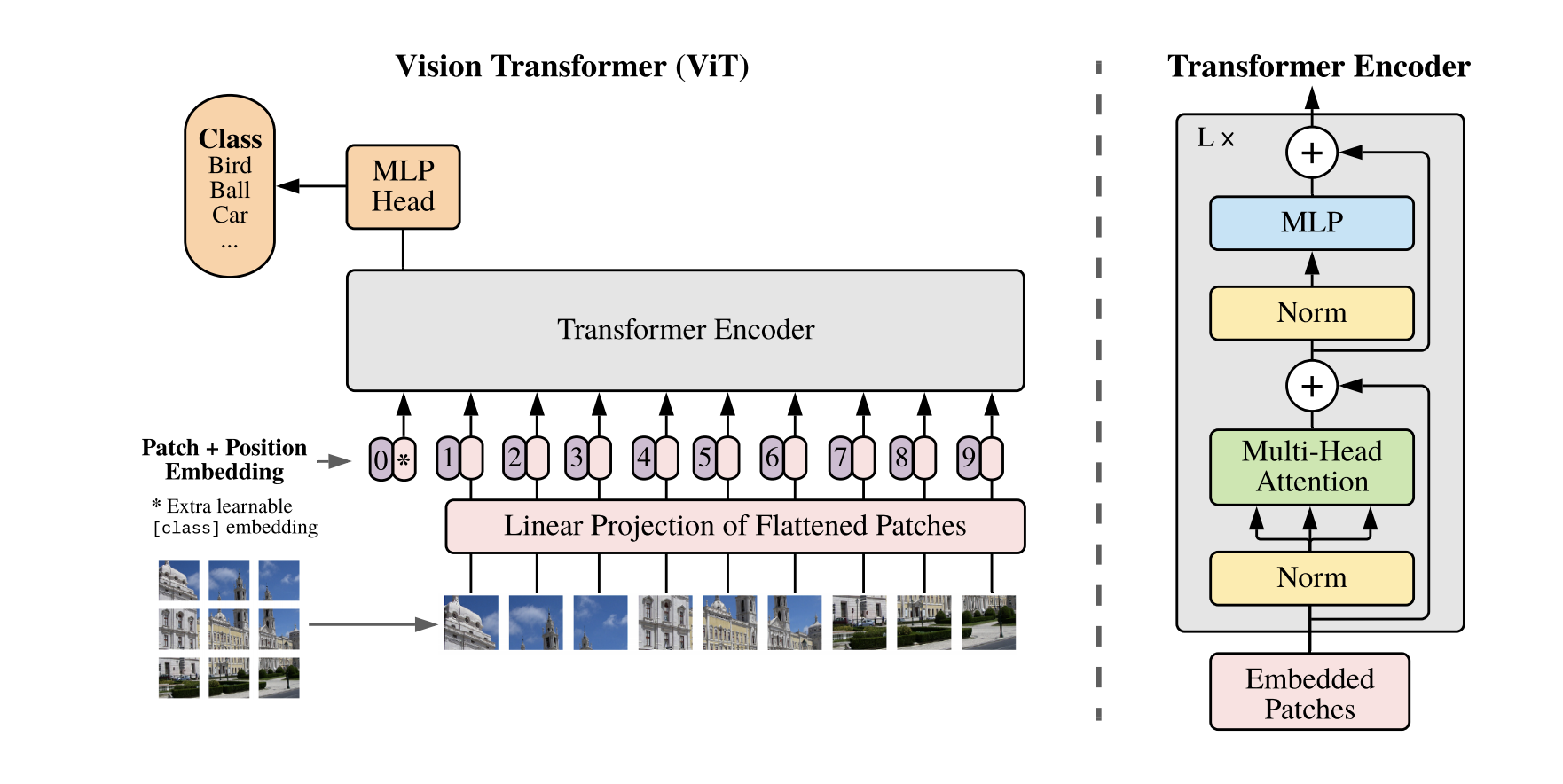

TensorFlow implementation of the Vision Transformer (ViT) presented in *An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale*, where the authors show that Transformers applied directly to sequences of image patches and pre-trained on large datasets perform very well on image classification.
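To make the "image patches" idea concrete, here is a minimal pure-Python sketch of how an image can be cut into non-overlapping patches that are then flattened into a sequence. It is an illustration only and is not taken from this repository's code: the helper names `patch_sequence_shape` and `split_into_patches` are hypothetical, and the 224x224 RGB input with 16x16 patches is an assumed example configuration.

```python
def patch_sequence_shape(height, width, channels, patch_size):
    """Return (num_patches, patch_dim) for a non-overlapping patch split."""
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch size")
    num_patches = (height // patch_size) * (width // patch_size)
    patch_dim = patch_size * patch_size * channels
    return num_patches, patch_dim


def split_into_patches(image, patch_size):
    """Split an H x W nested list into flattened patches in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size window into a single list.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


if __name__ == "__main__":
    # Assumed example: a 224x224 RGB image with 16x16 patches.
    print(patch_sequence_shape(224, 224, 3, 16))  # (196, 768)

    # Tiny 4x4 single-channel image split into 2x2 patches.
    tiny = [[row * 4 + col for col in range(4)] for row in range(4)]
    print(split_into_patches(tiny, 2)[0])  # [0, 1, 4, 5]
```

Under these assumptions the Transformer would see a sequence of 196 patch vectors, each with 768 values, before any learned projection or position embedding is applied.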

Create a Python 3 virtual environment and activate it:

    virtualenv -p python3 venv
    source ./venv/bin/activate

Next, install the required dependencies:

    pip install -r requirements.txt

Start the model training by running:

    python train.py --logdir path/to/log/dir

To track metrics, start TensorBoard:

    tensorboard --logdir path/to/log/dir

and then go to localhost:6006.

    @inproceedings{
      anonymous2021an,
      title={An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale},
      author={Anonymous},
      booktitle={Submitted to International Conference on Learning Representations},
      year={2021},
      url={https://openreview.net/forum?id=YicbFdNTTy},
      note={under review}
    }