[C]onfiguration [O]nly [W]eb on [J]VM

[TOC]

COWJ is pronounced as Cows, and stands for:

Configuration-Only-Web-over-JVM

The name makes the objective clear: optimize back-end development by replacing it with configuration.

It is pretty apparent that a lot of back-end "development" falls under:

- Trivial CRUD over data sources

- Reading from data sources and object massaging - via object mapper, thus mapping input to output

- Aggregation of multiple back-end services

- Adding random business logic to various sections of the API workflows

COWJ aims to solve all four of these problems, so that work that took 100 developers can be done by fewer than 10, a reduction of more than 90%.

Lately, 10+ people get allocated just to maintain a "how to create a service" end point. This must stop. The following must be made true:

- Creating a service end point should be just adding configuration

- Writing the service should be just typing in scriptable code

- Input / Output parameters are to be assumed typeless JSON at worst

- At best, we can do lazy type checking to validate against a schema. Alternatives:

  - RAML

  - OpenAPI

  - JSON Schema

There should not be any "business logic" in the code. Business logic is susceptible to change, hence it should be hosted outside the API end points, and a DSL should be created to maintain it.

Any "Service" point requiring "data store" access needs to declare it explicitly, as part of the service configuration process. The objective of the engine is to handle the data transfer. JSON is the choice for data transfer for now.

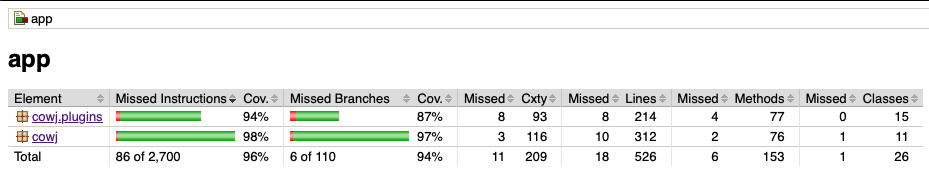

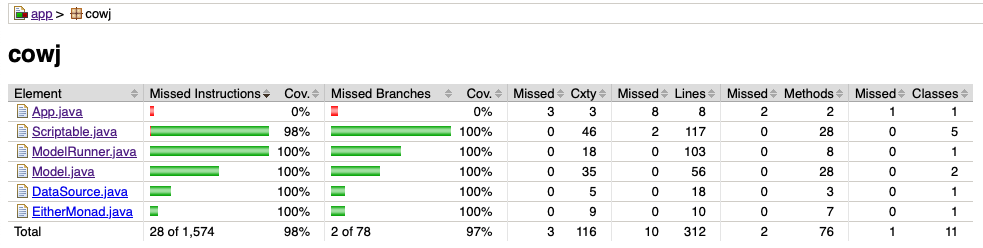

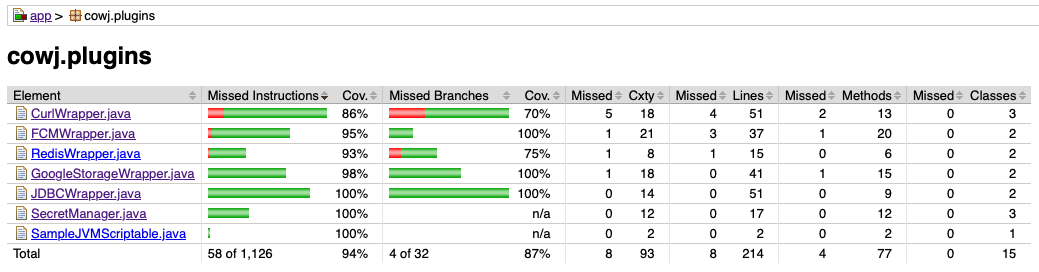

If one is willing to use this, one must wonder: what makes anything 'Prod Ready'? The only suitable answer is that the core components must be extensively tested, with really good instruction and branch coverage.

Thus the engine core is extensively tested, and is on par with industry standard quality.

Even plugins are reasonably tested, and are ready for production usage.

Here is the config:

```yaml
# This shows how COWJ service is routed

# port of the server
port : 8000

# routes information
routes:
  get:
    /hello : _/scripts/js/hello.js
    /param/:id : _/scripts/js/param.js
  post:
    /hello : _/scripts/js/hello.js

# route forwarding local /users points to json_place
proxies:
  get:
    /users: json_place/users
  post:
    /users: json_place/users

# filters - before and after an URI
filters:
  before:
    "*" : _/before.zm
  after:
    "*": _/after.zm

# how to load various data sources - plugin based architecture
plugins:
  # the package
  cowj.plugins:
    # items from each package class::field_name
    curl: CurlWrapper::CURL
    redis: RedisWrapper::REDIS

# data store connections
data-sources:
  redis :
    type : redis
    urls: [ "localhost:6379"]
  json_place:
    type: curl
    url: https://jsonplaceholder.typicode.com
    proxy: _/proxy_transform.zm # responsible for message transform

cron:
  cache:
    exec: _/cache.md
    boot: true
    at: "*/5 * * * *"
```

It simply defines the routes, as well as the handler script for each route. In this way it is very similar to PHP Doctrine, as well as Django or Play.

COWJ is polyglot. It supports JSR-223 languages; built-in support is currently provided for:

- JavaScript

- Python via Jython

- Groovy

- ZoomBA

Underneath, we use a specially cloned fork of spark-java that has been migrated to Jetty 11:

https://github.com/nmondal/spark-11

Also it uses ZoomBA extensively : https://gitlab.com/non.est.sacra/zoomba

Here is how it works:

- Client calls server

- Server creates a `Request`, `Response` pair and sends it to a handler function

- A script is the handler function which receives the context as `req`, `resp` and can use it to extract whatever it wants.

- DataSources are loaded and injected with the `_ds` variable.

Here is one such example of routes being implemented:

```javascript
// javascript
let x = { "id" : req.params("id") };
x; // return
```

The context is defined as:

https://sparkjava.com/documentation#request

https://sparkjava.com/documentation#response
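As a slightly fuller illustration of the `req` context, here is a minimal sketch of a route script for `/param/:id`. It uses only `params` and `queryParams` from the Spark request documentation linked above. The `fakeReq` object, the `handle` wrapper and the `verbose` query parameter are hypothetical, added only so the snippet can run outside COWJ; inside COWJ the engine supplies `req` and the script body runs directly.

```javascript
// Hypothetical stand-in for the Spark Request that COWJ binds to `req`.
// Only the two methods used below are mimicked.
const fakeReq = {
  params: (name) => ({ id: "42" })[name] ?? null,
  queryParams: (name) => ({ verbose: "true" })[name] ?? null,
};

// The body of a route script, wrapped in a function so it can be called here.
function handle(req) {
  const out = { id: req.params("id") };
  // `verbose` is an illustrative query parameter, not something COWJ defines.
  if (req.queryParams("verbose") === "true") {
    out.source = "path parameter";
  }
  return out; // in a script, the last expression plays this role
}

const result = handle(fakeReq);
console.log(JSON.stringify(result));
```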

COWJ supports a global, non-session-oriented memory, which can double as a poor substitute for an in-memory cache. It is a `ConcurrentHashMap`, accessible through the binding variable `_shared`.

The syntax for using this would be:

```
v = _shared.<key_name> // groovy, zoomba
v = _shared[<key_name>] // groovy, zoomba, js, python
```

See the document "A Guide to COWJ Scripting", found here: Scripting
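To make the cache use case concrete, here is a minimal read-through cache sketch built on the bracket syntax. A plain object stands in for `_shared` so the snippet runs outside COWJ; `loadUser`, `cachedUser` and the `user:` key prefix are hypothetical names chosen for illustration.

```javascript
// Stand-in for the `_shared` binding; inside COWJ this is a ConcurrentHashMap.
const _shared = {};

let lookups = 0; // counts how often the slow path runs

// Hypothetical expensive call, e.g. a data source read.
function loadUser(id) {
  lookups += 1;
  return { id: id, name: "user-" + id };
}

// Read-through cache: return the cached value if present, else load and store.
function cachedUser(id) {
  const key = "user:" + id;
  let v = _shared[key];
  if (v === undefined || v === null) {
    v = loadUser(id);
    _shared[key] = v;
  }
  return v;
}

cachedUser("7");
cachedUser("7");
console.log(lookups); // 1, the second call is served from the shared map
```

The check followed by the store is not atomic, so two concurrent requests may both take the slow path. For an idempotent cache that is usually acceptable.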

Filters let one attach before and after callbacks to any route pattern.

A before filter gets hit before hitting the actual route, while an after filter gets hit after returning from the route, so one can modify the response if need be.

A classic use of a before filter is auth, while an after filter can be used to modify the response type by adding response headers.

A typical use case for auth:

```yaml
routes:
  get:
    /users: _/users.zm

filters:
  before:
    "*" : _/auth.zm
```

Now inside `auth.zm`:

```
// auth.zm
def validate_token(){ /* verification here */ }
token = req.headers("auth")
assert("Invalid Request", 403) as { validate_token(token) }
```

A typical use case to format error messages as JSON:

```yaml
routes:
  get:
    /users: _/users.zm

filters:
  finally:
    "*" : _/finally.zm
```

Now inside `finally.zm`:

```
// finally.zm
if ( resp.status @ [200:400] ){ return }
error_msg = resp.body
resp.body( jstr( { "error" : error_msg } ) )
```

This ensures all errors are formatted as JSON.
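The decision the `finally` filter makes can be sketched as a pure function. This is only an illustration in JavaScript, not the filter itself: `formatBody` is a hypothetical name, and it assumes that the range `[200:400]` includes 200 and excludes 400.

```javascript
// Mirrors the finally.zm logic as a pure function.
// Assumption: [200:400] means 200 inclusive to 400 exclusive.
function formatBody(status, body) {
  if (status >= 200 && status < 400) {
    return body; // success and redirect responses pass through untouched
  }
  // everything else is wrapped so the client always gets JSON on error
  return JSON.stringify({ error: body });
}

console.log(formatBody(200, "ok"));
console.log(formatBody(403, "Invalid Request"));
```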

In the `cron` section one can specify the "recurring" tasks for a project. The key becomes the name of the task, while:

- `exec`: the script that needs to be executed

- `boot`: whether to run the task at system startup

- `at`: the cron expression that defines the recurrence

If the idea of COWJ is to do CRUD, where does it do CRUD to and from? The underlying data is provided via the data sources.

How do data sources work? There is a global map of registered data sources, which gets injected into the scripts as `_ds`:

```
_ds.redis
```

This would access the data source which is registered under the name `redis`.

Right now the following data sources are supported:

JDBC data source - anything that can connect through JDBC drivers.

```yaml
some_jdbc:
  type: jdbc # should have been registered before
  driver : "full-class-for-driver"
  connection: "connection-string"
  properties: # properties for connection
    x : y # all of them will be added
```

External Web Service calling. This is the underlying mechanism that proxies use to forward data.

```yaml
some_curl:
  type: curl # should have been registered before
  url : "https://jsonplaceholder.typicode.com" # the url
  headers: # headers we want to pass through
    x : y # all of them will be added
```

Exposes Google Storage as a data source.

```yaml
googl_storage:
  type: g_storage # should have been registered before
```

Exposes Redis cluster as a data source:

```yaml
redis :
  type : redis # should register before
  urls: [ "localhost:6379"] # bunch of urls to the cluster
```

Firebase as a data source.

All data sources are implemented in a plug and play architecture, so that adding a plugin requires no change to the original code.

This is how one can register a plugin to the system - the following showcases all default ones:

```yaml
# how to load various data sources - plugin based architecture
plugins:
  # the package
  cowj.plugins:
    # items from each package class::field_name
    curl: CurlWrapper::CURL
    fcm: FCMWrapper::FCM
    g_storage: GoogleStorageWrapper::STORAGE
    jdbc: JDBCWrapper::JDBC
    redis: RedisWrapper::REDIS
```

As one can see, there are multiple keys inside `plugins`, each corresponding to a package. Under each package, the key is the type of data source we want to register, and the right side is the `implementor_class::static_field_name`.

In plain language it reads:

A static field `CURL` of the class `cowj.plugins.CurlWrapper` implements a `DataStore.Creator` class and is being registered as the `curl` type of data store creator.
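The same reading can be written out as a small sketch that splits one plugin entry into its parts. This only illustrates how to interpret the `class::field_name` notation; it is not how COWJ loads plugins internally, and `resolvePlugin` is a hypothetical helper name.

```javascript
// Interpret one entry of the `plugins` section.
// pkg  : the package key, e.g. "cowj.plugins"
// type : the data source type being registered, e.g. "curl"
// spec : the right-hand side, e.g. "CurlWrapper::CURL"
function resolvePlugin(pkg, type, spec) {
  const parts = spec.split("::");
  if (parts.length !== 2 || parts[0] === "" || parts[1] === "") {
    throw new Error("expected class::field_name, got: " + spec);
  }
  return {
    type: type,
    className: pkg + "." + parts[0], // fully qualified implementor class
    field: parts[1],                 // static field holding the creator
  };
}

const entry = resolvePlugin("cowj.plugins", "curl", "CurlWrapper::CURL");
console.log(entry.className + " / " + entry.field + " -> " + entry.type);
```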

From where should plugins be loaded? If one chooses not to compile their code with COWJ, as will be the case most of the time, there is a special folder in the base directory, which defaults to `lib`. All jars in all of its directories, recursively, are loaded at system boot and put on the class path.

One can naturally change the location of the `lib` folder by:

```yaml
lib: _/some_other_random_dir
```

How to author COWJ plugins can be found here: Writing Custom Plugins

Path/Packet forwarding. One simply creates a base host in the data source section, of type `curl`, and then uses that key as the base for all forwarding.

In the Yaml example the following routing is done:

```
localhost:5003/users
--> https://jsonplaceholder.typicode.com/users
```

The system responds with the same status as the external web service, and the response from the web service is transferred back to the original caller.

This transform is coded in the curl type as follows:

```yaml
data-sources:
  json_place:
    type: curl
    url: https://jsonplaceholder.typicode.com
    proxy: _/proxy_transform.zm # responsible for message transform
```

The `proxy` section has the script that transforms the following before it is forwarded to the destination server:

- `request` object

- `headers` has a mutable map of request headers

- `queries` has a mutable map of all query parameters

- `body` has the string which is `request.body()`

Evidently at a forward proxy level, these are the parameters one can change before forwarding it to destination.

The transformation function / script is expected to return a map of the form:

```
{
  headers : {
    key : value
  },
  query : {
    key : value
  },
  body : "request body"
}
```

In case the script does not return a map, the pushed values are extracted from the script context and used instead.
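As an illustration of the expected return shape, here is a minimal sketch of a transform written as a JavaScript function. The configured proxy script in this document is a ZoomBA file, so this is only a stand-in; the function name `transform`, the dropped `cookie` header and the added `x-forwarded-by` header are all hypothetical choices.

```javascript
// Hypothetical transform taking the same inputs the proxy script sees.
function transform(headers, queries, body) {
  const outHeaders = Object.assign({}, headers); // copy, leave the input as is
  delete outHeaders["cookie"];                   // illustrative: keep cookies local
  outHeaders["x-forwarded-by"] = "cowj";         // illustrative header name
  // Return the map shape described above: headers, query, body.
  return {
    headers: outHeaders,
    query: Object.assign({}, queries),
    body: body,
  };
}

const out = transform(
  { cookie: "a=b", accept: "application/json" },
  { page: "2" },
  "{}"
);
console.log(JSON.stringify(out));
```

Copying the maps instead of mutating them keeps the sketch easy to test; a real proxy script may equally well mutate `headers` and `queries` in place, since they are described as mutable.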

We support JSON Schema based input validation.

To read more see Writing Input Validations

- Build the app.

- Run the app by going to the `app/build/libs` folder and running:

```shell
java -jar cowj* <config-file>
```

The jar has been built with its classpath property set, so that it runs as long as all the dependencies are in the `deps` folder.