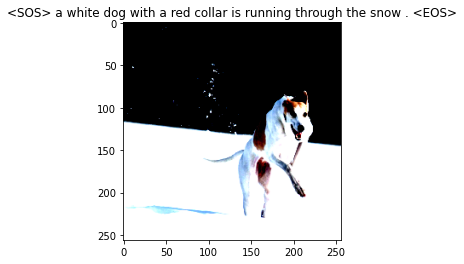

This repository contains the code and files for an implementation of an image captioning model. The implementation is similar to the one proposed in the paper Show and Tell.

This project is a stepping stone towards the version with soft attention, which differs in several implementation details. You can check out the attention version here. (It also contains a deployed caption bot!) This is a good starting point for somebody who has finished some online MOOCs, since it involves all the concepts and plenty of resources are available if one gets stuck.

- Clone the repository

git clone https://github.com/sankalp1999/Image_Captioning_Without_Attention.git

cd Image_Captioning_Without_Attention

- Make a new virtual environment (Optional)

pip install --user virtualenv

You can change the name from .env to something else.

virtualenv -p python3 .env

source .env/bin/activate

- Install required dependencies

pip install -r requirements.txt

- Download the model weights and put them in the proper folder. You can manually set the image path in the inference file or use the static evaluation function in model.

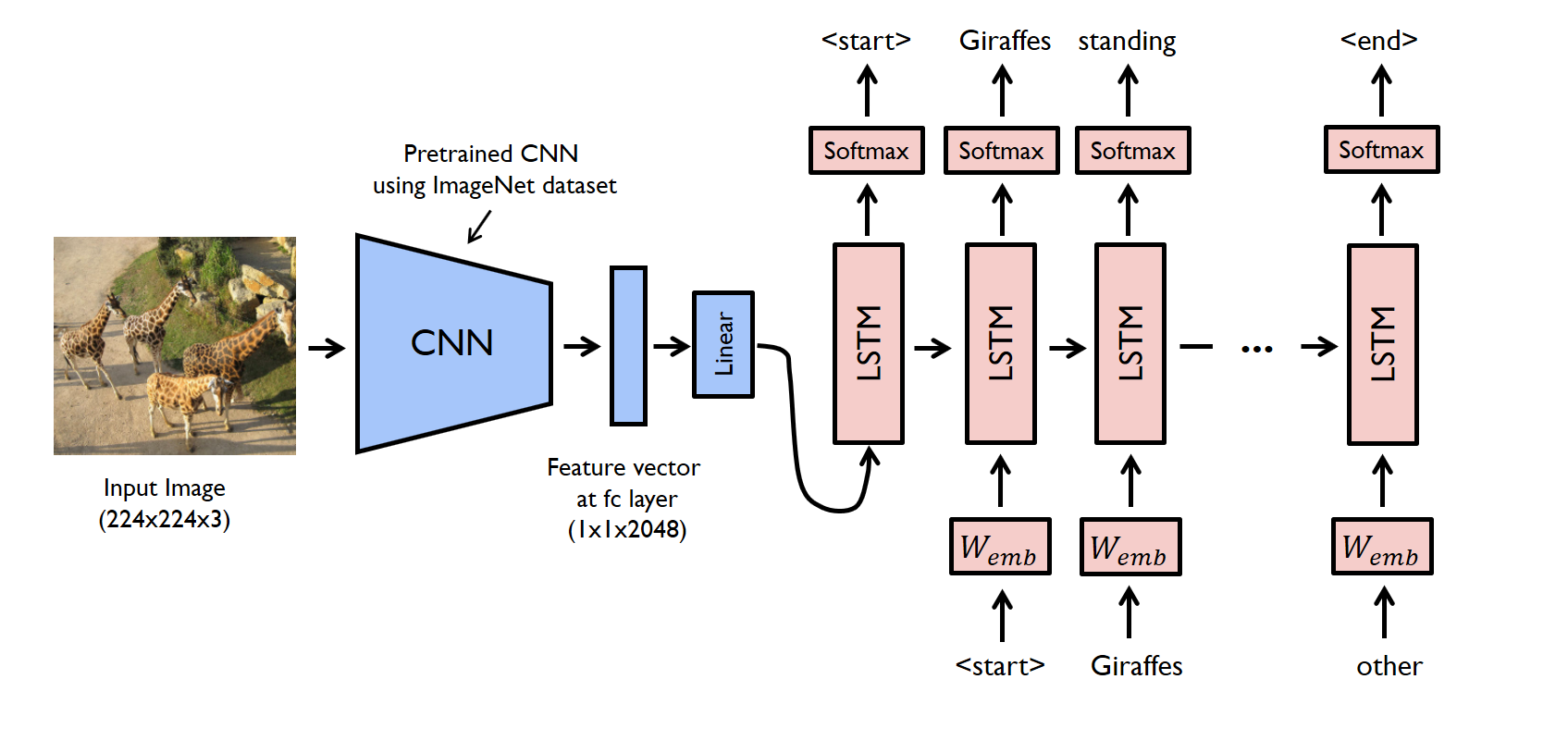

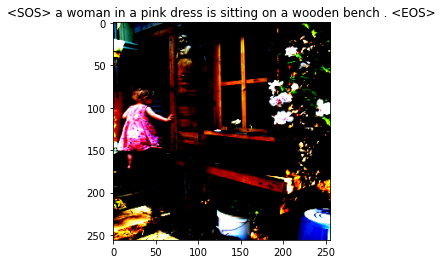

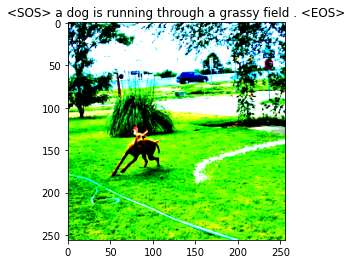

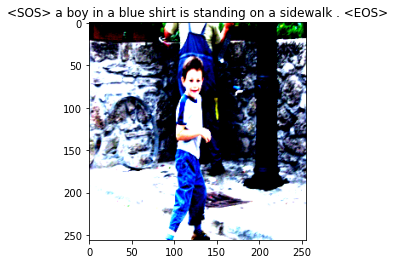

python inference.py

This model is a typical example of Seq2Seq modelling, with an encoder network and a decoder network. We pass the image through the encoder to get a feature vector. Then we concatenate the feature vector with the caption embedding and pass the result through the decoder.

Given enough image-caption pairs, the model can easily be trained using backpropagation.

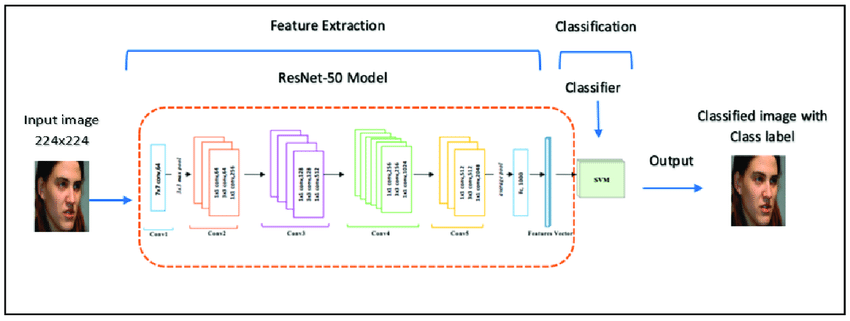

The encoder is a pre-trained ResNet-50. We remove the last fully connected layer (which is used for classification) and extract the features from the second-to-last layer. The ResNet-50 is trained on the ImageNet dataset, which has 1000 classes.

The embedding is a standard embedding layer. I train my own embedding instead of using a pretrained one, since an embedding learned from the captions themselves probably fits the local context better. Another reason for not using pretrained embeddings is the large size of those files.

Vocab size: 2994 with threshold = 5 (full dataset used for training)

The threshold is the minimum number of occurrences a word needs in order to be included in the vocab, which is a mapping from words to indices and vice versa.
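As an illustration of the thresholding, here is a minimal sketch of building such a vocab. The special tokens, the whitespace tokenisation, the function names, and the use of `>=` for the threshold comparison are assumptions, not details taken from the repository.

```python
from collections import Counter

# Special tokens are an assumption of this sketch.
SPECIAL_TOKENS = ["<PAD>", "<SOS>", "<EOS>", "<UNK>"]


def build_vocab(captions, threshold=5):
    """Keep every word seen at least `threshold` times; return both mappings."""
    counts = Counter(word for caption in captions for word in caption.lower().split())
    itos = list(SPECIAL_TOKENS)
    # Sorting gives a deterministic order for the kept words.
    itos += sorted(word for word, n in counts.items() if n >= threshold)
    stoi = {word: index for index, word in enumerate(itos)}
    return stoi, itos


def encode(caption, stoi):
    """Convert a caption to indices; words below the threshold become <UNK>."""
    unk = stoi["<UNK>"]
    body = [stoi.get(word, unk) for word in caption.lower().split()]
    return [stoi["<SOS>"]] + body + [stoi["<EOS>"]]


captions = ["a dog runs", "a dog jumps", "a cat sleeps"]
stoi, itos = build_vocab(captions, threshold=2)
encoded = encode("a dog sleeps", stoi)
print(itos)     # ['<PAD>', '<SOS>', '<EOS>', '<UNK>', 'a', 'dog']
print(encoded)  # [1, 4, 5, 3, 2]
```

A higher threshold shrinks the vocab and sends more rare words to `<UNK>`, which reduces the size of the output layer.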

The decoder is an LSTM with 2 layers. PyTorch provides a nice API for this, so you don't have to deal with the LSTM equations yourself.

During training, the model takes the caption embeddings along with the feature vector, produces outputs, computes the loss, and is trained by backpropagation.
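One training step could be sketched as below. This is a hedged illustration rather than the repository's code: the class name `DecoderSketch`, the layer sizes, the padding index 0, the Adam optimizer, and the random stand-in data are assumptions. It also assumes the feature vector is prepended to the caption embeddings as the first time step, which is one way to read "concatenate".

```python
import torch
import torch.nn as nn


class DecoderSketch(nn.Module):
    """Embedding -> 2-layer LSTM -> vocabulary scores (sizes are assumed)."""

    def __init__(self, vocab_size, embed_size=256, hidden_size=512, num_layers=2):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_size)
        self.lstm = nn.LSTM(embed_size, hidden_size, num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, vocab_size)

    def forward(self, features, captions):
        # features: (batch, embed_size), captions: (batch, seq_len)
        embeddings = self.embed(captions)
        # Prepend the image feature as the first input step.
        inputs = torch.cat([features.unsqueeze(1), embeddings], dim=1)
        hidden, _ = self.lstm(inputs)
        return self.fc(hidden)  # (batch, seq_len + 1, vocab_size)


vocab_size = 2994
decoder = DecoderSketch(vocab_size)
criterion = nn.CrossEntropyLoss(ignore_index=0)   # index 0 as padding is assumed
optimizer = torch.optim.Adam(decoder.parameters(), lr=3e-4)

# Random stand-ins for encoder features and tokenised captions.
features = torch.randn(4, 256)
captions = torch.randint(1, vocab_size, (4, 12))

# Feed all tokens but the last; each output step predicts the next token.
outputs = decoder(features, captions[:, :-1])
loss = criterion(outputs.reshape(-1, vocab_size), captions.reshape(-1))

optimizer.zero_grad()
loss.backward()
optimizer.step()
print(outputs.shape, loss.item())
```

Dropping the last caption token from the input keeps the number of output steps equal to the number of target tokens once the image feature is prepended.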

During test time, we sample word by word.

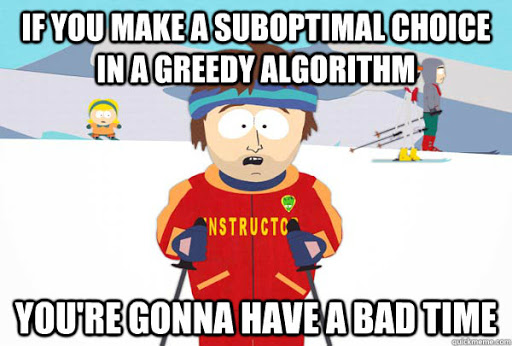

We predict the caption by always selecting the word with the highest probability score. This often gives the right prediction but can frequently lead to suboptimal captions.

Since every word depends on the previous predictions, even one suboptimal word at the beginning can completely change the context or meaning of the caption.
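The effect can be shown with a toy example. The sketch below is not the repository's inference code: `TOY_MODEL` is a made-up next-word distribution that depends only on the previous word, and the function names are hypothetical. Greedy decoding picks "a" first because it is the most likely first word, yet the caption starting with "the" has a higher overall probability.

```python
# Made-up next-word probabilities, conditioned only on the previous word.
TOY_MODEL = {
    "<SOS>": {"a": 0.6, "the": 0.4},
    "a": {"dog": 0.55, "cat": 0.45},
    "the": {"boat": 0.9, "<EOS>": 0.1},
    "dog": {"<EOS>": 1.0},
    "cat": {"<EOS>": 1.0},
    "boat": {"<EOS>": 1.0},
}


def toy_next_word_probs(words):
    """Return the distribution over the next word given the words so far."""
    return TOY_MODEL[words[-1]]


def greedy_decode(next_word_probs, start="<SOS>", end="<EOS>", max_len=20):
    """Pick the most probable next word at every step until `end` or `max_len`."""
    words = [start]
    for _ in range(max_len):
        probs = next_word_probs(words)
        best = max(probs, key=probs.get)  # the greedy choice
        if best == end:
            break
        words.append(best)
    return words[1:]


def sequence_prob(words, start="<SOS>", end="<EOS>"):
    """Probability of a whole caption, including the end token."""
    prob, previous = 1.0, start
    for word in words + [end]:
        prob *= TOY_MODEL[previous][word]
        previous = word
    return prob


greedy_caption = greedy_decode(toy_next_word_probs)
print(greedy_caption, sequence_prob(greedy_caption))
print(["the", "boat"], sequence_prob(["the", "boat"]))
```

Here the greedy caption is `['a', 'dog']`, while `['the', 'boat']` scores higher as a full sequence. Beam search reduces this problem by keeping several candidate captions at each step.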

I use the Beam Search algorithm in the Show, Attend and Tell version.

Flickr8K: a relatively small dataset of 8000 images with 5 captions each. There are two versions found on the web, one with splits and one without. I used the latter and created a manual split.
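A manual split can be made reproducibly along the following lines. This is only a sketch: the 80/10/10 ratio, the seed, the function name, and the file naming are assumptions, since the actual split used here is not stated. Splitting by image rather than by caption keeps all 5 captions of an image in the same subset.

```python
import random


def split_image_ids(image_ids, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle image ids with a fixed seed and cut them into train/val/test."""
    ids = sorted(image_ids)               # fixed starting order
    random.Random(seed).shuffle(ids)      # reproducible shuffle
    n_val = round(len(ids) * val_fraction)
    n_test = round(len(ids) * test_fraction)
    return {
        "val": ids[:n_val],
        "test": ids[n_val:n_val + n_test],
        "train": ids[n_val + n_test:],
    }


# Hypothetical file names standing in for the 8000 images.
image_ids = [f"image_{i:04d}.jpg" for i in range(8000)]
splits = split_image_ids(image_ids)
print({name: len(ids) for name, ids in splits.items()})
```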

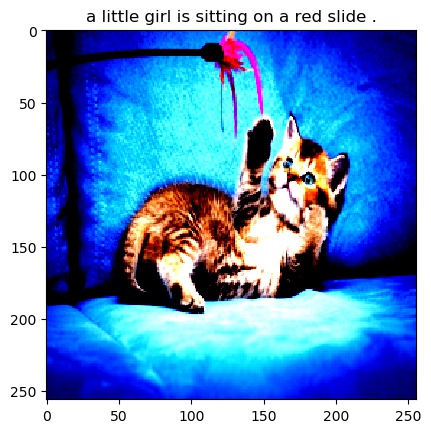

Here, you can see the model can't identify the cat.


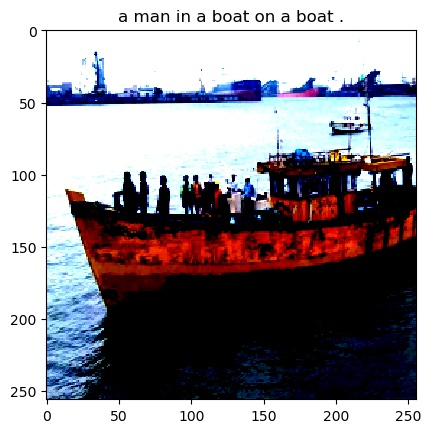

It can identify the boat and the people.


I referred to various blog posts and some YouTube videos for learning. I did this project and Show, Attend and Tell to get better at implementation and to apply the concepts I learnt in the deeplearning.ai specialization. Facebook's PyTorch DL framework, its forum, and Kaggle kernels (for training) made this possible.

Papers

Stack Overflow, for the hundreds of bugs I faced.

aladdinpersson - He has a YouTube channel on which he makes detailed tutorials on applications using PyTorch and TensorFlow.