- Introduction

- Data

- Model

- Google Cloud Platform, Apache Airflow, and MongoDB

- Model Validation

- Conclusion

- References

We wanted to build an end-to-end pipeline that demonstrates a modern deep learning project: it stores data in a cloud storage service, automates tasks with Apache Airflow, aggregates results with MongoDB, and fine-tunes a pre-trained deep learning model from Hugging Face.

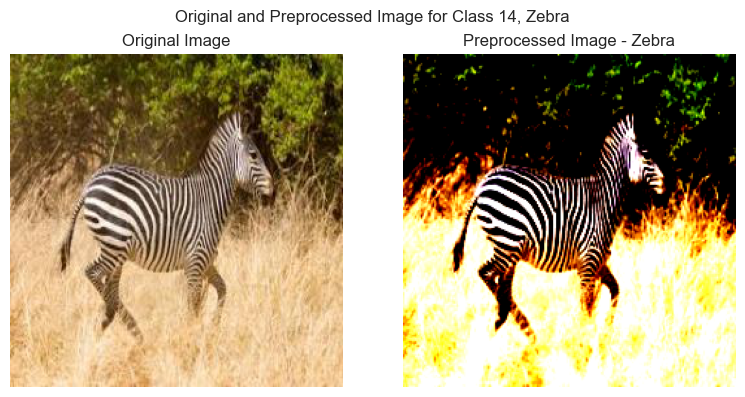

The Multi-Class Animal Classification data we use can be found on Kaggle. The training data consists of 15 classes (Beetle, Butterfly, Cat, Cow, etc.) with 2000 images each, while the validation data has 100-200 images per class. The images are in standard .jpg format and stored in class folders.
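
Because the images are stored in one folder per class, the folder name can serve as the label. The following is a minimal sketch for checking the class balance of such a layout. It assumes a local copy of the data arranged as `root/<class>/<image>`; the function name and the set of accepted extensions are illustrative and not taken from the original project.

```python
from collections import Counter
from pathlib import Path

# Assumed extensions: the post mentions .jpg, and the sample path uses .jpeg.
IMAGE_EXTENSIONS = {".jpg", ".jpeg"}


def count_images_per_class(root):
    """Return {class_name: image_count} for a folder-per-class dataset."""
    counts = Counter()
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # skip stray files next to the class folders
        counts[class_dir.name] = sum(
            1
            for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
    return dict(counts)
```

Running this on the training and validation folders is a quick way to confirm the per-class counts before training.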

For our multi-class classification model, we use PyTorch along with Hugging Face's Transformers library. The model is ResNet50, which has been pre-trained on ImageNet.

```python
import torch.nn as nn
from transformers import ResNetForImageClassification

model = ResNetForImageClassification.from_pretrained("microsoft/resnet-50")
```

We altered the last layer of the model to fit our 15 classes instead of the original 1000 classes.

```python
model.classifier[1] = nn.Linear(in_features=2048, out_features=15, bias=True)
```

The trained model achieved a final accuracy of about 95% for the majority of classes. The best model was saved and used to monitor model health over time.

We used Google Cloud Platform (GCP) to store a subset of our validation data and Apache Airflow to automate our pipeline, so that we could integrate a MongoDB NoSQL database into our system.

Our DAG consisted of two steps:

- The first step retrieved the stored validation images from GCP and created a MongoDB collection of the path names for each image label. A document in this collection looks like this:

  ```json
  {"id": {"$oid": "65ea77f89d9f5449870d04c7"},
   "label": "Beetle",
   "image_path": "gs://good_data_things/val_small/Beetle/Beatle-Valid(108).jpeg"}
  ```

- The next step created an aggregation of the MongoDB collection by sampling 10 images per class and storing the result in a CSV file for our model to evaluate.
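
The two steps can be sketched in plain Python. This is a stand-in for illustration only, not the project's DAG code: it replaces the MongoDB collection with a list of dictionaries and the aggregation with in-memory sampling. The function names, the `seed` parameter, and the assumption that the label is the parent folder in the path are all hypothetical. In MongoDB itself, the `$sample` aggregation stage is one way to draw random documents.

```python
import csv
import random
from collections import defaultdict


def build_documents(gcs_paths):
    """Step 1: turn image paths into {label, image_path} documents.

    Assumes the label is the parent folder name, as in
    gs://<bucket>/<split>/<label>/<file>.
    """
    return [{"label": p.rsplit("/", 2)[-2], "image_path": p} for p in gcs_paths]


def sample_per_class(documents, k=10, seed=None):
    """Step 2: sample up to k documents per label."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for doc in documents:
        by_label[doc["label"]].append(doc)
    sampled = []
    for label in sorted(by_label):
        docs = by_label[label]
        # Take every document when a class has fewer than k images.
        sampled.extend(rng.sample(docs, min(k, len(docs))))
    return sampled


def write_csv(documents, csv_path):
    """Store the sampled documents in a CSV file for later evaluation."""
    with open(csv_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["label", "image_path"])
        writer.writeheader()
        writer.writerows(documents)
```

Passing a fixed `seed` makes a given day's sample reproducible, which helps when comparing evaluation runs.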

For model validation, we ran the evaluation on the aggregated results manually because of an issue between Google Cloud Composer and our Python environment. If that issue were resolved, this step would run as part of the Apache Airflow DAG, as it would in practice.

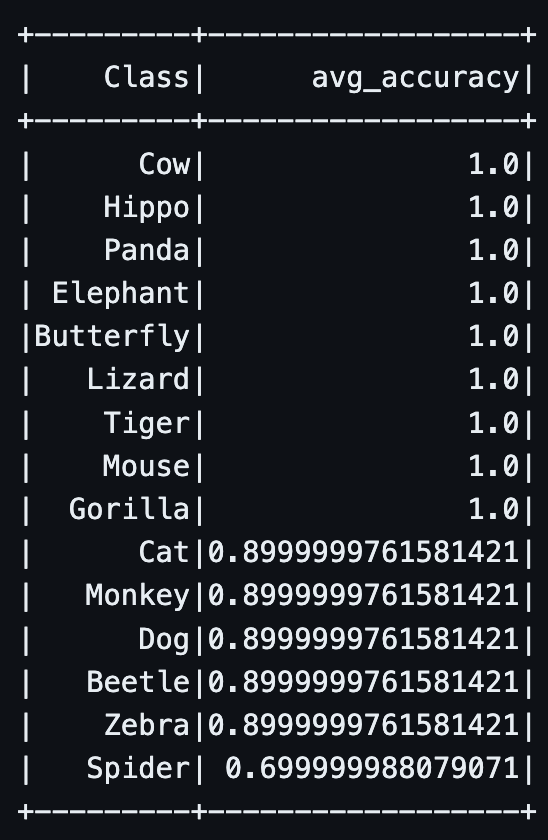

Overall, after 7 days of monitoring, the model's performance was as follows:

Our monitoring shows that the model performs well on the majority of classes. However, the Spider class performs much worse than all the others. This suggests that we may need much more training on spider images, because they are not being accurately classified.
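
A per-class accuracy check of this kind can be sketched as follows. This is a plain-Python illustration rather than the SparkSQL query used in the project; the function names and the `threshold` value are assumptions chosen for the example.

```python
from collections import defaultdict


def per_class_accuracy(true_labels, predicted_labels):
    """Return {label: fraction of that label's images predicted correctly}."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for truth, pred in zip(true_labels, predicted_labels):
        totals[truth] += 1
        if truth == pred:
            correct[truth] += 1
    return {label: correct[label] / totals[label] for label in totals}


def underperforming(accuracies, threshold=0.8):
    """List labels whose accuracy falls below a (hypothetical) threshold."""
    return sorted(label for label, acc in accuracies.items() if acc < threshold)
```

Tracking these values daily makes it easy to see when a single class, such as Spider here, drifts away from the rest.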

By using Google Cloud Storage for data management and an Airflow DAG for selective data processing, we established a streamlined workflow. Processing the image data locally, after first sampling paths from GCS, made the project considerably more efficient. Daily performance checks using accuracy scores through SparkSQL pinpointed the underperforming classes. This approach shows how data engineering practices can support the goal of improving classification accuracy.