This repo contains the code to serve the Open Flamingo model via gRPC in a containerized environment.

Under the hood, it uses the Jina framework and DocArray v2 for data representation and serialization.

- Serve Open Flamingo via gRPC

- Serve with Docker as a containerized application

- Kubernetes deployment

- Cloud native features supported by Jina, namely:

  - Replication

  - Monitoring and tracing with OpenTelemetry support

  - Dynamic batching for fast inference
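To give an intuition for the dynamic batching feature listed above, the sketch below groups queued requests until either a batch-size cap or a short deadline is reached, so the model can run once per group instead of once per request. It is a simplified illustration in plain Python, not Jina's implementation; `collect_batch`, `max_batch_size`, and `timeout_s` are hypothetical names chosen for this example.

```python
import queue
import time


def collect_batch(pending, max_batch_size, timeout_s):
    """Pull up to max_batch_size items from the queue.

    Blocks until the first item arrives, then waits at most timeout_s
    for more items before returning whatever has been collected.
    """
    batch = [pending.get()]  # wait for at least one request
    deadline = time.monotonic() + timeout_s
    while len(batch) < max_batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break  # deadline reached, serve a partial batch
        try:
            batch.append(pending.get(timeout=remaining))
        except queue.Empty:
            break  # no more requests arrived in time
    return batch


if __name__ == "__main__":
    requests_queue = queue.Queue()
    for prompt in ["a", "b", "c", "d", "e"]:  # toy requests
        requests_queue.put(prompt)
    while not requests_queue.empty():
        print(collect_batch(requests_queue, max_batch_size=4, timeout_s=0.05))
```

The trade-off is latency against throughput: a longer timeout fills batches more often but makes the first request in each batch wait longer.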

Run

pip install -r open_flamingo_serve/requirements.txt

then start the server. You need at least 18 GB of video RAM. Alternatively, you can run on your CPU (it will be slow) by changing the device parameter of the FlamingoExecutor to cpu.

python serve.py

This will expose the gRPC server on port 12347.
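Before connecting a client, it can be handy to confirm that something is listening on the port. The sketch below is a plain TCP reachability check using only the standard library; it assumes the server runs on `localhost`, and `is_port_open` is a hypothetical helper name. It only shows that the port accepts connections, not that the gRPC service behind it is healthy.

```python
import socket


def is_port_open(host: str, port: int, timeout_s: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout_s."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if __name__ == "__main__":
    # 12347 is the port mentioned above; "localhost" is an assumption.
    print(is_port_open("localhost", 12347))
```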

You can connect via the client by running

python client_example.py

You need to have Docker installed on your machine with NVIDIA driver support for Docker, or run on CPU as explained above.

cd open_flamingo_serve

docker build -t open_flamingo:latest .

Then you can run it via

docker run open_flamingo:latest

You will need to have a Kubernetes cluster set up and ready.

kubectl apply -R -f k8s

Note: you might need to change the Docker image in the k8s files to your own image (pushed to your Docker repository).

You can deploy this app on Jina AI Cloud.

First

pip install jcloud

then (you will be asked to authenticate yourself first)

jc deploy flow.yml

Open Flamingo is an open source implementation of the Flamingo paper from DeepMind, created and trained by LAION.

Flamingo is one of the first MLLMs (Multimodal Large Language Models). MLLMs are LLMs (Large Language Models) like GPT-3 that are extended to work with modalities beyond text. Flamingo is able to understand both images and text, unlocking several use cases for LLMs such as cross-modal reasoning, image understanding, and in-context learning with images. OpenFlamingo can be seen as the first open source GPT-4 alternative.

MLLMs are a promising field of AI research and, as argued by Yann LeCun in this blog post, extending AI models beyond text and natural language might be a requirement for building stronger AI. He says:

An artificial intelligence system trained on words and sentences alone will never approximate human understanding

Y. LeCun

Several top AI labs have been working in this direction. Here is a non-exhaustive list of other MLLM work:

- GPT-4 from OpenAI has been announced as multimodal (vision + text), but only the text part is available as I write this README.

- BLIP2 from Salesforce Research. (This one is slightly different from Flamingo as it can take only one image as input.)

- Kosmos 1 from Microsoft Research

- and more ...

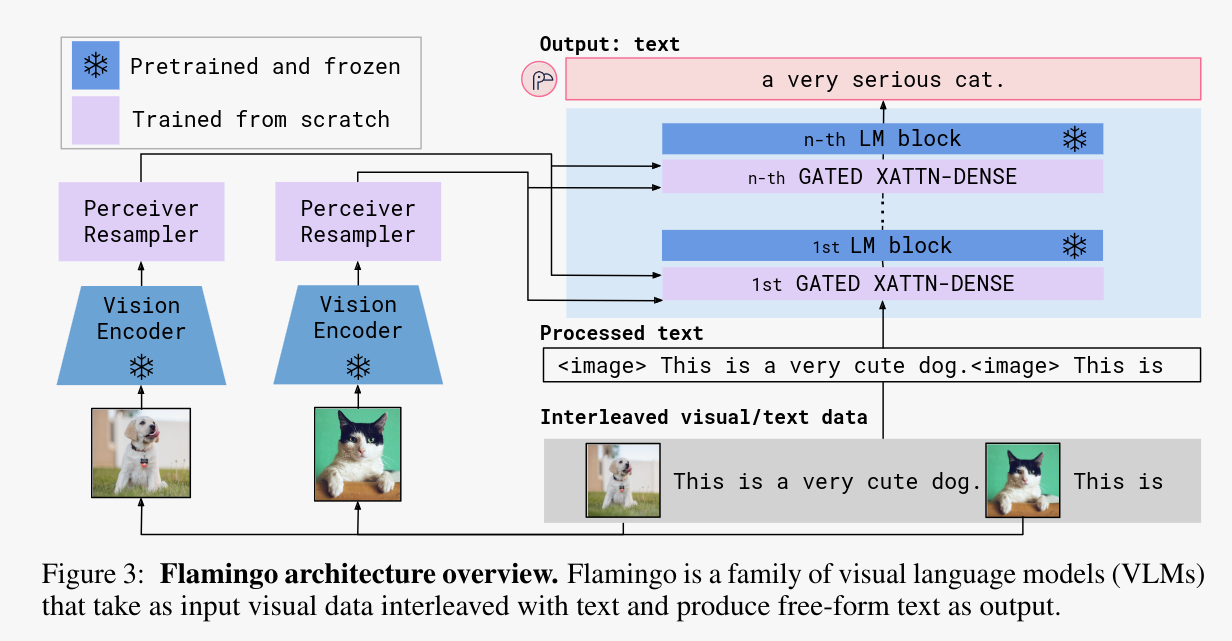

Flamingo models leverage pretrained frozen models for language and vision and "glue" them together. They essentially teach a big, text-only LLM how to see by training an adapter that maps embeddings from a frozen vision feature extractor to the input of an LLM. This process is called multimodal fusion and is at the heart of modern multimodal AI research. You can think of it as translating images to text before feeding them into a pretrained LLM, but instead of translating at the token level (i.e., the text level), the translation happens via the adapter in the embedding space, which is a higher level of abstraction.

Specifically, Flamingo does early fusion: the multimodal interaction starts as early as the first attention layer of the frozen pretrained LLM.

This is different from BLIP2 for instance where the fusion is done later in the last layers of the network.
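The adapter idea can be illustrated with a toy example. The Flamingo paper describes tanh-gated cross-attention layers whose gate starts at zero, so the frozen LLM behaves exactly as before at the beginning of training. The sketch below keeps the tanh gate but replaces the attention with a single linear projection for brevity; it is not the OpenFlamingo code, and `project`, `gated_fusion`, and all numeric values are hypothetical.

```python
import math


def project(vision_features, weights):
    """Linear adapter: map a vision feature vector into the LLM hidden size."""
    return [sum(w * x for w, x in zip(row, vision_features)) for row in weights]


def gated_fusion(text_hidden, visual_update, alpha):
    """Add the visual signal to the text hidden state through a tanh gate.

    With alpha = 0 the gate is closed and the text hidden state is unchanged.
    """
    gate = math.tanh(alpha)
    return [t + gate * v for t, v in zip(text_hidden, visual_update)]


if __name__ == "__main__":
    vision_features = [1.0, 2.0, 3.0]  # toy output of a frozen vision encoder
    weights = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0]]  # trainable adapter, 3 -> 2
    text_hidden = [0.5, -0.5]  # toy hidden state of the frozen LLM
    visual_update = project(vision_features, weights)
    print(gated_fusion(text_hidden, visual_update, alpha=0.0))  # gate closed
    print(gated_fusion(text_hidden, visual_update, alpha=1.0))  # gate partly open
```

Only the adapter weights and the gate parameter would be trained in such a setup; the vision encoder and the LLM stay frozen.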

OpenFlamingo is an open source reimplementation of the Flamingo paper by the LAION people.

It uses LLaMA 7B from Meta for the LLM backbone (the Flan family was used instead at one point for license reasons) and ViT-L-14 from the OpenCLIP implementation. The result is a 9B OpenFlamingo model that, in LAION's words, was trained on "5M samples from our new Multimodal C4 dataset and 10M samples from LAION-2B".

This is a list of things I want to work on in the future:

- set up a monitoring tool in k8s

- add a toy frontend to play around

- allow loading directly in 8 bits