The project follows the follow steps:

- Step 1: Scope the Project and Gather Data

- Step 2: Explore and Assess the Data

- Step 3: Define the Data Model

- Step 4: Run ETL to Model the Data

- Step 5: Complete Project Write Up

Avengers eating Shawarma: https://www.youtube.com/watch?v=EYiZeszLosE

Shawarma is the most popular Arabic street food / fast food. Therefore, the target is to conduct a study to open up a shawarma place in one of the airports that see the highest number of trips of visitors and students from Arabic countries.

- List of Arabic Countries

- Population Data from the WorldBank

- List of US Cities and their Lat/Lng coordinates

- Airport Codes

Note: I have imported lots of 3rd party data files, just to join them with the data for exploration purposes. I won't be using all these data sources though.

!pip install python-Levenshtein fuzzywuzzy pyshp geopandasCollecting python-Levenshtein

�[?25l Downloading https://files.pythonhosted.org/packages/42/a9/d1785c85ebf9b7dfacd08938dd028209c34a0ea3b1bcdb895208bd40a67d/python-Levenshtein-0.12.0.tar.gz (48kB)

�[K 100% |████████████████████████████████| 51kB 2.5MB/s ta 0:00:011

�[?25hRequirement already satisfied: fuzzywuzzy in /opt/conda/lib/python3.6/site-packages (0.17.0)

Requirement already satisfied: pyshp in /opt/conda/lib/python3.6/site-packages (2.1.0)

Requirement already satisfied: geopandas in /opt/conda/lib/python3.6/site-packages (0.6.0)

Requirement already satisfied: setuptools in /opt/conda/lib/python3.6/site-packages (from python-Levenshtein) (38.4.0)

Requirement already satisfied: pyproj in /opt/conda/lib/python3.6/site-packages (from geopandas) (2.4.0)

Requirement already satisfied: shapely in /opt/conda/lib/python3.6/site-packages (from geopandas) (1.6.4.post1)

Requirement already satisfied: fiona in /opt/conda/lib/python3.6/site-packages (from geopandas) (1.8.8)

Requirement already satisfied: pandas>=0.23.0 in /opt/conda/lib/python3.6/site-packages (from geopandas) (0.23.3)

Requirement already satisfied: click<8,>=4.0 in /opt/conda/lib/python3.6/site-packages (from fiona->geopandas) (6.7)

Requirement already satisfied: cligj>=0.5 in /opt/conda/lib/python3.6/site-packages (from fiona->geopandas) (0.5.0)

Requirement already satisfied: munch in /opt/conda/lib/python3.6/site-packages (from fiona->geopandas) (2.3.2)

Requirement already satisfied: attrs>=17 in /opt/conda/lib/python3.6/site-packages (from fiona->geopandas) (19.1.0)

Requirement already satisfied: click-plugins>=1.0 in /opt/conda/lib/python3.6/site-packages (from fiona->geopandas) (1.1.1)

Requirement already satisfied: six>=1.7 in /opt/conda/lib/python3.6/site-packages (from fiona->geopandas) (1.11.0)

Requirement already satisfied: python-dateutil>=2.5.0 in /opt/conda/lib/python3.6/site-packages (from pandas>=0.23.0->geopandas) (2.6.1)

Requirement already satisfied: pytz>=2011k in /opt/conda/lib/python3.6/site-packages (from pandas>=0.23.0->geopandas) (2017.3)

Requirement already satisfied: numpy>=1.9.0 in /opt/conda/lib/python3.6/site-packages (from pandas>=0.23.0->geopandas) (1.12.1)

Building wheels for collected packages: python-Levenshtein

Running setup.py bdist_wheel for python-Levenshtein ... �[?25ldone

�[?25h Stored in directory: /root/.cache/pip/wheels/de/c2/93/660fd5f7559049268ad2dc6d81c4e39e9e36518766eaf7e342

Successfully built python-Levenshtein

Installing collected packages: python-Levenshtein

Successfully installed python-Levenshtein-0.12.0

# Do all imports and installs here

import pandas as pd

import fuzzywuzzy

import shapefile

from shapely.geometry.polygon import LinearRing, Polygon, LineString

pd.set_option('display.max_rows', 500)

pd.set_option('display.max_columns', 500)

pd.set_option('display.width', 1000)

import pyspark.sql.functions as F

from pyspark.sql.types import StructType as R, StructField as Fld, DoubleType as Dbl, StringType as Str

from pyspark.sql.types import IntegerType as Int, DateType as Date

from pyspark.sql import SparkSession

spark = SparkSession.builder.\

config("spark.jars.packages","saurfang:spark-sas7bdat:2.0.0-s_2.11")\

.enableHiveSupport().getOrCreate()

#df_spark =spark.read.format('com.github.saurfang.sas.spark').load('../../data/18-83510-I94-Data-2016/i94_aug16_sub.sas7bdat')

sc = spark.sparkContext

spark.sparkContext.setLogLevel('ERROR')

spark.conf.set("spark.sql.shuffle.partitions", 20)fname = '../../data2/GlobalLandTemperaturesByCity.csv'

temp_df = pd.read_csv(fname)round(temp_df[(temp_df['Country'] == 'United States') & (temp_df['City'] == 'Boston')].iloc[-12:]['AverageTemperature'].mean(), 1)8.9000000000000004

# pop_sizes_df = pd.read_csv('WorldBankPopulationSizeByCountry.csv')

pop_sizes_df = pop_sizes_df.rename({'Country Name':'CountryName'}, axis=1)

country_names = list(pop_sizes_df['CountryName'])

pop_sizes_df = pop_sizes_df.set_index('CountryName')

pop_sizes_df.head()

from fuzzywuzzy import process

from fuzzywuzzy import fuzz

def findClosestCountryName(cn, cns= country_names, setOrSort=True, population=False):

max_score = 0

country = ''

for n in cns:

if setOrSort == True:

score = fuzz.token_set_ratio(cn, n)

else:

score = fuzz.token_sort_ratio(cn, n)

if score > max_score:

max_score = score

country = n

if population == True:

return pd.Series([country, max_score, pop_sizes_df.loc[country]['2016']])

return pd.Series([country, max_score])

print(findClosestCountryName('MEXICO Air Sea, and Not Reported (I-94, no land arrivals)', country_names)[0])

print(findClosestCountryName('CHINA, PRC', country_names)[0])pop_sizes_df.head(3)| Country Code | Indicator Name | Indicator Code | 1960 | 1961 | 1962 | 1963 | 1964 | 1965 | 1966 | 1967 | 1968 | 1969 | 1970 | 1971 | 1972 | 1973 | 1974 | 1975 | 1976 | 1977 | 1978 | 1979 | 1980 | 1981 | 1982 | 1983 | 1984 | 1985 | 1986 | 1987 | 1988 | 1989 | 1990 | 1991 | 1992 | 1993 | 1994 | 1995 | 1996 | 1997 | 1998 | 1999 | 2000 | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| CountryName | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Aruba | ABW | Population, total | SP.POP.TOTL | 54211.0 | 55438.0 | 56225.0 | 56695.0 | 57032.0 | 57360.0 | 57715.0 | 58055.0 | 58386.0 | 58726.0 | 59063.0 | 59440.0 | 59840.0 | 60243.0 | 60528.0 | 60657.0 | 60586.0 | 60366.0 | 60103.0 | 59980.0 | 60096.0 | 60567.0 | 61345.0 | 62201.0 | 62836.0 | 63026.0 | 62644.0 | 61833.0 | 61079.0 | 61032.0 | 62149.0 | 64622.0 | 68235.0 | 72504.0 | 76700.0 | 80324.0 | 83200.0 | 85451.0 | 87277.0 | 89005.0 | 90853.0 | 92898.0 | 94992.0 | 97017.0 | 98737.0 | 100031.0 | 100834.0 | 101222.0 | 101358.0 | 101455.0 | 101669.0 | 102046.0 | 102560.0 | 103159.0 | 103774.0 | 104341.0 | 104872.0 | 105366.0 | 105845.0 |

| Afghanistan | AFG | Population, total | SP.POP.TOTL | 8996973.0 | 9169410.0 | 9351441.0 | 9543205.0 | 9744781.0 | 9956320.0 | 10174836.0 | 10399926.0 | 10637063.0 | 10893776.0 | 11173642.0 | 11475445.0 | 11791215.0 | 12108963.0 | 12412950.0 | 12689160.0 | 12943093.0 | 13171306.0 | 13341198.0 | 13411056.0 | 13356511.0 | 13171673.0 | 12882528.0 | 12537730.0 | 12204292.0 | 11938208.0 | 11736179.0 | 11604534.0 | 11618005.0 | 11868877.0 | 12412308.0 | 13299017.0 | 14485546.0 | 15816603.0 | 17075727.0 | 18110657.0 | 18853437.0 | 19357126.0 | 19737765.0 | 20170844.0 | 20779953.0 | 21606988.0 | 22600770.0 | 23680871.0 | 24726684.0 | 25654277.0 | 26433049.0 | 27100536.0 | 27722276.0 | 28394813.0 | 29185507.0 | 30117413.0 | 31161376.0 | 32269589.0 | 33370794.0 | 34413603.0 | 35383128.0 | 36296400.0 | 37172386.0 |

| Angola | AGO | Population, total | SP.POP.TOTL | 5454933.0 | 5531472.0 | 5608539.0 | 5679458.0 | 5735044.0 | 5770570.0 | 5781214.0 | 5774243.0 | 5771652.0 | 5803254.0 | 5890365.0 | 6040777.0 | 6248552.0 | 6496962.0 | 6761380.0 | 7024000.0 | 7279509.0 | 7533735.0 | 7790707.0 | 8058067.0 | 8341289.0 | 8640446.0 | 8952950.0 | 9278096.0 | 9614754.0 | 9961997.0 | 10320111.0 | 10689250.0 | 11068050.0 | 11454777.0 | 11848386.0 | 12248901.0 | 12657366.0 | 13075049.0 | 13503747.0 | 13945206.0 | 14400719.0 | 14871570.0 | 15359601.0 | 15866869.0 | 16395473.0 | 16945753.0 | 17519417.0 | 18121479.0 | 18758145.0 | 19433602.0 | 20149901.0 | 20905363.0 | 21695634.0 | 22514281.0 | 23356246.0 | 24220661.0 | 25107931.0 | 26015780.0 | 26941779.0 | 27884381.0 | 28842484.0 | 29816748.0 | 30809762.0 |

airport_codes = pd.read_csv('airport-codes_csv.csv')

airport_codes = airport_codes.fillna('')

airport_codes = airport_codes[(airport_codes['iso_country'] == 'US') & (airport_codes['type'] == 'large_airport')]

airport_codes['municipality'] = airport_codes['municipality'].apply(lambda x: x.lower())

airport_codes[airport_codes['municipality'] == 'orlando'].head()| ident | type | name | elevation_ft | continent | iso_country | iso_region | municipality | gps_code | iata_code | local_code | coordinates | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 28001 | KMCO | large_airport | Orlando International Airport | 96 | US | US-FL | orlando | KMCO | MCO | MCO | -81.30899810791016, 28.429399490356445 | |

| 29937 | KSFB | large_airport | Orlando Sanford International Airport | 55 | US | US-FL | orlando | KSFB | SFB | SFB | -81.23750305175781, 28.777599334716797 |

len(airport_codes)862

Reference: https://simplemaps.com/data/us-cities

us_cities = pd.read_csv('uscities.csv')

us_cities.head()| city | city_ascii | state_id | state_name | county_fips | county_name | county_fips_all | county_name_all | lat | lng | population | density | source | military | incorporated | timezone | ranking | zips | id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | South Creek | South Creek | WA | Washington | 53053 | Pierce | 53053 | Pierce | 46.9994 | -122.3921 | 2500.0 | 125.0 | polygon | False | True | America/Los_Angeles | 3 | 98580 98387 98338 | 1840116412 |

| 1 | Roslyn | Roslyn | WA | Washington | 53037 | Kittitas | 53037 | Kittitas | 47.2507 | -121.0989 | 947.0 | 84.0 | polygon | False | True | America/Los_Angeles | 3 | 98941 98068 98925 | 1840097718 |

| 2 | Sprague | Sprague | WA | Washington | 53043 | Lincoln | 53043 | Lincoln | 47.3048 | -117.9713 | 441.0 | 163.0 | polygon | False | True | America/Los_Angeles | 3 | 99032 | 1840096300 |

| 3 | Gig Harbor | Gig Harbor | WA | Washington | 53053 | Pierce | 53053 | Pierce | 47.3352 | -122.5968 | 9507.0 | 622.0 | polygon | False | True | America/Los_Angeles | 3 | 98332 98335 | 1840097082 |

| 4 | Lake Cassidy | Lake Cassidy | WA | Washington | 53061 | Snohomish | 53061 | Snohomish | 48.0639 | -122.0920 | 3591.0 | 131.0 | polygon | False | True | America/Los_Angeles | 3 | 98223 98258 98270 | 1840116371 |

demog = pd.read_csv('us-cities-demographics.csv', delimiter=';')

demog.head()| City | State | Median Age | Male Population | Female Population | Total Population | Number of Veterans | Foreign-born | Average Household Size | State Code | Race | Count | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Silver Spring | Maryland | 33.8 | 40601.0 | 41862.0 | 82463 | 1562.0 | 30908.0 | 2.60 | MD | Hispanic or Latino | 25924 |

| 1 | Quincy | Massachusetts | 41.0 | 44129.0 | 49500.0 | 93629 | 4147.0 | 32935.0 | 2.39 | MA | White | 58723 |

| 2 | Hoover | Alabama | 38.5 | 38040.0 | 46799.0 | 84839 | 4819.0 | 8229.0 | 2.58 | AL | Asian | 4759 |

| 3 | Rancho Cucamonga | California | 34.5 | 88127.0 | 87105.0 | 175232 | 5821.0 | 33878.0 | 3.18 | CA | Black or African-American | 24437 |

| 4 | Newark | New Jersey | 34.6 | 138040.0 | 143873.0 | 281913 | 5829.0 | 86253.0 | 2.73 | NJ | White | 76402 |

demog_race=demog.groupby(['State Code', 'Race']).agg({'Count':'sum', 'Median Age':'mean'})\

.sort_values('Count', ascending=False)

demog_race = demog_race.reset_index()

demog_race = demog_race[demog_race['Race'] != 'White'].groupby(['State Code','Race']).agg({'Count':'max'})\

.sort_values(['State Code', 'Count'], ascending=[True, False]).reset_index().set_index('State Code')

cols = ['State Code', 'Minority', 'Count']

biggest_minority = pd.DataFrame(columns = cols)

for i in list(set(demog_race.index)):

biggest_minority = biggest_minority.append(pd.Series([i] + list(demog_race.loc[i].iloc[0].values),

index=cols), ignore_index=True)

biggest_minority = biggest_minority.set_index('State Code')

biggest_minority.sort_values('Count', ascending=False).head()| Minority | Count | |

|---|---|---|

| State Code | ||

| CA | Hispanic or Latino | 9856464 |

| TX | Hispanic or Latino | 6311431 |

| NY | Hispanic or Latino | 2730185 |

| FL | Hispanic or Latino | 1942022 |

| AZ | Hispanic or Latino | 1508157 |

# Read in the data here

import os

data_dir = "../../data/18-83510-I94-Data-2016/"

files = os.listdir(data_dir)

for i in range(len(files)):

files[i] = data_dir + files[i]

files

dfs = []

for i in range(len(files)):

dfs.append(spark.read.format('com.github.saurfang.sas.spark').load(files[i]))dfs[4].limit(5).toPandas().columnsIndex(['cicid', 'i94yr', 'i94mon', 'i94cit', 'i94res', 'i94port', 'arrdate', 'i94mode', 'i94addr', 'depdate', 'i94bir', 'i94visa', 'count', 'validres', 'delete_days', 'delete_mexl', 'delete_dup', 'delete_visa', 'delete_recdup', 'dtadfile', 'visapost', 'occup', 'entdepa', 'entdepd', 'entdepu', 'matflag', 'biryear', 'dtaddto', 'gender', 'insnum', 'airline', 'admnum', 'fltno', 'visatype'], dtype='object')

dfs[0].limit(5).toPandas().columnsIndex(['cicid', 'i94yr', 'i94mon', 'i94cit', 'i94res', 'i94port', 'arrdate',

'i94mode', 'i94addr', 'depdate', 'i94bir', 'i94visa', 'count',

'dtadfile', 'visapost', 'occup', 'entdepa', 'entdepd', 'entdepu',

'matflag', 'biryear', 'dtaddto', 'gender', 'insnum', 'airline',

'admnum', 'fltno', 'visatype'],

dtype='object')

cols = ['delete_days', 'delete_mexl', 'delete_dup', 'delete_visa', 'delete_recdup']display(dfs[4].limit(5).toPandas().head())

display(dfs[4].filter('validres != 1').limit(5).toPandas().head())

for c in cols:

display(dfs[4].filter(c +' != 0').limit(5).toPandas().head())| cicid | i94yr | i94mon | i94cit | i94res | i94port | arrdate | i94mode | i94addr | depdate | i94bir | i94visa | count | validres | delete_days | delete_mexl | delete_dup | delete_visa | delete_recdup | dtadfile | visapost | occup | entdepa | entdepd | entdepu | matflag | biryear | dtaddto | gender | insnum | airline | admnum | fltno | visatype | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 4.0 | 2016.0 | 6.0 | 135.0 | 135.0 | XXX | 20612.0 | None | None | None | 59.0 | 2.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | None | None | None | Z | None | U | None | 1957.0 | 10032016 | None | None | None | 1.493846e+10 | None | WT |

| 1 | 5.0 | 2016.0 | 6.0 | 135.0 | 135.0 | XXX | 20612.0 | None | None | None | 50.0 | 2.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | None | None | None | Z | None | U | None | 1966.0 | 10032016 | None | None | None | 1.746006e+10 | None | WT |

| 2 | 6.0 | 2016.0 | 6.0 | 213.0 | 213.0 | XXX | 20609.0 | None | None | None | 27.0 | 3.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | None | None | None | T | None | U | None | 1989.0 | D/S | None | None | None | 1.679298e+09 | None | F1 |

| 3 | 7.0 | 2016.0 | 6.0 | 213.0 | 213.0 | XXX | 20611.0 | None | None | None | 23.0 | 3.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | None | None | None | T | None | U | None | 1993.0 | D/S | None | None | None | 1.140963e+09 | None | F1 |

| 4 | 16.0 | 2016.0 | 6.0 | 245.0 | 245.0 | XXX | 20632.0 | None | None | None | 24.0 | 3.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | None | None | None | T | None | U | None | 1992.0 | D/S | None | None | None | 1.934535e+09 | None | F1 |

| cicid | i94yr | i94mon | i94cit | i94res | i94port | arrdate | i94mode | i94addr | depdate | i94bir | i94visa | count | validres | delete_days | delete_mexl | delete_dup | delete_visa | delete_recdup | dtadfile | visapost | occup | entdepa | entdepd | entdepu | matflag | biryear | dtaddto | gender | insnum | airline | admnum | fltno | visatype |

|---|

| cicid | i94yr | i94mon | i94cit | i94res | i94port | arrdate | i94mode | i94addr | depdate | i94bir | i94visa | count | validres | delete_days | delete_mexl | delete_dup | delete_visa | delete_recdup | dtadfile | visapost | occup | entdepa | entdepd | entdepu | matflag | biryear | dtaddto | gender | insnum | airline | admnum | fltno | visatype |

|---|

| cicid | i94yr | i94mon | i94cit | i94res | i94port | arrdate | i94mode | i94addr | depdate | i94bir | i94visa | count | validres | delete_days | delete_mexl | delete_dup | delete_visa | delete_recdup | dtadfile | visapost | occup | entdepa | entdepd | entdepu | matflag | biryear | dtaddto | gender | insnum | airline | admnum | fltno | visatype |

|---|

| cicid | i94yr | i94mon | i94cit | i94res | i94port | arrdate | i94mode | i94addr | depdate | i94bir | i94visa | count | validres | delete_days | delete_mexl | delete_dup | delete_visa | delete_recdup | dtadfile | visapost | occup | entdepa | entdepd | entdepu | matflag | biryear | dtaddto | gender | insnum | airline | admnum | fltno | visatype |

|---|

| cicid | i94yr | i94mon | i94cit | i94res | i94port | arrdate | i94mode | i94addr | depdate | i94bir | i94visa | count | validres | delete_days | delete_mexl | delete_dup | delete_visa | delete_recdup | dtadfile | visapost | occup | entdepa | entdepd | entdepu | matflag | biryear | dtaddto | gender | insnum | airline | admnum | fltno | visatype |

|---|

| cicid | i94yr | i94mon | i94cit | i94res | i94port | arrdate | i94mode | i94addr | depdate | i94bir | i94visa | count | validres | delete_days | delete_mexl | delete_dup | delete_visa | delete_recdup | dtadfile | visapost | occup | entdepa | entdepd | entdepu | matflag | biryear | dtaddto | gender | insnum | airline | admnum | fltno | visatype |

|---|

dfs[4] = dfs[4].drop('validres')

for c in cols:

dfs[4] = dfs[4].drop(c)imm_df = dfs[0]

for i in range(1, len(dfs)):

imm_df = imm_df.union(dfs[i])imm_df.count()40790529

#write to parquet

imm_df.write.parquet("proc_sas_data")imm_df = spark.read.parquet("proc_sas_data")top_ports = imm_df.groupby(['i94port']).count().orderBy(F.col('count'), ascending=False)

top_ports.coalesce(1).write.mode('overwrite').csv('ports.csv')

top_ports.show(5)+-------+-------+

|i94port| count|

+-------+-------+

| NYC|6678555|

| MIA|5122889|

| LOS|4602847|

| SFR|2309621|

| HHW|2249967|

+-------+-------+

only showing top 5 rows

top_res = imm_df.groupby(['i94res']).count().orderBy(F.col('count'), ascending=False)

top_res.coalesce(1).write.mode('overwrite').csv('res.csv')

top_res.show(5)+------+-------+

|i94res| count|

+------+-------+

| 135.0|4587092|

| 209.0|3603786|

| 245.0|3049942|

| 582.0|2661125|

| 112.0|2046288|

+------+-------+

only showing top 5 rows

top_addr = imm_df.groupby(['i94addr']).count().orderBy(F.col('count'), ascending=False)

top_addr.coalesce(1).write.mode('overwrite').csv('addr.csv')

top_addr.show(5)+-------+-------+

|i94addr| count|

+-------+-------+

| FL|8156192|

| NY|6764396|

| CA|6531491|

| HI|2338444|

| null|2027926|

+-------+-------+

only showing top 5 rows

top_mode = imm_df.groupby(['i94mode']).count().orderBy(F.col('count'), ascending=False)

top_mode.coalesce(1).write.mode('overwrite').csv('mode.csv')

to_mode.show(5)top_visa = imm_df.groupby(['i94visa']).count().orderBy(F.col('count'), ascending=False)

top_visa.coalesce(1).write.mode('overwrite').csv('visa.csv')

top_visa.show(5)+-------+--------+

|i94visa| count|

+-------+--------+

| 2.0|33641979|

| 1.0| 5575279|

| 3.0| 1573271|

+-------+--------+

top_cit = imm_df.groupby(['i94cit']).count().orderBy(F.col('count'), ascending=False)

top_cit.coalesce(1).write.mode('overwrite').csv('countries.csv')

top_cit.show(5)+------+-------+

|i94cit| count|

+------+-------+

| 135.0|4531534|

| 209.0|3278033|

| 245.0|3128257|

| 582.0|2617070|

| 148.0|2051390|

+------+-------+

only showing top 5 rows

top_cit.join(imm_country_df, top_cit.i94cit == imm_country_df.Code).orderBy('count', ascending=False).show(10)+------+-------+----+--------------------+--------------+-----+-------------+

|i94cit| count|Code| Country| CountryName|Score| Population|

+------+-------+----+--------------------+--------------+-----+-------------+

| 135.0|4531534| 135| UNITED KINGDOM|United Kingdom| 100| 6.5595565E7|

| 209.0|3278033| 209| JAPAN| Japan| 100| 1.26994511E8|

| 245.0|3128257| 245| CHINA, PRC| China| 100| 1.378665E9|

| 582.0|2617070| 582|MEXICO Air Sea, a...| Mexico| 100| 1.23333376E8|

| 111.0|1679312| 111| FRANCE| France| 100| 6.6859768E7|

| 689.0|1672212| 689| BRAZIL| Brazil| 100| 2.06163058E8|

| 438.0|1325861| 438| AUSTRALIA| Australia| 100| 2.4190907E7|

| 213.0|1252212| 213| INDIA| India| 100|1.324509589E9|

| 117.0|1116790| 117| ITALY| Italy| 100| 6.0627498E7|

| 129.0| 895509| 129| SPAIN| Spain| 100| 4.6483569E7|

+------+-------+----+--------------------+--------------+-----+-------------+

only showing top 10 rows

The data model will track all Arabic nationalities by country and by port of entry. For that, we will need to first group all visitors by nationality, and port of entry. I've also added grouping by country of residence, and visa type, just in case they will become useful later. The visitors data frame will then be joined with country information, and with port of entry information. The final data output, will be the list of port of entries, with the count of all Arab nationals, in addition to the name of the city, state, and the GPS coordinates of the city.

- Process immigration dictionary to help figure out immigration column fields

- Process list of Arab countries

- Start processing immigration DataFrame and join relevant data

The I94_SAS_Labels_Descriptions.SAS file has been copied into an excel sheet, and relevant field dictionaries will be processed

imm_dict_country = pd.read_excel('imm_dictionary.xlsx', 'Country', header=None)

imm_dict_country[['Code', 'Country']] = imm_dict_country[0].apply(lambda x: pd.Series(x.strip().replace("'", "")\

.split("=")))

imm_dict_country['Code'] = imm_dict_country['Code'].apply(lambda x: int(x.strip()))

imm_dict_country['Country'] = imm_dict_country['Country'].apply(lambda x: x.strip())

imm_dict_country[['CountryName', 'Score', 'Population']] = imm_dict_country['Country'].apply(lambda x: findClosestCountryName(x,

country_names, population=True))

imm_dict_country = imm_dict_country.drop(0, axis=1)

imm_country_df = spark.createDataFrame(imm_dict_country)

imm_dict_country.head()| Code | Country | CountryName | Score | Population | |

|---|---|---|---|---|---|

| 0 | 582 | MEXICO Air Sea, and Not Reported (I-94, no lan... | Mexico | 100 | 123333376.0 |

| 1 | 236 | AFGHANISTAN | Afghanistan | 100 | 35383128.0 |

| 2 | 101 | ALBANIA | Albania | 100 | 2876101.0 |

| 3 | 316 | ALGERIA | Algeria | 100 | 40551404.0 |

| 4 | 102 | ANDORRA | Andorra | 100 | 77297.0 |

city_names = list(us_cities['city_ascii'])

findClosestCountryName('Alcan', city_names)[0]'Alcan Border'

imm_dict_port = pd.read_excel('imm_dictionary.xlsx', 'Port', header=None)

imm_dict_port['Code'] = imm_dict_port[0].apply(lambda x: x.strip().replace("'", ""))

imm_dict_port['Port'] = imm_dict_port[2].apply(lambda x: x.strip().replace("'", ""))

# imm_dict_port[['City'] = ''

# imm_dict_port[['City', 'State']] = ''

def splitCityState(x):

y = x.strip().split(',')

yy = []

for z in y:

yy.append(z.strip())

if len(yy) < 2:

return pd.Series([yy[0], ''])

if len(yy) > 2:

return pd.Series([yy[0], ', '.join(yy[1:len(y)])])

return pd.Series(yy)

imm_dict_port[['City', 'State']] = imm_dict_port['Port'].apply(lambda x: splitCityState(x))

imm_dict_port = imm_dict_port.drop([0, 1, 2, 3], axis=1)

imm_dict_port['City'] = imm_dict_port['City'].apply(lambda x: x.capitalize())

city_names = list(us_cities['city_ascii'])

def identify_city_name(row):

city = row['City']

state = row['State']

city_names = list(us_cities[us_cities['state_id'] == state]['city_ascii'])

return findClosestCountryName(city, city_names, setOrSort=False)[0]

imm_dict_port['matched_city'] = ''

imm_dict_port['matched_city'] = imm_dict_port.apply(lambda row: identify_city_name(row), axis=1)

imm_dict_port = imm_dict_port.merge(us_cities,left_on=['matched_city', 'State'], right_on=['city_ascii', 'state_id'])

imm_dict_port = imm_dict_port.drop(['City'], axis=1)

print(len(imm_dict_port))

imm_dict_port = imm_dict_port.fillna('')

imm_port_df = spark.createDataFrame(imm_dict_port)

#imm_port_df.limit(50).toPandas().head()

#imm_dict_port[imm_dict_port['city_ascii'].isnull()].head()

imm_dict_port.head()495

| Code | Port | State | matched_city | city | city_ascii | state_id | state_name | county_fips | county_name | county_fips_all | county_name_all | lat | lng | population | density | source | military | incorporated | timezone | ranking | zips | id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | ALC | ALCAN, AK | AK | Alatna | Alatna | Alatna | AK | Alaska | 2290 | Yukon-Koyukuk | 02290 | Yukon-Koyukuk | 66.5638 | -152.8392 | 0.0 | 0.0 | polygon | False | False | America/Anchorage | 3 | 99720 | 1840114044 |

| 1 | ANC | ANCHORAGE, AK | AK | Anchorage | Anchorage | Anchorage | AK | Alaska | 2020 | Anchorage | 02020 | Anchorage | 61.1508 | -149.1091 | 253421.0 | 66.0 | polygon | False | True | America/Anchorage | 2 | 99518 99515 99517 99516 99513 99540 99567 9958... | 1840089974 |

| 2 | BAR | BAKER AAF - BAKER ISLAND, AK | AK | Point Baker | Point Baker | Point Baker | AK | Alaska | 2198 | Prince of Wales-Hyder | 02198 | Prince of Wales-Hyder | 56.3482 | -133.6167 | 22.0 | 9.0 | polygon | False | False | America/Sitka | 3 | 99927 | 1840114092 |

| 3 | DAC | DALTONS CACHE, AK | AK | Nondalton | Nondalton | Nondalton | AK | Alaska | 2164 | Lake and Peninsula | 02164 | Lake and Peninsula | 59.9711 | -154.8626 | 132.0 | 7.0 | polygon | False | True | America/Anchorage | 3 | 99640 | 1840090141 |

| 4 | PIZ | DEW STATION PT LAY DEW, AK | AK | Attu Station | Attu Station | Attu Station | AK | Alaska | 2016 | Aleutians West | 02016 | Aleutians West | 52.8955 | 173.1230 | 16.0 | 0.0 | polygon | False | True | America/Adak | 3 | 1840114050 |

imm_dict_states = pd.read_excel('imm_dictionary.xlsx', 'States', header=None)

imm_dict_states[['Code', 'State']] = imm_dict_states[0].apply(lambda x: pd.Series(x.strip().replace("'", "")\

.split("=")))

imm_dict_states = imm_dict_states.drop(0, axis=1)

imm_state_df = spark.createDataFrame(imm_dict_states)

imm_dict_states.head()| Code | State | |

|---|---|---|

| 0 | AL | ALABAMA |

| 1 | AK | ALASKA |

| 2 | AZ | ARIZONA |

| 3 | AR | ARKANSAS |

| 4 | CA | CALIFORNIA |

Reference: https://www.downloadexcelfiles.com/wo_en/download-excel-file-list-arab-countries#.XZm_fuczZTY

arabic_countries = pd.read_csv('list-arab-countries-439j.csv')

arabic_countries = list(arabic_countries['Country (or dependent territory)'])

imm_countries = list(set(imm_dict_country['Country']))

arabic_countries_dict = {}

for c in arabic_countries:

match = findClosestCountryName(c, imm_countries, setOrSort=False)[0]

print(c, match, imm_dict_country[imm_dict_country['Country'] == match]['Code'].values[0])

arabic_countries_dict[c] = imm_dict_country[imm_dict_country['Country'] == match]['Code'].values[0]Egypt EGYPT 368

Algeria ALGERIA 316

Iraq IRAQ 250

Sudan SUDAN 350

Morocco MOROCCO 332

Saudi Arabia SAUDI ARABIA 261

Yemen YEMEN 216

Syria SYRIA 262

Tunisia TUNISIA 323

Somalia SOMALIA 397

United Arab Emirates UNITED ARAB EMIRATES 296

Jordan JORDAN 253

Libya LIBYA 381

Palestine PALESTINE 743

Lebanon LEBANON 255

Oman OMAN 256

Kuwait KUWAIT 272

Mauritania MAURITANIA 389

Qatar QATAR 297

Bahrain BAHRAIN 298

Djibouti DJIBOUTI 322

Comoros COMOROS 317

visitors = imm_df.groupby(['i94cit', 'i94res', 'I94PORT', 'i94visa']).count()

visitors = visitors.join(imm_country_df, visitors['i94cit'] == imm_country_df['Code'])\

.selectExpr('*', "CountryName as CitCountry")\

.selectExpr('*', "Population as CitPopulation").drop('CountryName').drop('Code')\

.drop('Population').drop('Score').drop('Country')

visitors = visitors.join(imm_country_df, visitors['i94res'] == imm_country_df['Code'])\

.selectExpr('*', 'CountryName as ResCountry')\

.selectExpr('*', "Population as ResPopulation").drop('Country')\

.drop('Population').drop('CountryName').drop('Code').drop('Score')

visitors = visitors.join(imm_port_df, visitors['i94port'] == imm_port_df['Code']).drop('key_0').drop('Code')

visitors = visitors.orderBy(F.col('count'), ascending=False)

visitors.coalesce(1).write.mode('overwrite').csv('visitors.csv')

visitors.limit(15).toPandas().head()| i94cit | i94res | I94PORT | i94visa | count | CitCountry | CitPopulation | ResCountry | ResPopulation | Port | State | matched_city | city | city_ascii | state_id | state_name | county_fips | county_name | county_fips_all | county_name_all | lat | lng | population | density | source | military | incorporated | timezone | ranking | zips | id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 209.0 | 209.0 | HHW | 2.0 | 1429900 | Japan | 126994511.0 | Japan | 126994511.0 | HONOLULU, HI | HI | Honolulu | Honolulu | Honolulu | HI | Hawaii | 15003 | Honolulu | 15003 | Honolulu | 21.3294 | -157.8460 | 833671.0 | 2234.0 | polygon | False | True | Pacific/Honolulu | 2 | 96859 96850 96822 96826 96813 96815 96814 9681... | 1840118304 |

| 1 | 135.0 | 135.0 | NYC | 2.0 | 773579 | United Kingdom | 65595565.0 | United Kingdom | 65595565.0 | NEW YORK, NY | NY | New York | New York | New York | NY | New York | 36061 | New York | 36061 | New York | 40.6943 | -73.9249 | 19354922.0 | 11083.0 | polygon | False | True | America/New_York | 1 | 11229 11226 11225 11224 11222 11221 11220 1138... | 1840059961 |

| 2 | 135.0 | 135.0 | ORL | 2.0 | 630968 | United Kingdom | 65595565.0 | United Kingdom | 65595565.0 | ORLANDO, FL | FL | Orlando | Orlando | Orlando | FL | Florida | 12095 | Orange | 12095 | Orange | 28.4772 | -81.3369 | 1776841.0 | 982.0 | polygon | False | True | America/New_York | 1 | 32829 32827 32824 32822 32804 32805 32806 3280... | 1840012172 |

| 3 | 689.0 | 689.0 | MIA | 2.0 | 536911 | Brazil | 206163058.0 | Brazil | 206163058.0 | MIAMI, FL | FL | Miami | Miami | Miami | FL | Florida | 12086 | Miami-Dade | 12086 | Miami-Dade | 25.7839 | -80.2102 | 6381966.0 | 4969.0 | polygon | False | True | America/New_York | 1 | 33129 33125 33126 33127 33128 33149 33144 3314... | 1840012834 |

| 4 | 438.0 | 438.0 | LOS | 2.0 | 499750 | Australia | 24190907.0 | Australia | 24190907.0 | LOS ANGELES, CA | CA | Los Angeles | Los Angeles | Los Angeles | CA | California | 6037 | Los Angeles | 06037 | Los Angeles | 34.1139 | -118.4068 | 12815475.0 | 3295.0 | polygon | False | True | America/Los_Angeles | 1 | 90291 90293 90292 91316 91311 90037 90031 9000... | 1840107920 |

visitors[visitors['CitCountry'] == 'Saudi Arabia'].orderBy(F.col('count'), ascending=False).limit(15).toPandas().head()| i94cit | i94res | I94PORT | i94visa | count | CitCountry | CitPopulation | ResCountry | ResPopulation | Port | State | matched_city | city | city_ascii | state_id | state_name | county_fips | county_name | county_fips_all | county_name_all | lat | lng | population | density | source | military | incorporated | timezone | ranking | zips | id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 261.0 | 261.0 | NYC | 2.0 | 31515 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | NEW YORK, NY | NY | New York | New York | New York | NY | New York | 36061 | New York | 36061 | New York | 40.6943 | -73.9249 | 19354922.0 | 11083.0 | polygon | False | True | America/New_York | 1 | 11229 11226 11225 11224 11222 11221 11220 1138... | 1840059961 |

| 1 | 261.0 | 261.0 | LOS | 2.0 | 28888 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | LOS ANGELES, CA | CA | Los Angeles | Los Angeles | Los Angeles | CA | California | 6037 | Los Angeles | 06037 | Los Angeles | 34.1139 | -118.4068 | 12815475.0 | 3295.0 | polygon | False | True | America/Los_Angeles | 1 | 90291 90293 90292 91316 91311 90037 90031 9000... | 1840107920 |

| 2 | 261.0 | 261.0 | NYC | 3.0 | 16913 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | NEW YORK, NY | NY | New York | New York | New York | NY | New York | 36061 | New York | 36061 | New York | 40.6943 | -73.9249 | 19354922.0 | 11083.0 | polygon | False | True | America/New_York | 1 | 11229 11226 11225 11224 11222 11221 11220 1138... | 1840059961 |

| 3 | 261.0 | 261.0 | CHI | 3.0 | 14948 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | CHICAGO, IL | IL | Chicago | Chicago | Chicago | IL | Illinois | 17031 | Cook | 17031 | Cook | 41.8373 | -87.6862 | 8675982.0 | 4612.0 | polygon | False | True | America/Chicago | 1 | 60018 60649 60641 60640 60643 60642 60645 6064... | 1840021521 |

| 4 | 261.0 | 261.0 | LOS | 3.0 | 13102 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | LOS ANGELES, CA | CA | Los Angeles | Los Angeles | Los Angeles | CA | California | 6037 | Los Angeles | 06037 | Los Angeles | 34.1139 | -118.4068 | 12815475.0 | 3295.0 | polygon | False | True | America/Los_Angeles | 1 | 90291 90293 90292 91316 91311 90037 90031 9000... | 1840107920 |

vis_df = visitors.toPandas()

vis_df['flag'] = False

conditions = vis_df['flag']

vis_df = vis_df.drop('flag', axis=1)

for ac in arabic_countries_dict.keys():

conditions = conditions | (vis_df['i94cit'] == arabic_countries_dict[ac])

ac_vis_df = vis_df[conditions]

ac_vis_df.head(5)| i94cit | i94res | I94PORT | i94visa | count | CitCountry | CitPopulation | ResCountry | ResPopulation | Port | State | matched_city | city | city_ascii | state_id | state_name | county_fips | county_name | county_fips_all | county_name_all | lat | lng | population | density | source | military | incorporated | timezone | ranking | zips | id | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 194 | 261.0 | 261.0 | NYC | 2.0 | 31515 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | NEW YORK, NY | NY | New York | New York | New York | NY | New York | 36061 | New York | 36061 | New York | 40.6943 | -73.9249 | 19354922.0 | 11083.0 | polygon | False | True | America/New_York | 1 | 11229 11226 11225 11224 11222 11221 11220 1138... | 1840059961 |

| 203 | 261.0 | 261.0 | LOS | 2.0 | 28888 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | LOS ANGELES, CA | CA | Los Angeles | Los Angeles | Los Angeles | CA | California | 6037 | Los Angeles | 06037 | Los Angeles | 34.1139 | -118.4068 | 12815475.0 | 3295.0 | polygon | False | True | America/Los_Angeles | 1 | 90291 90293 90292 91316 91311 90037 90031 9000... | 1840107920 |

| 217 | 368.0 | 368.0 | NYC | 2.0 | 26875 | Egypt, Arab Rep. | 94447072.0 | Egypt, Arab Rep. | 94447072.0 | NEW YORK, NY | NY | New York | New York | New York | NY | New York | 36061 | New York | 36061 | New York | 40.6943 | -73.9249 | 19354922.0 | 11083.0 | polygon | False | True | America/New_York | 1 | 11229 11226 11225 11224 11222 11221 11220 1138... | 1840059961 |

| 315 | 272.0 | 272.0 | NYC | 2.0 | 18534 | Kuwait | 3956873.0 | Kuwait | 3956873.0 | NEW YORK, NY | NY | New York | New York | New York | NY | New York | 36061 | New York | 36061 | New York | 40.6943 | -73.9249 | 19354922.0 | 11083.0 | polygon | False | True | America/New_York | 1 | 11229 11226 11225 11224 11222 11221 11220 1138... | 1840059961 |

| 328 | 261.0 | 261.0 | NYC | 3.0 | 16913 | Saudi Arabia | 32442572.0 | Saudi Arabia | 32442572.0 | NEW YORK, NY | NY | New York | New York | New York | NY | New York | 36061 | New York | 36061 | New York | 40.6943 | -73.9249 | 19354922.0 | 11083.0 | polygon | False | True | America/New_York | 1 | 11229 11226 11225 11224 11222 11221 11220 1138... | 1840059961 |

len(ac_vis_df)8787

sf = shapefile.Reader('./us-shapefile/gz_2010_us_040_00_500k.shp')

us_states_geo_df = pd.DataFrame(columns=['Name', 'Shape'])

for s in sf.shapeRecords():

sp = s.__geo_interface__['properties']

sg = s.__geo_interface__['geometry']

if sg['type'] == 'MultiPolygon':

polygons = []

for p in sg['coordinates']:

polygons.append(Polygon(list(p[0])))

state_pol = cascaded_union(polygons)

else:

state_pol = Polygon(sg['coordinates'][0])

us_states_geo_df = us_states_geo_df.append({'Name':sp['NAME'], 'Shape':state_pol},

ignore_index=True)

us_states_geo_df.head() | Name | Shape | |

|---|---|---|

| 0 | Maine | (POLYGON ((-70.6078338623047 42.9777641296387,... |

| 1 | Massachusetts | (POLYGON ((-70.81141662597659 41.249870300293,... |

| 2 | Michigan | (POLYGON ((-83.8292236328125 43.6626319885254,... |

| 3 | Montana | POLYGON ((-104.057698 44.997431, -104.250145 4... |

| 4 | Nevada | POLYGON ((-114.0506 37.00039599999999, -114.04... |

state_counts = ac_vis_df.groupby('State').agg({'count':'sum'}).sort_values('count', ascending=False)

state_counts = state_counts.reset_index()

state_counts = state_counts.rename({'State':'State_Code', 'count':'StateCount'}, axis =1)

state_counts.head(5)| State_Code | StateCount | |

|---|---|---|

| 0 | NY | 180561 |

| 1 | CA | 122553 |

| 2 | IL | 62470 |

| 3 | FL | 46369 |

| 4 | TX | 40002 |

hi_vol_ports = ac_vis_df.groupby(['I94PORT', 'city', 'State', 'state_name', 'lat', 'lng']).agg({'count':'sum'}).sort_values('count', ascending=False)

hi_vol_ports = hi_vol_ports.reset_index()

hi_vol_ports = hi_vol_ports.merge(us_states_geo_df, left_on='state_name', right_on='Name')

hi_vol_ports = hi_vol_ports.merge(state_counts, left_on='State', right_on='State_Code')

hi_vol_ports = hi_vol_ports.drop(['State_Code', 'state_name'], axis=1)

hi_vol_ports.to_csv('hi_vol_ports.csv')

hi_vol_ports.head()| I94PORT | city | State | lat | lng | count | Name | Shape | StateCount | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | NYC | New York | NY | 40.6943 | -73.9249 | 170329 | New York | (POLYGON ((-71.943563 41.286675, -71.926802380... | 180561 |

| 1 | CHM | Champlain | NY | 44.9882 | -73.4408 | 4271 | New York | (POLYGON ((-71.943563 41.286675, -71.926802380... | 180561 |

| 2 | PBB | Central Bridge | NY | 42.7068 | -74.3473 | 1812 | New York | (POLYGON ((-71.943563 41.286675, -71.926802380... | 180561 |

| 3 | NIA | Niagara Falls | NY | 43.0921 | -79.0147 | 1706 | New York | (POLYGON ((-71.943563 41.286675, -71.926802380... | 180561 |

| 4 | LEW | Lewiston | NY | 43.1724 | -79.0400 | 1095 | New York | (POLYGON ((-71.943563 41.286675, -71.926802380... | 180561 |

Given the geography, it makes sense that NYC is the port of entry with the highest volume of Arab nationals. Therefore, we must find the international airports in NYC, and pick the busiest one. I94Port is the CBP location code for Immigration purposes, and unfortunately, might include multiple international airports.

airport_codes[airport_codes['municipality'] == 'new york'].head()| ident | type | name | elevation_ft | continent | iso_country | iso_region | municipality | gps_code | iata_code | local_code | coordinates | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 27679 | KJFK | large_airport | John F Kennedy International Airport | 13 | US | US-NY | new york | KJFK | JFK | JFK | -73.77890015, 40.63980103 | |

| 27819 | KLGA | large_airport | La Guardia Airport | 21 | US | US-NY | new york | KLGA | LGA | LGA | -73.87259674, 40.77719879 | |

| 49898 | US-0883 | large_airport | JFK | US | US-NY | new york | 0, 0 |

In this case, we can infer from the above that there two airports in the NYC I94Port Port of Entry definition. Some online searching will yield that JFK has more international flights, while La Guardia is more geared towards internal flights.

Therefore the winnder airport is JFK for our modest Shawarma joint.

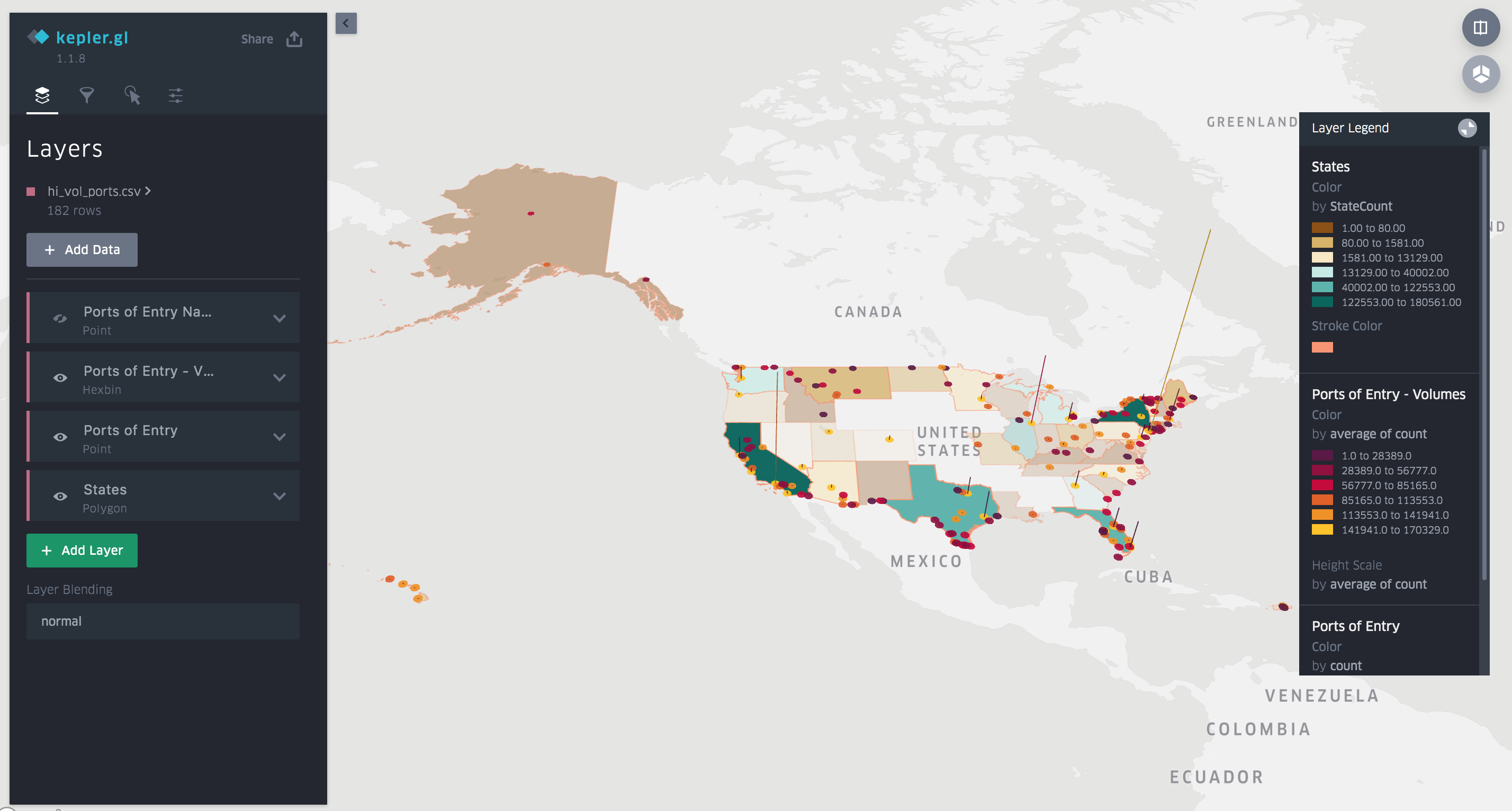

The saved file "hi_vol_ports.csv" can be uploaded to kepler.gl, and with a little bit of manual configuration, further geospatial analysis can be conducted. The below link accesses a map with the final dataframe data, as well as configured layers.

The map could be accessed at: https://kepler.gl/demo/map?mapUrl=https://dl.dropboxusercontent.com/s/dpvk9xkud4kqf1h/keplergl_m41qnas.json

Run Quality Checks

# Perform quality checks here

print(len(set(ac_vis_df['CitCountry'])) == len(set(arabic_countries)))

for ac in arabic_countries_dict.keys():

print(visitors[visitors['i94cit'] == str(arabic_countries_dict[ac])].count() ==\

len(ac_vis_df[ac_vis_df['i94cit'] == arabic_countries_dict[ac]]))

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

True

Create a data dictionary for your data model. For each field, provide a brief description of what the data is and where it came from. You can include the data dictionary in the notebook or in a separate file.

The list of columns in the final processed data frame is below:

- I94PORT: CBP location code of the port of entry

- City: name of the city of the port of entry

- State: code of the state of the port of entry

- Name: full name of the state of the port of entry

- lat: Latitude of the city of the port of entry

- lng: Longitude of the city of the port of entry

- count: count of Arab travellers per port of entry

- StateCount: count of Arab travellers per state

- Shape: shape of the state for mapping purposes

-

Clearly state the rationale for the choice of tools and technologies for the project.

-

Propose how often the data should be updated and why.

-

Write a description of how you would approach the problem differently under the following scenarios:

-

The data was increased by 100x.

-

The data populates a dashboard that must be updated on a daily basis by 7am every day.

-

The database needed to be accessed by 100+ people.

-

Most of the included data sources have helped in finding the right airport. The geospatial analysis helped identify 4 clusters or major points of interests, mainly the Northeast (New York and surroundings), Southern California (LA), Northen California (SF), and Texas (Houston)

-

The immigration data should be updated yearly (or at the same frequency that the right authority releases the data)

-

If the data was increased by 100x, I would increase the number of nodes in the Spark Cluster. After grouping the immigration data, the analysis could be continued with Pandas, the same way that it has been done here.

-

If the data needed to populate a dashboard on a daily basis, Apache Airflow would help, as well as an online database where the dashboard can draw the data from.

-

If the database need to be accessed by 100+ people, this is relatively low number, and could be handled by any database. But if this number becomes too large, then maybe hosting on Redshift or an online version of Cassandra would help with the load.