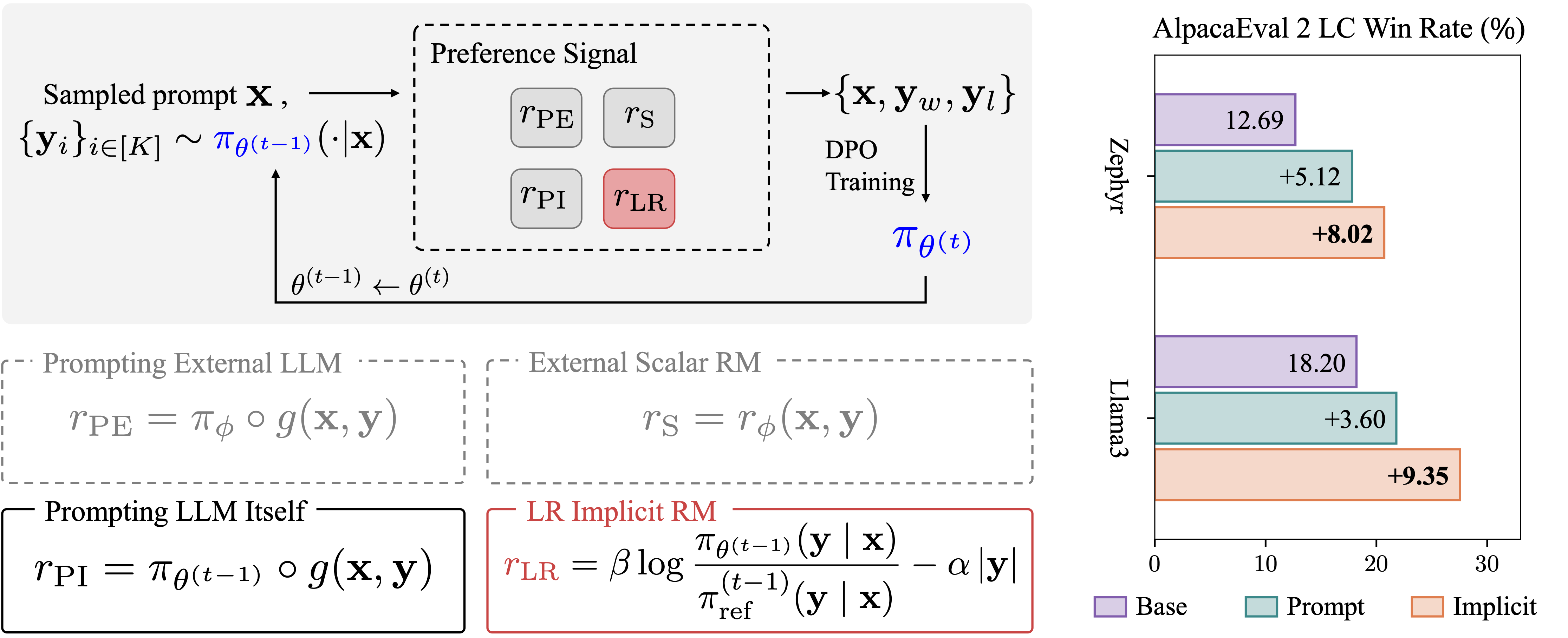

This repository contains the implementation of our paper "Bootstrapping Language Models with DPO Implicit Rewards". We show that the implicit reward model obtained from a previous round of DPO training can be used to bootstrap the model and further align LLMs.
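As a rough illustration of the idea, the sketch below scores candidate responses with the standard DPO implicit reward, `beta * (log pi_theta(y|x) - log pi_ref(y|x))` (defined up to a prompt-only constant), and keeps the highest- and lowest-scoring responses as a preference pair. It is not the code used in this repository: the function names, the `beta=0.1` default, and the best/worst selection rule are assumptions made for illustration, and the log-probabilities are assumed to be precomputed sequence-level sums.

```python
from typing import List, Sequence, Tuple


def implicit_reward(policy_logp: float, ref_logp: float, beta: float = 0.1) -> float:
    """DPO implicit reward, up to a constant that depends only on the prompt.

    policy_logp: log pi_theta(y | x) under the DPO-tuned policy
    ref_logp:    log pi_ref(y | x) under the reference model
    beta:        DPO temperature (0.1 is a placeholder, not the repo's setting)
    """
    return beta * (policy_logp - ref_logp)


def build_preference_pair(
    responses: Sequence[str],
    policy_logps: Sequence[float],
    ref_logps: Sequence[float],
    beta: float = 0.1,
) -> Tuple[str, str]:
    """Return (chosen, rejected): the responses with the highest and lowest implicit reward."""
    if not (len(responses) == len(policy_logps) == len(ref_logps)):
        raise ValueError("responses and log-probabilities must have the same length")
    if len(responses) < 2:
        raise ValueError("need at least two candidate responses")

    rewards: List[float] = [
        implicit_reward(p, r, beta) for p, r in zip(policy_logps, ref_logps)
    ]
    best = max(range(len(rewards)), key=rewards.__getitem__)
    worst = min(range(len(rewards)), key=rewards.__getitem__)
    return responses[best], responses[worst]


if __name__ == "__main__":
    # Toy numbers, not real model outputs.
    chosen, rejected = build_preference_pair(
        ["response a", "response b", "response c"],
        policy_logps=[-10.0, -12.0, -9.0],
        ref_logps=[-11.0, -11.0, -11.0],
    )
    print("chosen:", chosen)
    print("rejected:", rejected)
```

Pairs built this way could then be fed to another round of DPO training; see `scripts/run_dice/pipeline.sh` for the procedure this repository actually follows.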

| Model | AlpacaEval 2 LC win rate (%) | AlpacaEval 2 win rate (%) |
|---|---|---|
| 🤗Llama-3-Base-8B-SFT-DPO | 18.20 | 15.50 |
| 🤗Llama-3-Base-8B-DICE Iter1 | 25.08 | 25.77 |
| 🤗Llama-3-Base-8B-DICE Iter2 | 27.55 | 30.99 |
| 🤗Zephyr-7b-beta | 12.69 | 10.71 |
| 🤗Zephyr-7B-DICE Iter1 | 19.03 | 17.67 |
| 🤗Zephyr-7B-DICE Iter2 | 20.71 | 20.16 |

Please refer to pipeline.sh#1.1_response_generation for instructions on batch inference with the appropriate chat template.

Please install the dependencies using the following commands:

    git clone https://github.com/sail-sg/dice.git
    conda create -n dice python=3.10
    conda activate dice
    cd dice/llama-factory
    pip install -e ".[deepspeed,metrics,bitsandbytes]"
    cd ..
    pip install -e .
    pip install -r requirements.txt
    # Optional: install FlashAttention
    pip install flash-attn --no-build-isolation

Set DICE_DIR to the local path of this repo in two files:

- scripts/run_dice/iter.sh
- scripts/run_dice/pipeline.sh

For example:

    DICE_DIR="/home/username/dice"
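Since the path has to be set in two places, a quick consistency check can catch a forgotten edit. The helper below is a minimal sketch, not part of this repository: `find_dice_dir` and `check_scripts` are hypothetical names, and the code assumes each script sets the variable on a line of its own in the form `DICE_DIR="..."`, as in the example above.

```python
import re
from pathlib import Path
from typing import Dict, Optional

# Matches a shell assignment on its own line, e.g. DICE_DIR="/home/username/dice"
# (optionally prefixed with `export`, with or without quotes).
_ASSIGNMENT = re.compile(
    r"""^\s*(?:export\s+)?DICE_DIR=(["']?)(.*?)\1\s*$""", re.MULTILINE
)

# The two files named in the setup instructions.
SCRIPTS = ("scripts/run_dice/iter.sh", "scripts/run_dice/pipeline.sh")


def find_dice_dir(script_text: str) -> Optional[str]:
    """Return the value of the first DICE_DIR assignment, or None if there is none."""
    match = _ASSIGNMENT.search(script_text)
    return match.group(2) if match else None


def check_scripts(repo_root: str) -> Dict[str, Optional[str]]:
    """Map each script path to the DICE_DIR value it sets (None if unset)."""
    root = Path(repo_root)
    return {name: find_dice_dir((root / name).read_text()) for name in SCRIPTS}
```

Calling `check_scripts("/home/username/dice")` returns one entry per script; both values should equal the repo path before you launch training.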

We provide sample training scripts for both Llama3 and Zephyr settings. It is recommended to run the script with 8x A100 GPUs. For other hardware environments, you might need to adjust the script.

- Llama3

      bash scripts/run_dice/iter.sh llama3

- Zephyr

      bash scripts/run_dice/iter.sh zephyr

This repo is built on LLaMA-Factory. Thanks for the amazing work!

Please consider citing our paper if you find the repo helpful in your work:

    @article{chen2024bootstrapping,
      title={Bootstrapping Language Models with DPO Implicit Rewards},
      author={Chen, Changyu and Liu, Zichen and Du, Chao and Pang, Tianyu and Liu, Qian and Sinha, Arunesh and Varakantham, Pradeep and Lin, Min},
      journal={arXiv preprint arXiv:2406.09760},
      year={2024}
    }