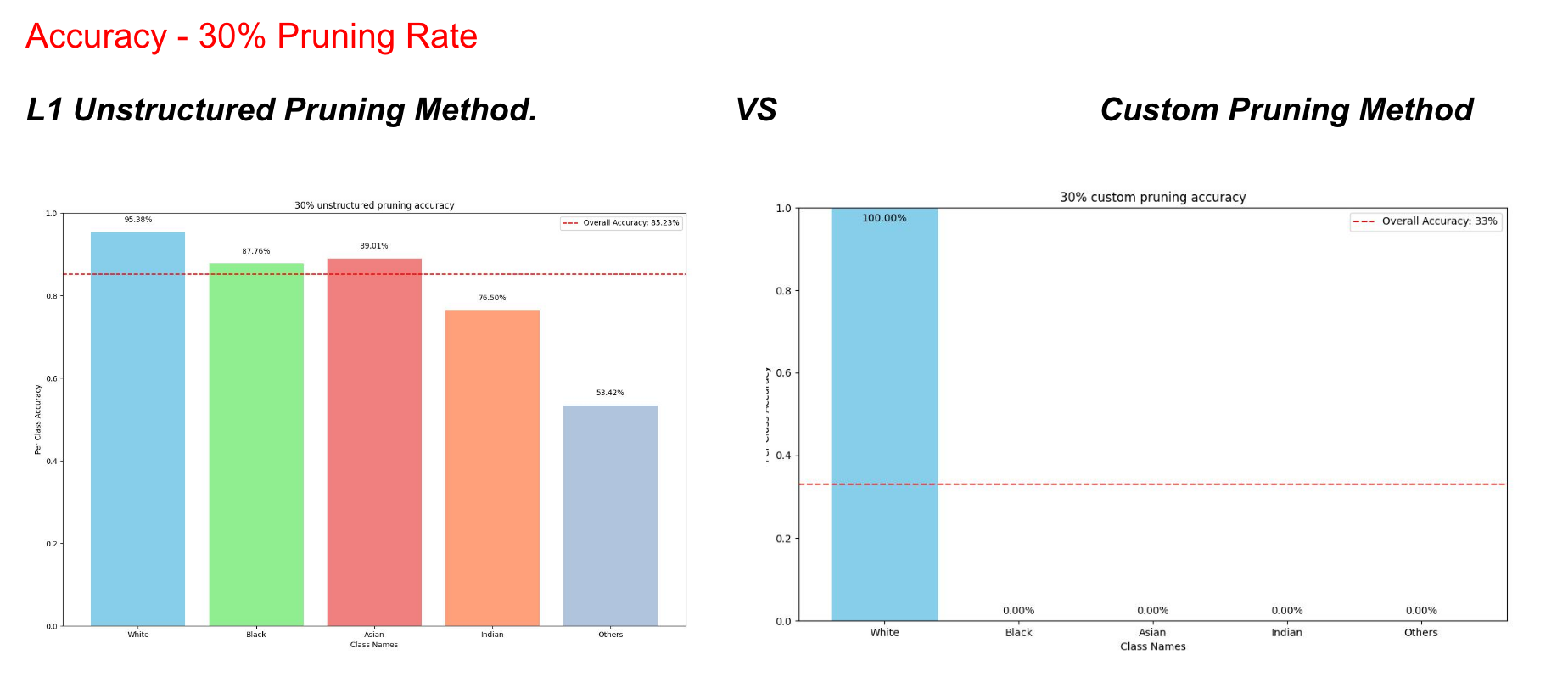

Pruning models trained on imbalanced datasets reduces the accuracy of the minority class and increases the accuracy of the majority class. Previous studies [1] have explored the relation between gradient norms, Hessians, and the size of the minority class.

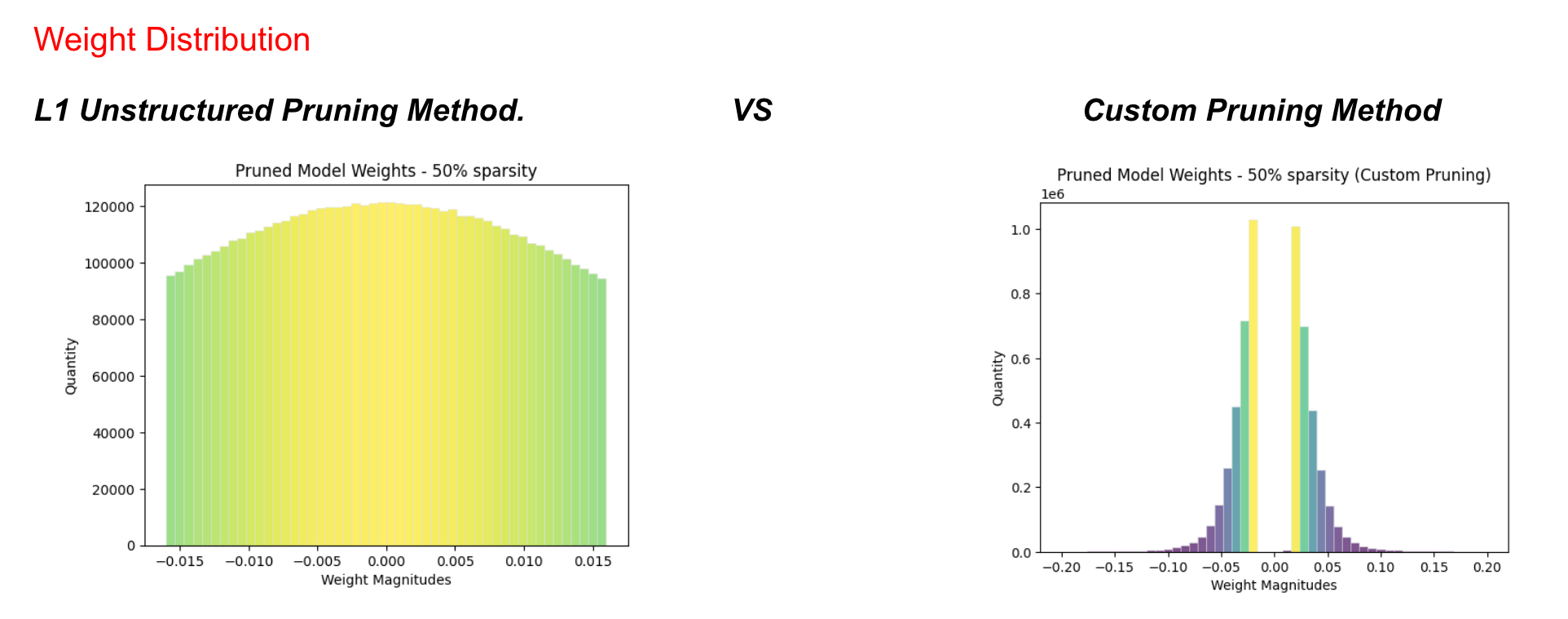

We explore the relationship between the values of the weights and the reduction in the accuracy of the minority class. To this end, we compare two pruning options:

- L1 Unstructured Pruning (globally pruning the weights with the smallest L1 norm)

- Our Custom Pruning Method (globally pruning the weights with the largest L1 norm)
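The two options above can be sketched in PyTorch as follows. This is a minimal illustration, not the code from this repository: the helper names `prunable_parameters`, `prune_smallest_global`, and `prune_largest_global` are hypothetical, and restricting pruning to the weights of `Conv2d` and `Linear` layers is an assumption. The first option uses the built-in `prune.global_unstructured` with `prune.L1Unstructured`; the second builds a global mask by hand and applies it with `prune.custom_from_mask`.

```python
import torch
import torch.nn as nn
import torch.nn.utils.prune as prune


def prunable_parameters(model):
    """Collect (module, "weight") pairs for every Conv2d and Linear layer (assumed scope)."""
    return [(m, "weight") for m in model.modules()
            if isinstance(m, (nn.Conv2d, nn.Linear))]


def prune_smallest_global(model, amount):
    """Globally zero the `amount` fraction of weights with the smallest |w|."""
    prune.global_unstructured(
        prunable_parameters(model),
        pruning_method=prune.L1Unstructured,
        amount=amount,
    )


def prune_largest_global(model, amount):
    """Globally zero the `amount` fraction of weights with the largest |w|."""
    params = prunable_parameters(model)
    # Flatten the magnitudes of all layers into one vector so the ranking is global.
    magnitudes = torch.cat([m.weight.detach().abs().flatten() for m, _ in params])
    k = int(round(amount * magnitudes.numel()))
    if k == 0:
        return
    # Build a flat mask: 0 for the k largest magnitudes, 1 everywhere else.
    flat_mask = torch.ones_like(magnitudes)
    flat_mask[torch.topk(magnitudes, k).indices] = 0.0
    # Slice the flat mask back into per-layer masks and apply them.
    offset = 0
    for module, name in params:
        n = module.weight.numel()
        mask = flat_mask[offset:offset + n].view_as(module.weight)
        prune.custom_from_mask(module, name, mask)
        offset += n
```

Either function can be called as, for example, `prune_largest_global(model, amount=0.2)` on a trained model before evaluation. Both leave a `weight_mask` buffer and a `weight_orig` parameter on each pruned layer, so the original values remain available for inspection.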

We observe that pruning the tail end of the weight distribution reduces the accuracy of the minority class. We run experiments across multiple pruning ratios and two different datasets. For more information, see our Wiki page.
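Comparing minority-class and majority-class accuracy requires a per-class metric rather than overall accuracy. The following is a minimal sketch of such a metric, assuming integer class labels and a batch of model logits; the function name `per_class_accuracy` is hypothetical and is not taken from this repository.

```python
import torch


def per_class_accuracy(logits, labels, num_classes):
    """Return a tensor of length `num_classes` with the accuracy of each class.

    Classes with no samples in `labels` are reported as 0.
    """
    preds = logits.argmax(dim=1)
    correct = torch.zeros(num_classes)
    total = torch.zeros(num_classes)
    for c in range(num_classes):
        in_class = labels == c
        total[c] = in_class.sum()
        correct[c] = (preds[in_class] == c).sum()
    # clamp avoids division by zero for classes that never appear
    return correct / total.clamp(min=1)
```

Evaluating this before and after pruning, at each pruning ratio, gives the per-class accuracy change for the minority and majority classes separately.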

There are two files in the Code/ folder.

- ResNet 18 cifar contains the pruning experiments and the associated results.

- Module Wise Pruning contains the pruning experiments on a custom DNN model and the associated results.