We have built a model, and now we want it to be accessible from the web. There are many ways to do that, but here we will use TensorFlow Serving.

- Train a dumb model and save it

- Restore the saved model to check that everything is working

- Export the saved model to a format that tensorflow-serving can use

- Load the exported model to check that everything is working

- Deploy the exported model in tensorflow-serving using a Docker image

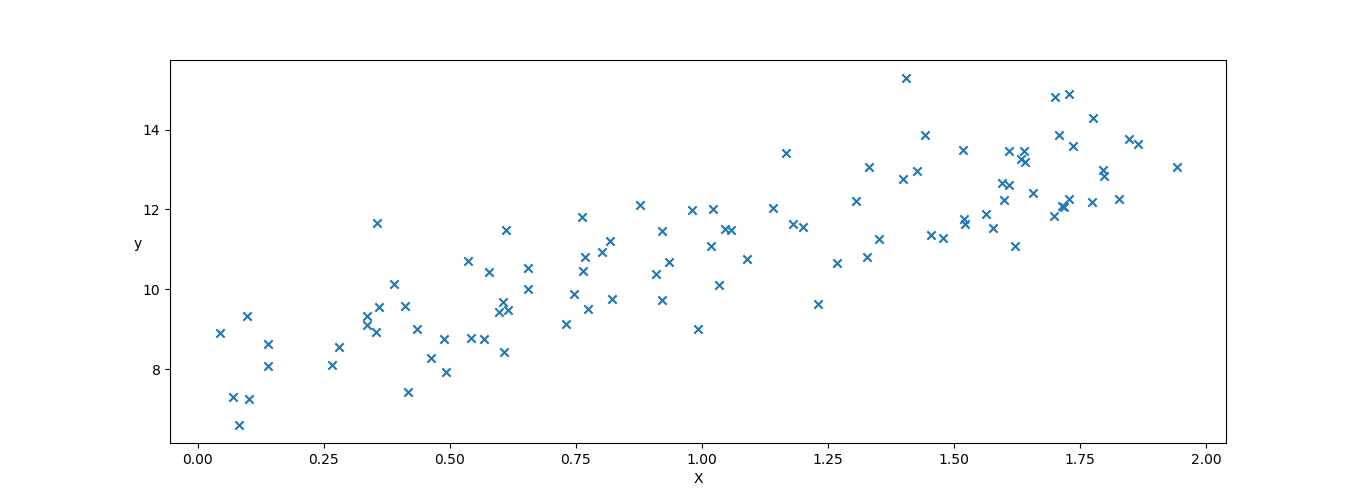

We will generate a random dataset and create a very basic model that learns the relationship in that randomly generated data.

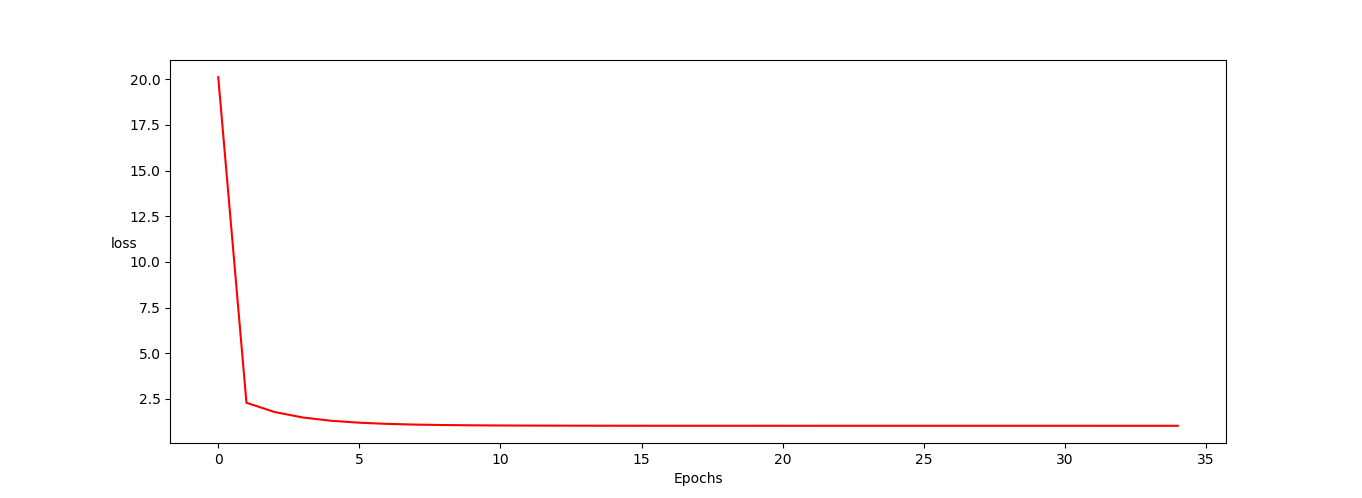

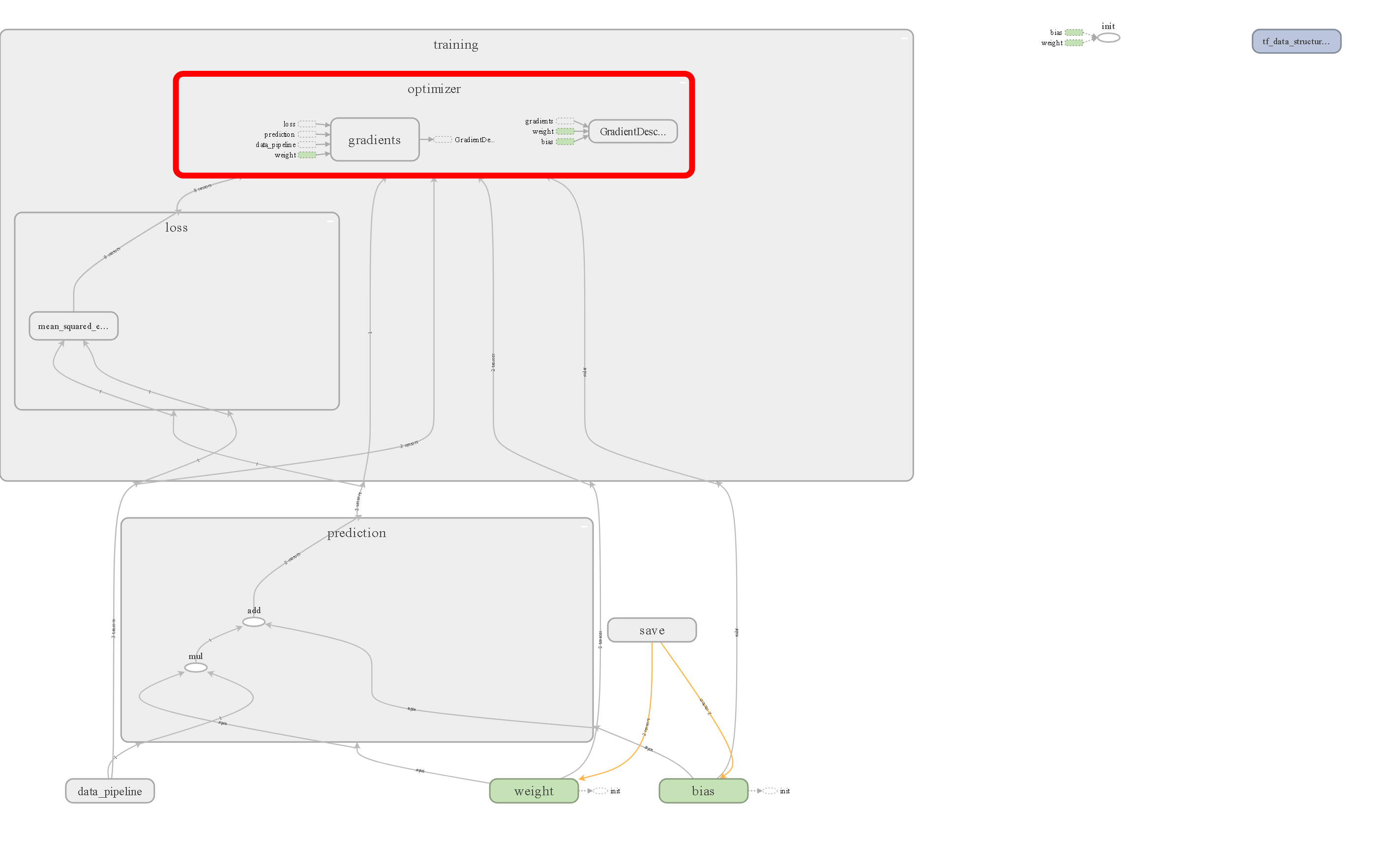

When training completes, the model is saved in Save Directory using tf.train.Saver(), and a summary is also saved in Summary so that the graph can be visualized in TensorBoard.
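To make the training step concrete without depending on TensorFlow, here is a minimal sketch in plain Python. It is a stand-in for the idea, not the code used in this post: the true slope and intercept (3.0 and 2.0), the noise level, the learning rate and the function names are all assumptions chosen for illustration, and nothing is saved to disk.

```python
import random


def make_dataset(n, true_w, true_b, noise=0.05, seed=0):
    """Return n (x, y) pairs with y = true_w * x + true_b + Gaussian noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()  # x is drawn uniformly from [0, 1)
        y = true_w * x + true_b + rng.gauss(0.0, noise)
        data.append((x, y))
    return data


def fit_linear(data, lr=0.1, epochs=2000):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean((w * x + b - y) ** 2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical relationship for illustration only: y = 3x + 2.
data = make_dataset(200, true_w=3.0, true_b=2.0)
w, b = fit_linear(data)
print(f"learned w={w:.3f}, b={b:.3f}")
```

The learned values should land close to the slope and intercept used to generate the data, which is all the "dumb model" needs to do before we move on to saving and serving it.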

Here we will load the saved model and use it to make a prediction on some input.

Here comes the interesting part: we take the saved model and export it to the SavedModel format that tensorflow-serving uses to serve. To do that, we first load the meta graph file and fetch, by name, the tensors from the graph that are required for prediction. We then build tensor info from them, which is used to create the signature definition that is passed to the SavedModelBuilder instance. This model signature identifies what serving is going to expect from the client.
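One way to picture what the signature does is as a lookup table from client-facing keys to tensor names in the graph. The sketch below is plain Python data, not the TensorFlow API: the key names and tensor names are the ones reported by saved_model_cli for this model, while the `SIGNATURE` dict and the `to_feed` helper are hypothetical names introduced only for illustration.

```python
# Plain-data stand-in for the serving signature; this is NOT a SignatureDef object.
SIGNATURE = {
    "inputs": {"x": "data_pipeline/IteratorGetNext:0"},
    "outputs": {"y": "prediction/add:0"},
    "method_name": "tensorflow/serving/predict",
}


def to_feed(signature, request):
    """Translate client-facing input keys into graph tensor names.

    Raises ValueError when the request keys do not match the signature inputs.
    """
    expected = set(signature["inputs"])
    if set(request) != expected:
        raise ValueError(
            f"expected inputs {sorted(expected)}, got {sorted(request)}"
        )
    return {signature["inputs"][key]: value for key, value in request.items()}


# The client only ever says "x"; the signature resolves it to the real tensor.
feed = to_feed(SIGNATURE, {"x": [10.0]})
print(feed)
```

This is why the client never needs to know internal names such as data_pipeline/IteratorGetNext:0: it sends "x" and reads "y", and the signature does the translation.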

Now let's take a look at the export directory.

$ ls serve/linear/

1/

A sub-directory will be created for exporting each version of the model.

$ ls serve/linear/1/

saved_model.pb variables/
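Because every export gets its own numbered sub-directory, and TensorFlow Serving by default serves the highest-numbered version it finds, it can be handy to check which version that would be. Here is a small sketch using only the standard library. The `latest_version` helper is a hypothetical name, and the demo runs on a temporary directory that merely mimics the layout of serve/linear/ with two exports.

```python
import os
import tempfile


def latest_version(model_dir):
    """Return the highest numeric sub-directory name under model_dir, or None."""
    versions = [
        int(name)
        for name in os.listdir(model_dir)
        if name.isdecimal() and os.path.isdir(os.path.join(model_dir, name))
    ]
    return max(versions) if versions else None


# Demo on a throwaway directory laid out like an export directory with versions 1 and 2.
with tempfile.TemporaryDirectory() as model_dir:
    for version in ("1", "2"):
        os.makedirs(os.path.join(model_dir, version, "variables"))
    newest = latest_version(model_dir)
    print(newest)
```

Pointing `latest_version` at your own export directory tells you which version a default server would pick up.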

We'll use the command line utility saved_model_cli to look at the MetaGraphDefs (the models) and SignatureDefs (the methods you can call) in our SavedModel.

$ saved_model_cli show --dir serve/linear/1/ --all

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['serving_default']:

The given SavedModel SignatureDef contains the following input(s):

inputs['x'] tensor_info:

dtype: DT_FLOAT

shape: (1)

name: data_pipeline/IteratorGetNext:0

The given SavedModel SignatureDef contains the following output(s):

outputs['y'] tensor_info:

dtype: DT_FLOAT

shape: (1)

name: prediction/add:0

Method name is: tensorflow/serving/predict

Here we will load the exported SavedModel and use it to make a prediction on some random input.

One of the easiest ways to get started using TensorFlow Serving is with Docker.

General installation instructions are on the Docker site, but we give some quick links here:

- Docker for macOS

- Docker for Windows for Windows 10 Pro or later

- Docker Toolbox for much older versions of macOS, or versions of Windows before Windows 10 Pro

Let's serve our model.

- You can pull the latest TensorFlow Serving Docker image by running

docker pull tensorflow/serving

- Run the Docker image that will serve your model

sudo docker run --name tf_serving -p 8501:8501 --mount type=bind,source=$(pwd)/linear/,target=/models/linear -e MODEL_NAME=linear -t tensorflow/serving

If all goes well, you'll see logs like these 😎

2019-01-31 07:55:30.133911: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:259] SavedModel load for tags { serve }; Status: success. Took 58911 microseconds.

2019-01-31 07:55:30.133937: I tensorflow_serving/servables/tensorflow/saved_model_warmup.cc:83] No warmup data file found at /models/linear/1/assets.extra/tf_serving_warmup_requests

2019-01-31 07:55:30.134095: I tensorflow_serving/core/loader_harness.cc:86] Successfully loaded servable version {name: linear version: 1}

2019-01-31 07:55:30.137262: I tensorflow_serving/model_servers/server.cc:286] Running gRPC ModelServer at 0.0.0.0:8500 ...

[warn] getaddrinfo: address family for nodename not supported

2019-01-31 07:55:30.143359: I tensorflow_serving/model_servers/server.cc:302] Exporting HTTP/REST API at:localhost:8501 ...

- In addition to gRPC APIs, TensorFlow ModelServer also supports RESTful APIs.

- Model status API: it returns the status of a model in the ModelServer.

GET http://host:port/v1/models/${MODEL_NAME}[/versions/${MODEL_VERSION}]

Go to your browser and type

http://localhost:8501/v1/models/linear/versions/1

and you'll get the status:

{ "model_version_status":[ { "version":"1", "state":"AVAILABLE", "status":{ "error_code":"OK", "error_message":"" } } ] }

- Model Metadata API: it returns the metadata of a model in the ModelServer.

GET http://host:port/v1/models/${MODEL_NAME}[/versions/${MODEL_VERSION}]/metadata

Go to your browser and type

http://localhost:8501/v1/models/linear/versions/1/metadata

and you'll get the metadata:

{ "model_spec":{ "name":"linear", "signature_name":"", "version":"1" }, "metadata":{ "signature_def":{ "signature_def":{ "serving_default":{ "inputs":{ "x":{ "dtype":"DT_FLOAT", "tensor_shape":{ "dim":[ { "size":"1", "name":"" } ], "unknown_rank":false }, "name":"data_pipeline/IteratorGetNext:0" } }, "outputs":{ "y":{ "dtype":"DT_FLOAT", "tensor_shape":{ "dim":[ { "size":"1", "name":"" } ], "unknown_rank":false }, "name":"prediction/add:0" } }, "method_name":"tensorflow/serving/predict" } } } } }

- Predict API: it returns the predictions from your model based on the inputs that you send.

POST http://host:port/v1/models/${MODEL_NAME}[/versions/${MODEL_VERSION}]:predict

Let's make a curl request and check whether we are getting predictions:

curl -d '{"signature_name":"serving_default", "instances":[10, 20, 30]}' -X POST http://localhost:8501/v1/models/linear:predict

Output:

{ "predictions":[ 38.4256, 68.9495, 99.4733 ] }
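The same REST calls can be made from Python with nothing but the standard library. The sketch below is one possible client, not an official one: `model_url`, `build_predict_request` and `predict` are hypothetical helper names, and the host, port and model name are the ones used in this post. The actual network call is left commented out because it only works while the container is running.

```python
import json
import urllib.request


def model_url(host, port, model_name, version=None, suffix=""):
    """Build a REST URL of the form /v1/models/NAME[/versions/N] plus a suffix."""
    url = f"http://{host}:{port}/v1/models/{model_name}"
    if version is not None:
        url += f"/versions/{version}"
    return url + suffix


def build_predict_request(url, instances, signature_name="serving_default"):
    """Prepare (but do not send) a POST request carrying the JSON predict body."""
    body = json.dumps({"signature_name": signature_name, "instances": instances})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(url, instances, timeout=5.0):
    """Send the request and return the predictions list; needs a running server."""
    request = build_predict_request(url, instances)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["predictions"]


predict_url = model_url("localhost", 8501, "linear", suffix=":predict")
status_url = model_url("localhost", 8501, "linear", version=1)
request = build_predict_request(predict_url, [10, 20, 30])
print(request.full_url)
print(status_url)
# predictions = predict(predict_url, [10, 20, 30])  # uncomment with the server up
```

Keeping request construction separate from sending makes it easy to inspect the URL and body before anything goes over the network.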

Now you can use anything to make POST requests to the model server, and even use Flask, Django, or any other framework of your choice to integrate this REST API and build an application on top of it 😎👍✌️