This repository provides a PyTorch implementation of "Semi-supervised Monaural Singing Voice Separation with a Masking Network Trained on Synthetic Mixtures" (paper).

The network learns to separate vocals from music by training on a set of mixed music samples (singing and instrumental) and an unmatched set of instrumental music. A comparison with a fully supervised method can be found here.

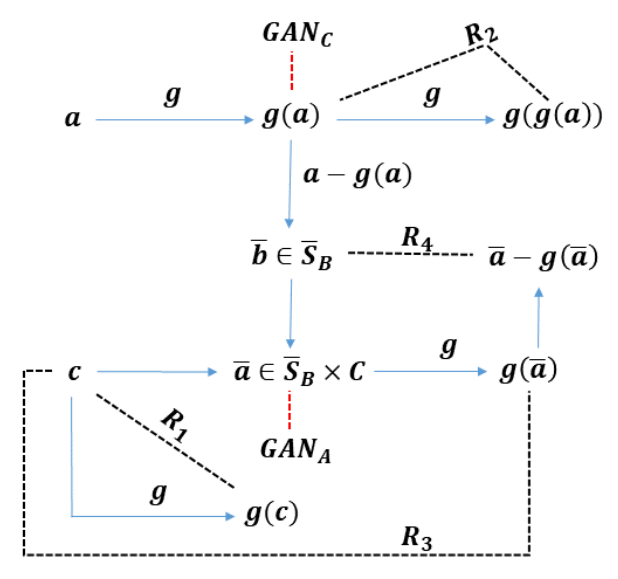

The algorithm is based on two main ideas:

- Utilizing GAN losses to align the distribution of masked samples with that of instrumental music, and the distribution of synthetic samples with that of real mixture samples.

- Reconstruction losses based on the architecture and on the superposition property of audio channels, which provide a more stable constraint than the GAN losses.
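The superposition idea can be illustrated with a small NumPy sketch. It assumes magnitude spectrograms, a mask in [0, 1] that keeps the accompaniment, and that magnitudes add linearly (only an approximation, since phase is ignored). The names `split`, `superposition_loss` and the stand-in `mask_fn` are hypothetical and do not come from this repository; the loss terms actually used in training may differ.

```python
import numpy as np


def split(mask_fn, mixture):
    """Split a magnitude spectrogram into (accompaniment, vocals) estimates.

    `mask_fn` is a hypothetical stand-in for the masking network: it maps a
    spectrogram to a mask of the same shape. The mask keeps the accompaniment
    and the vocals are the remainder, so the two estimates sum to `mixture`.
    """
    mask = np.clip(mask_fn(mixture), 0.0, 1.0)
    accompaniment = mask * mixture
    vocals = mixture - accompaniment
    return accompaniment, vocals


def superposition_loss(mask_fn, mixture, instrumental):
    """L1 reconstruction loss on a synthetic mixture (illustrative formulation).

    The vocals estimated from a real mixture are added to an unrelated
    instrumental sample. Separating this synthetic mixture again should
    return the instrumental sample and the same vocal estimate.
    """
    _, vocals_hat = split(mask_fn, mixture)
    synthetic = vocals_hat + instrumental  # assumes magnitudes add linearly
    acc_re, voc_re = split(mask_fn, synthetic)
    return np.abs(acc_re - instrumental).mean() + np.abs(voc_re - vocals_hat).mean()


# Usage with random spectrograms and a constant stand-in mask.
rng = np.random.default_rng(0)
mixture = rng.random((513, 64))
instrumental = rng.random((513, 64))
print(superposition_loss(lambda s: np.full_like(s, 0.5), mixture, instrumental))
```

A mask of all ones (everything is accompaniment) gives zero vocals and therefore a zero loss, which is why a reconstruction term like this would in practice be combined with the GAN losses rather than used alone.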

A conda environment file is available in the repository. The code depends on:

- Python 3.6+

- PyTorch 0.4.0

- Torchvision

- TensorboardX

- librosa

- tqdm

- imageio

- opencv

- musdb

- dsd100

$ git clone https://github.com/sagiebenaim/Singing.git

$ cd Singing/

To create and activate the conda environment:

$ conda env create -f environment.yml

$ source activate singing

To download the DSD100 dataset:

$ bash download.sh DSD100

To download the MUSDB18 dataset, you must request access to the dataset from this website. Then, unzip the file into the ./dataset/ folder.

To create the spectrograms for training, run the following command:

$ python dataset.py --dataset DSD100

The default spectrograms are for vocals and accompaniment separation. For drums or bass, use the --target drums or --target bass option, respectively.
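As background, a magnitude spectrogram is the absolute value of a short-time Fourier transform (STFT). The NumPy sketch below computes one; the Hann window, `n_fft` and `hop_length` values are assumptions chosen for illustration and are not necessarily what dataset.py uses (it may rely on librosa, which is listed among the dependencies).

```python
import numpy as np


def magnitude_spectrogram(signal, n_fft=1024, hop_length=256):
    """Return |STFT| with shape (n_fft // 2 + 1, n_frames).

    Frames of length n_fft are taken every hop_length samples (no padding,
    so the signal must be at least n_fft samples long), weighted by a Hann
    window, and transformed with a real FFT.
    """
    if len(signal) < n_fft:
        raise ValueError("signal is shorter than n_fft")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop_length
    frames = np.stack(
        [signal[i * hop_length:i * hop_length + n_fft] * window for i in range(n_frames)],
        axis=1,
    )
    return np.abs(np.fft.rfft(frames, axis=0))


# A 1 kHz tone sampled at 8 kHz peaks in bin 1000 * 1024 / 8000 = 128.
sr = 8000
t = np.arange(sr) / sr
spec = magnitude_spectrogram(np.sin(2 * np.pi * 1000 * t))
print(spec.shape, spec.argmax(axis=0)[0])  # → (513, 28) 128
```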

Run the training script below.

$ python train.py --config configs/vocals_new.yaml

To test on the DSD100 dataset:

$ python test.py

To separate music channels (default: vocals and accompaniment) for DSD100 using the pretrained model, run the script below. The audio files will be saved into the ./outputs directory.

$ python create_songs.py

The script contains the function create_songs, which creates vocals and accompaniment for any given song directory, in any file format supported by soundfile.
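Separating a song with a trained masking network ends with turning masked spectrograms back into audio. A common approach, sketched below in NumPy, applies the mask to the complex STFT of the mixture (thereby reusing the mixture phase) and inverts each masked STFT by weighted overlap-add. This is an assumption about the general technique, not a description of what create_songs does internally; `stft`, `istft`, `separate`, the STFT parameters and the constant `mask_fn` are hypothetical stand-ins.

```python
import numpy as np

N_FFT, HOP = 512, 128
WINDOW = np.hanning(N_FFT + 1)[:-1]  # periodic Hann window


def stft(x):
    """Complex STFT with shape (n_frames, N_FFT // 2 + 1), no padding."""
    n_frames = 1 + (len(x) - N_FFT) // HOP
    frames = np.stack([x[t * HOP:t * HOP + N_FFT] * WINDOW for t in range(n_frames)])
    return np.fft.rfft(frames, axis=1)


def istft(spec):
    """Inverse STFT by weighted overlap-add, normalised by the summed squared window."""
    frames = np.fft.irfft(spec, n=N_FFT, axis=1)
    length = N_FFT + HOP * (len(frames) - 1)
    out, norm = np.zeros(length), np.zeros(length)
    for t, frame in enumerate(frames):
        out[t * HOP:t * HOP + N_FFT] += frame * WINDOW
        norm[t * HOP:t * HOP + N_FFT] += WINDOW ** 2
    # Edge samples with (near) zero total window weight cannot be recovered; leave them at 0.
    return np.where(norm > 1e-8, out / np.maximum(norm, 1e-8), 0.0)


def separate(mixture, mask_fn):
    """Return (vocals, accompaniment) waveforms from a mask on |STFT| in [0, 1]."""
    spec = stft(mixture)
    mask = np.clip(mask_fn(np.abs(spec)), 0.0, 1.0)  # mask selects the accompaniment
    accompaniment = istft(mask * spec)
    vocals = istft((1.0 - mask) * spec)
    return vocals, accompaniment


# Usage: the two masks sum to one, so away from the edges the estimates add back to the mixture.
rng = np.random.default_rng(0)
mix = rng.standard_normal(4096)
voc, acc = separate(mix, lambda mag: np.full_like(mag, 0.3))
inner = slice(HOP, len(mix) - HOP)
print(np.allclose((voc + acc)[inner], mix[inner]))  # → True
```

Masking the complex STFT keeps the separation mixture-consistent: because the inverse STFT is linear, the vocal and accompaniment estimates always sum to the original signal.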

To separate your own songs, create a folder named ./input, move the files there, and run the script:

$ python create_songs.py --input custom

If you found this code useful, please cite the following paper:

@inproceedings{michelashvili2018singing,
  title={Semi-Supervised Monaural Singing Voice Separation With a Masking Network Trained on Synthetic Mixtures},
  author={Michael Michelashvili and Sagie Benaim and Lior Wolf},
  booktitle={ICASSP},
  year={2019},
}

This repository is based on the code from MUNIT.