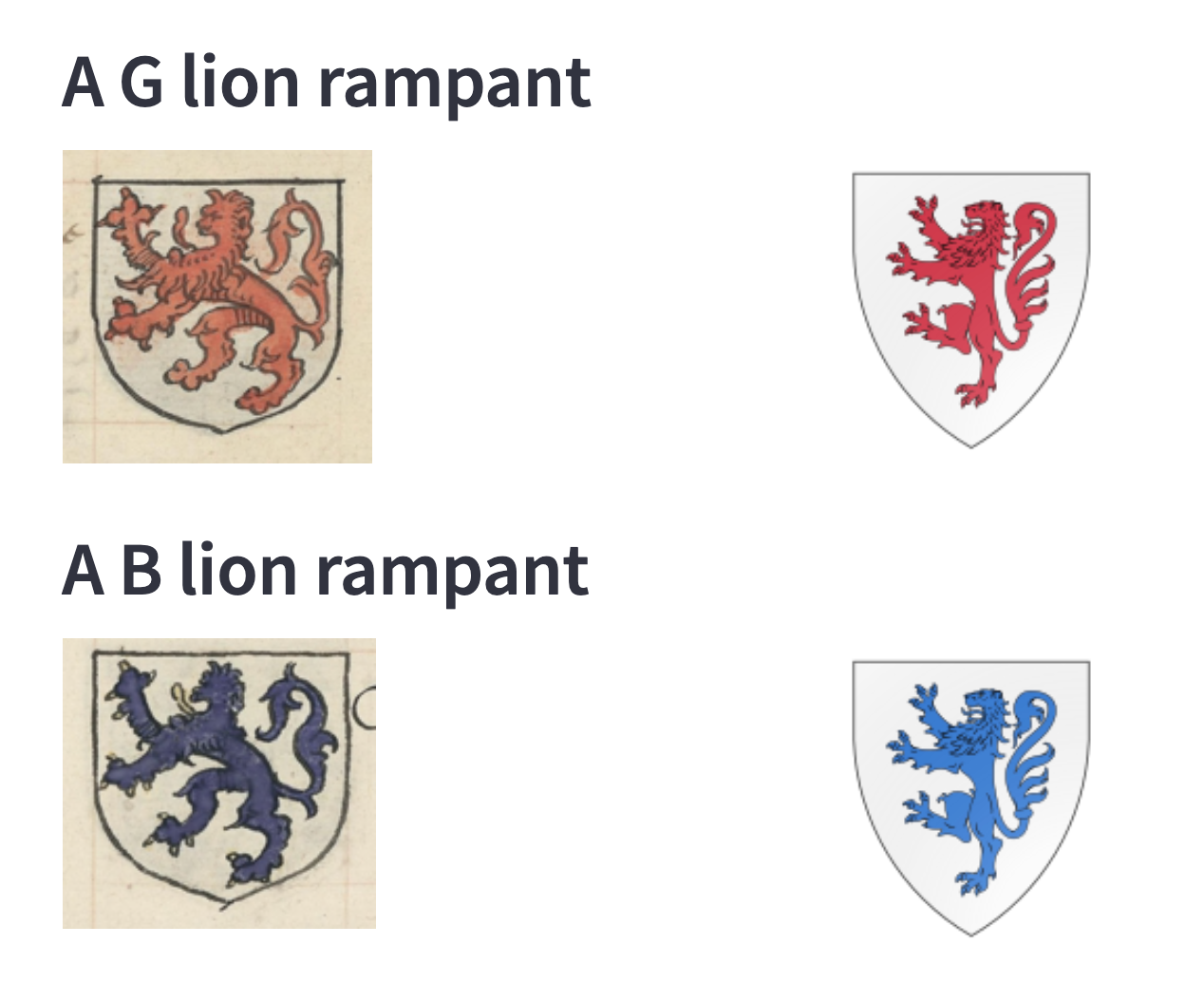

coat-of-arms

Work in progress

Setup Local Environment

1- Get the repo

git clone git@github.com:safaa-alnabulsi/coat-of-arms.git

cd coat-of-arms

2- Create virtual environment

python -m pip install -U setuptools pip

conda create --name thesis-py38 python=3.8

conda activate thesis-py38

conda install --file requirements.txt

torchdatasets: pip install automata-lib

pip install --user torchdatasets

pip install --user torchdatasets-nightly

jupyter notebook

3- to run tests

pytest

4- clone https://github.com/safaa-alnabulsi/armoria-api

npm install --save

then

npm start

5- To view the crops visually (requires a dataset in a folder named data/cropped_coas/out):

streamlit run view_crops.py

Note: to compare results from more than one experiment, pass each experiment to TensorBoard with --logdir_spec:

tensorboard --logdir_spec ExperimentA:path/to/dir,ExperimentB:another/path/to/somewhere
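When many experiments need to be compared, typing the `NAME:PATH,NAME:PATH` value by hand is error-prone. The following is a minimal sketch of building that value from a dictionary; the helper name `build_logdir_spec` is hypothetical and not part of this repository.

```python
def build_logdir_spec(experiments):
    """Join {name: path} pairs into the NAME:PATH,NAME:PATH form used by --logdir_spec."""
    parts = []
    for name, path in experiments.items():
        # Commas separate entries and the first colon separates name from path,
        # so these characters would make the resulting value ambiguous.
        if "," in name or ":" in name or "," in path:
            raise ValueError(f"unsupported character in entry {name!r}: {path!r}")
        parts.append(f"{name}:{path}")
    return ",".join(parts)


spec = build_logdir_spec({
    "ExperimentA": "path/to/dir",
    "ExperimentB": "another/path/to/somewhere",
})
print("tensorboard --logdir_spec " + spec)
```

The printed line can be pasted into the shell as-is.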

7- to generate dataset

python generate-baseline-large.py --index=40787

python add-pixels-to-caption.py --index=40787 --dataset baseline-gen-data/medium

The default index is 0

Training the baseline model

- To submit a job to run on one node on the cluster

qsub train_baseline.sh /home/space/datasets/COA/generated-data-api-large 256 1 false

- Locally:

python train_baseline.py --dataset baseline-gen-data/small --batch-size 256 --epochs 1 --resplit no --local yes

- To check the loss/accuracy while training with tensorboard locally, run the following command

tensorboard --logdir=experiments/ --bind_all

To continue training from latest saved checkpoint

python train_baseline.py --dataset ~/tub/coat-of-arms/baseline-gen-data/small --batch-size 256 --local y --resplit no --resized-images yes --epochs 5 --checkpoint yes --run-folder run-09-12-2022-10:48:20 --accuracy all --seed 1234

To continue training on real dataset - lions

python train_baseline.py --dataset ~/tub/coat-of-arms/data/cropped_coas/out --batch-size 13 --local y --resplit no --resized-images yes --epochs 50 --checkpoint yes --run-folder run-11-13-2022-15:40:39 --accuracy charge-mod-only --seed 1234 --real-data yes --caption-file real_captions_psumsq_lions.txt --baseline-model baseline-model-11-13-2022-16:09:55.pth

Please note that, starting from the seed argument, all following arguments must also be passed in order (the bash script takes more than 10 positional arguments). Check the shell script for the exact order.
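The run folders used above carry a timestamp in their names (for example run-11-13-2022-15:40:39), which appears to follow a month-day-year layout. Sorting such names alphabetically does not give chronological order across years, so a small helper can pick the most recent run to pass to --run-folder. This is a sketch under that assumption about the naming format; `parse_run_time` and `latest_run` are hypothetical helpers, not functions from this repository.

```python
from datetime import datetime

RUN_PREFIX = "run-"
# Assumed layout of the timestamp in run folder names: month-day-year-time.
STAMP_FORMAT = "%m-%d-%Y-%H:%M:%S"


def parse_run_time(run_name):
    """Turn 'run-11-13-2022-15:40:39' into a datetime object."""
    return datetime.strptime(run_name[len(RUN_PREFIX):], STAMP_FORMAT)


def latest_run(run_names):
    """Return the name of the most recent run, ignoring unrelated entries."""
    runs = [name for name in run_names if name.startswith(RUN_PREFIX)]
    return max(runs, key=parse_run_time)


names = [
    "run-09-12-2022-10:48:20",
    "run-11-13-2022-15:40:39",
    "run-06-22-2022-07:57:31",
]
print(latest_run(names))
```

In practice the list of names could come from `os.listdir("experiments")`, assuming that is where the runs are stored.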

Testing the baseline model

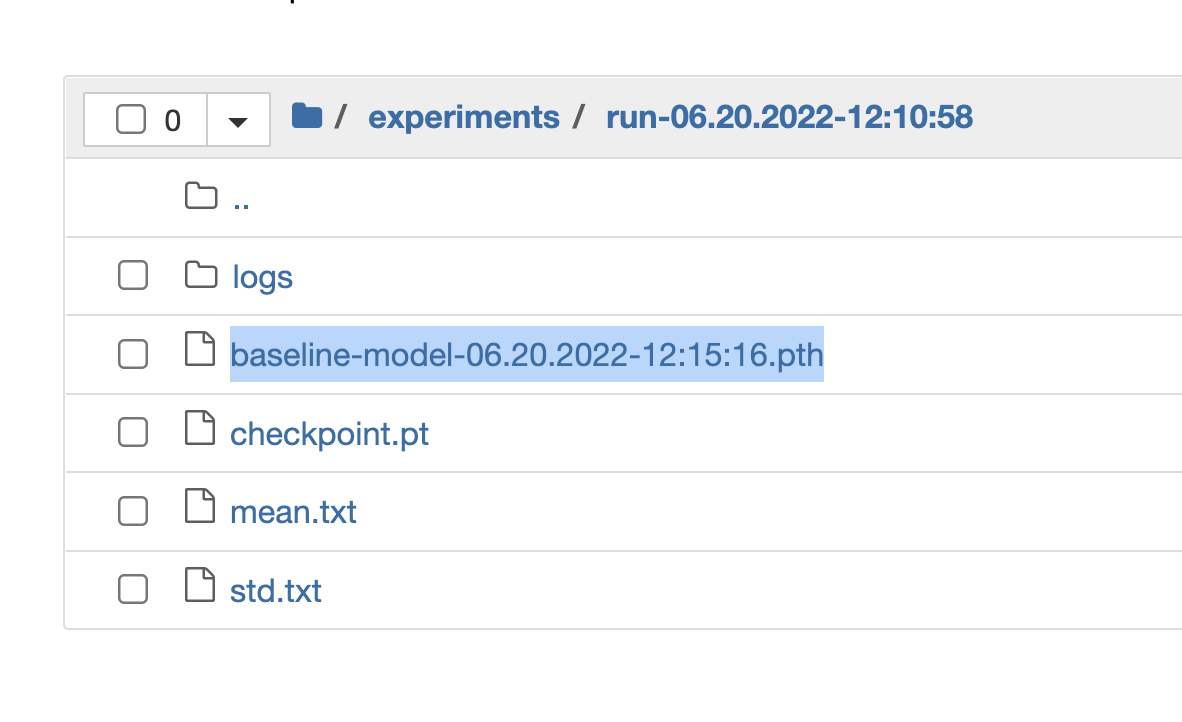

Each run of the training script is stored in the following structure of experiments:

You can use the notebook 09-baseline-model-test.ipynb to load the model and test it on both synthesized data and real data.

You can also use the test_baseline.py script to test the model:

Synthetic data

- Locally:

python test_baseline.py --dataset ~/tub/coat-of-arms/baseline-gen-data/small --batch-size 516 --local y --run-name 'run-06-22-2022-07:57:31' --model-name 'baseline-model-06-25-2022-20:54:47.pth' --real_data no --resized-images no --caption-file test_captions_psumsq.txt

- On the cluster:

qsub test_baseline.sh /home/space/datasets/COA/generated-data-api-large 'run-06-22-2022-07:57:31' 'baseline-model-06-25-2022-20:54:47.pth' 516 no no no test_captions_psumsq.txt

Real data

Note: to test on real data, pass the dataset folder to the dataset parameter and set real_data to yes.

- Locally:

python test_baseline.py --dataset /Users/salnabulsi/tub/coat-of-arms/data/cropped_coas/out --batch-size 256 --local y --run-name 'run-06-22-2022-07:57:31' --model-name 'baseline-model-06-25-2022-20:54:47.pth' --real-data yes --resized-images no --caption-file test_real_captions_psumsq.txt

- On the cluster:

qsub test_baseline.sh /home/salnabulsi/coat-of-arms/data/cropped_coas/out 'run-06-22-2022-07:57:31' 'baseline-model-06-25-2022-20:54:47.pth' 256 no yes no test_real_captions_psumsq.txt

tensorboard: Tracking training/testing results in real time

The server will start at http://localhost:6006/

- To track the metrics of loss and accuracy in real time:

tensorboard --logdir=/home/space/datasets/COA/experiments --bind_all

Check the port and then do ssh forwarding:

ssh -L 6012:cluster:6012 <your-email> -i ~/.ssh/id_rsa

Navigate to http://localhost:6012/ in your browser and check the job logs in real time.

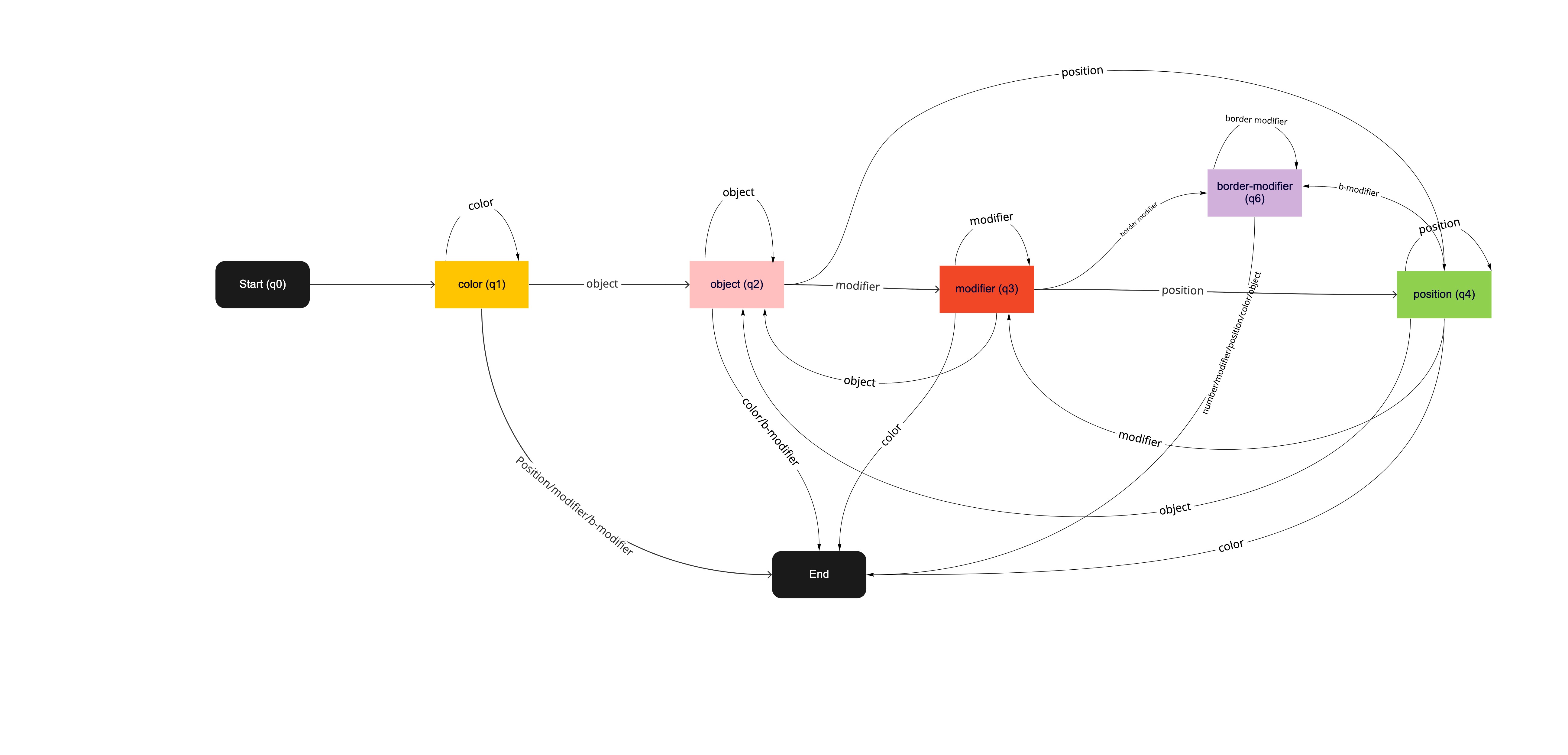

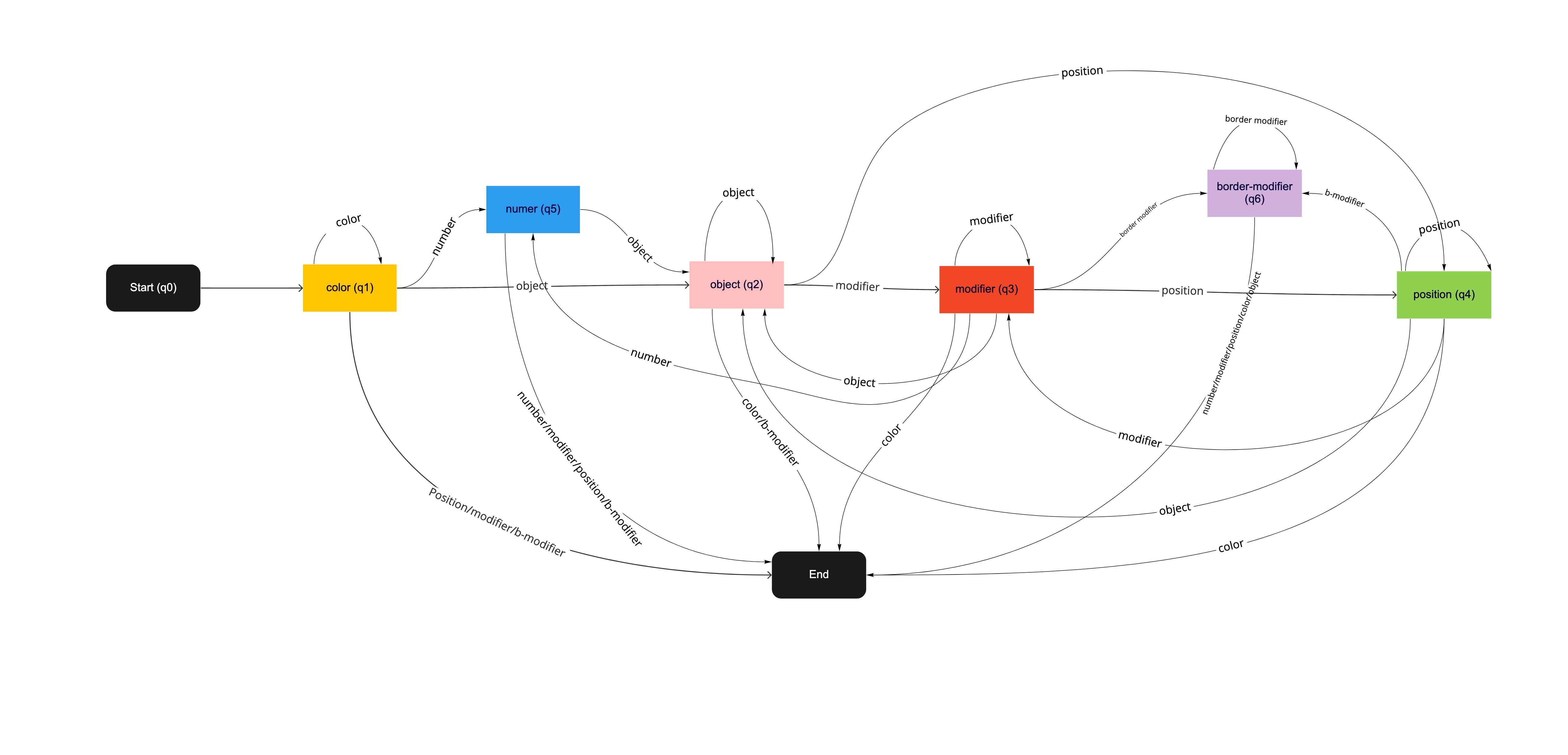

The Automata

The visual representation of the implemented automata in LabelCheckerAutomata

Helper scripts

Dataset generation script

This script generates a dataset from permutations. It sends requests to the Armoria API and creates a caption.txt file.
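As an illustration of generating from permutations with a starting index, here is a minimal sketch. It assumes that --index selects the permutation to start from, which this README does not state explicitly. The attribute lists and the function `permutations_from` are hypothetical and do not reflect the actual values or code in generate-baseline-large.py.

```python
from itertools import islice, product

# Hypothetical attribute values, for illustration only.
SHIELD_COLORS = ["A", "B"]
CHARGES = ["lion", "eagle"]
CHARGE_COLORS = ["O", "G"]


def permutations_from(start_index=0):
    """Yield (index, combination) pairs, skipping everything before start_index."""
    combos = product(SHIELD_COLORS, CHARGES, CHARGE_COLORS)
    remaining = islice(combos, start_index, None)
    for index, combo in enumerate(remaining, start=start_index):
        yield index, combo


# Start at index 6 instead of 0, e.g. after an interrupted run.
for index, (shield, charge, color) in permutations_from(6):
    print(index, shield, charge, color)
```

Because `product` always yields combinations in the same order, a given index refers to the same combination on every run, which is what makes restarting from an index possible in this sketch.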

python generate-baseline-large.py --index=40787

Generate script

This script generates two values, psum and psum_sq, for each image in the given dataset and stores the result in a new text file, captions-psumsq.txt.

- psum: total sum of the pixel values in the image

- psum_sq: sum of the squared pixel values in the image
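The two values can be sketched in a few lines of plain Python. The README does not say what they are used for; a common use of such sums is to derive the mean and standard deviation of pixel values for normalization, and the second function below shows that calculation as an assumption. Both function names are hypothetical, and the image is represented as a nested list of numbers rather than a real image file.

```python
import math


def pixel_sums(pixels):
    """Return (psum, psum_sq, count) for a 2D list of pixel values."""
    psum = 0.0
    psum_sq = 0.0
    count = 0
    for row in pixels:
        for value in row:
            psum += value
            psum_sq += value * value
            count += 1
    return psum, psum_sq, count


def mean_and_std(psum, psum_sq, count):
    """Derive mean and standard deviation from the accumulated sums."""
    mean = psum / count
    # Var[x] = E[x^2] - (E[x])^2; clamp tiny negative rounding errors to zero.
    variance = max(psum_sq / count - mean ** 2, 0.0)
    return mean, math.sqrt(variance)


image = [[0.0, 1.0], [1.0, 0.0]]
psum, psum_sq, count = pixel_sums(image)
mean, std = mean_and_std(psum, psum_sq, count)
print(psum, psum_sq, mean, std)
```

Summing psum, psum_sq, and the pixel counts over all images and applying the same formula would give dataset-wide statistics without loading every image at once.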

python add-pixels-to-caption.py --index=40787 --dataset baseline-gen-data/medium

Resizing images script

This script resizes the images in the given folder to 100x100 and stores them in res_images. It takes no parameters because it is meant for one-time use.

- Locally:

python resize-images.py

- On the cluster:

qsub resize-images.sh

References:

- automata-lib: https://pypi.org/project/automata-lib/#class-dfafa

- Armoria API: https://github.com/Azgaar/armoria-api

- Early Stopping for PyTorch: https://github.com/Bjarten/early-stopping-pytorch

- torchdatasets: https://github.com/szymonmaszke/torchdatasets

- Torch data-loader: https://www.kaggle.com/mdteach/torch-data-loader-flicker-8k

- Tensorboard: https://pytorch.org/tutorials/recipes/recipes/tensorboard_with_pytorch.html

- baseline model explained: https://towardsdatascience.com/baseline-models-your-guide-for-model-building-1ec3aa244b8d

- Transfer Learning and Other Tricks: https://www.oreilly.com/library/view/programming-pytorch-for/9781492045342/ch04.html

- Reproducibility: https://gist.github.com/Guitaricet/28fbb2a753b1bb888ef0b2731c03c031