Master's thesis repository

- BBC text categorization

- Graph Convolutional Network

- TextGCN

- DocumentGCN

- Results

- Repository description

Text documents are one of the richest sources of data for businesses.

We’ll use a public dataset from the BBC comprising 2225 articles, each labeled with one of 5 categories: business, entertainment, politics, sport or tech.

The dataset is broken into 1490 records for training and 735 for testing. The goal will be to build a system that can accurately classify previously unseen news articles into the right category.

The competition is evaluated using accuracy as the metric.
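As a quick illustration of the metric, accuracy is the fraction of articles whose predicted category matches the true label. The snippet below is a minimal sketch; the function name and the toy labels are made up for illustration and are not taken from the repository.

```python
def accuracy(y_true, y_pred):
    """Return the fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy of an empty label list")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy labels drawn from the five BBC categories (made up for illustration).
y_true = ["business", "sport", "tech", "politics", "entertainment"]
y_pred = ["business", "sport", "tech", "sport", "entertainment"]
print(accuracy(y_true, y_pred))  # 4 of the 5 predictions are correct
```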

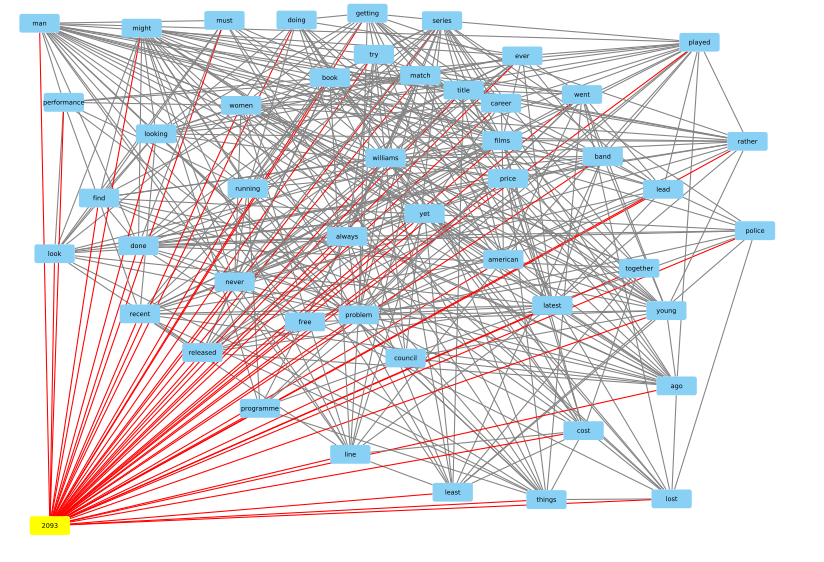

TextGCN was developed by Liang Yao, Chengsheng Mao and Yuan Luo in "Graph Convolutional Networks for Text Classification". It is a semi-supervised node classification method on a heterogeneous graph with two types of nodes: document nodes and token (word) nodes. An edge between token_i and token_j is weighted by their pointwise mutual information, whereas an edge between a document and a word that occurs in it is weighted by tf-idf. The feature matrix for this graph is the identity matrix. A fragment of this graph for a single document and a subset of its words is presented below.

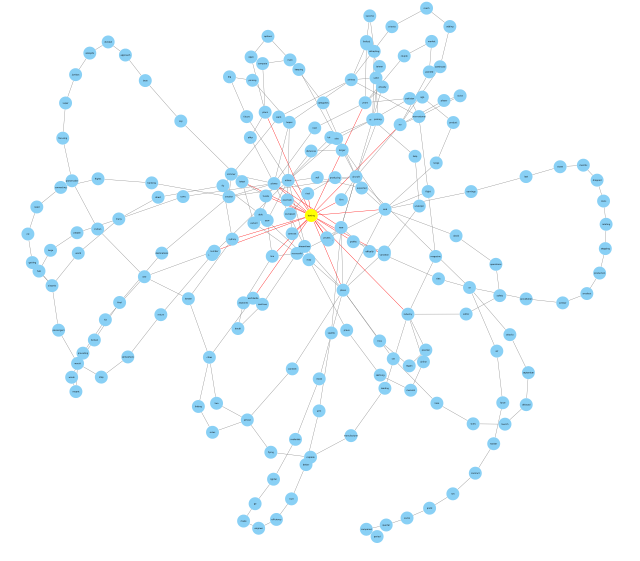
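The two kinds of edge weights described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the code from TextGCN/text2graph.ipynb: the function names, the window size of 3, the toy documents and the exact tf-idf variant (raw term frequency times log(N / df)) are all assumptions. Following the TextGCN paper, only word pairs with positive PMI are kept as edges.

```python
import math
from collections import Counter
from itertools import combinations


def sliding_windows(tokens, size):
    """Yield every window of `size` consecutive tokens (the whole doc if it is shorter)."""
    if len(tokens) <= size:
        yield tokens
        return
    for start in range(len(tokens) - size + 1):
        yield tokens[start:start + size]


def pmi_edges(docs, window_size=3):
    """Word-word edges weighted by PMI, estimated from sliding windows over the corpus."""
    word_count = Counter()  # number of windows containing a word
    pair_count = Counter()  # number of windows containing both words of a pair
    n_windows = 0
    for tokens in docs:
        for window in sliding_windows(tokens, window_size):
            n_windows += 1
            unique = sorted(set(window))
            word_count.update(unique)
            pair_count.update(combinations(unique, 2))
    edges = {}
    for (wi, wj), n_ij in pair_count.items():
        # PMI = log( p(i, j) / (p(i) * p(j)) ) with probabilities as window fractions.
        pmi = math.log(n_ij * n_windows / (word_count[wi] * word_count[wj]))
        if pmi > 0:  # keep positive associations only
            edges[(wi, wj)] = pmi
    return edges


def tfidf_edges(docs):
    """Document-word edges weighted by tf * log(N / df); words in every doc get weight 0."""
    n_docs = len(docs)
    doc_freq = Counter(word for tokens in docs for word in set(tokens))
    edges = {}
    for doc_id, tokens in enumerate(docs):
        for word, tf in Counter(tokens).items():
            edges[(doc_id, word)] = tf * math.log(n_docs / doc_freq[word])
    return edges


# Toy corpus (made up); each document is already tokenized.
docs = [
    ["shares", "rise", "as", "market", "rallies"],
    ["team", "wins", "cup", "final"],
    ["market", "shares", "fall"],
]
word_word = pmi_edges(docs, window_size=3)
doc_word = tfidf_edges(docs)
print(len(word_word), "word-word edges,", len(doc_word), "document-word edges")
```

With an identity feature matrix, every document and word node simply gets a one-hot vector, so all the information the model sees comes from these edge weights.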

DocumentGCN is a supervised graph classification method. First, every document is represented as a separate graph. Each graph is built from the set of unique words in a given document, which are connected based on co-occurrence. The word2vec representation of a word is assigned as the attribute of its node. The neural network considered consists of a few GCN layers followed by global pooling of the feature matrix and a dense layer. The figure below shows a graph representation of a single document from the corpus.
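The pipeline described above can be sketched in a few lines of plain Python. This is a rough illustration, not the code from DocumentGCN/text2graphs.ipynb or DocumentGCN/DocumentGCN.ipynb. Several details are assumptions: co-occurrence is taken to mean "within a window of 2 positions", the 2-dimensional node features stand in for the 300-dimensional word2vec vectors, the weight matrix is untrained, mean pooling is one possible choice of global pooling, and the final dense layer and the training loop are omitted.

```python
import math


def cooccurrence_graph(tokens, window=2):
    """Nodes are unique words; an edge links words at most `window` positions apart."""
    nodes = sorted(set(tokens))
    index = {word: i for i, word in enumerate(nodes)}
    n = len(nodes)
    adj = [[0.0] * n for _ in range(n)]
    for pos, word in enumerate(tokens):
        for other in tokens[pos + 1:pos + 1 + window]:
            if other != word:
                i, j = index[word], index[other]
                adj[i][j] = adj[j][i] = 1.0
    return nodes, adj


def normalize(adj):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 used by GCN layers."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(a_norm, features, weights):
    """One propagation step: ReLU(A_norm @ X @ W)."""
    hidden = matmul(matmul(a_norm, features), weights)
    return [[max(0.0, v) for v in row] for row in hidden]


def mean_pool(features):
    """Global mean pooling: average node features into one graph-level vector."""
    n = len(features)
    return [sum(col) / n for col in zip(*features)]


tokens = "the bank cut interest rates as the bank expected".split()
nodes, adj = cooccurrence_graph(tokens, window=2)
# Hypothetical 2-d node features standing in for word2vec vectors.
features = [[len(word) / 10.0, 1.0] for word in nodes]
# Hypothetical untrained 2x2 weight matrix.
weights = [[1.0, -1.0], [0.5, 0.5]]
graph_vector = mean_pool(gcn_layer(normalize(adj), features, weights))
print(nodes)
print(graph_vector)  # this vector would be fed to the dense classification layer
```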

| Model | Accuracy (%) | Learning Time (s) |
|---|---|---|
| DocumentGCN | 98.6 | 195.95 |
| TextGCN | 48.4 | 336.73 |
| BERT | 97.3 | 2045.0 |
| BiLSTM | 95.0 | 3266.0 |
| NaiveBayes | 96.4 | 0.0038 |

Steps for reproducing the results:

- Run data/load_raw_data.ipynb. It creates a feather file with all the documents in a single dataframe.

- TextGCN:

  - Prepare the heterogeneous word-document graph for the whole corpus with TextGCN/text2graph.ipynb.

  - Train the model and make predictions with TextGCN/TextGCN.ipynb.

- DocumentGCN:

  - Run `wget -c "https://s3.amazonaws.com/dl4j-distribution/GoogleNews-vectors-negative300.bin.gz" -P ./pretrain_models`, or download the GoogleNews-vectors-negative300.bin.gz file yourself and place it in the pretrain_models folder.

  - Either remove everything from data/DocumentGCN_Graphs and prepare the graph for every document in the corpus with DocumentGCN/text2graphs.ipynb, or run `unzip data/DocumentGCN_Graphs/txt_features1843_04022021.zip -d ./data/DocumentGCN_Graphs/` to decompress the example data.

  - Train the model and make predictions with DocumentGCN/DocumentGCN.ipynb.

- Non-graph-oriented models are contained in the NB&DNN folder:

  - Run `unzip pretrain_models/simple_word2vec.zip -d pretrain_models/`.

  - Run the code in the Jupyter notebooks:

    - tfidfNB: Naive Bayes with tf-idf feature vectors

    - BiLSTM_W2V100: a simple bidirectional LSTM model with word2vec features
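To give a feel for the simplest baseline listed above, here is a from-scratch sketch of a multinomial Naive Bayes classifier on tf-idf weights. It is not the code from the tfidfNB notebook, which may well rely on a library instead. The function names, the smoothed idf formula, the Laplace smoothing value and the toy documents are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict


def fit_idf(docs):
    """Smoothed inverse document frequency for every word seen in training."""
    n_docs = len(docs)
    doc_freq = Counter(word for tokens in docs for word in set(tokens))
    return {word: math.log((1 + n_docs) / (1 + df)) + 1.0 for word, df in doc_freq.items()}


def tfidf(tokens, idf):
    """Tf-idf vector of one document as a dict; words unseen in training are dropped."""
    return {word: tf * idf[word] for word, tf in Counter(tokens).items() if word in idf}


def train_nb(docs, labels, alpha=1.0):
    """Multinomial Naive Bayes on tf-idf weights with Laplace smoothing `alpha`."""
    idf = fit_idf(docs)
    weight_sum = defaultdict(Counter)  # class -> word -> summed tf-idf weight
    for tokens, label in zip(docs, labels):
        weight_sum[label].update(tfidf(tokens, idf))
    model = {"idf": idf, "log_prior": {}, "log_like": {}}
    for label, n_class in Counter(labels).items():
        total = sum(weight_sum[label].values()) + alpha * len(idf)
        model["log_prior"][label] = math.log(n_class / len(docs))
        model["log_like"][label] = {
            word: math.log((weight_sum[label][word] + alpha) / total) for word in idf
        }
    return model


def predict_nb(model, tokens):
    """Return the class with the highest log-posterior score."""
    vector = tfidf(tokens, model["idf"])
    scores = {
        label: prior + sum(w * model["log_like"][label][word] for word, w in vector.items())
        for label, prior in model["log_prior"].items()
    }
    return max(scores, key=scores.get)


# Toy training data (made up); real input would be the tokenized BBC articles.
train_docs = [
    "shares market profit bank".split(),
    "market economy bank growth".split(),
    "match goal team win".split(),
    "team cup final win".split(),
]
train_labels = ["business", "business", "sport", "sport"]
model = train_nb(train_docs, train_labels)
print(predict_nb(model, "bank profit growth".split()))
print(predict_nb(model, "team goal final".split()))
```

Training such a model only requires counting, which is consistent with the very short learning time reported for NaiveBayes in the results table.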

Created by Robert Benke - feel free to contact me!