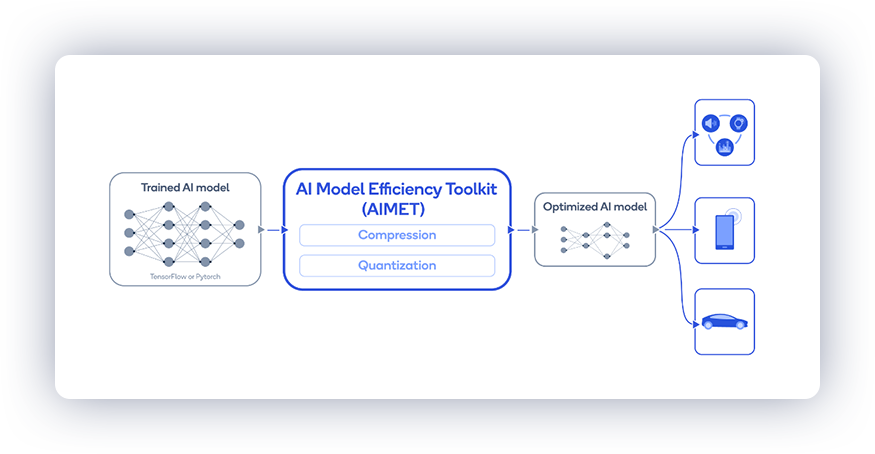

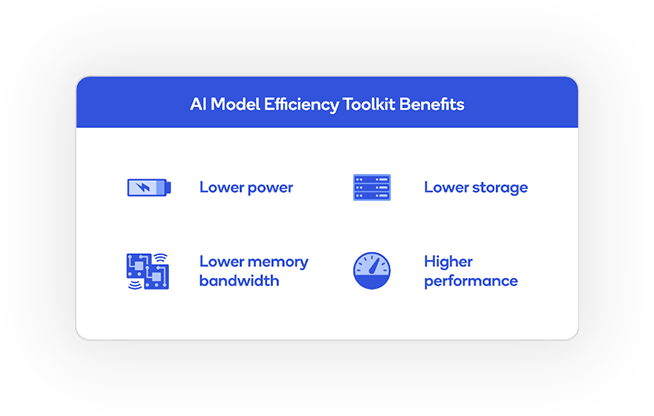

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. It provides features that have been proven to improve run-time performance of deep learning neural network models with lower compute and memory requirements and minimal impact to task accuracy.

AIMET is designed to work with PyTorch and TensorFlow models.

- Supports advanced quantization techniques: Inference using integer runtimes is significantly faster than using floating-point runtimes. For example, models run 5x-15x faster on the Qualcomm Hexagon DSP than on the Qualcomm Kryo CPU. In addition, 8-bit precision models have a 4x smaller footprint than 32-bit precision models. However, maintaining model accuracy when quantizing ML models is often challenging. AIMET addresses this with novel techniques like Data-Free Quantization that provide state-of-the-art INT8 results on several popular models.

- Supports advanced model compression techniques that enable models to run faster at inference time and require less memory.

- AIMET is designed to automate optimization of neural networks, avoiding time-consuming and tedious manual tweaking. AIMET also provides user-friendly APIs that allow users to make calls directly from their TensorFlow or PyTorch pipelines.
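
To make the 4x footprint claim concrete, here is a minimal sketch of asymmetric 8-bit quantization in plain Python. It is an illustration only and does not use AIMET's API; the function names `quantize_uint8` and `dequantize` and the sample weights are hypothetical.

```python
from array import array

def quantize_uint8(values):
    """Map floats to 8-bit integers with an asymmetric (affine) scheme."""
    # The representable range is widened to include zero, so zero maps to an exact integer.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if every value is zero
    zero_point = round(-lo / scale)
    q = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return array("B", q), scale, zero_point

def dequantize(q, scale, zero_point):
    """Recover approximate float values from the 8-bit codes."""
    return [(int(x) - zero_point) * scale for x in q]

weights = [-1.0, -0.5, 0.0, 0.25, 0.75, 1.5]   # hypothetical weight values
fp32 = array("f", weights)                      # 4 bytes per element
q, scale, zero_point = quantize_uint8(weights)  # 1 byte per element
restored = dequantize(q, scale, zero_point)

footprint_ratio = (fp32.itemsize * len(fp32)) / (q.itemsize * len(q))
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(footprint_ratio, max_error)
```

The storage ratio comes purely from the element width (4 bytes versus 1 byte), while the rounding error per value stays within half a quantization step. The harder problem, which the techniques listed in this README target, is keeping task accuracy when every layer incurs that rounding error.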

Please visit AIMET on GitHub Pages for more details.

- Cross-Layer Equalization: Equalize weight tensors to reduce amplitude variation across channels

- Bias Correction: Corrects shift in layer outputs introduced due to quantization

- Quantization Simulation: Simulate on-target quantized inference accuracy

- Fine-tuning: Use quantization simulation to train the model further to improve accuracy

- Spatial SVD: Tensor decomposition technique to split a large layer into two smaller ones

- Channel Pruning: Removes redundant input channels from a layer and reconstructs layer weights

- Per-layer compression-ratio selection: Automatically selects how much to compress each layer in the model

- Weight ranges: Visually inspect whether a model is a candidate for the Cross-Layer Equalization technique, and see the effect after applying it

- Per-layer compression sensitivity: Visually get feedback about the sensitivity of any given layer in the model to compression
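
As an illustration of the Cross-Layer Equalization item above, the sketch below rescales the hidden channels of two fully connected layers joined by a ReLU so that each channel has the same weight range in both layers. It is a simplified pure-Python sketch, not AIMET's implementation: the helper names and weight values are hypothetical, and it assumes every channel has a nonzero weight range.

```python
import math

def relu(v):
    return [max(0.0, x) for x in v]

def linear(w, b, x):
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]

def forward(w1, b1, w2, b2, x):
    return linear(w2, b2, relu(linear(w1, b1, x)))

def equalize(w1, b1, w2):
    """Rescale hidden channel i so both layers see the same weight range for it."""
    w1_eq, b1_eq = [], []
    w2_eq = [list(row) for row in w2]
    for i, row in enumerate(w1):
        r1 = max(abs(w) for w in row)    # range of output channel i in layer 1
        r2 = max(abs(r[i]) for r in w2)  # range of input channel i in layer 2
        s = math.sqrt(r1 / r2)           # assumes r1 > 0 and r2 > 0
        w1_eq.append([w / s for w in row])
        b1_eq.append(b1[i] / s)
        for r in w2_eq:
            r[i] *= s
    return w1_eq, b1_eq, w2_eq

# Hypothetical weights with very different ranges per channel.
w1 = [[8.0, -4.0], [0.1, 0.05]]
b1 = [1.0, -0.02]
w2 = [[0.02, 3.0], [-0.01, 5.0]]
b2 = [0.5, -0.5]
x = [0.3, 0.7]

w1_eq, b1_eq, w2_eq = equalize(w1, b1, w2)
before = forward(w1, b1, w2, b2, x)
after = forward(w1_eq, b1_eq, w2_eq, b2, x)
print(before, after)
```

Because ReLU satisfies relu(s·x) = s·relu(x) for positive s, the rescaled network computes the same function, while the per-channel ranges become much more uniform and therefore easier to cover with a single quantization grid.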

AIMET can quantize an existing 32-bit floating-point model to an 8-bit fixed-point model without sacrificing much accuracy and without model fine-tuning. As an example of the accuracy maintained, the DFQ method applied to several popular networks, such as MobileNet-v2 and ResNet-50, results in less than 0.9% loss in accuracy all the way down to 8-bit quantization, in an automated way and without any training data.

| Models | FP32 | INT8 Simulation |
|---|---|---|
| MobileNet v2 (top1) | 71.72% | 71.08% |
| ResNet 50 (top1) | 76.05% | 75.45% |
| DeepLab v3 (mIOU) | 72.65% | 71.91% |
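
One ingredient behind results like these is bias correction: quantizing weights shifts the mean of a layer's output, and that shift can be folded back into the bias. The sketch below shows the idea for a single linear layer in plain Python. It is an assumption-laden illustration rather than AIMET's implementation: it estimates the input mean from a few hypothetical sample inputs, whereas a data-free method would have to estimate that mean without data, and it uses 4-bit weights so the shift is easy to see.

```python
def quantize_dequantize(w, bits=4):
    """Symmetric per-tensor quantization followed by dequantization."""
    levels = 2 ** (bits - 1) - 1
    scale = max(abs(v) for row in w for v in row) / levels
    return [[round(v / scale) * scale for v in row] for row in w]

def linear(w, b, x):
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]

def mean_vector(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

# Hypothetical layer parameters and sample inputs.
w = [[0.9, -0.33, 0.12], [0.05, 0.61, -0.27]]
b = [0.1, -0.2]
inputs = [[1.0, 2.0, 0.5], [0.8, 1.5, 0.7], [1.2, 2.5, 0.3], [1.0, 1.8, 0.9]]

w_q = quantize_dequantize(w)
mean_x = mean_vector(inputs)

# Expected output shift caused by the weight error: (W_q - W) @ E[x].
shift = [sum((q - f) * m for q, f, m in zip(q_row, f_row, mean_x))
         for q_row, f_row in zip(w_q, w)]
b_corrected = [bi - si for bi, si in zip(b, shift)]

def mean_output_error(bias):
    """Mean difference between quantized-weight and full-precision outputs."""
    ref = mean_vector([linear(w, b, x) for x in inputs])
    out = mean_vector([linear(w_q, bias, x) for x in inputs])
    return [o - r for o, r in zip(out, ref)]

error_before = mean_output_error(b)
error_after = mean_output_error(b_corrected)
print(error_before, error_after)
```

For a linear layer, the corrected bias removes the mean output error on these inputs up to floating-point rounding. Per-sample errors remain; only the systematic shift is cancelled.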

AIMET can also significantly compress models. For popular models, such as ResNet-50 and ResNet-18, compression with spatial SVD plus channel pruning achieves a 50% MAC (multiply-accumulate) reduction while retaining accuracy within approximately 1% of the original uncompressed model.

| Models | Uncompressed model | 50% Compressed model |
|---|---|---|
| ResNet18 (top1) | 69.76% | 68.56% |
| ResNet 50 (top1) | 76.05% | 75.75% |
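
To show where a 50% MAC reduction can come from, the following sketch counts multiply-accumulates for a convolution before and after a spatial decomposition into a vertical (k×1) and a horizontal (1×k) convolution with a reduced intermediate rank. The layer shape, the function names, and the assumption of stride 1 with an unchanged feature-map size are all illustrative; this is not AIMET's API or its rank-selection procedure.

```python
import math

def conv_macs(c_in, c_out, k_h, k_w, out_h, out_w):
    """Multiply-accumulates of a dense convolution (stride 1 assumed)."""
    return c_in * c_out * k_h * k_w * out_h * out_w

def spatial_svd_macs(c_in, c_out, k_h, k_w, out_h, out_w, rank):
    """MACs after splitting into a (k_h x 1) conv to `rank` channels and a (1 x k_w) conv."""
    vertical = conv_macs(c_in, rank, k_h, 1, out_h, out_w)
    horizontal = conv_macs(rank, c_out, 1, k_w, out_h, out_w)
    return vertical + horizontal

def rank_for_ratio(c_in, c_out, k_h, k_w, ratio):
    """Largest rank whose decomposed cost stays within `ratio` of the original."""
    full = c_in * c_out * k_h * k_w
    per_rank = c_in * k_h + c_out * k_w
    return max(1, math.floor(ratio * full / per_rank))

# Hypothetical layer: 3x3 convolution, 64 -> 64 channels, 56x56 output.
shape = dict(c_in=64, c_out=64, k_h=3, k_w=3, out_h=56, out_w=56)
rank = rank_for_ratio(64, 64, 3, 3, ratio=0.5)
original = conv_macs(**shape)
compressed = spatial_svd_macs(**shape, rank=rank)
print(rank, original, compressed, compressed / original)
```

The count only describes compute cost. How much accuracy survives at a given rank depends on the layer, which is why a per-layer compression-ratio selection step matters.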

Thanks for your interest in contributing to AIMET! Please read our Contributions Page for more information on contributing features or bug fixes. We look forward to your participation!

AIMET aims to be a community-driven project maintained by Qualcomm Innovation Center, Inc.

AIMET is licensed under the BSD 3-clause “New” or “Revised” License. Check out the LICENSE for more details.