TwitterTrends

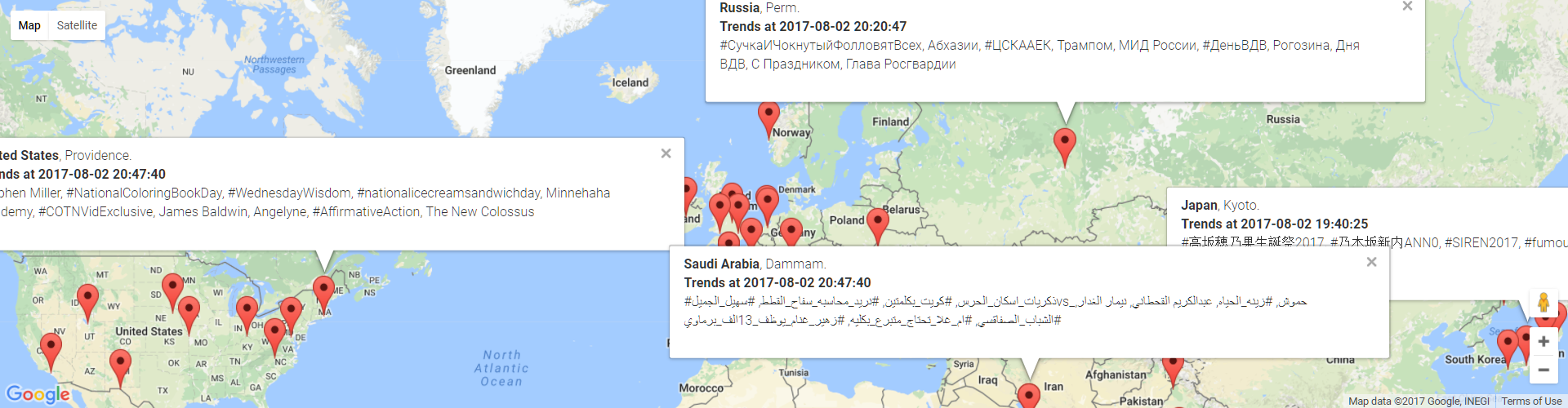

This streaming project obtains current Twitter's trending topic and show them in Google Maps like this:

The project consists of the following four processes, each implemented as a Jupyter Notebook. Here is a brief description of each; we can find detailed information inside each notebook:

- TwitterTrends-1-TrendsToFile (Scala): It obtains trending topics with two twitter4j methods, getAvailableTrends (returns the locations that have trending topics) and getPlaceTrends (returns the top 10 trending topics for a specific location), and saves all the information to a file.

- TwitterTrends-2-FileToKafka (Scala): It streams trending topics from a file to a Kafka topic using Spark Structured Streaming.

- TwitterTrends-3-KafkaToMongoDB (Scala): It streams trending topics from a Kafka topic to MongoDB using Spark Structured Streaming.

- TwitterTrends-4-MongoDBtoGMaps (Python): It visualizes the trending topics stored in MongoDB on Google Maps.
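The last step essentially turns the documents read from MongoDB into the two lists that a gmaps marker layer needs: one (latitude, longitude) tuple and one info-box string per location. The sketch below is a hypothetical helper, not the notebook's code; it assumes each document has `name`, `lat`, `lng` and `trends` fields, which may not match the real collection schema.

```python
import html
from typing import Iterable, List, Mapping, Tuple


def to_marker_data(
    docs: Iterable[Mapping], top_n: int = 10
) -> Tuple[List[Tuple[float, float]], List[str]]:
    """Split trend documents into gmaps marker locations and info-box HTML.

    Hypothetical document shape (adapt it to the real collection):
        {"name": "Madrid", "lat": 40.4, "lng": -3.7, "trends": ["#Tag", ...]}
    """
    locations: List[Tuple[float, float]] = []
    info_boxes: List[str] = []
    for doc in docs:
        # gmaps expects (latitude, longitude) tuples
        locations.append((float(doc["lat"]), float(doc["lng"])))
        # Escape user-generated trend text before embedding it in HTML
        items = "".join(
            f"<li>{html.escape(t)}</li>" for t in doc["trends"][:top_n]
        )
        info_boxes.append(f"<b>{html.escape(doc['name'])}</b><ol>{items}</ol>")
    return locations, info_boxes
```

In a notebook, after `gmaps.configure(api_key=...)`, the two lists could be passed to `gmaps.marker_layer(locations, info_box_content=info_boxes)` and the layer added to a `gmaps.figure()` with `add_layer`. Keeping the conversion in a plain function makes it testable without a Google Maps API key.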

Requirements

- Oracle JDK 1.8 (64 bit).

- Scala 2.11.

- Apache Spark 2.2.0.

- Apache Kafka 0.11.0.0 for Scala 2.11.

- Our own Twitter API tokens (we need to generate them).

- Python 3 and the gmaps Jupyter plugin for visualizing the trending topics.

Starting Kafka and MongoDB servers

To run TwitterTrends-2-FileToKafka we need to start the Kafka server; to run TwitterTrends-3-KafkaToMongoDB we need both the Kafka server and the MongoDB server.

Here are some indications on how to do it:

Starting Kafka server

First we need to add the Kafka binaries directory to our system PATH and then execute the following commands from the Kafka directory:

- Start a ZooKeeper instance:

zookeeper-server-start.bat config/zookeeper.properties

- Start the Kafka server:

kafka-server-start.bat config/server.properties

- Create a topic (first run only):

kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic tweeterTopic

A detailed explanation, as well as the equivalent Unix commands (the .sh scripts), can be found in the Kafka Quickstart.

Starting MongoDB server

We need to add the MongoDB binaries directory to our system PATH and run mongod from a command line.

Once it has started, if we want a GUI for MongoDB, we can use MongoDB Compass.

Some things to consider

- Jupyter Scala: Scala kernel for Jupyter.

Using Jupyter is not required (we can use our favourite Scala development environment), but I prefer it because it lets me explain the project in a more interactive way.

If we plan to use Jupyter Scala, we have to keep in mind that the way to manage dependencies (adding external libraries) in a Jupyter Scala notebook differs from the way it is done in a standard Scala IDE/IntelliJ IDEA project built with SBT (Simple Build Tool). For example:

In a standard Scala IDE/IntelliJ IDEA project with SBT, we manage libraries by adding the following line to our build.sbt file:

libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.2.0"

In a Jupyter Scala notebook, we manage libraries by executing the following statement in a notebook cell:

import $ivy.`org.apache.spark::spark-sql:2.2.0`

In either case, we then import the libraries as we normally do, for example:

import org.apache.spark.sql.SparkSession
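Both notations carry the same coordinates: sbt's `%%` (append the Scala binary version, e.g. `_2.11`, to the artifact name) corresponds to `::` in the `$ivy` import used by Jupyter Scala, and `%` corresponds to `:`. As an illustration only, the hypothetical helper below translates a simple `libraryDependencies` line into the equivalent `$ivy` import; it assumes the plain `"group" %% "artifact" % "version"` or `"group" % "artifact" % "version"` forms and ignores extras such as configuration scopes.

```python
import re

# Matches "group" %% "artifact" % "version" (or a single % for non-Scala artifacts)
_SBT_DEP = re.compile(
    r'"(?P<group>[^"]+)"\s*(?P<sep>%%?)\s*"(?P<artifact>[^"]+)"\s*%\s*"(?P<version>[^"]+)"'
)


def sbt_to_ivy_import(line: str) -> str:
    """Translate a simple sbt dependency into a Jupyter Scala $ivy import."""
    m = _SBT_DEP.search(line)
    if m is None:
        raise ValueError(f"unsupported sbt dependency: {line!r}")
    # %% (Scala-versioned artifact) maps to '::', a single % maps to ':'
    sep = "::" if m["sep"] == "%%" else ":"
    return f"import $ivy.`{m['group']}{sep}{m['artifact']}:{m['version']}`"
```

For the Spark SQL dependency above, `sbt_to_ivy_import('libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.2.0"')` returns exactly the `import $ivy.`org.apache.spark::spark-sql:2.2.0`` statement used in the notebook.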

- Spark's logging level: by default, when creating the Spark session, Spark shows every log message from the INFO level upwards. To change this, we can set our desired logging level.

To do this, we copy the log4j.properties.template file included in our spark/conf folder to spark/conf/log4j.properties and change the following line:

log4j.rootCategory=INFO, console

to

log4j.rootCategory=WARN, console

Now we can import the log4j library and load our properties file. For example:

import org.apache.log4j.PropertyConfigurator
PropertyConfigurator.configure("C:/spark/conf/log4j.properties")
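The copy-and-edit step can also be scripted. The sketch below is a hypothetical helper, not part of the project: it copies `log4j.properties.template` to `log4j.properties` inside a given Spark conf directory (only if the target does not exist yet) and rewrites the level on the `log4j.rootCategory` line. The conf directory passed in is an assumption to adapt to our own installation.

```python
import re
import shutil
from pathlib import Path


def set_spark_root_log_level(conf_dir: str, level: str = "WARN") -> Path:
    """Create (if needed) conf_dir/log4j.properties and set its root log level."""
    conf = Path(conf_dir)
    template = conf / "log4j.properties.template"
    target = conf / "log4j.properties"
    # Copy the template only once, so later manual edits are preserved
    if not target.exists():
        shutil.copyfile(template, target)
    text = target.read_text()
    # Replace only the level, keeping the appender list (e.g. ", console")
    new_text, count = re.subn(
        r"^(log4j\.rootCategory=)[A-Z]+", rf"\g<1>{level}", text, flags=re.MULTILINE
    )
    if count == 0:
        raise ValueError("log4j.rootCategory line not found")
    target.write_text(new_text)
    return target
```

As an alternative that needs no file at all, `spark.sparkContext.setLogLevel("WARN")` changes the level from code once the session exists, although messages printed while the session is being created still follow the properties file.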

Pending tasks

- Implement Structured Streaming from Kafka to MongoDB once it is supported.

- Filter trends by continent (using MongoDB's $geoIntersects).
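For the continent filter, MongoDB's `$geoIntersects` operator selects documents whose GeoJSON geometry intersects a given `$geometry`. A minimal sketch of building such a query, assuming a hypothetical `location` field that stores a GeoJSON point, and using a rough bounding box as a stand-in for a real continent outline:

```python
from typing import Dict


def bbox_polygon(min_lng: float, min_lat: float, max_lng: float, max_lat: float) -> Dict:
    """GeoJSON Polygon for a lon/lat box (counter-clockwise, closed ring)."""
    ring = [
        [min_lng, min_lat],
        [max_lng, min_lat],
        [max_lng, max_lat],
        [min_lng, max_lat],
        [min_lng, min_lat],  # GeoJSON rings must end at their first point
    ]
    return {"type": "Polygon", "coordinates": [ring]}


def geo_intersects_filter(field: str, geometry: Dict) -> Dict:
    """MongoDB filter matching documents whose `field` intersects `geometry`."""
    return {field: {"$geoIntersects": {"$geometry": geometry}}}


# Illustrative box only, NOT an accurate outline of Europe
EUROPE_BOX = bbox_polygon(-25.0, 34.0, 45.0, 72.0)
query = geo_intersects_filter("location", EUROPE_BOX)
```

With pymongo, `collection.find(query)` would return the matching documents, and a `2dsphere` index (`collection.create_index([("location", pymongo.GEOSPHERE)])`) helps such queries. Note that MongoDB treats polygon edges as geodesics on the sphere, so a box like this one is only an approximation of the latitude/longitude rectangle.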