A notebook to demonstrate common tricks with word embeddings. Meant to accompany a talk I gave to Agency.

Best run with Anaconda Python 3. This notebook also requires PyTorch and torchtext.

I'm a big fan of Jupyter Widgets, which let you add interactive components to a standard Jupyter notebook very easily.

I used torchtext's built-in support for GloVe vectors. As an additional point of comparison, I used Polyglot vectors, preprocessed to match the .txt format expected by the torchtext vector loader. The vector loader downloads the GloVe vectors automatically, but the Polyglot vectors are much smaller, so I include the processed file. Note: the GloVe embeddings are ~800 MB, so they may take a while to download and load. You'll likely get better analogy performance if you experiment with other versions of the GloVe embeddings.
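The .txt layout the torchtext vector loader reads is the same one GloVe's text files use: one token per line, followed by its vector components separated by spaces. As a rough sketch of what the Polyglot preprocessing has to produce, here is a minimal NumPy parser for that layout. It assumes no header line, and `load_txt_vectors` is a hypothetical helper for illustration, not torchtext's implementation.

```python
import io

import numpy as np


def load_txt_vectors(lines):
    """Parse word vectors stored as text: a token, then its floats, per line.

    Hypothetical helper for illustration only. torchtext's own loader does
    more (for example, caching the parsed vectors). Assumes no header line.
    """
    itos, rows = [], []
    for line in lines:
        parts = line.split()
        if len(parts) < 2:
            continue  # skip blank or token-only lines
        word, values = parts[0], parts[1:]
        if rows and len(values) != len(rows[0]):
            raise ValueError(f"dimension mismatch for {word!r}")
        itos.append(word)
        rows.append([float(v) for v in values])
    stoi = {w: i for i, w in enumerate(itos)}  # word -> row index
    return itos, stoi, np.array(rows, dtype=np.float32)


# A tiny in-memory stand-in for a preprocessed file (hypothetical values).
sample = io.StringIO("king 0.5 0.1 0.9\nqueen 0.5 0.9 0.9\nman 0.1 0.1 0.2\n")
itos, stoi, vectors = load_txt_vectors(sample)
print(vectors.shape)  # (3, 3)
```

The names `itos`, `stoi`, and `vectors` mirror the attributes of a loaded `torchtext.vocab.Vectors` object, so lookups written against this sketch translate directly. A real file would be passed as an open text handle, e.g. `open(path, encoding="utf8")`.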

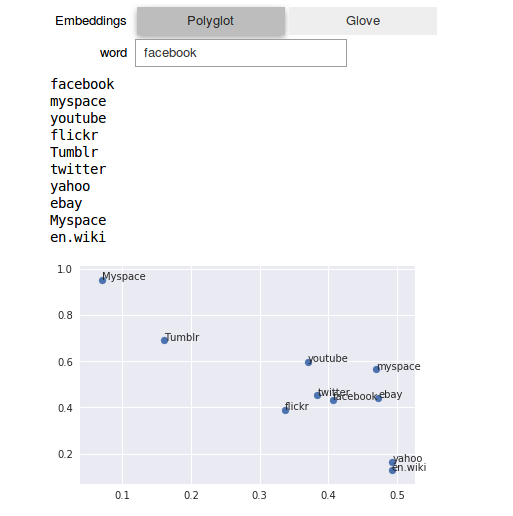

Word embeddings often form clusters of semantically similar words. Below are the nearest vectors to the vector for "facebook" in the Polyglot embeddings. Nearness is defined by cosine similarity, but this can be changed to Euclidean distance in the notebook. I also use PCA to reduce the dimensionality of the embedding space and plot the nearby points.

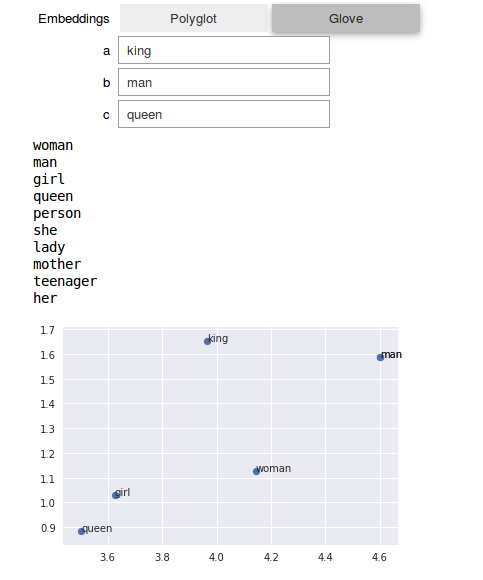
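The two steps behind this figure, ranking neighbours by cosine similarity or Euclidean distance and projecting them to 2-D with PCA, can be sketched in NumPy roughly as follows. The function names `nearest` and `pca_2d` and the toy vectors are hypothetical; this is an illustration under those assumptions, not the notebook's code.

```python
import numpy as np


def nearest(query, vectors, itos, k=5, metric="cosine", exclude=()):
    """Return the k (word, score) pairs closest to `query`.

    metric="cosine": rank by cosine similarity (higher is closer).
    metric="euclidean": rank by Euclidean distance (lower is closer).
    """
    if metric == "cosine":
        norms = np.linalg.norm(vectors, axis=1) * np.linalg.norm(query)
        scores = vectors @ query / np.maximum(norms, 1e-12)  # guard against zero vectors
        order = np.argsort(-scores)
    elif metric == "euclidean":
        scores = np.linalg.norm(vectors - query, axis=1)
        order = np.argsort(scores)
    else:
        raise ValueError(f"unknown metric: {metric!r}")
    results = []
    for i in order:
        if itos[i] in exclude:
            continue  # usually the query word itself
        results.append((itos[i], float(scores[i])))
        if len(results) == k:
            break
    return results


def pca_2d(points):
    """Project the rows of `points` onto their top two principal components."""
    centered = points - points.mean(axis=0)
    # Rows of vt are the principal directions, ordered by singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    coords = centered @ vt[:2].T  # shape (n_points, 2), ready to scatter-plot
    explained = singular_values**2 / np.sum(singular_values**2)
    return coords, explained[:2]


# Hand-made 3-d toy vectors (hypothetical, not real Polyglot embeddings).
itos = ["facebook", "twitter", "myspace", "banana", "apple"]
vectors = np.array([
    [1.0, 0.9, 0.0],
    [0.9, 1.0, 0.1],
    [0.8, 0.8, 0.0],
    [0.0, 0.1, 1.0],
    [0.1, 0.0, 0.9],
])
stoi = {w: i for i, w in enumerate(itos)}
query = vectors[stoi["facebook"]]

print([w for w, _ in nearest(query, vectors, itos, k=2, exclude={"facebook"})])
# ['myspace', 'twitter']
print([w for w, _ in nearest(query, vectors, itos, k=2, metric="euclidean", exclude={"facebook"})])
# ['twitter', 'myspace']

coords, explained = pca_2d(vectors)  # 2-d coordinates for a scatter plot
```

On this toy data the two metrics disagree: "myspace" points in almost the same direction as "facebook" but is a shorter vector, so cosine similarity ranks it first while Euclidean distance prefers "twitter". To draw the plot, `coords` can be passed to `matplotlib.pyplot.scatter`, with `annotate` for the word labels. The notebook may compute PCA differently (for example with scikit-learn's `PCA`); the SVD route here is one standard way to do it.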

Word embeddings also tend to have the nice property that vector addition acts like "semantic addition". The classic example is using embeddings to solve analogies, as shown here using GloVe:

The analogy here is: king is to man as queen is to ____.

Again, the printed results are the nearest vectors and the plot is created using PCA.
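Solving an analogy like this one can be sketched as vector arithmetic: for "a is to b as c is to ?", compute b - a + c and find the nearest word by cosine similarity, which for the example above means man - king + queen. This is a minimal NumPy sketch under assumptions: the function name `solve_analogy` and the toy vectors are hypothetical, and the notebook's own code may differ (for instance in whether it excludes the query words).

```python
import numpy as np


def solve_analogy(a, b, c, vectors, stoi, itos, k=1):
    """Answer "a is to b as c is to ?" using the vector offset b - a + c.

    Candidates are ranked by cosine similarity to the offset vector. The three
    query words are excluded, because the vector nearest to b - a + c is often
    one of the inputs.
    """
    target = vectors[stoi[b]] - vectors[stoi[a]] + vectors[stoi[c]]
    norms = np.linalg.norm(vectors, axis=1) * np.linalg.norm(target)
    sims = vectors @ target / np.maximum(norms, 1e-12)
    ranked = [itos[i] for i in np.argsort(-sims) if itos[i] not in {a, b, c}]
    return ranked[:k]


# Hand-made toy vectors (hypothetical): dimensions loosely mean
# "royalty", "maleness" and "fruitiness". Real GloVe vectors are far noisier.
itos = ["king", "man", "queen", "woman", "boy", "apple"]
vectors = np.array([
    [0.9, 0.8, 0.1],
    [0.1, 0.8, 0.1],
    [0.9, 0.1, 0.1],
    [0.1, 0.1, 0.1],
    [0.1, 0.7, 0.2],
    [0.1, 0.1, 0.9],
])
stoi = {w: i for i, w in enumerate(itos)}

# king is to man as queen is to ____
print(solve_analogy("king", "man", "queen", vectors, stoi, itos))  # ['woman']
```

With real embeddings the offset rarely lands exactly on the answer, which is why the top few ranked words (`k > 1`) are worth printing.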