A comparison of popular methods for creating more efficient (smaller and faster) neural networks.

Framework: PyTorch

Dataset: CIFAR10

Models: VGG-11, VGG-16, VGG-19
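A minimal sketch of the three VGG depths adapted to 32x32 CIFAR10 inputs. The convolutional layouts are the standard VGG-11/16/19 configurations (as in the torchvision `vgg.py` listed under References). The BatchNorm layers and the single 512-to-10 linear classifier are assumptions for illustration, not necessarily the heads used in these experiments; heads with extra fully connected layers are also common.

```python
import torch
import torch.nn as nn

# Integers are conv output channels; "M" is a 2x2 max-pool.
CFG = {
    "vgg11": [64, "M", 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"],
    "vgg16": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"],
    "vgg19": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
              512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}


class VGG(nn.Module):
    def __init__(self, name: str, num_classes: int = 10):
        super().__init__()
        layers, in_ch = [], 3
        for v in CFG[name]:
            if v == "M":
                layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
            else:
                layers += [nn.Conv2d(in_ch, v, kernel_size=3, padding=1),
                           nn.BatchNorm2d(v),      # assumption: BN variant
                           nn.ReLU(inplace=True)]
                in_ch = v
        self.features = nn.Sequential(*layers)
        # Five pools shrink 32x32 inputs to 1x1x512, so one linear layer
        # is enough here (assumed head, for illustration only).
        self.classifier = nn.Linear(512, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.features(x)
        return self.classifier(torch.flatten(x, 1))


model = VGG("vgg16").eval()
with torch.no_grad():
    out = model(torch.randn(2, 3, 32, 32))   # batch of two CIFAR10-sized images
print(out.shape)
```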

Methods:

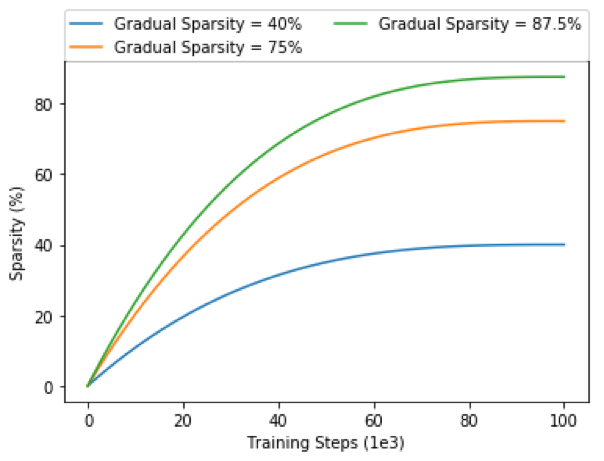

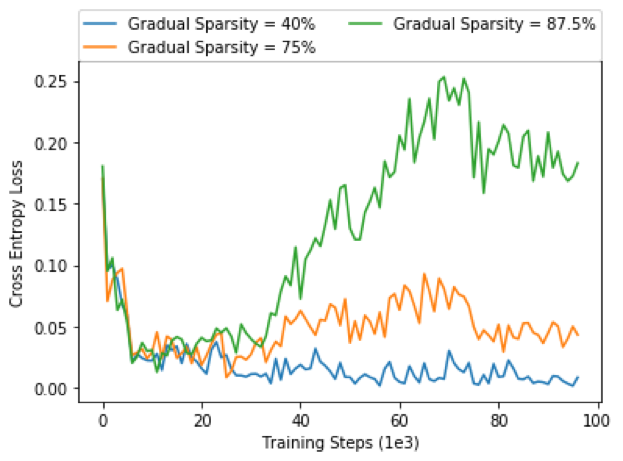

- Gradual Pruning (sparse)

- Low-Rank Factorization

- Knowledge Distillation
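Gradual pruning can be sketched with the cubic sparsity schedule of Zhu and Gupta (cited under References), s_t = s_f + (s_i - s_f)(1 - (t - t_0)/(nΔt))^3, combined with per-layer magnitude pruning. This assumes unstructured (element-wise) masks; the helper names and defaults (final sparsity 0.9, n = 10 pruning steps every Δt = 100 steps) are hypothetical placeholders, not the settings used here.

```python
import torch
import torch.nn as nn


def target_sparsity(step: int, s_init: float = 0.0, s_final: float = 0.9,
                    t0: int = 0, n: int = 10, dt: int = 100) -> float:
    """Cubic schedule of Zhu & Gupta (2017):
    s_t = s_f + (s_i - s_f) * (1 - (t - t0) / (n * dt)) ** 3,
    held at s_i before t0 and at s_f after t0 + n * dt."""
    progress = min(max((step - t0) / (n * dt), 0.0), 1.0)
    return s_final + (s_init - s_final) * (1.0 - progress) ** 3


@torch.no_grad()
def magnitude_prune_(weight: torch.Tensor, sparsity: float) -> torch.Tensor:
    """Zero the `sparsity` fraction of entries with the smallest |w| in place;
    return the 0/1 mask of surviving weights."""
    k = int(sparsity * weight.numel())
    if k == 0:
        return torch.ones_like(weight)
    threshold = weight.abs().flatten().kthvalue(k).values  # k-th smallest |w|
    mask = (weight.abs() > threshold).to(weight.dtype)
    weight.mul_(mask)
    return mask


# Usage: prune one layer at each of the n + 1 scheduled steps.
# (In real training, fine-tuning happens between these steps.)
torch.manual_seed(0)
layer = nn.Linear(100, 100)
for step in range(0, 1001, 100):
    mask = magnitude_prune_(layer.weight, target_sparsity(step))
print(f"final sparsity: {(layer.weight == 0).float().mean().item():.3f}")
```

During fine-tuning the returned mask would need to be reapplied (e.g. `layer.weight.data.mul_(mask)`) after each optimizer step so pruned weights stay at zero; PyTorch's `torch.nn.utils.prune` module offers built-in mask bookkeeping as an alternative.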
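Low-rank factorization can be illustrated with a truncated SVD: a linear layer with weight W (out x in) is replaced by two smaller layers whose product is the best rank-r approximation of W in the Frobenius norm, cutting parameters from out·in to r·(in + out). This sketch covers `nn.Linear` only; the helpers `factorize_linear` and `n_params` are hypothetical, and how the rank is chosen (or whether convolution kernels are factorized) in these experiments is not specified.

```python
import torch
import torch.nn as nn


@torch.no_grad()
def factorize_linear(layer: nn.Linear, rank: int) -> nn.Sequential:
    """Approximate W (out x in) by U_r @ (S_r V_r^T) using a truncated SVD,
    realized as two Linear layers: in -> rank, then rank -> out (+ bias)."""
    U, S, Vh = torch.linalg.svd(layer.weight, full_matrices=False)
    first = nn.Linear(layer.in_features, rank, bias=False)
    second = nn.Linear(rank, layer.out_features, bias=layer.bias is not None)
    first.weight.copy_(S[:rank, None] * Vh[:rank])  # shape (rank, in)
    second.weight.copy_(U[:, :rank])                # shape (out, rank)
    if layer.bias is not None:
        second.bias.copy_(layer.bias)
    return nn.Sequential(first, second)


def n_params(m: nn.Module) -> int:
    return sum(p.numel() for p in m.parameters())


torch.manual_seed(0)
dense = nn.Linear(512, 512)
low = factorize_linear(dense, rank=64)
x = torch.randn(8, 512)
err = ((low(x) - dense(x)).norm() / dense(x).norm()).item()
print(n_params(dense), n_params(low), f"relative error {err:.3f}")
```

A randomly initialized layer has a fairly flat singular spectrum, so the rank-64 error above is large; trained layers may compress better, and the factorized network is typically fine-tuned afterwards. Convolution kernels can be handled similarly after reshaping them to 2-D.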
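For knowledge distillation, a common choice is the soft-target loss of Hinton et al. (2015), in which a large teacher (for example VGG-19) guides a smaller student (for example VGG-11). The document does not say which variant is used, so the temperature `T`, the weight `alpha`, and the helper name `distillation_loss` are assumptions for this sketch.

```python
import torch
import torch.nn.functional as F


def distillation_loss(student_logits: torch.Tensor, teacher_logits: torch.Tensor,
                      targets: torch.Tensor, T: float = 4.0,
                      alpha: float = 0.9) -> torch.Tensor:
    """Soft-target KD loss (Hinton et al., 2015):
    alpha * T^2 * KL(p_teacher^T || p_student^T) + (1 - alpha) * CE(student, y)."""
    soft = F.kl_div(
        F.log_softmax(student_logits / T, dim=1),   # student log-probs at T
        F.softmax(teacher_logits / T, dim=1),       # teacher probs at T
        reduction="batchmean",
    ) * (T * T)  # T^2 keeps soft-target gradients comparable to the hard term
    hard = F.cross_entropy(student_logits, targets)
    return alpha * soft + (1.0 - alpha) * hard


# Random logits stand in for teacher/student outputs on a batch of 8 images.
# In training, compute teacher logits under torch.no_grad() and backpropagate
# only through the student.
torch.manual_seed(0)
teacher_logits = torch.randn(8, 10)
student_logits = torch.randn(8, 10, requires_grad=True)
labels = torch.randint(0, 10, (8,))
loss = distillation_loss(student_logits, teacher_logits, labels)
loss.backward()
print(loss.item(), student_logits.grad.shape)
```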

Absolute Metrics:

- Model size (weights)

- Test Accuracy

- Training Time (h)

- Inference Time (s)

- Runtime model size
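The size and speed metrics can be measured with small helpers like the ones below (hypothetical names). Zeroing weights does not shrink dense storage, so for sparse pruning the non-zero count is the more telling size figure. Timing here is wall-clock over one pass of a data loader, which is one reasonable definition of inference time among several.

```python
import time

import torch
import torch.nn as nn


def weight_size_mb(model: nn.Module) -> float:
    """Dense storage of all parameters and buffers, in megabytes (10**6 bytes)."""
    tensors = list(model.parameters()) + list(model.buffers())
    return sum(t.numel() * t.element_size() for t in tensors) / 1e6


def nonzero_params(model: nn.Module) -> int:
    """Number of non-zero parameter entries (what sparse pruning reduces)."""
    return sum(int((p != 0).sum()) for p in model.parameters())


@torch.no_grad()
def inference_time_s(model: nn.Module, loader, device: str = "cpu") -> float:
    """Wall-clock seconds for one forward pass over every batch in `loader`."""
    model.eval().to(device)
    use_cuda = str(device).startswith("cuda")
    if use_cuda:
        torch.cuda.synchronize()  # don't count previously queued GPU work
    start = time.perf_counter()
    for x, _ in loader:
        model(x.to(device))
    if use_cuda:
        torch.cuda.synchronize()  # wait for all launched kernels to finish
    return time.perf_counter() - start


# Toy usage: a 10->5 layer (55 floats) and a one-batch "loader".
toy = nn.Linear(10, 5)
with torch.no_grad():
    toy.weight[0].zero_()  # pretend one row was pruned
print(weight_size_mb(toy), nonzero_params(toy))
print(inference_time_s(toy, [(torch.randn(4, 10), None)]))
```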

Relative Metrics:

- Model compression rate

- Training and inference speedups

- Relative accuracy of small versus large models
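The relative metrics are simple ratios of the absolute ones. This sketch assumes compression rate and speedups are computed as baseline divided by compressed values (so larger is better), and reports relative accuracy both as a ratio and as a drop in percentage points. The dictionary keys are hypothetical and the numbers in the usage lines are made up, not results.

```python
def relative_metrics(base: dict, small: dict) -> dict:
    """Compare a compressed model (`small`) against its baseline (`base`).

    Both dicts use the hypothetical keys size_mb, train_h, infer_s, acc.
    Ratios are baseline / compressed, so values > 1 mean smaller or faster.
    """
    return {
        "compression_rate": base["size_mb"] / small["size_mb"],
        "train_speedup": base["train_h"] / small["train_h"],
        "inference_speedup": base["infer_s"] / small["infer_s"],
        "relative_accuracy": small["acc"] / base["acc"],
        "accuracy_drop_pts": 100.0 * (base["acc"] - small["acc"]),
    }


# Made-up numbers, only to show the arithmetic.
base = {"size_mb": 80.0, "train_h": 2.0, "infer_s": 4.0, "acc": 0.92}
small = {"size_mb": 8.0, "train_h": 2.5, "infer_s": 1.0, "acc": 0.90}
for name, value in relative_metrics(base, small).items():
    print(f"{name}: {value:.3f}")
```

A training "speedup" below 1, as in the made-up example, just means the compression method took longer to train than the baseline.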

References:

- https://github.com/chengyangfu/pytorch-vgg-cifar10

- https://github.com/pytorch/vision/blob/master/torchvision/models/vgg.py

- https://github.com/jacobgil/pytorch-pruning/blob/master/finetune.py

- https://github.com/bearpaw/pytorch-classification/blob/master/models/cifar/alexnet.py

- https://github.com/jiecaoyu/pytorch-nin-cifar10/blob/master/original.py

- https://github.com/wanglouis49/pytorch-weights_pruning

- M. Zhu and S. Gupta, “To prune, or not to prune: exploring the efficacy of pruning for model compression,” arXiv e-prints, Oct. 2017.