Tutorial: Knative + Tekton

Linux Foundation Open Source Summit NA 2020

Slides: Knative-Tekton-OSSNA.pdf

Last Update: 2020/08/27

1. Setup Environment

1.1 Setup Kubernetes Clusters

1.1.1 IBM Free Kubernetes Cluster

- Get a free Kubernetes cluster on IBM Cloud. Also stop by the IBM booth at OSS-NA during the conference to learn how to get a $200 credit.

- Select cluster from IBM Cloud console

- Click the drop down Action menu on the top right and select Connect via CLI and follow the commands.

- Log in to your IBM Cloud account

ibmcloud login -a cloud.ibm.com -r <REGION> -g <IAM_RESOURCE_GROUP>

- Set the Kubernetes context

ibmcloud ks cluster config -c mycluster

- Verify that you can connect to your cluster.

kubectl version --short

1.1.2 Kubernetes with Minikube

- Install minikube for Linux, macOS, or Windows. This tutorial was tested with version v1.12.0. You can print the current and latest version numbers with:

minikube update-check

- Configure your cluster with 2 CPUs, 2 GB of memory, and Kubernetes version v1.18.5. If you already have a minikube cluster with a different configuration, you need to delete it for the new configuration to take effect, or create a new profile.

minikube delete
minikube config set cpus 2
minikube config set memory 2048
minikube config set kubernetes-version v1.18.5

- Start your minikube cluster

minikube start

- Verify the versions of kubectl and the cluster, and that you can connect to your cluster.

kubectl version --short

1.1.3 Kubernetes with Kind (Kubernetes In Docker)

- Install kind for Linux, macOS, or Windows. This tutorial was tested with version v0.8.1. You can verify the version with:

kind --version

- A kind cluster manifest file clusterconfig.yaml is already provided, and you can customize it. We are exposing port 80 on the host, to be used later by the Knative Kourier ingress. To use a different version of Kubernetes, check the image digest to use on the kind release page.

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  image: kindest/node:v1.18.2@sha256:7b27a6d0f2517ff88ba444025beae41491b016bc6af573ba467b70c5e8e0d85f
  extraPortMappings:
  - containerPort: 31080 # expose port 31080 of the node on port 80 of the host, to be used later by the Kourier ingress
    hostPort: 80

- Create and start your cluster, specifying the config file above

kind create cluster --name knative --config kind/clusterconfig.yaml

- Verify the versions of the client kubectl and the cluster api-server, and that you can connect to your cluster.

kubectl cluster-info --context kind-knative

1.1.4 Kubernetes with Katacoda

- For a short version of this tutorial try it out on my Katacoda Scenario

1.2 Setup Command Line Interface (CLI) Tools

- Kubernetes CLI kubectl

- Knative CLI kn

- Tekton CLI tkn

1.3 Setup Container Registry

- Get access to a container registry such as Quay, Docker Hub, or your own private registry instance from a Cloud provider such as IBM Cloud 😉. In this tutorial we are going to use Docker Hub.

- Set the environment variables REGISTRY_SERVER, REGISTRY_NAMESPACE, and REGISTRY_PASSWORD. The REGISTRY_NAMESPACE will most likely be your Docker Hub username. For Docker Hub, use docker.io as the value for REGISTRY_SERVER.

REGISTRY_SERVER='docker.io'
REGISTRY_NAMESPACE='REPLACEME_DOCKER_USERNAME_VALUE'
REGISTRY_PASSWORD='REPLACEME_DOCKER_PASSWORD'

- You can use the file .template.env as a template for the variables

cp .template.env .env
# edit the file .env with your variables and credentials, then source the file
source .env
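Later steps fail in confusing ways when one of these variables is empty, so it can help to check them right after sourcing .env. The following is a minimal sketch, assuming a POSIX-compatible shell; require_vars is a hypothetical helper and is not part of the tutorial repository.

```shell
# Hypothetical helper (not part of the tutorial repository):
# report every listed variable that is unset or empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect expansion written in a POSIX-compatible way.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  # Exit status 0 when everything is set, 1 otherwise.
  return "$missing"
}
```

After sourcing .env you could run require_vars REGISTRY_SERVER REGISTRY_NAMESPACE REGISTRY_PASSWORD and continue only if it succeeds.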

1.4 Setup Git

- Get access to a git server such as GitLab, GitHub, or your own private git instance from a Cloud provider such as IBM Cloud 😉. In this tutorial we are going to use GitHub.

- Fork this repository https://github.com/csantanapr/knative-tekton

- Set the environment variable GIT_REPO_URL to the URL of your fork, not mine.

GIT_REPO_URL='https://github.com/REPLACEME/knative-tekton'

- Clone the repository and change directory

git clone $GIT_REPO_URL
cd knative-tekton

- You can use the file .template.env as a template for the variables

cp .template.env .env
# edit the file .env with your variables and credentials, then source the file
source .env

2. Install Knative Serving

- Install Knative Serving in namespace knative-serving

kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.1/serving-crds.yaml
kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.1/serving-core.yaml
kubectl wait deployment activator autoscaler controller webhook --for=condition=Available -n knative-serving

- Install the Knative networking layer Kourier in namespace kourier-system

kubectl apply -f https://github.com/knative/net-kourier/releases/download/v0.17.0/kourier.yaml
kubectl wait deployment 3scale-kourier-gateway --for=condition=Available -n kourier-system
kubectl wait deployment 3scale-kourier-control --for=condition=Available -n knative-serving

- Set the environment variable EXTERNAL_IP to the external IP address of the worker node

If using minikube:

EXTERNAL_IP=$(minikube ip)
echo EXTERNAL_IP=$EXTERNAL_IP

If using kind:

EXTERNAL_IP="127.0.0.1"

If using IBM Kubernetes:

EXTERNAL_IP=$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="ExternalIP")].address}')

Verify the value

echo EXTERNAL_IP=$EXTERNAL_IP

- Set the environment variable KNATIVE_DOMAIN as the DNS domain using nip.io

KNATIVE_DOMAIN="$EXTERNAL_IP.nip.io"
echo KNATIVE_DOMAIN=$KNATIVE_DOMAIN

Double check that DNS is resolving

dig $KNATIVE_DOMAIN

- Configure DNS for Knative Serving

kubectl patch configmap -n knative-serving config-domain -p "{\"data\": {\"$KNATIVE_DOMAIN\": \"\"}}"

- Configure Kourier to listen for HTTP on port 80 of the node

If using kind, use this:

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  name: kourier-ingress
  namespace: kourier-system
  labels:
    networking.knative.dev/ingress-provider: kourier
spec:
  type: NodePort
  selector:
    app: 3scale-kourier-gateway
  ports:
    - name: http2
      nodePort: 31080
      port: 80
      targetPort: 8080
EOF

If not using kind, use this:

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  name: kourier-ingress
  namespace: kourier-system
  labels:
    networking.knative.dev/ingress-provider: kourier
spec:
  selector:
    app: 3scale-kourier-gateway
  ports:
    - name: http2
      port: 80
      targetPort: 8080
  externalIPs:
    - $EXTERNAL_IP
EOF

- Configure Knative to use Kourier

kubectl patch configmap/config-network \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"ingress.class":"kourier.ingress.networking.knative.dev"}}'

- Verify that Knative is installed properly: all pods should be in Running state and our kourier-ingress service should be configured.

kubectl get pods -n knative-serving
kubectl get pods -n kourier-system
kubectl get svc -n kourier-system kourier-ingress

3. Using Knative to Run Serverless Applications

- Set the environment variable SUB_DOMAIN to the Kubernetes namespace followed by the domain name, <namespace>.<domainname>. This way we can use any Kubernetes namespace other than default.

CURRENT_CTX=$(kubectl config current-context)
CURRENT_NS=$(kubectl config view -o=jsonpath="{.contexts[?(@.name==\"${CURRENT_CTX}\")].context.namespace}")
if [[ -z "${CURRENT_NS}" ]]; then
  CURRENT_NS="default"
fi
SUB_DOMAIN="$CURRENT_NS.$KNATIVE_DOMAIN"
echo ""
echo "SUB_DOMAIN=$SUB_DOMAIN"
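To make the naming scheme concrete, here is a small sketch of how the host name of a service is put together from the pieces above: service name, namespace, and the nip.io domain built from EXTERNAL_IP. knative_host is a hypothetical helper used only for illustration, and it assumes the domain layout configured in this tutorial.

```shell
# Hypothetical helper: compose <service>.<namespace>.<external-ip>.nip.io,
# the host name pattern used throughout this tutorial.
knative_host() {
  service="$1"
  namespace="${2:-default}"   # fall back to "default", as the tutorial does
  external_ip="$3"
  printf '%s.%s.%s.nip.io\n' "$service" "$namespace" "$external_ip"
}
```

For example, knative_host hello "$CURRENT_NS" "$EXTERNAL_IP" prints the host that the curl commands in the next sections call as hello.$SUB_DOMAIN.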

3.1 Create Knative Service

- Using the Knative CLI kn, deploy an application using a container image

kn service create hello --image gcr.io/knative-samples/helloworld-go --autoscale-window 15s

You can set a lower window. The service is scaled to zero if no request was received during that time.

--autoscale-window 10s

- You can list your service

kn service list hello

- Use curl to invoke the application

curl http://hello.$SUB_DOMAIN

It should print

Hello World!

- You can watch the pods and see how they scale down to zero after HTTP traffic to the URL stops

kubectl get pod -l serving.knative.dev/service=hello -w

Output should look like this a few seconds after HTTP traffic stops:

NAME                                     READY   STATUS
hello-r4vz7-deployment-c5d4b88f7-ks95l   2/2     Running
hello-r4vz7-deployment-c5d4b88f7-ks95l   2/2     Terminating
hello-r4vz7-deployment-c5d4b88f7-ks95l   1/2     Terminating
hello-r4vz7-deployment-c5d4b88f7-ks95l   0/2     Terminating

Try to access the URL again, and you will see new pods running again.

NAME                                     READY   STATUS
hello-r4vz7-deployment-c5d4b88f7-rr8cd   0/2     Pending
hello-r4vz7-deployment-c5d4b88f7-rr8cd   0/2     ContainerCreating
hello-r4vz7-deployment-c5d4b88f7-rr8cd   1/2     Running
hello-r4vz7-deployment-c5d4b88f7-rr8cd   2/2     Running

Some people call this Serverless 🎉 🌮 🔥

3.2 Updating the Knative service

- Update the service hello with a new environment variable TARGET

kn service update hello --env TARGET="World from v1"

- Now invoke the service

curl http://hello.$SUB_DOMAIN

It should print

Hello World from v1!

3.3 Knative Service Traffic Splitting

- Update the service hello by changing the environment variable TARGET, tag the previous version as v1, send 25% of the traffic to the new version, and leave 75% of the traffic on v1

kn service update hello \
  --env TARGET="Knative from v2" \
  --tag $(kubectl get ksvc hello --template='{{.status.latestReadyRevisionName}}')=v1 \
  --traffic v1=75,@latest=25

- Describe the service to see the traffic split details

kn service describe hello

Should print this

Name:       hello
Namespace:  debug
Age:        6m
URL:        http://hello.$SUB_DOMAIN

Revisions:
   25%  @latest (hello-mshgs-3) [3] (26s)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)
   75%  hello-tgzmt-2 #v1 [2] (6m)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)

Conditions:
  OK TYPE                   AGE REASON
  ++ Ready                  21s
  ++ ConfigurationsReady    24s
  ++ RoutesReady            21s

- Invoke the service using a while loop. You will see the message Hello Knative from v2 about 25% of the time

while true; do
  curl http://hello.$SUB_DOMAIN
  sleep 0.5
done

Should print this

Hello World from v1!
Hello Knative from v2!
Hello World from v1!
Hello World from v1!

- Update the service again, this time dark launching a new version v3 on a specific URL. Zero traffic will go to this version from the main URL of the service.

kn service update hello \
  --env TARGET="OSS NA 2020 from v3" \
  --tag $(kubectl get ksvc hello --template='{{.status.latestReadyRevisionName}}')=v2 \
  --tag @latest=v3 \
  --traffic v1=75,v2=25,@latest=0

- Describe the service to see the traffic split details; v3 doesn't get any traffic

kn service describe hello

Should print this

Revisions:
     +  @latest (hello-wkyty-4) #v3 [4] (1m)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)
   25%  hello-fbzqf-3 #v2 [3] (6m)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)
   75%  hello-kcspq-2 #v1 [2] (7m)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)

- The latest version of the service is only available with the URL prefix v3-. Go ahead and invoke the latest version directly.

curl http://v3-hello.$SUB_DOMAIN

It should print this

Hello OSS NA 2020 from v3!

- We are happy with our dark launched version of the application, so let's turn it live for 100% of the users on the default URL

kn service update hello --traffic @latest=100

- Describe the service to see the traffic split details; @latest now gets 100% of the traffic

kn service describe hello

Should print this

Revisions:
  100%  @latest (hello-wkyty-4) #v3 [4] (4m)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)
     +  hello-fbzqf-3 #v2 [3] (8m)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)
     +  hello-kcspq-2 #v1 [2] (9m)
        Image:  gcr.io/knative-samples/helloworld-go (pinned to 5ea96b)

- If we invoke the service in a loop, you will see that 100% of the traffic is directed to version v3 of our application

while true; do
  curl http://hello.$SUB_DOMAIN
  sleep 0.5
done

Should print this

Hello OSS NA 2020 from v3!
Hello OSS NA 2020 from v3!
Hello OSS NA 2020 from v3!
Hello OSS NA 2020 from v3!

- Because we used tags, the custom URLs with the tag prefix are still available in case you want to access an old revision of the application

curl http://v1-hello.$SUB_DOMAIN
curl http://v2-hello.$SUB_DOMAIN
curl http://v3-hello.$SUB_DOMAIN

It should print

Hello World from v1!
Hello Knative from v2!
Hello OSS NA 2020 from v3!

- Now that you have your service configured and deployed, you may want to reproduce it in a different namespace or cluster using a Kubernetes YAML manifest. You can define your Knative service using the following YAML, or export it with the command kn service export

Show me the Knative YAML

---
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
spec:
  template:
    metadata:
      name: hello-v1
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          env:
            - name: TARGET
              value: World from v1
---
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
spec:
  template:
    metadata:
      name: hello-v2
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          env:
            - name: TARGET
              value: Knative from v2
---
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
spec:
  template:
    metadata:
      name: hello-v3
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          env:
            - name: TARGET
              value: OSS NA 2020 from v3
  traffic:
    - latestRevision: false
      percent: 0
      revisionName: hello-v1
      tag: v1
    - latestRevision: false
      percent: 0
      revisionName: hello-v2
      tag: v2
    - latestRevision: true
      percent: 100
      tag: v3

If you want to deploy using YAML, delete the application with kn and redeploy with kubectl

kn service delete hello
kubectl apply -f knative/v1.yaml
kubectl apply -f knative/v2.yaml
kubectl apply -f knative/v3.yaml

Try the service again

while true; do
  curl http://hello.$SUB_DOMAIN
done

- Delete the application and all its revisions

kn service delete hello
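While the hello service is still deployed, the traffic percentages from this section can be checked by counting responses rather than reading them off the loop output. The following is a minimal sketch, assuming awk and sort are available; tally_responses is a hypothetical helper, not part of the tutorial repository.

```shell
# Hypothetical helper: read one response per line on stdin and print
# "<count> <response>" for each distinct response, most frequent first.
tally_responses() {
  awk '{ count[$0]++ } END { for (line in count) printf "%d %s\n", count[line], line }' \
    | sort -rn
}
```

One way to use it is to send a fixed number of requests, for example for i in $(seq 1 40); do curl -s http://hello.$SUB_DOMAIN; done | tally_responses. With a 75/25 split the counts should land roughly, not exactly, at that ratio.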

4. Install Tekton Pipelines

4.1 Install Tekton Pipelines

- Install Tekton Pipelines in namespace tekton-pipelines

kubectl apply -f https://github.com/tektoncd/pipeline/releases/download/v0.14.1/release.yaml
kubectl wait deployment tekton-pipelines-controller tekton-pipelines-webhook --for=condition=Available -n tekton-pipelines

4.2 Install Tekton Dashboard (Optional)

- Install Tekton Dashboard in namespace tekton-pipelines

kubectl apply -f https://github.com/tektoncd/dashboard/releases/download/v0.7.1/tekton-dashboard-release.yaml
kubectl wait deployment tekton-dashboard --for=condition=Available -n tekton-pipelines

- We can access the Tekton Dashboard service through the Kourier ingress using the KNATIVE_DOMAIN

cat <<EOF | kubectl apply -f -
apiVersion: networking.internal.knative.dev/v1alpha1
kind: Ingress
metadata:
  name: tekton-dashboard
  namespace: tekton-pipelines
  annotations:
    networking.knative.dev/ingress.class: kourier.ingress.networking.knative.dev
spec:
  rules:
  - hosts:
    - dashboard.tekton-pipelines.$KNATIVE_DOMAIN
    http:
      paths:
      - splits:
        - appendHeaders: {}
          serviceName: tekton-dashboard
          serviceNamespace: tekton-pipelines
          servicePort: 9097
    visibility: ExternalIP
  visibility: ExternalIP
EOF

- Set an environment variable TEKTON_DASHBOARD_URL with the URL to access the Dashboard

TEKTON_DASHBOARD_URL=http://dashboard.tekton-pipelines.$KNATIVE_DOMAIN
echo TEKTON_DASHBOARD_URL=$TEKTON_DASHBOARD_URL

5. Using Tekton to Build and Deploy Applications

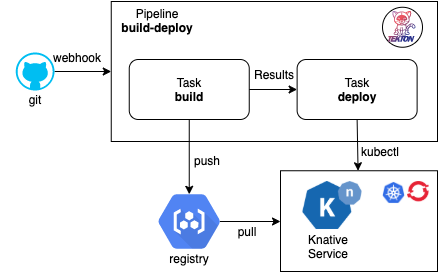

- Tekton helps create composable DevOps Automation by putting together Tasks, and Pipelines

5.1 Configure Credentials and ServiceAccounts for Tekton

- We need to package our application in a container image and store this image in a container registry. Since we need to create a secret with the registry credentials, we are going to create a ServiceAccount pipeline with the associated secret regcred. Make sure you set up your container registry credentials as environment variables; see Setup Container Registry in the Setup Environment section of this tutorial. These commands will print your credentials, so make sure no one is looking over your shoulder. The printed command is what you need to run.

NOTE: If your password has characters that are interpreted by the shell, do NOT use environment variables; explicitly enter your values in the command, wrapped in single quotes.

echo ""
echo kubectl create secret docker-registry regcred \
  --docker-server=\'${REGISTRY_SERVER}\' \
  --docker-username=\'${REGISTRY_NAMESPACE}\' \
  --docker-password=\'${REGISTRY_PASSWORD}\'
echo ""
echo "Run the above command manually ^^ this avoids problems with certain characters in your password on the shell"

- Verify the secret regcred was created

kubectl describe secret regcred

- Create a ServiceAccount pipeline that contains the secret regcred that we just created

apiVersion: v1
kind: ServiceAccount
metadata:
  name: pipeline
secrets:
  - name: regcred

Run the following command with the provided YAML

kubectl apply -f tekton/sa.yaml

- We are going to use Tekton to deploy the Knative Service, so we need to configure RBAC to give the ServiceAccount pipeline edit access to the current namespace (default). If you are using a namespace other than default, edit the file tekton/rbac.yaml and provide the namespace where the Role and the RoleBinding should be created. For more info check out the RBAC docs. Run the following command to grant access to the ServiceAccount pipeline

cat tekton/rbac.yaml | sed "s/namespace: default/namespace: $CURRENT_NS/g" | kubectl apply -f -
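The printed kubectl create secret command earlier in this section wraps each value in single quotes, which still breaks if the password itself contains a single quote. Here is a minimal sketch of one way to handle that case, assuming a POSIX shell with sed; shell_quote is a hypothetical helper, not part of the tutorial repository.

```shell
# Hypothetical helper: print the argument wrapped in single quotes,
# with every embedded ' rewritten as '\'' so the result can be pasted
# into a shell command line unchanged.
shell_quote() {
  printf "'%s'\n" "$(printf '%s' "$1" | sed "s/'/'\\\\''/g")"
}
```

With it, the password argument of the printed command could be produced as --docker-password=$(shell_quote "$REGISTRY_PASSWORD"), and the output stays safe to copy and run by hand.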

5.2 The Build Tekton Task

- In this repository we have a sample application; you can see the source code in ./nodejs/app.js. This application uses JavaScript to implement a web server, but you can use any language you want.

const app = require("express")()
const server = require("http").createServer(app)
const port = process.env.PORT || "8080"
const message = process.env.TARGET || 'Hello World'

app.get('/', (req, res) => res.send(message))
server.listen(port, function () {
  console.log(`App listening on ${port}`)
});

- I provided a Tekton Task that can download source code from git, build the image, and push it to a registry.

Show me the Build Task YAML

apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: build
spec:
  params:
    - name: repo-url
      description: The git repository url
    - name: revision
      description: The branch, tag, or git reference from the git repo-url location
      default: master
    - name: image
      description: "The location where to push the image in the form of <server>/<namespace>/<repository>:<tag>"
    - name: CONTEXT
      description: Path to the directory to use as context.
      default: .
    - name: BUILDER_IMAGE
      description: The location of the buildah builder image.
      default: quay.io/buildah/stable:v1.14.8
    - name: STORAGE_DRIVER
      description: Set buildah storage driver
      default: overlay
    - name: DOCKERFILE
      description: Path to the Dockerfile to build.
      default: ./Dockerfile
    - name: TLSVERIFY
      description: Verify the TLS on the registry endpoint (for push/pull to a non-TLS registry)
      default: "false"
    - name: FORMAT
      description: The format of the built container, oci or docker
      default: "oci"
  steps:
    - name: git-clone
      image: alpine/git
      script: |
        git clone $(params.repo-url) /source
        cd /source
        git checkout $(params.revision)
      volumeMounts:
        - name: source
          mountPath: /source
    - name: build-image
      image: $(params.BUILDER_IMAGE)
      workingdir: /source
      script: |
        echo "Building Image $(params.image)"
        buildah --storage-driver=$(params.STORAGE_DRIVER) bud --format=$(params.FORMAT) --tls-verify=$(params.TLSVERIFY) -f $(params.DOCKERFILE) -t $(params.image) $(params.CONTEXT)
        echo "Pushing Image $(params.image)"
        buildah --storage-driver=$(params.STORAGE_DRIVER) push --tls-verify=$(params.TLSVERIFY) --digestfile ./image-digest $(params.image) docker://$(params.image)
      securityContext:
        privileged: true
      volumeMounts:
        - name: varlibcontainers
          mountPath: /var/lib/containers
        - name: source
          mountPath: /source
  volumes:
    - name: varlibcontainers
      emptyDir: {}
    - name: source
      emptyDir: {}

- Install the provided Task build like this.

kubectl apply -f tekton/task-build.yaml

- You can list the Task that we just created using the tkn CLI

tkn task ls

- We can also get more details about the build Task using tkn task describe

tkn task describe build

- Let's use the Tekton CLI to test our build Task. You need to pass the ServiceAccount pipeline to be used to run the Task, the GitHub URL of your fork (or of this repository), and the directory within the repository where the application is located, in our case nodejs. The repository image name is knative-tekton.

tkn task start build --showlog \
  -p repo-url=${GIT_REPO_URL} \
  -p image=${REGISTRY_SERVER}/${REGISTRY_NAMESPACE}/knative-tekton \
  -p CONTEXT=nodejs \
  -s pipeline

- You can check the container registry and see that the image was pushed to the repository a minute ago. The following command should return status code 200

curl -s -o /dev/null -w "%{http_code}\n" https://index.$REGISTRY_SERVER/v1/repositories/$REGISTRY_NAMESPACE/knative-tekton/tags/latest

5.3 The Deploy Tekton Task

- I provided a Deploy Tekton Task that can run kubectl to deploy the Knative Application using a YAML manifest.

Show me the Deploy Task YAML

apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: deploy
spec:
  params:
    - name: repo-url
      description: The git repository url
    - name: revision
      description: The branch, tag, or git reference from the git repo-url location
      default: master
    - name: dir
      description: Path to the directory to use as context.
      default: .
    - name: yaml
      description: Path to the directory to use as context.
      default: ""
    - name: image
      description: Path to the container image
      default: ""
    - name: KUBECTL_IMAGE
      description: The location of the kubectl image.
      default: docker.io/csantanapr/kubectl
  steps:
    - name: git-clone
      image: alpine/git
      script: |
        git clone $(params.repo-url) /source
        cd /source
        git checkout $(params.revision)
      volumeMounts:
        - name: source
          mountPath: /source
    - name: kubectl-apply
      image: $(params.KUBECTL_IMAGE)
      workingdir: /source
      script: |
        if [ "$(params.image)" != "" ] && [ "$(params.yaml)" != "" ]; then
          yq w -i $(params.dir)/$(params.yaml) "spec.template.spec.containers[0].image" "$(params.image)"
          cat $(params.dir)/$(params.yaml)
        fi
        kubectl apply -f $(params.dir)/$(params.yaml)
      volumeMounts:
        - name: source
          mountPath: /source
  volumes:
    - name: source
      emptyDir: {}

- Install the provided Task deploy like this.

kubectl apply -f tekton/task-deploy.yaml

- You can list the Task that we just created using the tkn CLI

tkn task ls

- We can also get more details about the deploy Task using tkn task describe

tkn task describe deploy

- I provided a YAML manifest that defines our Knative Application in knative/service.yaml

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: demo
spec:
  template:
    spec:
      containers:
        - image: docker.io/csantanapr/knative-tekton
          imagePullPolicy: Always
          env:
            - name: TARGET
              value: Welcome to the Knative Meetup

- Let's use the Tekton CLI to test our deploy Task. You need to pass the ServiceAccount pipeline to be used to run the Task, the GitHub URL of your fork (or of this repository), and the directory and file name of the application YAML manifest within the repository, in our case knative and service.yaml.

tkn task start deploy --showlog \
  -p image=${REGISTRY_SERVER}/${REGISTRY_NAMESPACE}/knative-tekton \
  -p repo-url=${GIT_REPO_URL} \
  -p dir=knative \
  -p yaml=service.yaml \
  -s pipeline

- You can check that the Knative Application was deployed

kn service list demo

5.4 The Build and Deploy Pipeline

- If we want to build the application image and then deploy the application, we can run the Tasks build and deploy by defining a Pipeline build-deploy that contains the two Tasks.

Show me the Pipeline YAML

apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: build-deploy
spec:
  params:
    - name: repo-url
      default: https://github.com/csantanapr/knative-tekton
    - name: revision
      default: master
    - name: image
    - name: image-tag
      default: latest
    - name: CONTEXT
      default: nodejs
  tasks:
    - name: build
      taskRef:
        name: build
      params:
        - name: image
          value: $(params.image):$(params.image-tag)
        - name: repo-url
          value: $(params.repo-url)
        - name: revision
          value: $(params.revision)
        - name: CONTEXT
          value: $(params.CONTEXT)
    - name: deploy
      runAfter: [build]
      taskRef:
        name: deploy
      params:
        - name: image
          value: $(params.image):$(params.image-tag)
        - name: repo-url
          value: $(params.repo-url)
        - name: revision
          value: $(params.revision)
        - name: dir
          value: knative
        - name: yaml
          value: service.yaml

- Install the Pipeline with this command

kubectl apply -f tekton/pipeline-build-deploy.yaml

- You can list the Pipeline that we just created using the tkn CLI

tkn pipeline ls

- We can also get more details about the build-deploy Pipeline using tkn pipeline describe

tkn pipeline describe build-deploy

- Let's use the Tekton CLI to test our build-deploy Pipeline. You need to pass the ServiceAccount pipeline to be used to run the Tasks, and the GitHub URL of your fork (or of this repository). You also pass the image location, which is where the image is pushed in the registry and where Kubernetes pulls it from for the Knative Application. The directory and file name of the Knative YAML are already specified in the Pipeline definition.

tkn pipeline start build-deploy --showlog \
  -p image=${REGISTRY_SERVER}/${REGISTRY_NAMESPACE}/knative-tekton \
  -p repo-url=${GIT_REPO_URL} \
  -s pipeline

- You can inspect the results and duration by describing the last PipelineRun

tkn pipelinerun describe --last

- Check that the latest Knative Application revision is ready

kn service list demo

- Run the Application using the URL

curl http://demo.$SUB_DOMAIN

It should print

Welcome to OSS NA 2020
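Both Tasks in the Pipeline receive the image as $(params.image):$(params.image-tag), so the reference that gets pushed and deployed is the image location plus a tag that defaults to latest. A small sketch of that composition in shell; image_ref is a hypothetical helper for illustration only.

```shell
# Hypothetical helper: mirror the Pipeline's
# $(params.image):$(params.image-tag) composition.
image_ref() {
  image="$1"
  tag="${2:-latest}"   # same default as the Pipeline's image-tag parameter
  printf '%s:%s\n' "$image" "$tag"
}
```

For example, image_ref "$REGISTRY_SERVER/$REGISTRY_NAMESPACE/knative-tekton" prints the reference built by the tkn pipeline start command above, since it does not set image-tag.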

6. Automate the Tekton Pipeline using Git Web Hooks Triggers

6.1 Install Tekton Triggers

- Install Tekton Triggers in namespace tekton-pipelines

kubectl apply -f https://github.com/tektoncd/triggers/releases/download/v0.6.1/release.yaml
kubectl wait deployment tekton-triggers-controller tekton-triggers-webhook --for=condition=Available -n tekton-pipelines

6.2 Create TriggerTemplate, TriggerBinding

- When the Webhook is invoked we want to start a Pipeline. We will use a TriggerTemplate to specify which Tekton resources should be created; in our case it creates a new PipelineRun, which starts a new run of the Pipeline.

Show me the TriggerTemplate YAML

apiVersion: triggers.tekton.dev/v1alpha1
kind: TriggerTemplate
metadata:
  name: build-deploy
spec:
  params:
    - name: gitrevision
      description: The git revision
      default: master
    - name: gitrepositoryurl
      description: The git repository url
    - name: gittruncatedsha
    - name: image
      default: REPLACE_IMAGE
  resourcetemplates:
    - apiVersion: tekton.dev/v1beta1
      kind: PipelineRun
      metadata:
        generateName: build-deploy-run-
      spec:
        serviceAccountName: pipeline
        pipelineRef:
          name: build-deploy
        params:
          - name: revision
            value: $(params.gitrevision)
          - name: repo-url
            value: $(params.gitrepositoryurl)
          - name: image-tag
            value: $(params.gittruncatedsha)
          - name: image
            value: $(params.image)

- Install the TriggerTemplate

cat tekton/trigger-template.yaml | sed "s/REPLACE_IMAGE/$REGISTRY_SERVER\/$REGISTRY_NAMESPACE\/knative-tekton/g" | kubectl apply -f -

- When the Webhook is invoked we want to extract information from the HTTP request sent by the Git server. We will use a TriggerBinding for this; the extracted information is what gets passed to the TriggerTemplate.

Show me the TriggerBinding YAML

apiVersion: triggers.tekton.dev/v1alpha1
kind: TriggerBinding
metadata:
  name: build-deploy
spec:
  params:
    - name: gitrevision
      value: $(body.head_commit.id)
    - name: gitrepositoryurl
      value: $(body.repository.url)
    - name: gittruncatedsha
      value: $(body.extensions.truncated_sha)

- Install the TriggerBinding

kubectl apply -f tekton/trigger-binding.yaml
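The sed command that installs the TriggerTemplate has to escape every slash in the image path because it uses / as the delimiter. As a sketch of an alternative, sed accepts other delimiters, which keeps the substitution readable. render_image is a hypothetical helper, and it assumes the image reference contains no | or & characters.

```shell
# Hypothetical helper: replace the REPLACE_IMAGE placeholder on stdin.
# Using "|" as the sed delimiter avoids escaping the "/" in image paths.
render_image() {
  sed "s|REPLACE_IMAGE|$1|g"
}
```

It could be used as render_image "$REGISTRY_SERVER/$REGISTRY_NAMESPACE/knative-tekton" < tekton/trigger-template.yaml | kubectl apply -f - in place of the escaped version.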

6.3 Create Trigger EventListener

- To handle the HTTP request sent by the GitHub Webhook, we need a web server. Tekton provides a way to define such listeners with an EventListener, which takes the TriggerBinding and the TriggerTemplate as its specification. We can specify Interceptors to handle any customization; for example, I only want to start a new Pipeline when a push happens on the master branch.

Show me the Trigger EventListener YAML

apiVersion: triggers.tekton.dev/v1alpha1
kind: EventListener
metadata:
  name: cicd
spec:
  serviceAccountName: pipeline
  triggers:
    - name: cicd-trig
      bindings:
        - ref: build-deploy
      template:
        name: build-deploy
      interceptors:
        - cel:
            filter: "header.match('X-GitHub-Event', 'push') && body.ref == 'refs/heads/master'"
            overlays:
              - key: extensions.truncated_sha
                expression: "body.head_commit.id.truncate(7)"

- Install the Trigger EventListener

kubectl apply -f tekton/trigger-listener.yaml

- The EventListener creates a deployment and a service. You can list both using this command

kubectl get deployments,eventlistener,svc -l eventlistener=cicd
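The CEL interceptor does two things: it filters events, and it adds a truncated commit sha that the TriggerBinding reads as body.extensions.truncated_sha. The following shell sketch mimics that logic so you can reason about which events start a PipelineRun. Both functions are hypothetical illustrations, and the exact string comparison is a simplification of the CEL header.match call.

```shell
# Hypothetical mimic of the CEL filter: succeed only for a push event
# on the master branch.
should_trigger() {
  event="$1"
  ref="$2"
  [ "$event" = "push" ] && [ "$ref" = "refs/heads/master" ]
}

# Hypothetical mimic of the overlay: keep the first 7 characters of
# the commit id, which later becomes the image tag.
truncated_sha() {
  printf '%.7s\n' "$1"
}
```

A push to any other branch, or any non-push event, fails the filter and no PipelineRun is created.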

6.4 Get URL for Git WebHook

- If you are using the IBM Free Kubernetes cluster, a public IP address is allocated to your worker node, and we will use it for this part of the tutorial. In general this depends on your cluster and how traffic is routed into it: you may need to configure an Application Load Balancer (ALB), an Ingress, or in the case of OpenShift a Route. If you are running the Kubernetes cluster on your local workstation with something like minikube, kind, docker-desktop, or k3s, then I recommend a Cloud Native Tunnel solution like inlets by the open source contributor Alex Ellis.

- Expose the EventListener with Kourier

cat <<EOF | kubectl apply -f -
apiVersion: networking.internal.knative.dev/v1alpha1
kind: Ingress
metadata:
  name: el-cicd
  namespace: $CURRENT_NS
  annotations:
    networking.knative.dev/ingress.class: kourier.ingress.networking.knative.dev
spec:
  rules:
  - hosts:
    - el-cicd.$CURRENT_NS.$KNATIVE_DOMAIN
    http:
      paths:
      - splits:
        - appendHeaders: {}
          serviceName: el-cicd
          serviceNamespace: $CURRENT_NS
          servicePort: 8080
    visibility: ExternalIP
  visibility: ExternalIP
EOF

- Get the URL using CURRENT_NS and KNATIVE_DOMAIN

WARNING: This URL is insecure because it uses http and not https, so you should not use this type of URL in real work environments. In that case you would need to expose the service for the EventListener over a secure connection using https.

GIT_WEBHOOK_URL=http://el-cicd.$CURRENT_NS.$KNATIVE_DOMAIN
echo GIT_WEBHOOK_URL=$GIT_WEBHOOK_URL

- Add the Git Web Hook url to your Git repository

- Open Settings in your Github repository

- Click on the side menu Webhooks

- Click on the top right Add webhook

- Copy and paste the $GIT_WEBHOOK_URL value into the Payload URL

- Select application/json from the Content type drop down

- Select Send me everything to handle all types of git events.

- Click Add webhook

- Now make a change to the application manifest, such as changing the message in knative/service.yaml to something like My First Serverless App @ OSS NA 2020 🎉 🌮 🔥 🤗! and push the change to the master branch

- (Optional) If you can't receive the git webhook, for example when using minikube, you can emulate it by sending an HTTP request directly with a git payload. You can edit the file tekton/hook.json to use a different git commit value.

curl -H "X-GitHub-Event:push" -d @tekton/hook.json $GIT_WEBHOOK_URL

- A new Tekton PipelineRun gets created, starting a new Pipeline instance. You can check its progress in the Tekton Dashboard or use the tkn CLI

tkn pipeline logs -f --last

- To see the details of the execution of the PipelineRun use the tkn CLI

tkn pipelinerun describe --last

- The Knative Application is updated with the new image, built with a tag equal to the first 7 characters of the git commit sha. Describe the service using the kn CLI

kn service describe demo

- Invoke your newly built revision of the Knative Application

curl http://demo.$SUB_DOMAIN

It should print

My First Serverless App @ OSS NA 2020 🎉 🌮 🔥 🤗!
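For the optional webhook emulation in section 6.4, the only payload fields this tutorial's trigger configuration reads are body.ref (CEL filter), body.head_commit.id, and body.repository.url (TriggerBinding). Here is a minimal sketch that generates such a payload. make_hook_payload is a hypothetical helper, and the actual contents of tekton/hook.json may include more fields than shown here.

```shell
# Hypothetical helper: print a minimal push payload containing only the
# fields read by the CEL filter and the TriggerBinding in this tutorial.
make_hook_payload() {
  sha="$1"
  repo_url="$2"
  cat <<EOF
{
  "ref": "refs/heads/master",
  "head_commit": { "id": "$sha" },
  "repository": { "url": "$repo_url" }
}
EOF
}
```

One way to send it is make_hook_payload "$(git rev-parse HEAD)" "$GIT_REPO_URL" | curl -H "X-GitHub-Event:push" -d @- "$GIT_WEBHOOK_URL", where -d @- tells curl to read the request body from standard input.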