This repo is an experiment to understand how concurrency and parallelism work in FastAPI. The ideas I'll be testing are:

- Scenario 1: Sending requests one after the other to the same endpoint and same path

- Scenario 2: Sending requests one after the other to two different endpoints on the same app

I am interested in understanding the behaviour of async and sync path definitions in FastAPI.

# Clone the repo and `cd` to the repo folder

$ pip install -r requirements.txt

$ brew install hey

# Start the service

$ python -m service.server # To exit the service, simply press `CTRL+C`

This basic service has 2 endpoints and both endpoints have 2 paths:

- Endpoint: `e1`
  - Path: `p1` (`def`, 10 second wait)
  - Path: `p2` (`async def`, 1 second wait)

- Endpoint: `e2`
  - Path: `p1` (`def`, 1 second wait)
  - Path: `p2` (`async def`, 1 second wait)

- What happens if we have 1 endpoint and 1 path?

- Open another terminal window for this experimentation setup

- If we send requests one after the other to the same endpoint with a `def` method (without `async`), my assumption is that requests will be served sequentially and the last request will have to wait for a long time.

# Will send 10 requests concurrently to an endpoint with def path which internally waits for 1 second

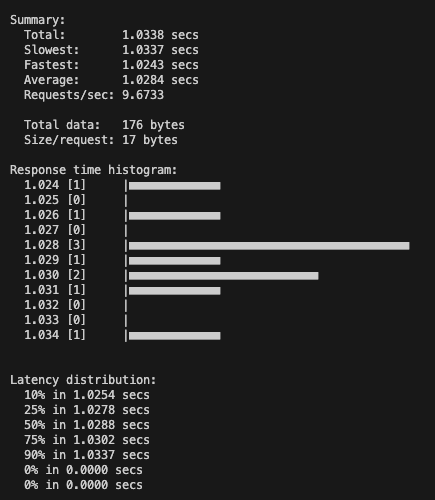

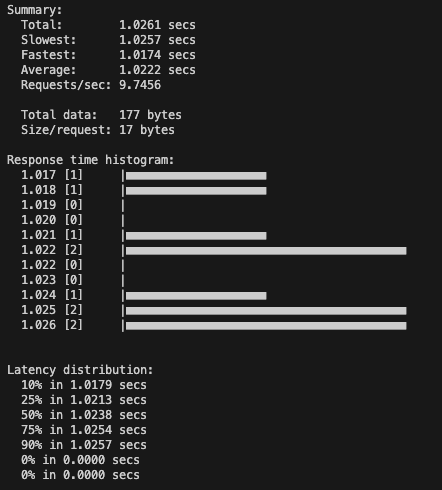

$ hey -n 10 -c 10 http://localhost:8888/e2/p1

Hmm, this is weird. All requests are processed in parallel even though path `p1` of endpoint `e2` is defined with `def`.

- If we send requests one after the other to the same endpoint with an `async def` method, my assumption is that requests will be served concurrently and almost all the requests will return at the same time.

# Will send 10 requests concurrently to an endpoint with async def path which internally waits for 1 second

$ hey -n 10 -c 10 http://localhost:8888/e2/p2

Again, the result is the complete opposite of what I expected. I later realized that until I `await` something inside an `async` function, the function never gives control back to the event loop and hence acts like a blocking call.
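The effect can be reproduced without FastAPI. The sketch below uses only the standard library and is an illustration, not the service's code: `blocking_handler`, `awaiting_handler`, and `run_many` are hypothetical names, and the wait is scaled down to 0.1 seconds so it runs quickly.

```python
import asyncio
import time

WAIT = 0.1  # scaled down from the 1 second wait used by the service


async def blocking_handler():
    # time.sleep never yields to the event loop, so the coroutines run one by one.
    time.sleep(WAIT)


async def awaiting_handler():
    # await hands control back to the event loop while this coroutine waits.
    await asyncio.sleep(WAIT)


async def run_many(handler, n=10):
    """Run n copies of handler concurrently and return the elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(handler() for _ in range(n)))
    return time.perf_counter() - start


blocking_elapsed = asyncio.run(run_many(blocking_handler))
awaiting_elapsed = asyncio.run(run_many(awaiting_handler))
print(f"blocking: {blocking_elapsed:.2f}s, awaiting: {awaiting_elapsed:.2f}s")
```

With ten coroutines, the blocking version should take roughly ten times `WAIT`, while the awaiting version should finish in roughly one `WAIT`.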

- What happens if we have 2 endpoints and separate paths in both of them?

- Open another terminal window for this experimentation setup

- If we send requests one after the other to two different endpoints `e1` and `e2`. Both of them have paths which are `def` methods (without `async`).

In one terminal window

# Will send 10 requests concurrently to an endpoint with def path which internally waits for 10 seconds

$ hey -n 10 -c 10 http://localhost:8888/e1/p1

In another terminal window

# Will send 10 requests concurrently to an endpoint with def path which internally waits for 1 second

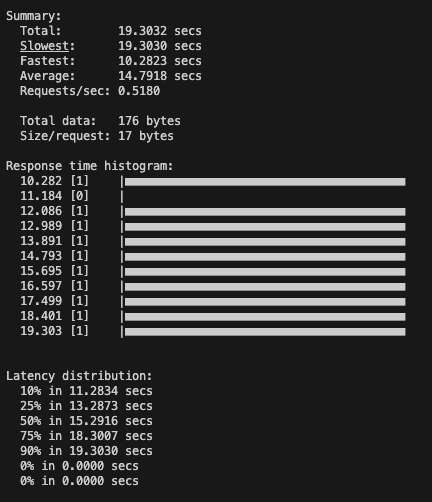

$ hey -n 10 -c 10 http://localhost:8888/e2/p1

Endpoint 1

Endpoint 2

All 20 requests are processed in parallel across both endpoints.

- If we send requests one after the other to two different endpoints

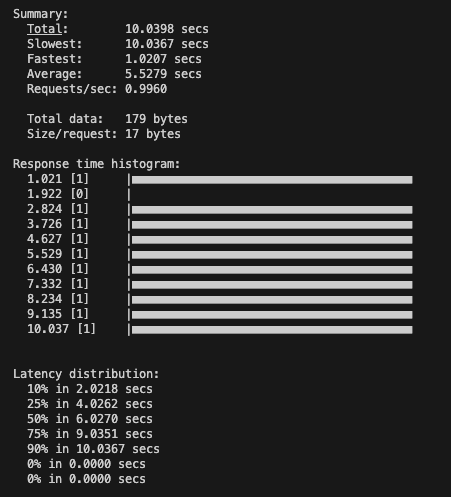

e1ande2. Both of them have paths which areasync defmethods.

In one terminal window

# Will send 10 requests concurrently to an endpoint with async def path which internally waits for 1 second

$ hey -n 10 -c 10 http://localhost:8888/e1/p2

In another terminal window

# Will send 10 requests concurrently to an endpoint with async def path which internally waits for 1 second

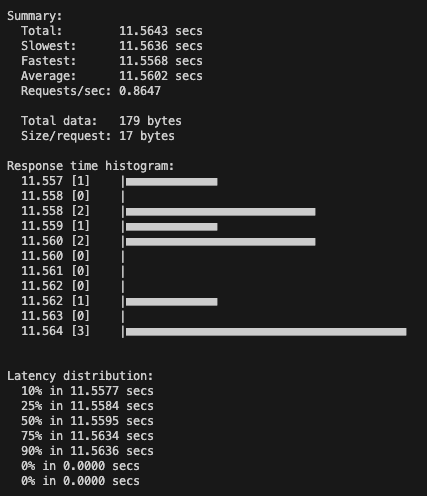

$ hey -n 10 -c 10 http://localhost:8888/e2/p2

Endpoint 1

Endpoint 2

All 20 requests are processed sequentially one after the other.

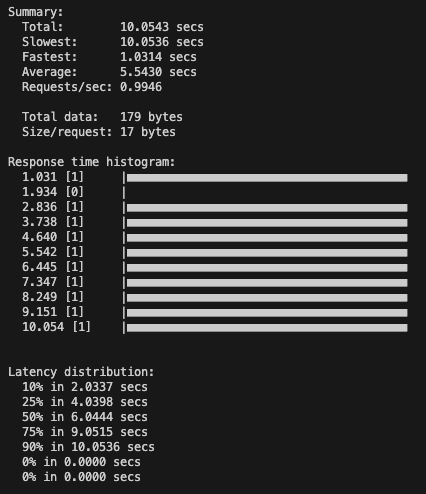

- If we send requests one after the other to two different endpoints `e1` and `e2`. One of the endpoints has an `async` method and the other is a simple `def` method.

In one terminal window

# Will send 10 requests concurrently to an endpoint with def path which internally waits for 10 seconds

$ hey -n 10 -c 10 http://localhost:8888/e1/p1

In another terminal window

# Will send 10 requests concurrently to an endpoint with async def path which internally waits for 1 second

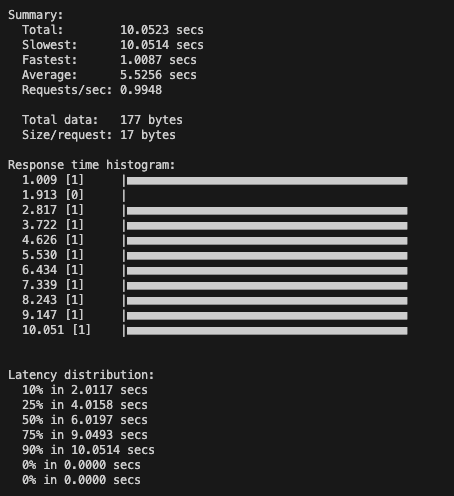

$ hey -n 10 -c 10 http://localhost:8888/e2/p2

Endpoint 1

Endpoint 2

Endpoint 1 processes all requests in parallel. Endpoint 2 processes requests sequentially. Depending on how you run it, the time distribution might be different. Requests to the `def` endpoint run in worker threads, while requests to the `async def` endpoint run on the event loop's thread.

FastAPI runs `def` path functions in a thread pool, one worker thread per in-flight request. This means that, technically, `def` functions in FastAPI are served concurrently.
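The thread-based dispatch can be imitated with the standard library. This is only a stand-in for what FastAPI does internally (it delegates to worker threads through Starlette and AnyIO, not through the `ThreadPoolExecutor` used here), and `sync_handler` and `serve` are hypothetical names. The wait is again scaled down to 0.1 seconds.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor

WAIT = 0.1  # scaled down from the 1 second wait used by the service


def sync_handler():
    # A plain def handler that blocks its own thread while waiting.
    time.sleep(WAIT)


async def serve(n=10):
    """Dispatch n blocking handlers to worker threads; return elapsed seconds."""
    loop = asyncio.get_running_loop()
    start = time.perf_counter()
    # One worker per request, so no handler has to queue behind another.
    with ThreadPoolExecutor(max_workers=n) as pool:
        await asyncio.gather(
            *(loop.run_in_executor(pool, sync_handler) for _ in range(n))
        )
    return time.perf_counter() - start


threaded_elapsed = asyncio.run(serve())
print(f"10 blocking handlers in threads: {threaded_elapsed:.2f}s")
```

Because each handler blocks a different thread, the total time should stay close to a single `WAIT` rather than ten of them, which matches what `hey` reported for the `def` paths.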

For `async def`, unless you `await` something, you don't get any benefit from async and the function ends up being a blocking call. The difference is that inside `async def`, you can `await` coroutines.

Read more about that here.