- Single GPU: 16 GB of GPU memory
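The 16 GB figure is consistent with a back-of-the-envelope estimate for the 7B checkpoint. Assuming fp16 weights (2 bytes per parameter, which this README does not state), the nominal 7 billion parameters take about 13 GiB before activations, the KV cache, and framework overhead are counted. A minimal sketch of that arithmetic, with hypothetical helper names:

```python
# Rough estimate of the GPU memory needed just to hold model weights.
# Assumption: weights are stored in fp16 (2 bytes per parameter); activations,
# the KV cache, and framework overhead come on top of this figure.

GIB = 2**30  # bytes per GiB


def weight_memory_gib(n_params: int, bytes_per_param: int = 2) -> float:
    """Return the memory (GiB) occupied by n_params weights."""
    return n_params * bytes_per_param / GIB


def fits_on_gpu(n_params: int, gpu_gib: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in gpu_gib of device memory."""
    return weight_memory_gib(n_params, bytes_per_param) < gpu_gib


if __name__ == "__main__":
    n = 7_000_000_000  # nominal "7B" parameter count
    print(f"fp16: {weight_memory_gib(n):.2f} GiB")
    print(f"fp32: {weight_memory_gib(n, 4):.2f} GiB")
    print("fp16 weights fit in 16 GiB:", fits_on_gpu(n, 16))
```

The same estimate shows why fp32 weights (about 26 GiB) would not fit on a single 16 GB card.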

Download the pretrained model.

- BitTorrent link: `magnet:?xt=urn:btih:ZXXDAUWYLRUXXBHUYEMS6Q5CE5WA3LVA&dn=LLaMA`

- The final directory:

      .
      ├── inference.py
      ├── llama
      │   ├── generation.py
      │   ├── __init__.py
      │   ├── model_parallel.py
      │   ├── model_single.py
      │   └── tokenizer.py
      ├── LLaMA
      │   ├── 7B
      │   │   ├── checklist.chk
      │   │   ├── consolidated.00.pth
      │   │   └── params.json
      │   ├── tokenizer_checklist.chk
      │   └── tokenizer.model
      ├── requirements.txt
      └── webapp_single.py
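Before launching anything, it can help to confirm that the downloaded model files match the directory tree and that the checkpoint was not corrupted in transit. The sketch below assumes the `.chk` files use the plain `md5sum` text format (`<hex digest>  <filename>`); `missing_files`, `md5_of`, and `verify_checklist` are hypothetical helper names, not part of this repository.

```python
# Sanity-check the model directory before running inference.
# Assumptions: the model files follow the tree above (7B only), and each
# *checklist.chk file uses the md5sum text format "<hex digest>  <filename>".
import hashlib
from pathlib import Path

# Only the downloaded model files are checked, not the Python sources.
EXPECTED_FILES = [
    "LLaMA/7B/checklist.chk",
    "LLaMA/7B/consolidated.00.pth",
    "LLaMA/7B/params.json",
    "LLaMA/tokenizer_checklist.chk",
    "LLaMA/tokenizer.model",
]


def missing_files(root: Path) -> list[str]:
    """Return the expected paths that do not exist under root."""
    return [p for p in EXPECTED_FILES if not (root / p).is_file()]


def md5_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly multi-GB) file in chunks to keep memory use flat."""
    h = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_checklist(checklist: Path) -> dict[str, bool]:
    """Map each file listed in an md5sum-style checklist to pass/fail."""
    results = {}
    for line in checklist.read_text().splitlines():
        if not line.strip():
            continue
        digest, name = line.split(maxsplit=1)
        name = name.lstrip("*")  # md5sum marks binary mode with a leading '*'
        target = checklist.parent / name  # names are relative to the checklist
        results[name] = target.is_file() and md5_of(target) == digest.lower()
    return results


if __name__ == "__main__":
    root = Path(".")
    print("missing:", missing_files(root) or "none")
    for chk in ("LLaMA/7B/checklist.chk", "LLaMA/tokenizer_checklist.chk"):
        if (root / chk).is_file():
            print(chk, verify_checklist(root / chk))
```

If the checklists do use that format, running `md5sum -c checklist.chk` inside `LLaMA/7B` on Linux performs the same checksum comparison.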


Install the required packages.

    pip install -r requirements.txt
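If the install finishes but a script still fails on an import, a quick check is whether every distribution named in `requirements.txt` is actually installed. A minimal sketch using the standard library's `importlib.metadata`; it assumes simple one-requirement-per-line entries, and `parse_requirement_names` and `missing_requirements` are hypothetical helpers.

```python
# Check that every package named in requirements.txt is installed.
# Assumption: one plain requirement per line (e.g. "name" or "name==1.2");
# option lines starting with "-" are skipped, environment markers are ignored,
# and direct-URL requirements are not handled.
import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path


def parse_requirement_names(text: str) -> list[str]:
    """Extract distribution names, dropping comments, blanks and version specs."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue
        # The name ends at the first character that cannot belong to it
        # (a version operator, an extras bracket, ';', or whitespace).
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_requirements(names: list[str]) -> list[str]:
    """Return the names that importlib.metadata cannot find."""
    missing = []
    for name in names:
        try:
            version(name)
        except PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    req = Path("requirements.txt")
    if req.is_file():
        names = parse_requirement_names(req.read_text())
        print("missing:", missing_requirements(names) or "none")
```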

Run the model in one of two ways:

- Inference with a script

      python inference.py

- Run with the Gradio web UI

      python webapp_single.py --ckpt_dir LLaMA/7B \
          --tokenizer_path LLaMA/tokenizer.model \
          --server_name 127.0.0.1 \
          --server_port 7806
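The Gradio command passes four flags. The sketch below is a hypothetical `argparse` parser that accepts the same flags, to show what each one controls; it is not taken from `webapp_single.py`, whose actual parser and defaults may differ. The default port of 7860 is Gradio's own default and is used here only as an assumption.

```python
# Hypothetical sketch of a parser for the flags used by webapp_single.py;
# the real script's argument handling and defaults may differ.
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Serve LLaMA through a Gradio UI")
    parser.add_argument("--ckpt_dir", required=True, help="checkpoint folder, e.g. LLaMA/7B")
    parser.add_argument("--tokenizer_path", required=True, help="e.g. LLaMA/tokenizer.model")
    # 127.0.0.1 keeps the UI reachable only from this machine.
    parser.add_argument("--server_name", default="127.0.0.1")
    # Assumed default: 7860 is Gradio's default port.
    parser.add_argument("--server_port", type=int, default=7860)
    return parser


if __name__ == "__main__":
    # The same arguments as the command above, passed explicitly for illustration.
    argv = ["--ckpt_dir", "LLaMA/7B", "--tokenizer_path", "LLaMA/tokenizer.model",
            "--server_name", "127.0.0.1", "--server_port", "7806"]
    print(build_parser().parse_args(argv))
```

Gradio's `launch()` method accepts `server_name` and `server_port` keyword arguments, so a script along these lines would typically forward the two parsed values to it.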


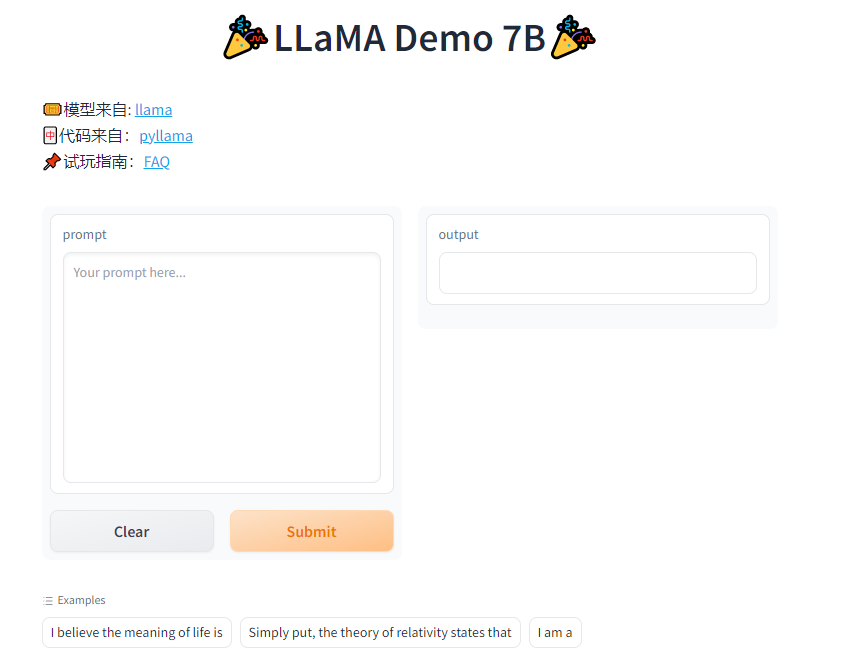

Gradio Result

- Open http://127.0.0.1:7806 (the `--server_port` passed above) in your browser to enjoy it.
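Loading the checkpoint may take a while, so the page might not respond right after the command starts. A small sketch that polls the URL with the standard library and opens a browser once the server answers; it assumes the host and port from the `webapp_single.py` command (127.0.0.1:7806), and `wait_for_server` and `open_when_ready` are hypothetical helpers.

```python
# Poll a local web server until it answers, then open it in the default browser.
# Assumption: host and port match the --server_name/--server_port flags used
# when starting webapp_single.py (127.0.0.1:7806 in the command above).
import time
import urllib.error
import urllib.request
import webbrowser


def wait_for_server(url: str, timeout: float = 120.0, interval: float = 1.0) -> bool:
    """Return True as soon as url answers an HTTP request, False after timeout seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # An HTTP error status still means the server is listening.
            return True
        except OSError:
            # Connection refused or timed out (URLError is an OSError): retry.
            time.sleep(interval)
    return False


def open_when_ready(url: str = "http://127.0.0.1:7806", timeout: float = 120.0) -> bool:
    """Open url in a browser once it responds; return whether it did."""
    if wait_for_server(url, timeout=timeout):
        webbrowser.open(url)
        return True
    print(f"No response from {url} after {timeout:.0f} s; is webapp_single.py running?")
    return False
```

Calling `open_when_ready()` from a second terminal after starting the server opens the UI as soon as it is reachable.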
