The ICU Surveillance Event Detection System is designed to improve patient monitoring in hospital intensive care units (ICUs).

It uses YOLO (You Only Look Once) for real-time object detection and provides an adaptive workflow for annotating and capturing critical events during shifts.

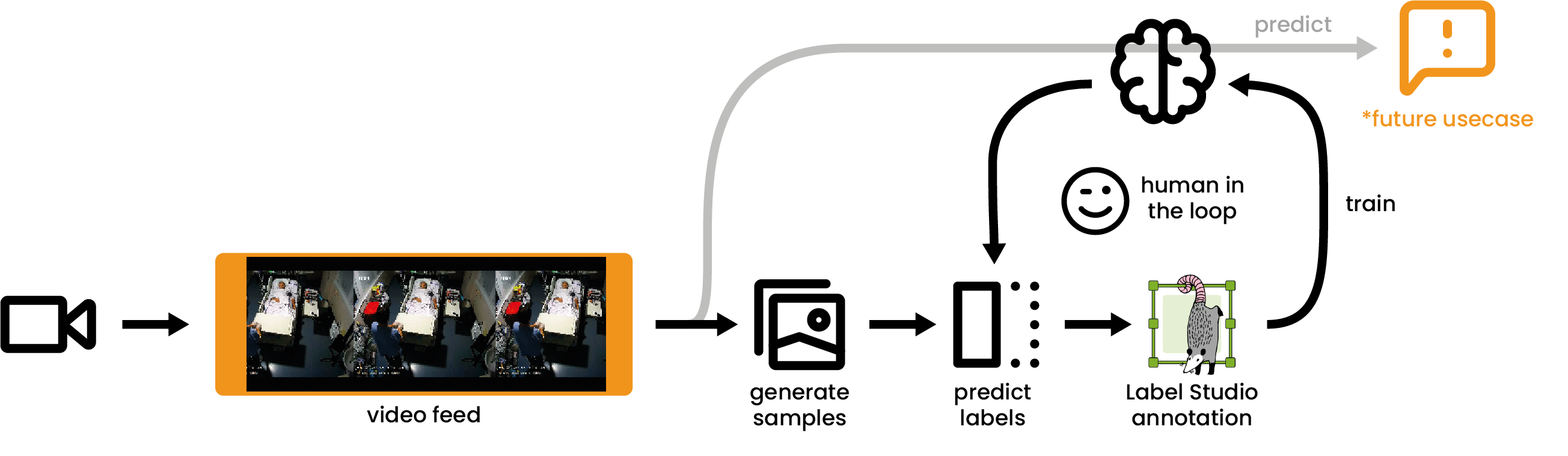

Open-Vocabulary Model: Pre-annotation with an open-vocabulary model makes it possible to identify novel classes the model was not trained on, which helps the system adapt to new scenarios and classes.

Human-in-the-Loop System: A human-in-the-loop system improves model accuracy and adaptability through continuous annotation, so the model keeps improving as new annotations arrive.

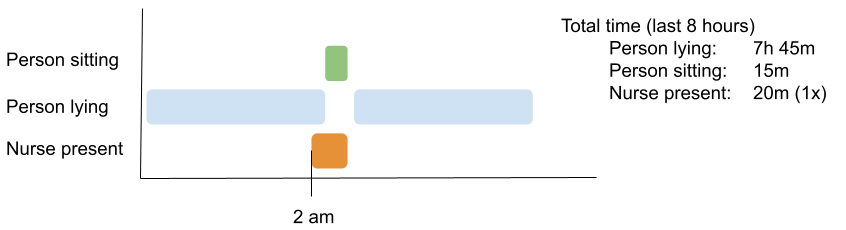

Real-time Monitoring: Utilizing YOLO for object detection, the system ensures fast and accurate identification of events within ICU rooms.

The data annotation process, the core of our system, consists of three phases:

- Video Segmentation: We start by extracting relevant segments from the videos and converting them into images for easier handling. Using only the relevant portions of each video minimizes the data-processing workload.

-

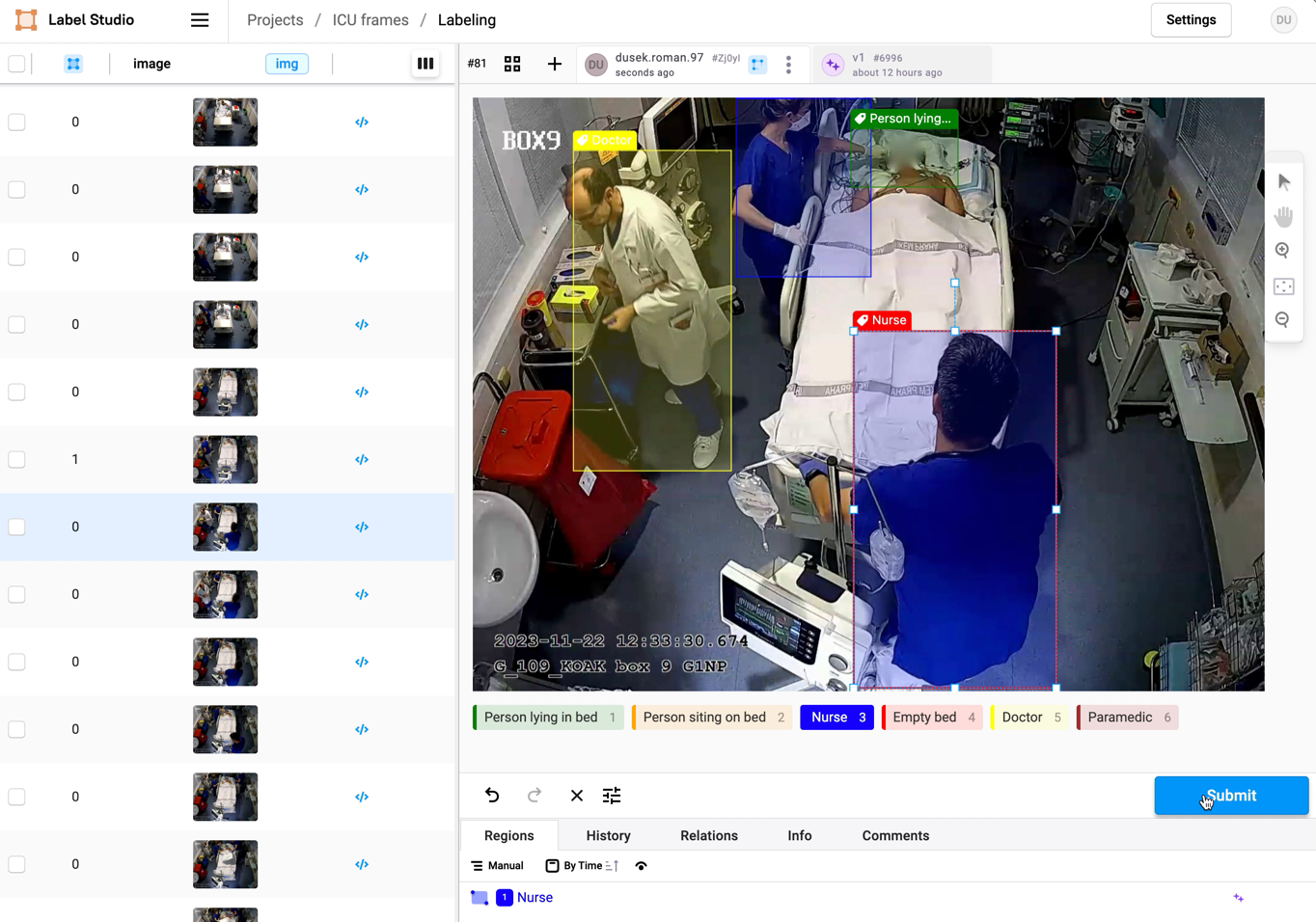

Open-Vocabulary Pre-Annotation: In the subsequent step, we employ an open-vocabulary model for pre-annotation of images. This model, designed for large-scale object detection, significantly accelerates the annotation process. Its expansive capabilities enhance the efficiency of annotators by providing a head start in labeling diverse objects within the images.

- Human-Annotator Validation: Finally, human annotators review and correct the pre-annotated images. This step serves a dual purpose: it validates the accuracy of the pre-annotations and allows any necessary corrections, so human expertise refines the model's annotations. This iterative cycle is applied to every image in the dataset.
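The Video Segmentation phase keeps only the parts of a video worth annotating. The repository's `video_frame_generator.py` accepts `--time-window` and `--threshold` flags, but their exact semantics are not documented here, so the following is a minimal sketch under an assumption: a per-second motion score (for example, summed pixel differences between consecutive frames) is compared against the threshold, and each active second is padded by the time window and merged into intervals. The function `select_intervals` and its parameters are hypothetical.

```python
from typing import List, Tuple


def select_intervals(
    motion_scores: List[float],
    threshold: float,
    time_window: int,
) -> List[Tuple[int, int]]:
    """Return merged (start, end) intervals in seconds, end exclusive.

    Hypothetical sketch: motion_scores[i] is an assumed motion score for second i.
    """
    n = len(motion_scores)
    intervals: List[Tuple[int, int]] = []
    for second, score in enumerate(motion_scores):
        if score <= threshold:
            continue  # ignore quiet seconds
        # pad the active second by the time window on both sides, clamped to the video length
        start = max(0, second - time_window)
        end = min(n, second + time_window + 1)
        if intervals and start <= intervals[-1][1]:
            # overlaps or touches the previous interval: extend it instead of starting a new one
            intervals[-1] = (intervals[-1][0], max(intervals[-1][1], end))
        else:
            intervals.append((start, end))
    return intervals
```

For example, with `threshold=700` and `time_window=5`, motion spikes at seconds 10 and 15 merge into the single interval `(5, 21)`, so annotators see one continuous clip instead of two overlapping ones.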

This multi-phase approach streamlines the annotation workflow and, by combining automated pre-annotation with human validation, keeps the annotations accurate and relevant.
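The validated boxes eventually have to be exported in the label format YOLO trains on: one text file per image, with one line per object containing the class index followed by the box centre, width, and height, all normalized to [0, 1] by the image size. The sketch below converts a pixel-space box `(x_min, y_min, x_max, y_max)` into such a line; the helper `to_yolo_line` is hypothetical and is not taken from the repository's export script.

```python
from typing import Tuple


def to_yolo_line(
    class_id: int, box: Tuple[float, float, float, float], img_w: int, img_h: int
) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} lies outside a {img_w}x{img_h} image")
    # YOLO stores the box centre and size, normalized by the image dimensions
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"
```

For a 640x480 frame, `to_yolo_line(0, (100, 200, 300, 400), 640, 480)` yields `"0 0.312500 0.625000 0.312500 0.416667"`.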

Integrating YOLO into the ICU Surveillance Event Detection System enables real-time, accurate object detection within hospital intensive care units. YOLO is a deep learning object detector that localizes and classifies objects in a single pass over an image or video frame, which makes it fast enough for real-time use while keeping accuracy high.
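Like other YOLO detectors, YOLOv8 produces many overlapping candidate boxes and keeps the best ones with non-maximum suppression (NMS) based on intersection over union (IoU). Ultralytics performs this step internally; the sketch below only illustrates the idea in plain Python. It is class-agnostic for simplicity, and the 0.5 IoU threshold is an illustrative assumption rather than the project's setting.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return the indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # keep a box only if it does not overlap too much with any box already kept
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

Two boxes around the same patient that overlap with IoU of about 0.68 collapse into the higher-scoring one, while a distant box survives.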

The Python notebook `Train_YOLOv8.ipynb` in the repository root documents the YOLO training process.

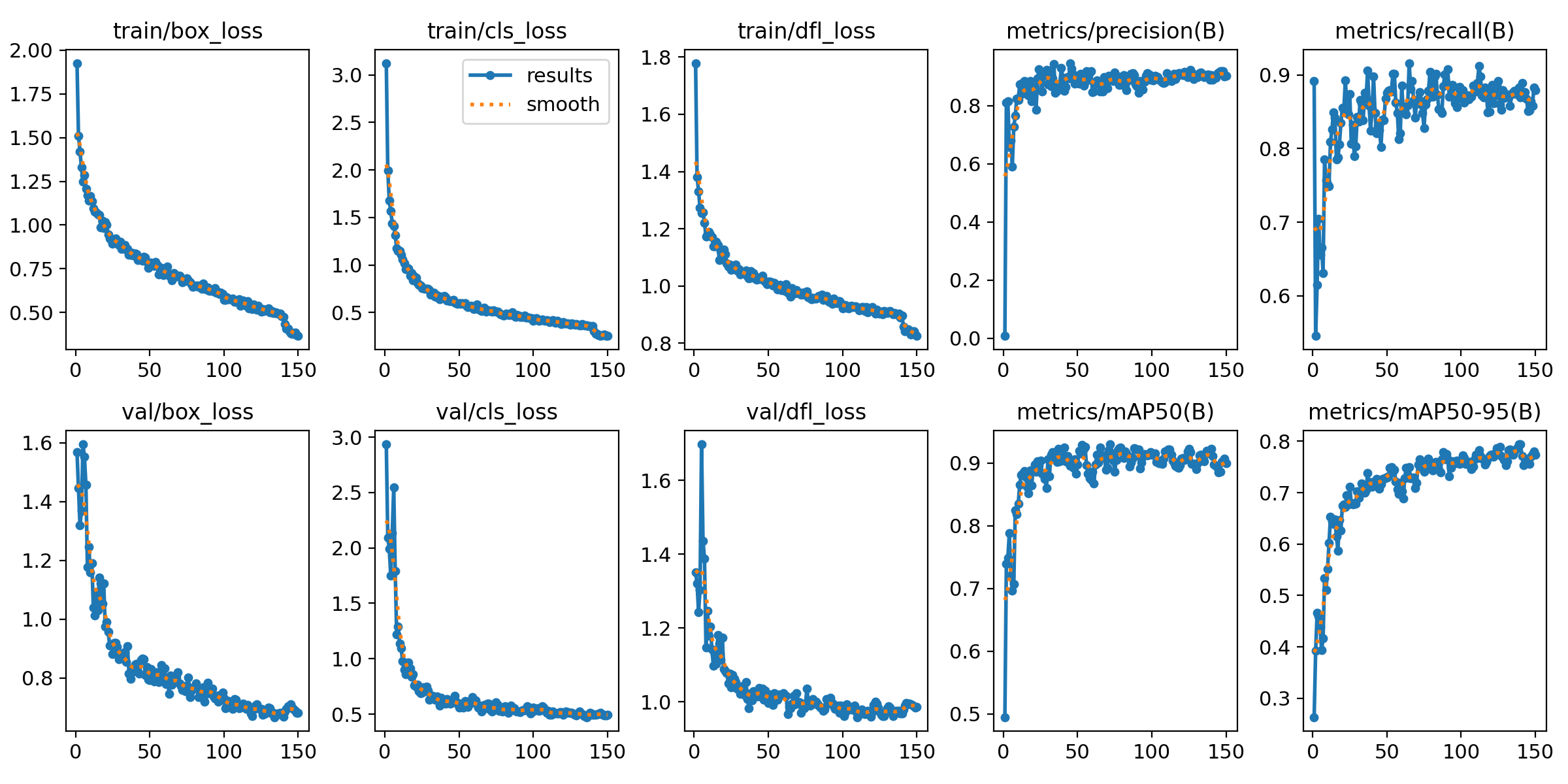

For the experimental training phase, we used YOLOv8. The training set contained 400 images and the validation set 100 images. The training process and results are summarized in the final statistics shown in the image.
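The notebook's exact splitting procedure is not shown here. As a minimal sketch, assuming the 500 annotated images are split at random with a fixed seed, an 80/20 split reproduces the 400/100 train/validation sizes; `split_dataset` and the seed value are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(
    images: Sequence[str], val_fraction: float = 0.2, seed: int = 42
) -> Tuple[List[str], List[str]]:
    """Shuffle image paths reproducibly and split them into (train, val) lists."""
    shuffled = list(images)
    random.Random(seed).shuffle(shuffled)  # a local RNG keeps the split reproducible
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]
```

Fixing the seed means the same images land in the validation set on every run, so metrics from different training runs stay comparable.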


After extensive training, the most effective model weights were identified and are now stored in `weights/best.pt`.
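A minimal sketch of running the stored weights on a single frame, assuming the `ultralytics` package (the standard package for YOLOv8) is installed; the repository's pinned dependencies are not shown here. The helpers `detect` and `count_classes` and the example class names are hypothetical; only `YOLO(...)`, `predict`, and the `Results.boxes` / `Results.names` attributes come from Ultralytics.

```python
from collections import Counter
from typing import Dict, List, Tuple

Detection = Tuple[str, float, List[float]]  # (class name, confidence, [x1, y1, x2, y2])


def count_classes(detections: List[Detection], min_conf: float = 0.5) -> Dict[str, int]:
    """Count detections per class name, ignoring those below min_conf."""
    return dict(Counter(name for name, conf, _ in detections if conf >= min_conf))


def detect(image_path: str, weights: str = "weights/best.pt") -> List[Detection]:
    """Run the trained YOLOv8 model on one image (requires the ultralytics package)."""
    from ultralytics import YOLO  # imported lazily so count_classes works without it

    model = YOLO(weights)
    detections: List[Detection] = []
    for result in model.predict(image_path):
        boxes = result.boxes
        for cls, conf, xyxy in zip(boxes.cls.tolist(), boxes.conf.tolist(), boxes.xyxy.tolist()):
            detections.append((result.names[int(cls)], float(conf), xyxy))
    return detections
```

Usage, with a hypothetical frame path: `count_classes(detect("myfiles/images/frame.jpg"))` returns a per-class count of confident detections in that frame.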

To use the trained YOLO model weights, clone the repository with Git LFS:

```shell
git lfs clone git@github.com:roman-dusek/VitalWatch.git
```

```shell
# after cloning the repo, install dependencies
make install_local
```

We have made our project as simple as possible to use: every stage of the system is run with a make command.

```shell
# clip videos into interesting segments/frames to be annotated
# the script expects videos in the myfiles/raw_videos folder
# it creates the myfiles/images folder and puts the clipped output there
# you can also choose to export short clips, which can also be annotated for object tracking
make clip_videos
> Clipping videos has finished.
> Clipped 12 videos, in total 8 hours of video.
> Prepared 554 interval for pre-annotation, in total 2 hours of video.
```

```shell
# pre-annotate images using the open-vocabulary model
make run_pre-annotation
> Pre-annotation has finished
> Recognizing 1873 objects in 554 intervals.
```

```shell
# start Label Studio so annotators can review and correct the pre-annotations
make start_labelstudio
```

```shell
# generate images from the interesting parts of a video; the default output folder is myfiles/images
python video_frame_generator.py --file-name=video.avi --time-window=20 --threshold=700 --save-path=myfiles/images
```

```shell
# generate the yolo-export training structure, ready for training; it is stored in the myfiles/yolo-export folder
python generate_yolo_structure.py --label-file=myfiles/pre-annotation/labels.csv --detection-file=myfiles/pre-annotation/detections.csv
```

One of our goals is to provide a real-time monitoring system for ICU patients. This can be achieved by using YOLO to detect the patient's movement and notifying the medical staff when a dangerous event is detected. To this end, we have implemented a notification system that alerts the medical staff when a patient needs attention. Notifications can be sent either to the main dashboard or to a mobile device.

Example for illustration purposes.
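The alerting rule itself is not described in detail. A minimal sketch, assuming a detection-based rule: raise an alert when a watched class (the class name `patient_out_of_bed` below is hypothetical) is detected in a number of consecutive frames, then stay quiet for a cooldown so staff are not flooded with duplicate notifications. Delivery to the dashboard or a mobile device is left out, and `AlertRule` and its parameters are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class AlertRule:
    watched_class: str
    min_consecutive: int = 3  # frames the event must persist before alerting
    cooldown: int = 30  # frames during which repeated alerts are suppressed
    _streak: int = field(default=0, init=False)
    _quiet_until: int = field(default=-1, init=False)

    def update(self, frame_idx: int, detected: Set[str]) -> bool:
        """Feed the class names detected in one frame; return True if an alert fires."""
        if self.watched_class in detected:
            self._streak += 1
        else:
            self._streak = 0  # the event must be continuous to count
        if self._streak >= self.min_consecutive and frame_idx > self._quiet_until:
            self._quiet_until = frame_idx + self.cooldown
            return True
        return False
```

Requiring several consecutive frames filters out single-frame false positives, and the cooldown turns a long-lasting event into occasional reminders rather than one alert per frame.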

Next steps for the project, including additional features and improvements:

- Automate the pipeline so it can run locally inside IKEM

- Schedule jobs using Airflow