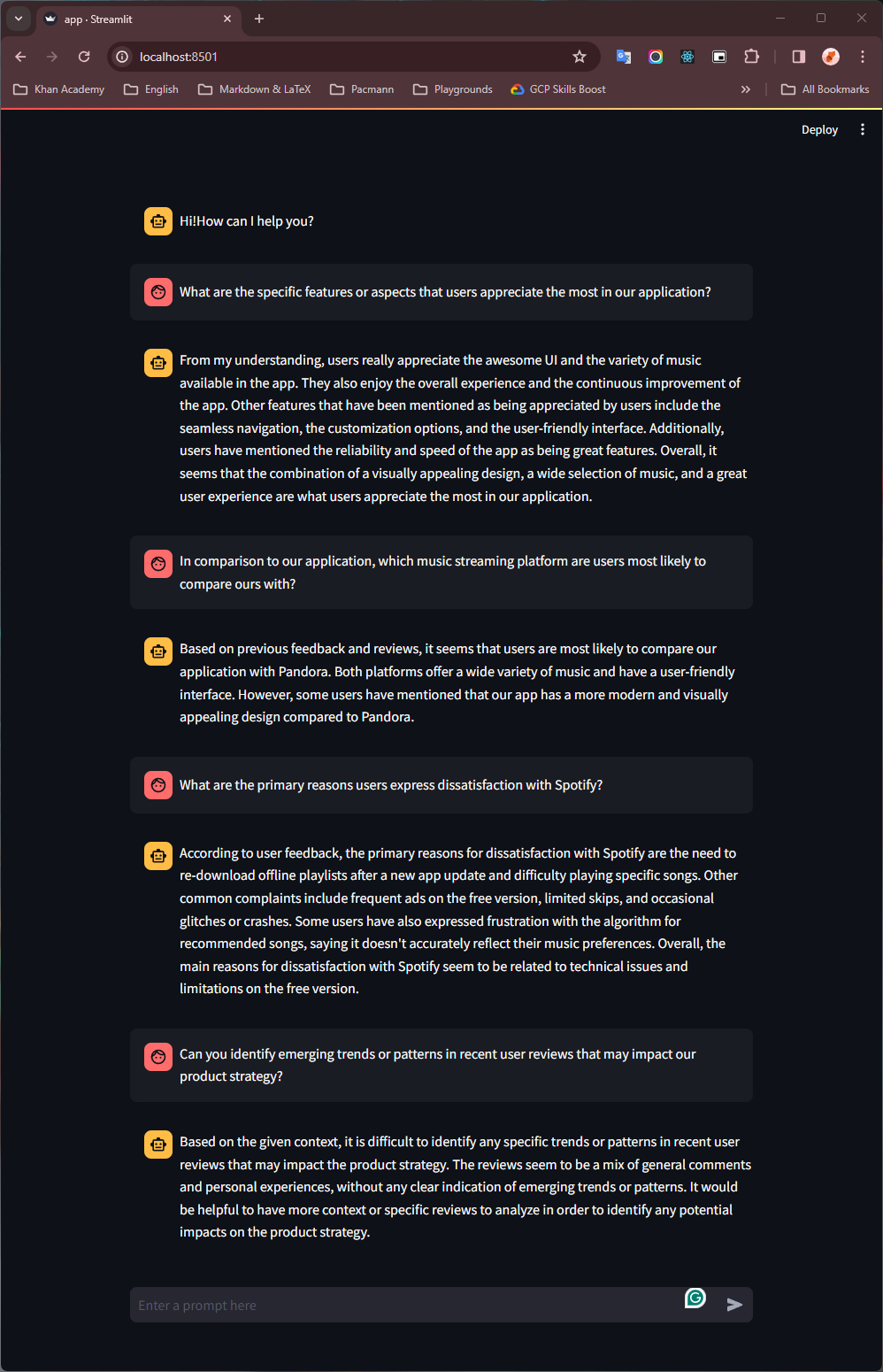

A Q&A tool for extracting meaningful information from Google Store reviews.

Prerequisites:

- WSL or Ubuntu 22.04 (the environment I used).

- Python 3.10

- virtualenv

Setup steps:

- Create and activate the virtualenv: `virtualenv .venv -p /usr/bin/python3.10`, then `source .venv/bin/activate`.

- Install the Python packages: `pip install -r requirements.txt`
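Before installing the packages, it can help to confirm that the interpreter really is Python 3.10 and that the virtualenv is active. The sketch below is not part of this repository; `in_virtualenv` and `check_environment` are hypothetical helper names. It relies on the fact that inside a stdlib `venv` or a virtualenv 20+ environment, `sys.prefix` points at the environment while `sys.base_prefix` still points at the base installation.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a virtual environment.

    venv and virtualenv 20+ set sys.prefix to the environment directory,
    while sys.base_prefix keeps pointing at the base Python installation.
    """
    return sys.prefix != sys.base_prefix


def check_environment(required=(3, 10)) -> list:
    """Collect human-readable problems with the current interpreter (empty list = OK)."""
    problems = []
    if tuple(sys.version_info[:2]) != tuple(required):
        problems.append(
            f"expected Python {required[0]}.{required[1]}, "
            f"got {sys.version_info.major}.{sys.version_info.minor}"
        )
    if not in_virtualenv():
        problems.append("no virtualenv is active; run `source .venv/bin/activate`")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Running it with `python check_env.py` (a hypothetical file name) from the activated `.venv` should print nothing.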

Steps to run the pipeline:

- Prepare the environment variables that hold the OpenAI and Pinecone credentials. Example variables are listed in the `.env.example` file.

- Fill in your credentials in `.env.example` and rename it to `.env`.

- Load the environment variables with the `export` command: `export $(grep -v '^#' .env | xargs)`

- Run the pipeline by executing `main.py`: `python main.py`

- All configuration parameters are stored in `config/config.yml`.
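For reference, the `export $(grep -v '^#' .env | xargs)` one-liner can be approximated in Python. This is a minimal sketch, not the project's code: `parse_env`, `load_env`, and the variable names `OPENAI_API_KEY` and `PINECONE_API_KEY` are assumptions, so check `.env.example` for the real names.

```python
import os


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and `#` comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a KEY=VALUE assignment; ignore it
        value = value.strip()
        # Drop one pair of matching surrounding quotes, as xargs does.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        values[key.strip()] = value
    return values


# Hypothetical variable names: copy the real ones from .env.example.
REQUIRED_VARS = ("OPENAI_API_KEY", "PINECONE_API_KEY")


def load_env(path: str = ".env", required=REQUIRED_VARS) -> list:
    """Copy the .env values into os.environ and return required names that are still unset."""
    with open(path, encoding="utf-8") as fh:
        os.environ.update(parse_env(fh.read()))
    return [name for name in required if not os.environ.get(name)]
```

Unlike the shell one-liner, this keeps values that contain spaces intact. If you prefer a maintained library, `python-dotenv` provides `load_dotenv()` for the same job.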

The pipeline runs the following stages in this order:

- Data ingestion (automatically downloads the datasets).
  - Important params:
    - `force_ingest`: set to `True` to replace the current datasets.

- Upsert the documents (select the useful features (columns), load the CSV as Documents, chunk the documents, embed the documents, and upsert them to the Pinecone database).
  - Important params:
    - `data_length`: the number of dataset rows to use as documents. A value of `-1` upserts all the data to Pinecone.
    - `force_upsert`: set to `True` to replace the current documents in Pinecone.

- Evaluation (evaluate the LLM's performance). This part is already done in `notebook/10_evaluation.ipynb`, but I still need time to implement it as part of the pipeline.

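The three parameters above can be read as simple guards. The sketch below is only an illustration of the described behaviour, not the code in `main.py`: the helper names, the config values, and the dataset path are hypothetical, and it assumes `config/config.yml` has already been loaded into a dict (for example with PyYAML's `yaml.safe_load`). It also assumes that, with `force_upsert` set to `False`, an index that already holds documents is left alone.

```python
import os


def should_ingest(dataset_path: str, force_ingest: bool) -> bool:
    """Download when the dataset is missing, or always when force_ingest is True."""
    return force_ingest or not os.path.exists(dataset_path)


def select_rows(rows: list, data_length: int) -> list:
    """Keep every row when data_length is -1, otherwise the first data_length rows."""
    if data_length == -1:
        return rows
    if data_length < 0:
        raise ValueError("data_length must be -1 (all rows) or a non-negative count")
    return rows[:data_length]


def should_upsert(index_has_documents: bool, force_upsert: bool) -> bool:
    """Upsert into an empty index, or replace its documents when force_upsert is True."""
    return force_upsert or not index_has_documents


# Hypothetical config values and dataset path; the real ones live in config/config.yml.
config = {"force_ingest": False, "data_length": 3, "force_upsert": False}
rows = [{"review": f"review {i}"} for i in range(10)]

print(should_ingest("data/reviews.csv", config["force_ingest"]))
print(len(select_rows(rows, config["data_length"])))  # 3
print(should_upsert(index_has_documents=True, force_upsert=config["force_upsert"]))  # False
```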

Steps to run the Streamlit app:

- Prepare the environment variables that hold the OpenAI and Pinecone credentials. Example variables are listed in the `.env.example` file.

- Fill in your credentials in `.env.example` and rename it to `.env`.

- Load the environment variables with the `export` command: `export $(grep -v '^#' .env | xargs)`

- Run the Streamlit app defined in `app.py`: `streamlit run app.py`

To do:

- Convert `notebook/10_evaluation.ipynb` into a pipeline stage.

- Try more prompting techniques, such as Summarization and Self-Querying, to improve the chatbot. Because the data is CSV, the metadata can be used as input.

- Split the "Upsert the documents" pipeline into more detailed parts:
  - Select the useful features (columns)
  - Load the CSV as Documents
  - Chunk the documents
  - Embed the documents
  - Upsert to the Pinecone database
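The first three parts listed above (select columns, load the CSV as Documents, chunk) can be sketched with the standard library alone. This is a hypothetical illustration, not the repository's implementation. `Document` here is a minimal stand-in shaped like a LangChain Document. The column names `content`, `score`, and `app` are assumptions. Chunking uses a plain fixed-size character window with overlap, which may differ from the splitter the project actually uses. Keeping the selected columns as metadata on every chunk is what would later let a Self-Querying retriever filter on them.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Document:
    """Minimal stand-in for a LangChain-style Document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


# Hypothetical column names; replace them with the dataset's real columns.
TEXT_COLUMN = "content"
METADATA_COLUMNS = ("score", "app")


def load_documents(csv_text: str) -> list:
    """Select the useful columns and turn each CSV row into one Document."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        Document(
            page_content=row[TEXT_COLUMN],
            metadata={col: row[col] for col in METADATA_COLUMNS},
        )
        for row in reader
    ]


def chunk_document(doc: Document, size: int = 200, overlap: int = 20) -> list:
    """Split a Document into character windows of `size` that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    text, step = doc.page_content, size - overlap
    chunks = []
    for start in range(0, len(text), step):
        # Each chunk keeps its own copy of the row metadata for later filtering.
        chunks.append(Document(text[start:start + size], dict(doc.metadata)))
        if start + size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


sample = "content,score,app\nGreat app but it crashes on login,3,com.example.app\n"
docs = load_documents(sample)
chunks = [c for d in docs for c in chunk_document(d, size=16, overlap=4)]
print([c.page_content for c in chunks])
```

Note that `csv.DictReader` yields strings, so a numeric column such as `score` would need converting before numeric metadata filters can use it. The remaining two parts (embedding each chunk and upserting the vectors with their metadata to Pinecone) depend on the OpenAI and Pinecone clients pinned in `requirements.txt` and are left out here.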