Code for the Paper "ConTextual: Evaluating Context-Sensitive Text-Rich Visual Reasoning in Large Multimodal Models".

For more details, please refer to the project page with dataset exploration and visualization tools: https://con-textual.github.io/.

🔔 If you have any questions or suggestions, please don't hesitate to let us know. You can comment on Twitter or open an issue on this repository.

[Webpage] [Paper] [Huggingface Dataset] [Leaderboard] [Twitter]

Tentative logo for ConTextual.

"A visually striking logo featuring 'Con' and 'ual' in black on white, with the word 'Text' in an inverted color scheme for emphasis."

- [2024.07.20] A video presentation of ConTextual is out: https://www.youtube.com/watch?v=meVkolyVIBc

- [2024.07.18] GPT4o-mini is just 1% behind GPT4o, despite being considerably smaller!

- [2024.06.20] Claude-3.5-Sonnet outperforms Claude-3-Opus by 19% 😲

- [2024.05.18] GPT4o sets the new SOTA 62.8% 🥳 yet still trails humans by 7% — room for growth remains!

- [2024.05.18] Gemini-1.5-Flash and Gemini-1.5-Pro outperform the prior SOTA (GPT4v) by 9% and 5% respectively.

- [2024.05.01] ConTextual is accepted at the ICML 2024 main conference 🎉 and at the DMLR workshop, ICML 2024.

- [2024.04.12] ConTextual is accepted as a Spotlight at the 1st Evaluation of Generative Foundation Models (EVGENFM) workshop at CVPR 2024!

- [2024.04.12] ConTextual is accepted at the 3rd Vision Datasets Understanding (VDU) workshop at CVPR 2024!

- [2024.03.08] LLaVA-Next-34B pushes the open-source LMM SOTA to 36.8 👏, less than 4% behind Gemini-Pro-Vision 😮

- [2024.03.05] Preliminary analysis of Claude 3 Opus: it also fails on the instances where the best-performing model, GPT4V, fails 👀 Stay tuned for the detailed analysis! Tweet

- [2024.03.05] A blog post detailing the ConTextual dataset has been published in collaboration with HuggingFace. Thank you, Clementine Fourrier, for the support!

- [2024.01.24] The Test and Val leaderboards are now hosted on HuggingFace! You can submit your Val subset predictions directly on HuggingFace to get a quick view of where your model stands: HuggingFace Leaderboard

- [2024.01.24] The Test and Val leaderboards are out Leaderboard

- [2024.01.24] Our dataset is now accessible at Huggingface Datasets.

- [2024.01.24] Our work was featured by AK on Twitter. Thanks!

- [2024.01.24] Our paper is now accessible at https://arxiv.org/abs/2401.13311.

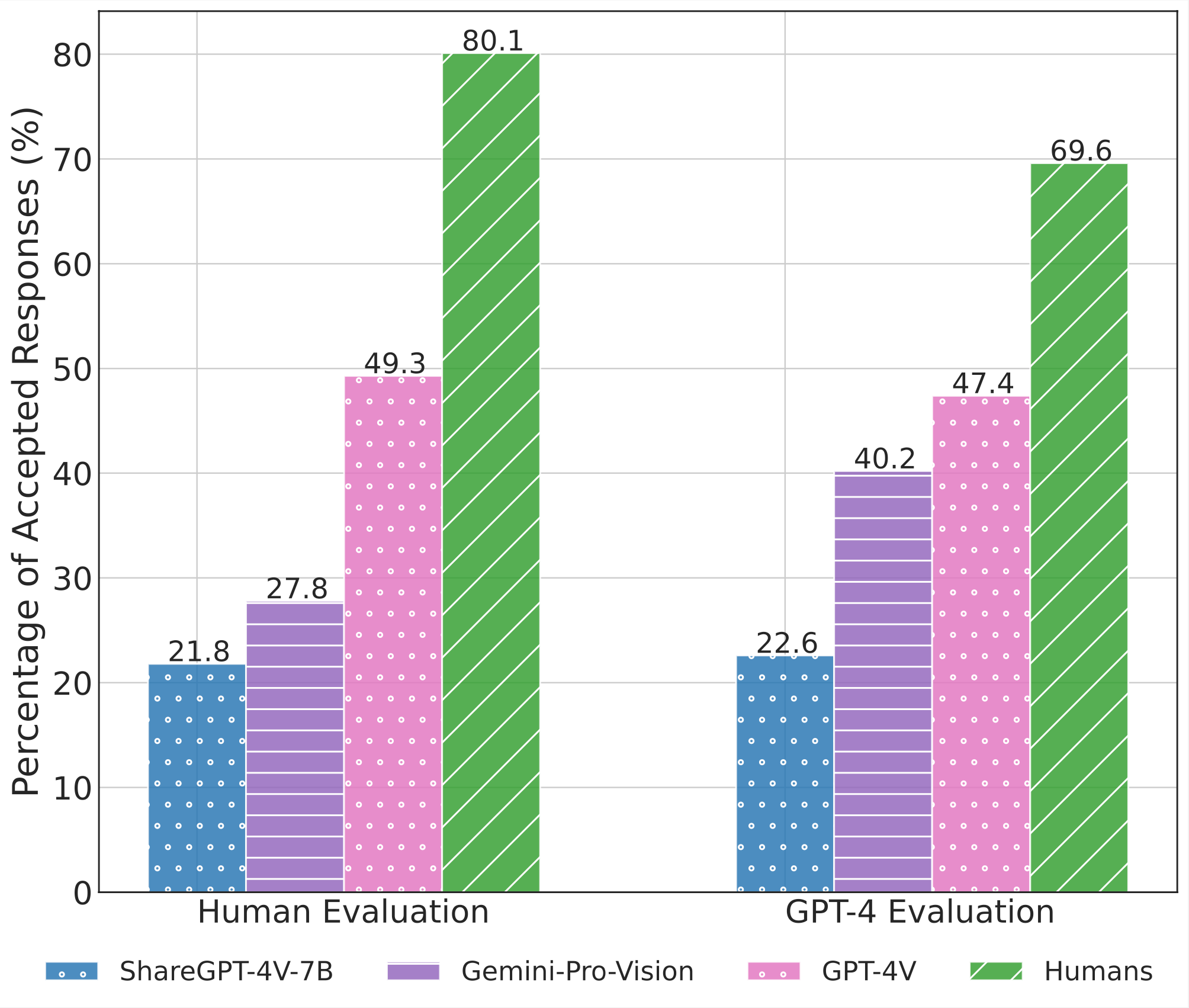

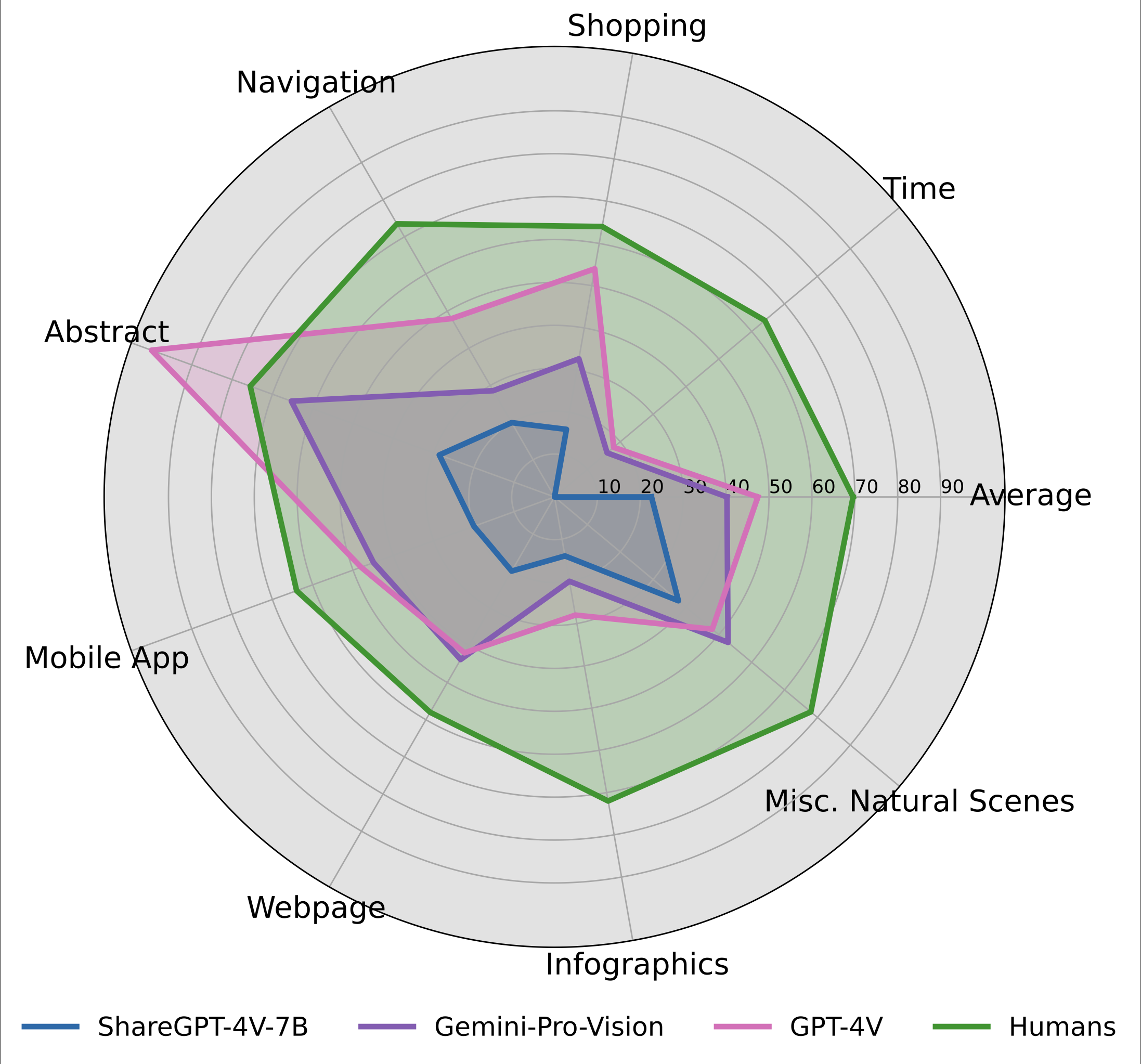

Recent advancements in AI have led to the development of large multimodal models (LMMs) capable of processing complex tasks involving joint reasoning over text and visual content in the image (e.g., navigating maps in public places). This paper introduces ConTextual, a novel benchmark comprising instructions designed explicitly to evaluate LMMs' ability to perform context-sensitive text-rich visual reasoning. ConTextual emphasizes diverse real-world scenarios (e.g., time-reading, navigation, shopping and more) demanding a deeper understanding of the interactions between textual and visual elements. Our findings reveal a significant performance gap of 30.8% between the best-performing LMM, GPT-4V(ision), and human capabilities under human evaluation, indicating substantial room for improvement in context-sensitive text-rich visual reasoning. Notably, while GPT-4V excelled in abstract categories like meme and quote interpretation, its overall performance still lagged behind humans. In addition to human evaluations, we also employed automatic evaluation metrics using GPT-4, uncovering similar trends in performance disparities. We also perform a fine-grained evaluation across diverse visual contexts and provide a qualitative analysis that offers a robust framework for future advancements in LMM design.

Performance of GPT-4V, Gemini-Pro-Vision, ShareGPT-4V-7B, and Humans on ConTextual benchmark. Left: Human evaluation and an automatic GPT-4 based evaluation of the response correctness. Right: Fine-grained performance with variation in visual contexts using GPT-4 based evaluation.

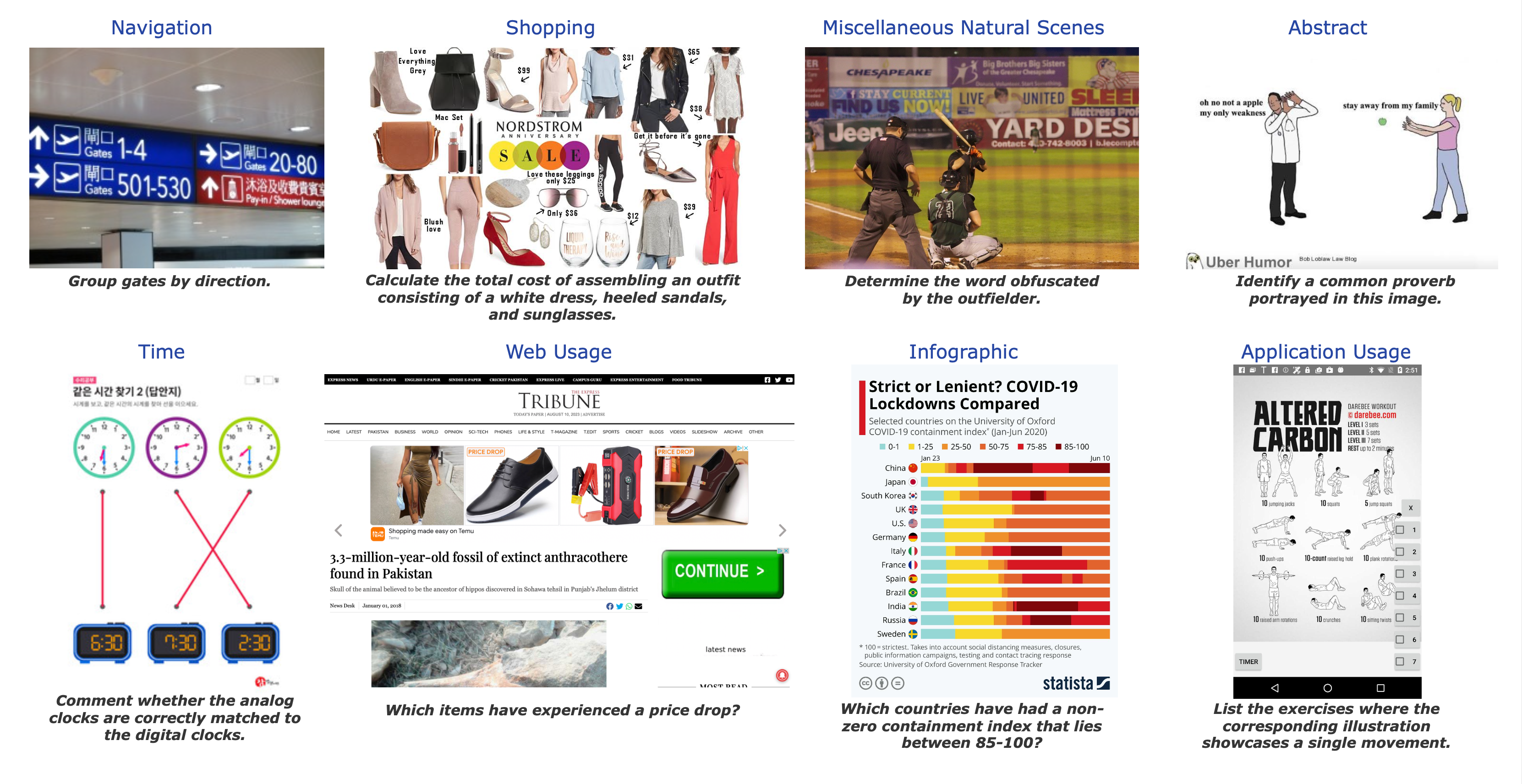

A sample from each of the 8 visual scenarios in ConTextual benchmark.

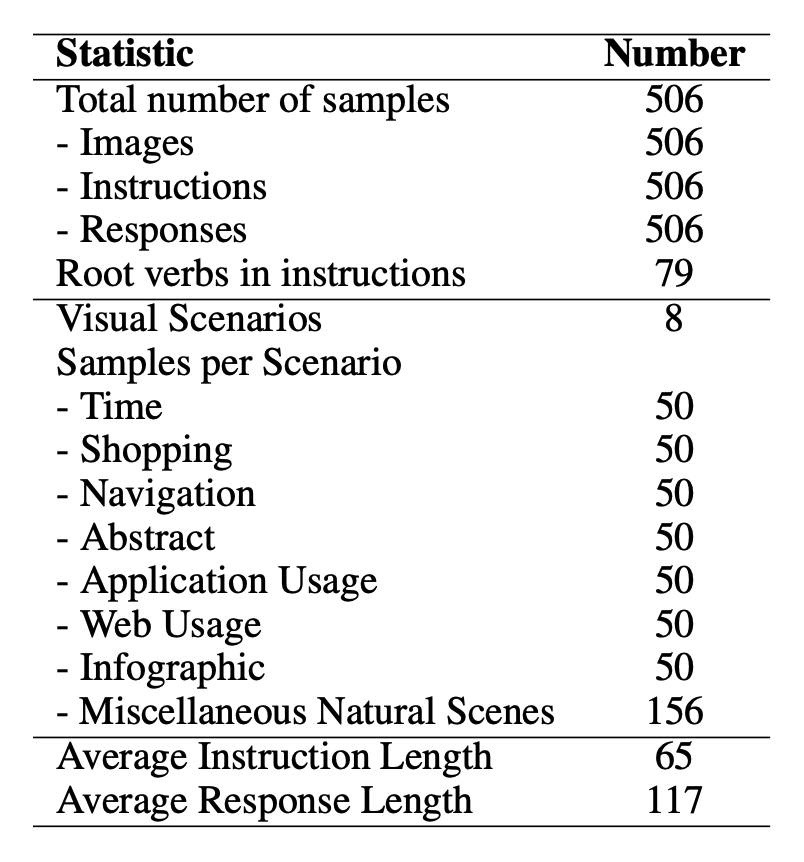

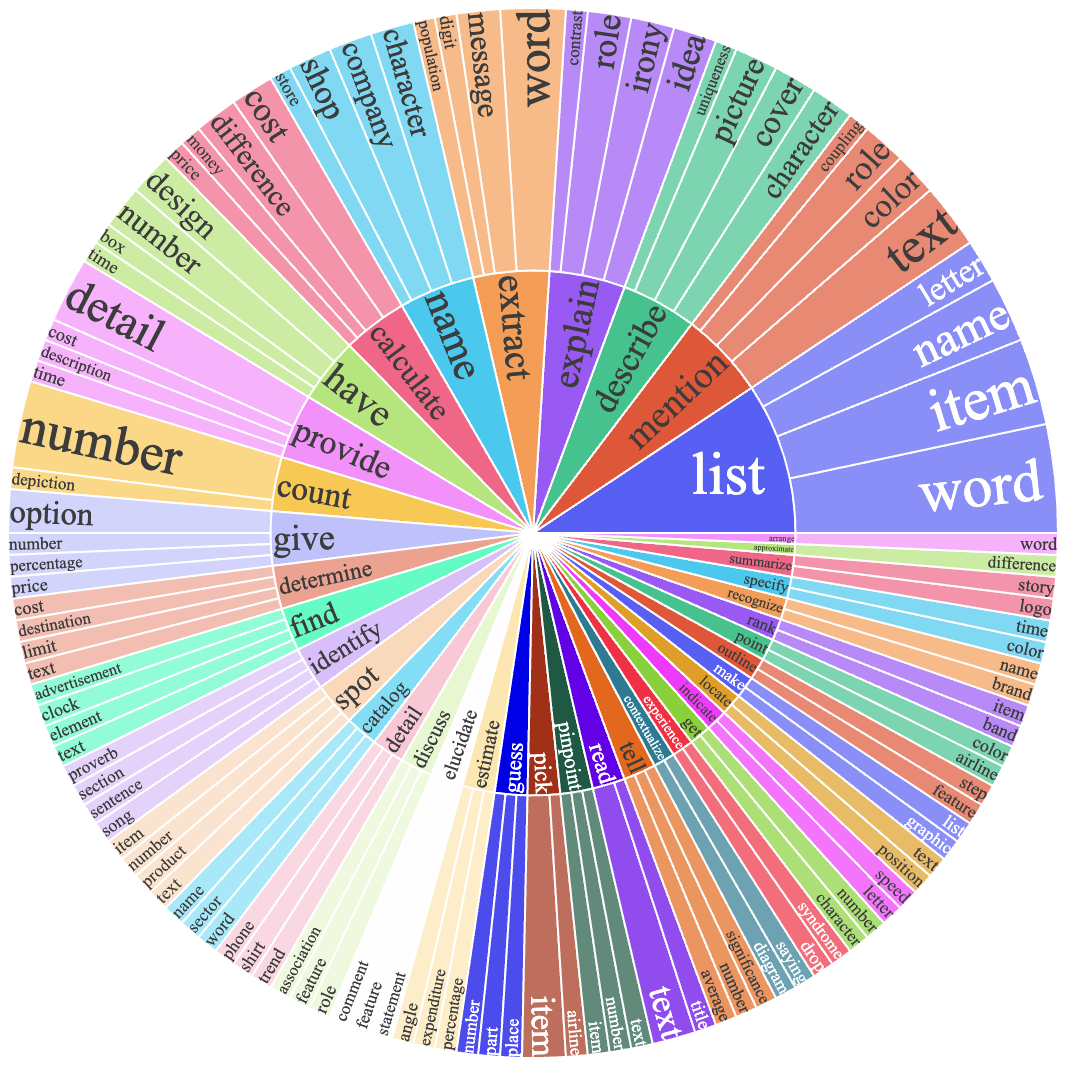

Data Statistics of ConTextual. Left: Key stats such as the number of samples, images, instructions, and responses, both overall and by category, and the lengths of instructions and responses. Right: Top 40 most frequently occurring verbs (inner circle) and their top 4 direct nouns (outer circle) in the instructions.

For more details, you can find our project page here and our paper here.

🚨🚨 The leaderboard is continuously being updated. To submit your results to the leaderboard, please send your model's predictions for the image URLs in data/contextual_test.csv to rwadhawan7@g.ucla.edu and hbansal@g.ucla.edu.
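As a minimal sketch of preparing such a submission, the Python snippet below runs a model over every row of data/contextual_test.csv and saves one prediction per image URL and instruction. It assumes the CSV columns are named `image_url` and `instruction` (matching the attribute names in the dataset description). The `predict` callable, the `write_predictions` helper, and the output columns are hypothetical placeholders, not a format the maintainers have specified, so confirm the expected format with them before sending results.

```python
import csv
from typing import Callable


def write_predictions(
    test_csv: str,
    out_csv: str,
    predict: Callable[[str, str], str],
) -> int:
    """Run a model over every test row and save one prediction per row.

    `predict(image_url, instruction)` is a hypothetical stand-in for your model.
    The output columns (image_url, instruction, prediction) are an assumed
    layout, not a format prescribed by the leaderboard maintainers.
    Returns the number of rows written.
    """
    # Read the benchmark rows; assumes headers named image_url and instruction.
    with open(test_csv, newline="", encoding="utf-8") as f:
        rows = list(csv.DictReader(f))

    # Write one output row per input row, keeping the identifying fields next to the prediction.
    with open(out_csv, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["image_url", "instruction", "prediction"])
        writer.writeheader()
        for row in rows:
            writer.writerow(
                {
                    "image_url": row["image_url"],
                    "instruction": row["instruction"],
                    "prediction": predict(row["image_url"], row["instruction"]),
                }
            )
    return len(rows)
```

Keeping both the image URL and the instruction in each output row means predictions stay matched to their samples even if one image carries more than one instruction.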

Test leaderboard:

| # | Model | Method | Source | Date | ALL | Time | Shop | Nav | Abs | App | Web | Info | Misc NS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| - | Human Performance* | - | Link | 2024-01-24 | 69.6 | 64.0 | 64.0 | 73.5 | 75.5 | 64.0 | 58.0 | 72.0 | 78.0 |
| 1 | GPT-4o 🥇 | LMM 🖼️ | Link | 2024-05-18 | 62.8 | 32.0 | 70.0 | 60.0 | 98.0 | 72.0 | 62.0 | 48.0 | 64.7 |
| 2 | GPT-4o-mini-2024-07-18 🥈 | LMM 🖼️ | Link | 2024-07-18 | 61.7 | 22.0 | 62.0 | 62.0 | 98.0 | 72.0 | 64.0 | 42.0 | 67.3 |
| 3 | Claude-3.5-Sonnet-2024-06-20 🥉 | LMM 🖼️ | Link | 2024-07-18 | 57.5 | 22.0 | 52.0 | 66.0 | 96.0 | 68.0 | 64.0 | 44.0 | 56.7 |
| 4 | Gemini-1.5-Flash-Preview-0514 | LMM 🖼️ | Link | 2024-05-18 | 56.0 | 30.0 | 51.0 | 52.1 | 84.0 | 63.0 | 63.2 | 42.8 | 61.7 |
| 5 | Gemini-1.5-Pro-Preview-0514 | LMM 🖼️ | Link | 2024-05-18 | 52.4 | 24.0 | 46.9 | 39.6 | 84.0 | 45.8 | 59.2 | 43.8 | 64.0 |
| 6 | GPT-4V(ision) | LMM 🖼️ | Link | 2024-01-24 | 47.4 | 18.0 | 54.0 | 48.0 | 100.0 | 48.0 | 42.0 | 28.0 | 48.0 |
| 7 | Gemini-Pro-Vision | LMM 🖼️ | Link | 2024-01-24 | 40.2 | 16.0 | 32.7 | 28.6 | 65.3 | 44.9 | 43.8 | 20.0 | 52.8 |
| 8 | Claude-3-Opus-2024-02-29 | LMM 🖼️ | Link | 2024-03-05 | 38.1 | 18.0 | 32.0 | 34.0 | 68.0 | 44.0 | 38.0 | 18.0 | 44.7 |
| 9 | LLaVA-Next-34B | LMM 🖼️ | Link | 2024-03-05 | 36.8 | 10.0 | 30.6 | 36.0 | 66.0 | 36.0 | 28.0 | 12.0 | 51.3 |
| 10 | LLaVA-Next-13B | LMM 🖼️ | Link | 2024-03-05 | 30.3 | 0.0 | 28.6 | 32.0 | 60.0 | 18.0 | 32.0 | 10.0 | 40.4 |
| 11 | ShareGPT-4V-7B | LMM 🖼️ | Link | 2024-01-24 | 22.6 | 0.0 | 16.0 | 20.0 | 28.6 | 20.0 | 20.0 | 14.0 | 37.7 |
| 12 | GPT-4 w/ Layout-aware OCR + Caption | LLM 👓 | Link | 2024-01-24 | 22.2 | 6.0 | 16.0 | 24.0 | 57.1 | 14.0 | 18.0 | 8.0 | 27.3 |
| 13 | Qwen-VL | LMM 🖼️ | Link | 2024-01-24 | 21.8 | 4.0 | 20.0 | 24.0 | 53.1 | 6.0 | 18.0 | 14.0 | 27.3 |
| 14 | LLaVA-1.5-13B | LMM 🖼️ | Link | 2024-01-24 | 20.8 | 4.0 | 10.0 | 18.0 | 44.9 | 16.0 | 26.0 | 4.0 | 29.7 |
| 15 | mPLUG-Owl-v2-7B | LMM 🖼️ | Link | 2024-01-24 | 18.6 | 4.0 | 8.0 | 24.0 | 32.7 | 20.0 | 10.0 | 12.0 | 26.0 |
| 16 | GPT-4 w/ Layout-aware OCR | LLM 👓 | Link | 2024-01-24 | 18.2 | 8.0 | 20.0 | 18.0 | 34.7 | 10.0 | 16.0 | 16.0 | 20.7 |
| 17 | GPT-4 w/ OCR | LLM 👓 | Link | 2024-01-24 | 15.9 | 4.0 | 10.0 | 14.0 | 30.6 | 8.0 | 16.0 | 28.6 | 16.9 |
| 18 | LLaVAR-13B | LMM 🖼️ | Link | 2024-01-24 | 14.9 | 10.0 | 16.0 | 6.0 | 44.9 | 8.0 | 10.0 | 6.0 | 16.7 |
| 19 | BLIVA | LMM 🖼️ | Link | 2024-01-24 | 10.3 | 2.0 | 4.0 | 14.0 | 24.5 | 4.0 | 8.0 | 4.0 | 14.7 |
| 20 | InstructBLIP-Vicuna-7B | LMM 🖼️ | Link | 2024-01-24 | 9.7 | 2.0 | 4.0 | 16.0 | 20.0 | 6.0 | 12.0 | 2.1 | 12.0 |
| 21 | Idefics-9B | LMM 🖼️ | Link | 2024-01-24 | 7.7 | 4.0 | 2.0 | 12.0 | 12.0 | 0.0 | 6.0 | 2.0 | 13.3 |

Val leaderboard:

| # | Model | Method | Source | Date | ALL | Time | Shop | Nav | Abs | App | Web | Info | Misc NS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| - | Human Performance* | - | Link | 2024-01-24 | 72.0 | 90.0 | 90.0 | 70.0 | 70.0 | 60.0 | 50.0 | 80.0 | 70.0 |
| 1 | GPT-4V(ision) 🥇 | LMM 🖼️ | Link | 2024-01-24 | 53.0 | 40.0 | 60.0 | 50.0 | 100.0 | 50.0 | 30.0 | 30.0 | 56.7 |
| 2 | Gemini-Pro-Vision 🥈 | LMM 🖼️ | Link | 2024-01-24 | 37.8 | 20.0 | 30.0 | 10.0 | 80.0 | 44.4 | 30.0 | 20.0 | 46.7 |
| 3 | GPT-4 w/ Layout-aware OCR + Caption 🥉 | LLM 👓 | Link | 2024-01-24 | 23.0 | 10.0 | 10.0 | 40.0 | 60.0 | 0.0 | 10.0 | 20.0 | 26.7 |
| 4 | ShareGPT-4V-7B | LMM 🖼️ | Link | 2024-01-24 | 17.0 | 0.0 | 30.0 | 10.0 | 30.0 | 10.0 | 10.0 | 0.0 | 26.7 |
| 5 | LLaVA-1.5-13B | LMM 🖼️ | Link | 2024-01-24 | 16.0 | 0.0 | 10.0 | 10.0 | 50.0 | 10.0 | 20.0 | 10.0 | 16.7 |

Some notations in the table:

- Human Performance*: Average human performance from AMT annotators.
- GPT-4V: OpenAI's LMM GPT-4V(ision)
- Gemini-Pro-Vision: Google's LMM Gemini-Pro-Vision
- GPT-4: GPT-4 Turbo
- Method types:
  - LMM 🖼️: Large Multimodal Model
  - LLM 👓: Augmented Large Language Model
- Visual Scenarios:
  - Time: Time
  - Shop: Shopping
  - Nav: Navigation
  - Abs: Abstract
  - App: Application Usage
  - Web: Web Usage
  - Info: Infographic
  - Misc NS: Miscellaneous Natural Scenes
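The scenario columns and the ALL column report how often a model's responses were judged acceptable. As a minimal sketch, assuming each response receives a binary accept/reject judgment (for example from the GPT-4 evaluation step), per-scenario and overall rates could be aggregated as below. This is an illustration, not the repository's analysis/analyze_performance.py; in particular, computing ALL over all samples rather than averaging the scenario columns is an assumption of this sketch, and `acceptance_rates` is a hypothetical name.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def acceptance_rates(judgments: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return per-scenario and overall acceptance rates in percent.

    Each judgment is a (category, accepted) pair. The overall "ALL" rate is
    computed over all samples (an assumption here), so scenarios with more
    samples carry more weight than in a plain average of the columns.
    """
    totals: Dict[str, int] = defaultdict(int)
    accepted: Dict[str, int] = defaultdict(int)
    for category, ok in judgments:
        totals[category] += 1
        accepted[category] += int(ok)

    # Per-scenario rate: accepted / total within that scenario.
    rates = {c: 100.0 * accepted[c] / totals[c] for c in totals}
    # Overall rate: accepted / total across every sample; 0.0 for empty input.
    n = sum(totals.values())
    rates["ALL"] = 100.0 * sum(accepted.values()) / n if n else 0.0
    return rates
```

For example, with one of two time samples and all four shopping samples accepted, this gives 50.0 for time, 100.0 for shopping and about 83.3 for ALL, whereas averaging the two scenario rates would give 75.0.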

All the data samples are included in the Test set, and we provide 100 of them as the Val subset so you can quickly prototype your model on ConTextual.

- Test: 506 samples used to test model performance on ConTextual; consists only of image and instruction pairs. Note that 100 of the 506 samples are also provided as the Val subset.
- Val: 100 samples used for model development and validation; consists of image, instruction, and response triplets.

You can access the dataset on 🤗 HuggingFace:

- Test: Test link
- Val: Val subset link

For ease of access and response analysis, the dataset is also available in the repository:

- Test: data/contextual_test.csv
- Val: data/contextual_val.csv

Each sample in the dataset contains the following attributes:

```
{
  "image_url": [string] url to the hosted image,
  "instruction": [string] instruction text,
  "response": [string] response text (only provided for samples in the val subset),
  "category": visual scenario this example belongs to, e.g. 'time' or 'shopping' (one of the 8 possible scenarios in ConTextual)
}
```
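A minimal sketch for working with the CSV copies of the data in Python, using only the standard library. It assumes the CSV headers match the attribute names above and identifies a sample by its (image_url, instruction) pair, in case one image carries more than one instruction. `load_rows`, `category_counts` and `val_not_in_test` are illustrative helper names, not functions shipped in this repository.

```python
import csv
from collections import Counter
from typing import Dict, List, Tuple

Row = Dict[str, str]


def load_rows(path: str) -> List[Row]:
    """Read a ConTextual CSV file into a list of dicts keyed by column name."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def category_counts(rows: List[Row]) -> Counter:
    """Count samples per visual scenario (assumes a 'category' column)."""
    return Counter(row["category"] for row in rows)


def val_not_in_test(val_rows: List[Row], test_rows: List[Row]) -> List[Tuple[str, str]]:
    """Return (image_url, instruction) keys present in Val but missing from Test.

    Keying on image URL and instruction together is an assumption of this
    sketch, chosen so that two instructions on the same image stay distinct.
    """
    test_keys = {(r["image_url"], r["instruction"]) for r in test_rows}
    return [
        (r["image_url"], r["instruction"])
        for r in val_rows
        if (r["image_url"], r["instruction"]) not in test_keys
    ]
```

For example, `category_counts(load_rows("data/contextual_test.csv"))` gives the number of samples per visual scenario, and `val_not_in_test` applied to the Val and Test files should return an empty list if the Val subset is drawn from Test as described above.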

For GPT-4V:

- (Step 1) Run the following command to generate (or update) `master.json` with GPT-4V results, where `<location to data.csv>` is `data/contextual_val.csv` or `data/contextual_test.csv`:

```
OPENAI_API_KEY=<YOUR OPENAI API KEY> python models/gpt4v/eval_gpt4v.py --data-file <location to data.csv>
```

For Gemini-Pro-Vision:

- (Step 1) First authorize with `gcloud` using `gcloud auth application-default login`.
- (Step 2) Run the following command to generate (or update) `master.json` with Gemini-Pro-Vision results, where `<location to data.csv>` is `data/contextual_val.csv` or `data/contextual_test.csv`:

```
python models/gemini-pro-vision/eval_gemini.py --image-file <location to data.csv>
```

For the open-source models, we use the default settings from the respective project repos.
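Both inference scripts generate or update a shared `master.json`. Its structure is not documented here, so the sketch below assumes a hypothetical layout of `{model_name: {sample_id: response}}` and shows one way to merge a new model's responses without discarding results already stored for other models. `update_master` is an illustrative name, not a function from this repository.

```python
import json
import os
from typing import Dict


def update_master(path: str, model_name: str, responses: Dict[str, str]) -> dict:
    """Merge one model's responses into a JSON results file, creating it if needed.

    Hypothetical layout: {model_name: {sample_id: response}}. Entries stored for
    other models are kept; entries for `model_name` with the same sample_id are
    overwritten by the new responses.
    """
    master: dict = {}
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            master = json.load(f)

    master.setdefault(model_name, {}).update(responses)

    # Write to a temporary file first, then swap it in with a single rename so an
    # interrupted run does not leave a truncated results file behind.
    tmp_path = path + ".tmp"
    with open(tmp_path, "w", encoding="utf-8") as f:
        json.dump(master, f, indent=2, ensure_ascii=False)
    os.replace(tmp_path, path)
    return master
```

Merging per model, rather than rewriting the whole file, lets GPT-4V, Gemini-Pro-Vision and open-source results accumulate in one file across separate runs.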

To evaluate responses with GPT-4:

- (Step 1) Run the following command to generate `gpt4_judgments.json`, containing all the GPT-4 judgments. The file passed to `--data-file` must contain the responses (e.g., `data/contextual_val.csv`):

```
OPENAI_API_KEY=<YOUR OPENAI KEY> python eval/response_eval_gpt4.py --data-file data/contextual_val.csv --master master.json --model-name <model name in master.json>
```

To analyze the judgments:

- (Step 1) Run the following command to print the average acceptance rating along with the visual-context-specific ratings and generate a `gpt_4_model_analysis.json` file. Again, `--data-file` must be a file with responses:

```
python analysis/analyze_performance.py --data-file data/contextual_val.csv --judgment-file gpt4_judgments.json --model-name <model name in master.json>
```

Here are the key contributors to this project:

Rohan Wadhawan¹\*, Hritik Bansal¹\*, Kai-Wei Chang¹, Nanyun Peng¹

¹University of California, Los Angeles; \*Equal contribution

If you find ConTextual useful for your research and applications, please cite it using this BibTeX:

```bibtex
@misc{wadhawan2024contextual,
  title={ConTextual: Evaluating Context-Sensitive Text-Rich Visual Reasoning in Large Multimodal Models},
  author={Rohan Wadhawan and Hritik Bansal and Kai-Wei Chang and Nanyun Peng},
  year={2024},
  eprint={2401.13311},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```