Read this in other languages: 日本語.

Use Machine Learning to Predict U.S. Opioid Prescribers with Watson Studio and Scikit Learn

Data Science Experience is now Watson Studio. Although some images in this code pattern may show the service as Data Science Experience, the steps and processes will still work.

This Code Pattern guides you through using scikit-learn and Python in Watson Studio to predict opioid prescribers based on a 2014 Kaggle dataset.

Opioid prescriptions and overdoses are an increasingly overwhelming problem for the United States, and have even led to a declared state of emergency. Though we, as data scientists, may not be able to single-handedly fix this problem, we can dive into the data to figure out what exactly is going on and what may happen in the future given current circumstances.

This Code Pattern aims to do just that. It dives into a Kaggle dataset that covers opioid overdose deaths by state, along with individual physicians, their credentials, their specialties, whether or not they prescribed opioids in 2014, and the specific names of the prescriptions they wrote. Follow along to see how to explore the data in a Watson Studio notebook and visualize a few initial findings in a variety of ways, including geographically, using Pixie Dust. Pixie Dust is a great library to use when you need to explore your data visually very quickly. It needs only one line of code!

Once that initial exploration is complete, this Code Pattern uses the machine learning library scikit-learn to train several models and figure out which ones predict opioid prescribers most accurately. Scikit-learn, if you're unfamiliar, is commonly used by data scientists because of its ease of use. Specifically, the library gives you easy access to a number of machine learning classifiers that you can implement in relatively few lines of code. Its results are also easy to visualize and compare, which is why the library is often used in machine learning classes to teach what different classifiers do, much like the comparative output this Code Pattern highlights. Ready to dive in?

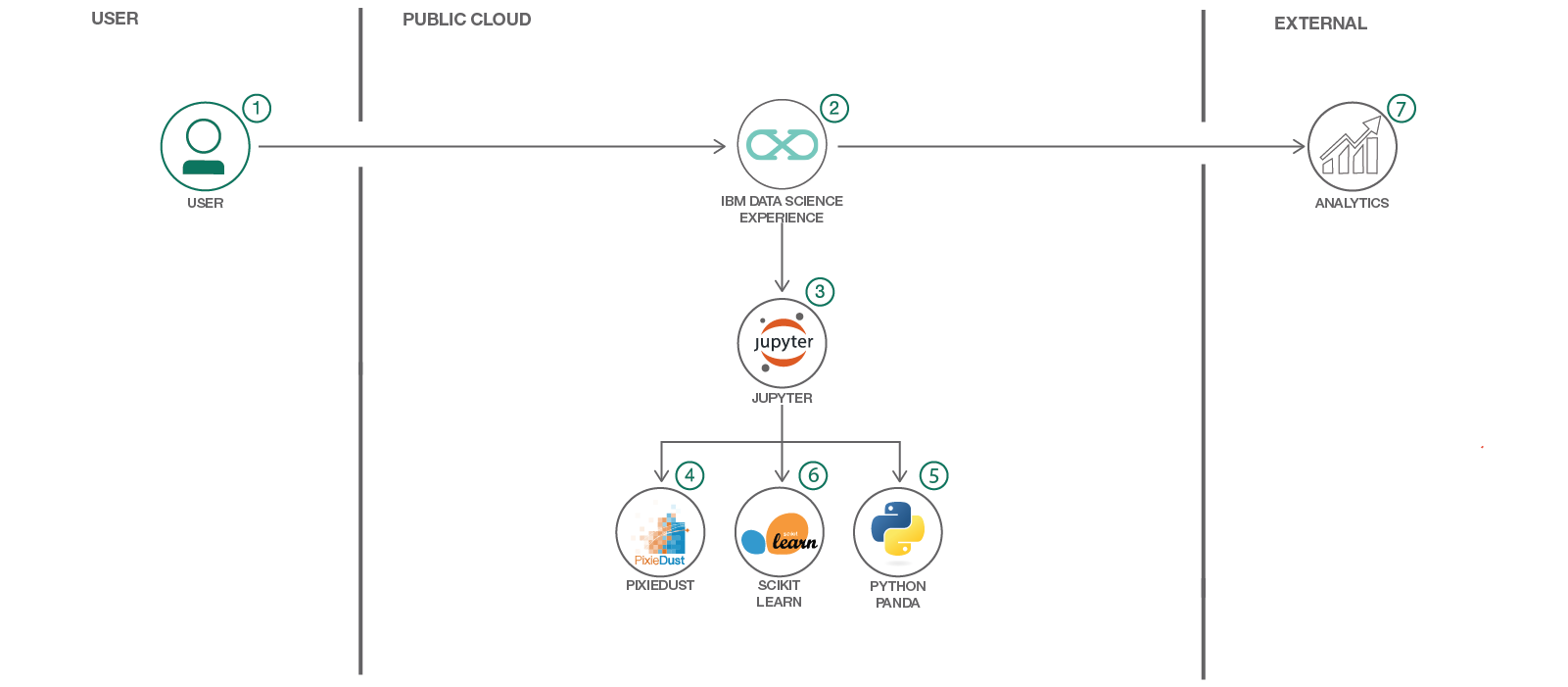

Flow

- Log into IBM's Watson Studio service.

- Upload the data as a data asset into Watson Studio.

- Start a notebook in Watson Studio and input the data asset previously created.

- Explore the data with pandas.

- Create data visualizations with Pixie Dust.

- Train machine learning models with scikit-learn.

- Evaluate their prediction performance.

Included components

- IBM Watson Studio: Analyze data using RStudio, Jupyter, and Python in a configured, collaborative environment that includes IBM value-adds, such as managed Spark.

- Jupyter Notebook: An open source web application that allows you to create and share documents that contain live code, equations, visualizations, and explanatory text.

- PixieDust: Provides a Python helper library for IPython Notebook.

Featured technologies

- Data Science: Systems and scientific methods to analyze structured and unstructured data in order to extract knowledge and insights.

- Python: Python is a programming language that lets you work more quickly and integrate your systems more effectively.

- pandas: A Python library providing high-performance, easy-to-use data structures.

Steps

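This Code Pattern consists of two activities: running a Jupyter notebook in IBM Watson Studio (steps 1 through 6 below), and then analyzing and predicting the data.

Run using a Jupyter notebook in IBM Watson Studio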

- Sign up for Watson Studio

- Create a new Watson Studio project

- Create the notebook

- Upload data

- Run the notebook

- Save and Share

1. Sign up for Watson Studio

Log in or sign up for IBM's Watson Studio.

Note: if you would prefer to skip the remaining Watson Studio set-up steps and just follow along by viewing the completed Notebook, simply:

- View the completed notebook and its outputs, as is.

- While viewing the notebook, you can optionally download it to store for future use.

- When complete, continue this code pattern by jumping ahead to the Analyze and Predict the data section.

2. Create a new Watson Studio project

- Select the New Project option from the Watson Studio landing page and choose the Data Science option.

- To create a project in Watson Studio, give the project a name and either create a new Cloud Object Storage service or select an existing one from your IBM Cloud account.

- Upon successful project creation, you are taken to a dashboard view of your project. Take note of the Assets and Settings tabs; we'll be using them to associate our project with any external assets (datasets and notebooks) and any IBM Cloud services.

3. Create the Notebook

- From the project dashboard view, click the Assets tab, then click the + New notebook button.

- Give your notebook a name and select your desired runtime. In this case we'll be using the associated Spark runtime.

- Now select the From URL tab to specify the URL to the notebook in this repository.

- Enter this URL:

https://github.com/IBM/predict-opioid-prescribers/blob/master/notebooks/opioid-prescription-prediction.ipynb

- Click the Create button.

4. Upload data

- Return to the project dashboard view and select the Assets tab.

- This project has 3 datasets. Upload all three as data assets in your project by loading each dataset into the pop-up section on the right-hand side. Please see a screenshot of what it should look like below.

- Once complete, open your notebook in edit mode (click the pencil icon next to your notebook on the dashboard).

- Click the 1001 data icon in the top right. The data files should show up.

- Click each file and select Insert Pandas Data Frame. Once you do that, generated loading code will show up in your first cell.

- Make sure opioids.csv is saved as df_data_1, overdoses.csv is saved as df_data_2 and prescriber_info.csv is saved as df_data_3, so that the names are consistent with the original notebook. You may have to edit this, because when your data is loaded into the notebook the data frames may be numbered as a continuation of the ones already defined in the original notebook. This means your data may show up with opioids.csv as df_data_4, overdoses.csv as df_data_5 and so on. Either adjust the data frame names to match the original notebook (remove the cells where the data was originally loaded and rename your data frames, or paste your loading information into the original code), or edit the code that follows accordingly. Do this to make sure the code will run!
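The renaming described above can be sketched as follows. This is a minimal illustration, not the code Watson Studio generates: the in-memory CSV strings, their placeholder columns, and the df_data_4 through df_data_6 names are assumptions standing in for whatever the inserted cell actually produced.

```python
import io

import pandas as pd

# Stand-ins for the three uploaded files. In Watson Studio the generated cell
# reads them from Cloud Object Storage; the columns here are placeholders.
opioids_csv = io.StringIO("col_a,col_b\n1,2\n")
overdoses_csv = io.StringIO("col_a,col_b\n3,4\n")
prescriber_info_csv = io.StringIO("col_a,col_b\n5,6\n")

# Assume the generated code continued the numbering and produced these names.
df_data_4 = pd.read_csv(opioids_csv)
df_data_5 = pd.read_csv(overdoses_csv)
df_data_6 = pd.read_csv(prescriber_info_csv)

# Re-bind the frames to the names the rest of the notebook expects.
df_data_1 = df_data_4  # opioids.csv
df_data_2 = df_data_5  # overdoses.csv
df_data_3 = df_data_6  # prescriber_info.csv
```

Re-binding the names in one place is usually less error-prone than editing every later cell that refers to the data frames.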

5. Run the notebook

When a notebook is executed, what is actually happening is that each code cell in the notebook is executed, in order, from top to bottom.

Each code cell is selectable and is preceded by a tag in the left margin. The tag format is In [x]:. Depending on the state of the notebook, the x can be:

- A blank, which indicates that the cell has never been executed.

- A number, which represents the relative order in which this code step was executed.

- A *, which indicates that the cell is currently executing.

There are several ways to execute the code cells in your notebook:

- One cell at a time.

  - Select the cell, and then press the Play button in the toolbar.

- Batch mode, in sequential order.

  - From the Cell menu bar, there are several options available. For example, you can Run All cells in your notebook, or you can Run All Below, which will start executing from the first cell under the currently selected cell and then continue executing all cells that follow.

- At a scheduled time.

  - Press the Schedule button located in the top right section of your notebook panel. Here you can schedule your notebook to be executed once at some future time, or repeatedly at your specified interval.

6. Save and Share

How to save your work:

Under the File menu, there are several ways to save your notebook:

- Save will simply save the current state of your notebook, without any version information.

- Save Version will save the current state of your notebook with a version tag that contains a date and time stamp. Up to 10 versions of your notebook can be saved, each one retrievable by selecting the Revert To Version menu item.

How to share your work:

You can share your notebook by selecting the Share button located in the top right section of your notebook panel. The end result of this action will be a URL link that will display a “read-only” version of your notebook. You have several options to specify exactly what you want shared from your notebook:

- Only text and output: will remove all code cells from the notebook view.

- All content excluding sensitive code cells: will remove any code cells that contain a sensitive tag. For example, # @hidden_cell is used to protect your dashDB credentials from being shared.

- All content, including code: displays the notebook as is.

- A variety of download as options are also available in the menu.

Analyze and Predict the data

- Explore the different datasets using Python, pandas and Pixie Dust. Once again, feel free to follow along in Watson Studio.

To get familiar with your data, explore it with visualizations and by looking at subsets of the data. For example, although California has the highest number of overdoses, once we correct for population we see that West Virginia actually has the highest rate of overdoses per capita.
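The effect of correcting for population can be sketched with pandas. The column names and the numbers below are assumptions chosen only to show the calculation; they are not taken from the dataset.

```python
import pandas as pd

# Toy values for illustration only; these are not real statistics.
overdoses = pd.DataFrame(
    {
        "State": ["Large State", "Small State"],
        "Population": [1_000_000, 100_000],
        "Deaths": [200, 50],
    }
)

# Normalize raw counts to a rate per 100,000 residents.
overdoses["DeathsPer100k"] = (
    overdoses["Deaths"] / overdoses["Population"] * 100_000
)

# The state with the most deaths is not necessarily the one with the highest rate.
most_deaths = overdoses.loc[overdoses["Deaths"].idxmax(), "State"]
highest_rate = overdoses.loc[overdoses["DeathsPer100k"].idxmax(), "State"]

print("Most deaths:", most_deaths)
print("Highest rate per 100k:", highest_rate)
```

In this toy frame the larger state has more deaths in absolute terms, while the smaller state has the higher rate, which is the same pattern the notebook reports for California and West Virginia.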

- Clean the data using Python.

Every dataset has its imperfections. Let's clean ours up by making the state values consistent and converting the relevant columns so that we can use them as integers.

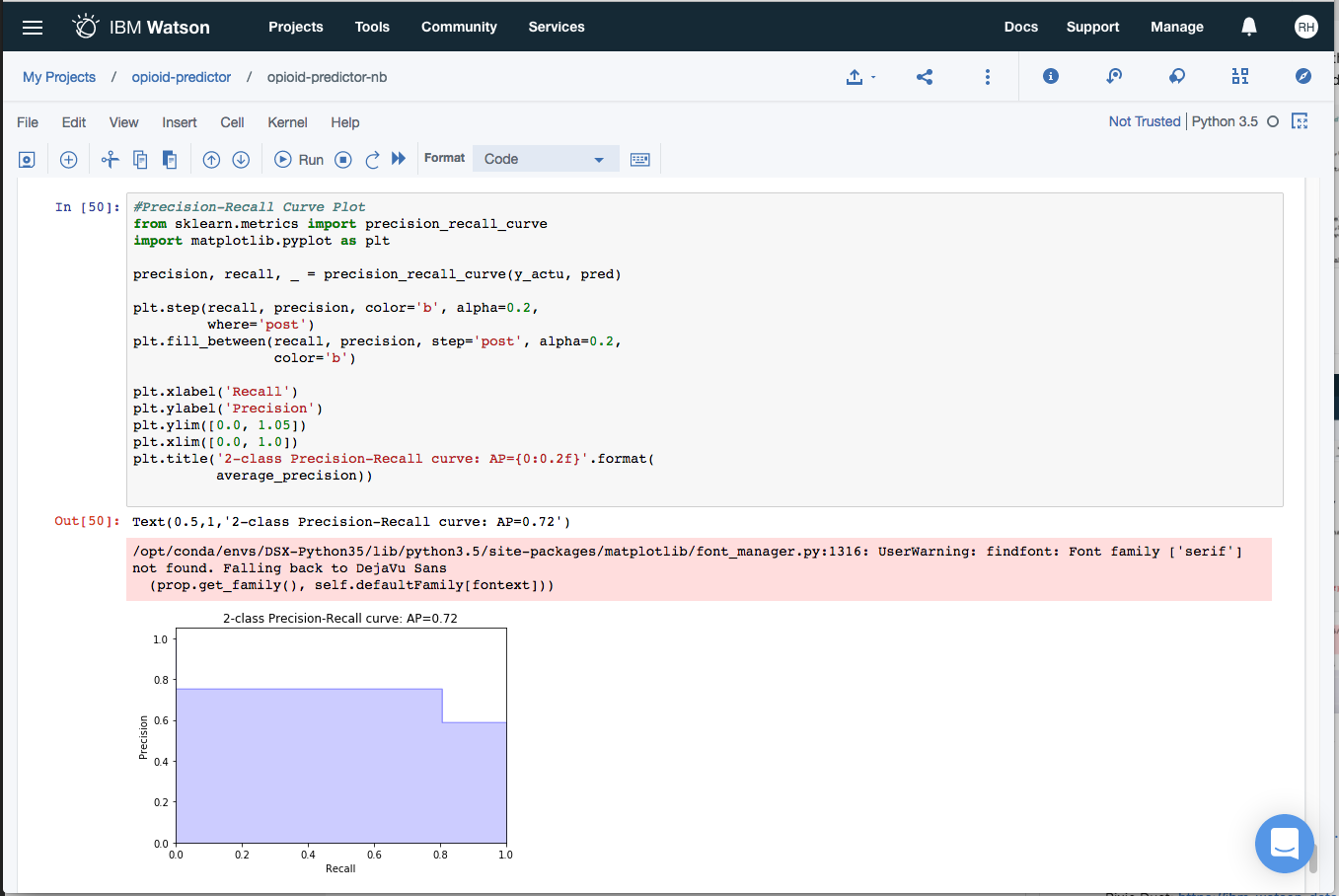
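As a rough sketch of this kind of cleanup, the snippet below normalizes state codes and converts a comma-formatted text column to integers. The column names, the made-up state codes, and the assumption that the numbers arrive as strings with thousands separators are all illustrative; the notebook's own cleaning steps may differ.

```python
import pandas as pd

# Placeholder rows with inconsistent state codes and comma-formatted numbers.
raw = pd.DataFrame(
    {
        "State": [" aa", "BB ", "Cc"],
        "Population": ["1,234,567", "89,000", "450"],
    }
)

clean = raw.copy()

# Make the state codes consistent: trim whitespace and upper-case them.
clean["State"] = clean["State"].str.strip().str.upper()

# Drop the thousands separators, then convert the text to integers.
clean["Population"] = (
    clean["Population"].str.replace(",", "", regex=False).astype(int)
)

print(clean)
```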

- Run several models to predict opioid prescribers using scikit-learn.

You can check out the output in the notebook or in the image below. In this step we run several machine learning models in order to evaluate which is the most effective at predicting opioid prescribers. Though it is beyond the scope of this pattern, by predicting these opioid prescribers you are laying the groundwork to predict the likelihood that a certain type of doctor prescribes opioids. Additionally, if we had more years of data (beyond 2014), we could also predict future rates of overdoses. For now, we'll just take a look at the models.
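Training and comparing several classifiers can be sketched as below. This is a minimal example under stated assumptions: it uses synthetic data from make_classification rather than the prescriber dataset, the choice of models is illustrative, and the soft-voting ensemble is only one possible way to build an ensemble, not necessarily the one used in the notebook.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    GradientBoostingClassifier,
    RandomForestClassifier,
    VotingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic binary classification data standing in for the prescriber features.
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Base classifiers, plus a soft-voting ensemble built from the same list.
base = [
    ("logistic_regression", LogisticRegression(max_iter=1000)),
    ("random_forest", RandomForestClassifier(n_estimators=100, random_state=0)),
    ("gradient_boosting", GradientBoostingClassifier(random_state=0)),
]
models = dict(base)
models["ensemble"] = VotingClassifier(estimators=base, voting="soft")

# Fit each model on the training split and record accuracy on the held-out split.
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)

for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.3f}")
```

Because every estimator exposes the same fit and score methods, adding another classifier to the comparison is a one-line change to the base list.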

- Evaluate the models.

For the code, see the notebook found locally under notebooks, or view the notebook here!
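Accuracy alone can hide how a model behaves on each class, so evaluation often looks at a few metrics together. The sketch below is an assumption about what such an evaluation might look like, again on synthetic data: it reports held-out accuracy, a confusion matrix, a per-class report, and 5-fold cross-validated accuracy for a single random forest.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import cross_val_score, train_test_split

# Synthetic data standing in for the prescriber features and labels.
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

# Held-out metrics: overall accuracy, error breakdown, per-class precision/recall.
accuracy = accuracy_score(y_test, y_pred)
matrix = confusion_matrix(y_test, y_pred)
report = classification_report(y_test, y_pred)

# Cross-validation gives a less split-dependent estimate of accuracy.
cv_scores = cross_val_score(
    RandomForestClassifier(n_estimators=100, random_state=0), X, y, cv=5
)

print(f"Held-out accuracy: {accuracy:.3f}")
print(matrix)
print(report)
print(f"5-fold CV accuracy: {cv_scores.mean():.3f} (std {cv_scores.std():.3f})")
```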

Sample output

After running various classifiers, we find that Random Forest, Gradient Boosting and our Ensemble models had the best performance on predicting opioid prescribers.

Awesome job following along! Now go try and take this further or apply it to a different use case!

Links

- Watson Studio: https://datascience.ibm.com/docs/content/analyze-data/creating-notebooks.html

- Pandas: https://pandas.pydata.org/

- Pixie Dust: https://ibm-watson-data-lab.github.io/pixiedust/displayapi.html#introduction

- Data: https://www.kaggle.com/apryor6/us-opiate-prescriptions/data

- Scikit Learn: http://scikit-learn.org/stable/

Learn more

- Data Analytics Code Patterns: Enjoyed this Code Pattern? Check out our other Data Analytics Code Patterns

- AI and Data Code Pattern Playlist: Bookmark our playlist with all of our Code Pattern videos

- Watson Studio: Master the art of data science with IBM's Watson Studio

- Spark on IBM Cloud: Need a Spark cluster? Create up to 30 Spark executors on IBM Cloud with our Spark service

License

This code pattern is licensed under the Apache Software License, Version 2. Separate third party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses. Contributions are subject to the Developer Certificate of Origin, Version 1.1 (DCO) and the Apache Software License, Version 2.