NLU2019

NLU2019 project: Question NLI (QNLI). Given a question and a context sentence, the task is to determine whether the sentence contains the answer to the question (entailment or not_entailment).
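QNLI is a sentence-pair classification problem. The sketch below roughly illustrates how BERT sentence-pair classifiers commonly pack a question and a context sentence into one input: `[CLS] question [SEP] sentence [SEP]`, with segment id 0 for the question part and 1 for the sentence part. It makes several assumptions. Whitespace splitting stands in for BERT's WordPiece tokenizer. `encode_pair` and `truncate_pair` are hypothetical helpers, not functions from this repository. The code in `model` may handle these details differently.

```python
from typing import List, Tuple


def truncate_pair(tokens_a: List[str], tokens_b: List[str], max_len: int) -> None:
    """Trim the longer sequence one token at a time until the pair fits max_len."""
    while len(tokens_a) + len(tokens_b) > max_len:
        if len(tokens_a) > len(tokens_b):
            tokens_a.pop()
        else:
            tokens_b.pop()


def encode_pair(question: str, sentence: str,
                max_seq_length: int = 128) -> Tuple[List[str], List[int]]:
    """Build the [CLS] question [SEP] sentence [SEP] layout with segment ids.

    Hypothetical helper: whitespace splitting is used instead of WordPiece.
    """
    tokens_a = question.lower().split()
    tokens_b = sentence.lower().split()
    # Reserve room for [CLS] and the two [SEP] tokens.
    truncate_pair(tokens_a, tokens_b, max_seq_length - 3)
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # Segment 0: [CLS] + question + first [SEP]; segment 1: sentence + final [SEP].
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


if __name__ == "__main__":
    tokens, segments = encode_pair("Who wrote Hamlet?",
                                   "Hamlet was written by Shakespeare.")
    print(tokens)
    print(segments)
```

Trimming the longer of the two sequences first keeps as much of both the question and the sentence as possible when a pair exceeds the length limit.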

Usage:

- Download the dataset.

$ python download_glue_data.py --data_dir glue_data --tasks all

This script is borrowed from here. You need a VPN to run it, or you can simply use the provided 'glue_data.zip'.
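After downloading (or unzipping 'glue_data.zip'), the QNLI files can be inspected directly. The sketch below assumes the usual GLUE layout `glue_data/QNLI/{train,dev,test}.tsv`, with tab-separated columns `index`, `question`, `sentence` and, except in `test.tsv`, `label`. `load_qnli` and the `LABELS` mapping are hypothetical, not part of this repository.

```python
import csv
from pathlib import Path
from typing import Dict, List

# Hypothetical label-to-id mapping for GLUE's two QNLI labels.
LABELS = {"entailment": 0, "not_entailment": 1}


def load_qnli(path: Path) -> List[Dict[str, object]]:
    """Read a QNLI .tsv file into a list of dicts; test files have no label column."""
    examples: List[Dict[str, object]] = []
    with path.open(encoding="utf-8", newline="") as f:
        # QUOTE_NONE: raw sentences may contain unbalanced quote characters.
        reader = csv.reader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
        header = next(reader)
        for row in reader:
            record: Dict[str, object] = dict(zip(header, row))
            if "label" in record:
                record["label_id"] = LABELS[str(record["label"])]
            examples.append(record)
    return examples
```

For example, `load_qnli(Path("glue_data/QNLI/dev.tsv"))` would return one dict per development example.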

- Install apex.

apex is a PyTorch extension with tools for easy mixed-precision and distributed training in PyTorch. The official repository is here.

$ git clone https://github.com/NVIDIA/apex

$ cd apex

$ pip install -v --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" .

- Install the necessary library, pytorch-pretrained-bert.

$ pip install pytorch-pretrained-bert

- Clone this repository.

$ git clone https://github.com/weidafeng/NLU2019.git

$ cd NLU2019

- Train. Training saves the model files ('config.json eval_results.txt pytorch_model.bin vocab.txt') to glue_data/QNLI/eval_result.

$ bash train.sh

Here are my results:

acc = 0.9110378912685337

eval_loss = 0.501230152572013

global_step = 16370

loss = 0.0006768958065624673
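The results above are written as `key = value` lines, presumably the contents of the `eval_results.txt` file produced by training. To compare runs programmatically, a small parser could be used. The sketch below assumes that every value is numeric. `read_eval_results` is a hypothetical helper.

```python
from pathlib import Path
from typing import Dict


def read_eval_results(path: Path) -> Dict[str, float]:
    """Parse lines of the form 'key = value' into a dict of floats."""
    results: Dict[str, float] = {}
    for line in path.read_text(encoding="utf-8").splitlines():
        if " = " not in line:
            continue  # skip blank or unexpected lines
        key, value = line.split(" = ", 1)
        results[key.strip()] = float(value)
    return results
```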

- Predict. Load the trained model to make predictions; this generates the submission file QNLI.tsv in glue_data/QNLI/eval_result.

$ bash test.sh- Submission. Create a zip of the prediction TSVs, without any subfolders, e.g. using:

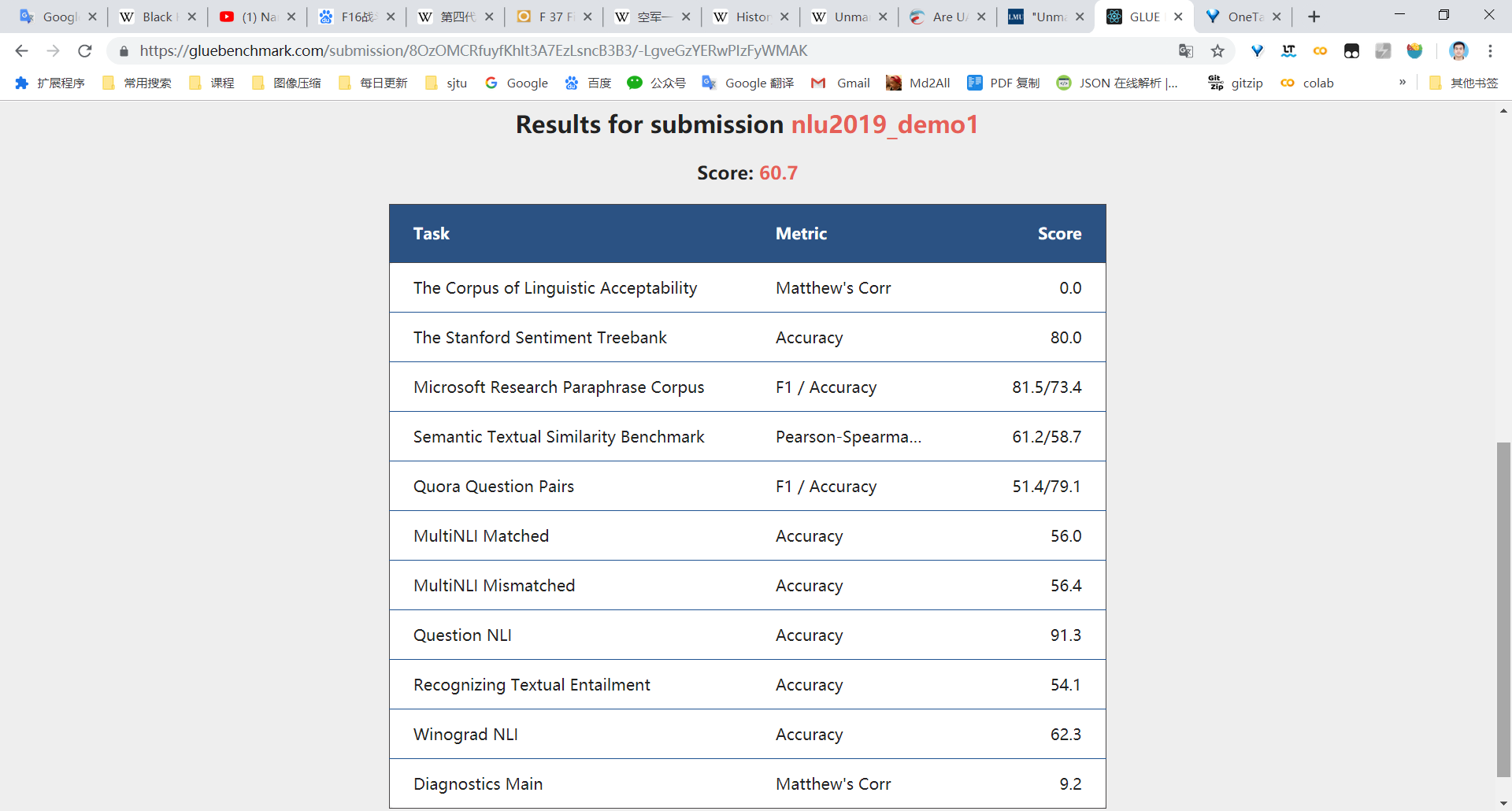

$ zip -r submission.zip *.tsvHere is my glue result:
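The Predict and Submission steps can also be reproduced from Python, for example when predictions come from your own code instead of `test.sh`. The sketch below makes several assumptions. It uses the GLUE submission format: a header row `index<TAB>prediction`, followed by one label string per test example, with the indices taken from the `index` column of `test.tsv`. `write_predictions`, `zip_flat`, and the order of `ID_TO_LABEL` are hypothetical. The label order must match whatever mapping the model was trained with.

```python
import csv
import zipfile
from pathlib import Path
from typing import Sequence

# Hypothetical id-to-label mapping; must match the mapping used in training.
ID_TO_LABEL = ["entailment", "not_entailment"]


def write_predictions(path: Path, indices: Sequence[str],
                      pred_ids: Sequence[int]) -> None:
    """Write an 'index<TAB>prediction' TSV with one row per test example."""
    if len(indices) != len(pred_ids):
        raise ValueError("indices and pred_ids must have the same length")
    with path.open("w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f, delimiter="\t", lineterminator="\n")
        writer.writerow(["index", "prediction"])
        for index, pred in zip(indices, pred_ids):
            writer.writerow([index, ID_TO_LABEL[pred]])


def zip_flat(tsv_dir: Path, zip_path: Path) -> None:
    """Zip every .tsv in tsv_dir at the archive root, i.e. without subfolders."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for tsv in sorted(tsv_dir.glob("*.tsv")):
            # arcname=tsv.name drops the directory part of the path.
            zf.write(tsv, arcname=tsv.name)
```

Setting `arcname` to the bare file name gives the same flat layout as running `zip` from inside the directory that holds the TSVs.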

The trained model is too big to store on GitHub; if you need it, please feel free to contact me.

File path tree and annotations:

├─bert-base-uncased # cache for the pretrained `bert` model (downloaded automatically from an S3 link)
├─glue_data
│   └─QNLI # GLUE QNLI data
│       └─results # trained model ('config.json eval_results.txt pytorch_model.bin vocab.txt') and prediction results (`QNLI.tsv`)
├─model # main code for this project
└─submission # submission file
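If you obtain a trained model separately, a quick check that the output directory holds the files listed in the tree may be useful. The sketch below takes the directory as a parameter, because the usage steps name it `eval_result` while the tree names it `results`. `missing_outputs` is a hypothetical helper.

```python
from pathlib import Path
from typing import List

# File names taken from the tree annotations above.
EXPECTED_FILES = ["config.json", "eval_results.txt", "pytorch_model.bin", "vocab.txt"]


def missing_outputs(output_dir: Path, with_predictions: bool = False) -> List[str]:
    """Return the expected files that are absent from output_dir."""
    expected = EXPECTED_FILES + (["QNLI.tsv"] if with_predictions else [])
    return [name for name in expected if not (output_dir / name).is_file()]
```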