The official source code for LLM4SGG: Large Language Model for Weakly Supervised Scene Graph Generation, accepted at CVPR 2024.

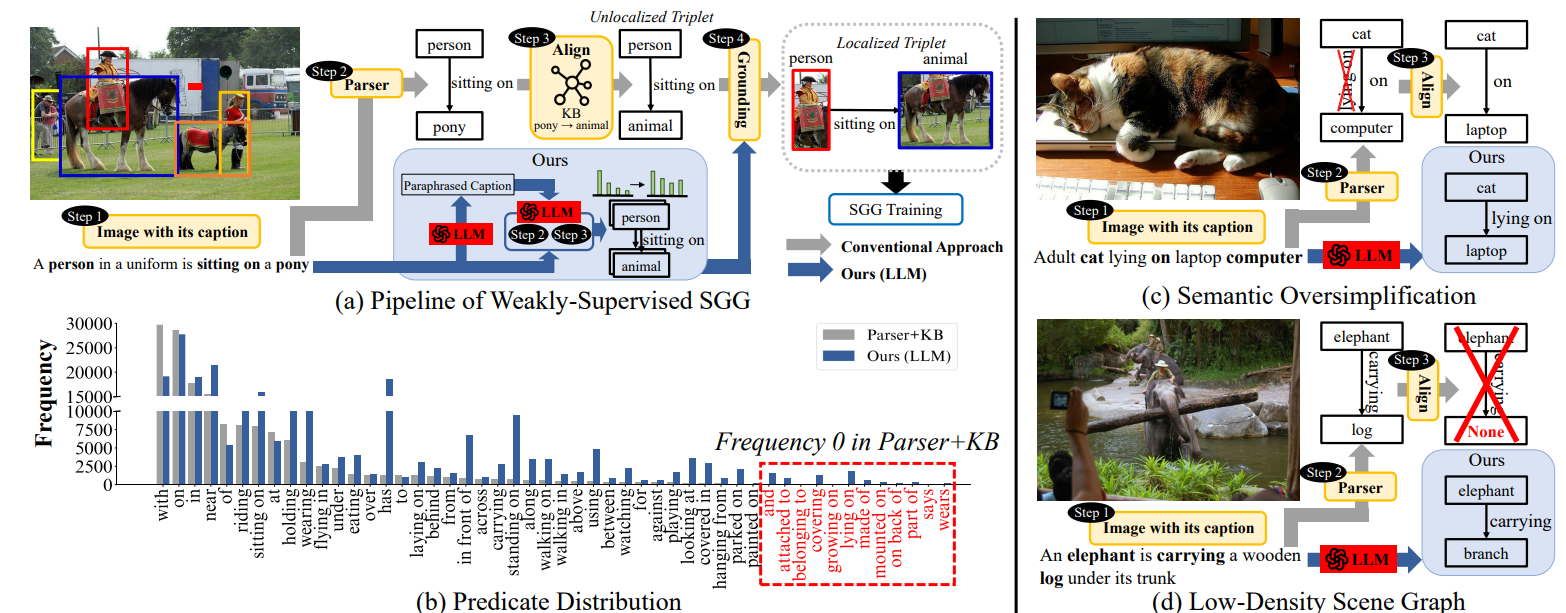

Addressing two issues inherent in the conventional approach (Parser + Knowledge Base (WordNet))

- Semantic Over-simplification (Step 2)

  The standard scene graph parser commonly converts fine-grained predicates into coarse-grained ones, which we refer to as semantic over-simplification. For example, in Figure (c), the informative predicate "lying on" in the image caption is undesirably converted into the less informative predicate "on", because the rule-based scene parser fails to capture "lying on" as a single predicate, and its heuristic rules fall short of accommodating the diverse range of caption structures. As a result, in Figure (b), the predicate distribution is long-tailed. To make matters worse, 12 out of 50 predicates never appear, which means that these 12 predicates can never be predicted.

- Low-density Scene Graph (Step 3)

  The triplet alignment based on a knowledge base (i.e., WordNet) leads to low-density scene graphs, i.e., the number of triplets remaining after Step 3 is small. Specifically, a triplet is discarded if any of its three components (i.e., subject, predicate, object), or their synonyms/hypernyms/hyponyms, fails to align with the entity or predicate classes in the target data. For example, in Figure (d), the triplet <elephant, carrying, log> is discarded because neither log nor its synonym/hypernym exists in the target data, even though elephant and carrying do exist. As a result, a large number of predicates are discarded, resulting in poor generalization and performance degradation. This is attributed to the fact that the static structured knowledge of the KB is insufficient to cover the semantic relationships among a wide range of words.
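The discard rule described above can be illustrated with a minimal sketch. The class sets and the `TOY_KB` lookup below are toy stand-ins invented for illustration (they are not the real Visual Genome classes or WordNet), and the function names are hypothetical; the point is only that one unaligned component drops the whole triplet.

```python
from typing import Optional, Set, Tuple

Triplet = Tuple[str, str, str]

# Toy target classes (illustrative only, not the real VG class lists).
TARGET_ENTITIES: Set[str] = {"elephant", "person", "tree"}
TARGET_PREDICATES: Set[str] = {"carrying", "riding", "on"}

# Hypothetical stand-in for a WordNet lookup: word -> synonyms/hypernyms/hyponyms.
TOY_KB = {
    "man": {"person", "adult"},
    "log": {"wood", "timber"},
}


def align_word(word: str, targets: Set[str]) -> Optional[str]:
    """Return the matching target class, or None if the word and its KB relatives all miss."""
    if word in targets:
        return word
    for related in sorted(TOY_KB.get(word, set())):
        if related in targets:
            return related
    return None


def align_triplet(triplet: Triplet) -> Optional[Triplet]:
    """Keep a triplet only if subject, predicate, and object all align."""
    subj, pred, obj = triplet
    aligned = (
        align_word(subj, TARGET_ENTITIES),
        align_word(pred, TARGET_PREDICATES),
        align_word(obj, TARGET_ENTITIES),
    )
    if None in aligned:
        return None  # one failed component discards the whole triplet
    return aligned


print(align_triplet(("man", "riding", "elephant")))    # ('person', 'riding', 'elephant')
print(align_triplet(("elephant", "carrying", "log")))  # None
```

In this toy setup, log has KB relatives but none of them is a target class, so the triplet is lost even though elephant and carrying align directly.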

To alleviate the two aforementioned issues, we adopt a pre-trained Large Language Model (LLM). Inspired by the idea of Chain-of-Thought (CoT), which arrives at an answer in a stepwise manner, we separate the triplet formation process into two chains, each of which replaces one component of the conventional approach: Chain-1 replaces the rule-based parser in Step 2, and Chain-2 replaces the KB in Step 3.

As the LLM, we employ gpt-3.5-turbo in ChatGPT.
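The two-chain structure can be sketched as follows. This is not the repository's implementation: the prompt wording, the `subject | predicate | object` reply format, and all function names are assumptions made for illustration, and `fake_llm` returns canned replies so the sketch runs without any API access.

```python
from typing import Callable, List, Optional, Tuple

Triplet = Tuple[str, str, str]
LLM = Callable[[str], str]  # prompt in, raw reply text out


def chain1_extract(caption: str, llm: LLM) -> List[Triplet]:
    """Chain-1: ask the LLM for triplets, one 'subject | predicate | object' per line (assumed format)."""
    reply = llm(f"Extract triplets from the caption: {caption}")
    triplets = []
    for line in reply.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) == 3 and all(parts):
            triplets.append((parts[0], parts[1], parts[2]))
    return triplets


def chain2_align(word: str, candidates: List[str], llm: LLM) -> Optional[str]:
    """Chain-2: map a word to one of the target classes, or None if the LLM finds no match."""
    if word in candidates:
        return word
    reply = llm(f"Which of {candidates} best matches '{word}'? Answer 'None' if nothing fits.").strip()
    return reply if reply in candidates else None


def build_triplets(caption: str, entities: List[str], predicates: List[str], llm: LLM) -> List[Triplet]:
    """Run Chain-1 then Chain-2, keeping only fully aligned triplets."""
    result = []
    for subj, pred, obj in chain1_extract(caption, llm):
        s = chain2_align(subj, entities, llm)
        p = chain2_align(pred, predicates, llm)
        o = chain2_align(obj, entities, llm)
        if s is not None and p is not None and o is not None:
            result.append((s, p, o))
    return result


def fake_llm(prompt: str) -> str:
    """Canned replies standing in for a real LLM call (no network access)."""
    if prompt.startswith("Extract"):
        return "cat | lying on | sofa"
    if "'sofa'" in prompt:
        return "couch"
    return "None"


print(build_triplets("A cat lying on a sofa", ["cat", "couch"], ["lying on", "on"], fake_llm))
# [('cat', 'lying on', 'couch')]
```

Passing the LLM in as a plain callable keeps the two chains testable offline; a real client call would be swapped in where `fake_llm` is used.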

- Release prompts and code for training the model with the Conceptual Captions dataset

- Release enhanced scene graph datasets of Conceptual Captions

- Release prompts and code for training the model with the Visual Genome caption dataset

- Release enhanced scene graph datasets of Visual Genome captions

Python: 3.9.0

conda install pytorch==1.10.1 torchvision==0.11.2 cudatoolkit=11.3 -c pytorch -c conda-forge

pip install openai

pip install einops shapely timm yacs tensorboardX ftfy prettytable pymongo tqdm pickle numpy

pip install transformers

Once the packages have been installed, please run the setup.py file.

python setup.py build develop --user

Directory Structure

root
├── dataset
│   ├── COCO
│   │   ├── captions_train2017.json
│   │   ├── captions_val2017.json
│   │   ├── COCO_triplet_labels.npy
│   │   └── images
│   │       └── *.png
│   ├── VG
│   │   ├── image_data.json
│   │   ├── VG-SGG-with-attri.h5
│   │   ├── VG-SGG-dicts-with-attri.json
│   │   └── VG_100K
│   │       └── *.png
│   ├── GQA
│   │   ├── GQA_200_ID_Info.json
│   │   ├── GQA_200_Train.json
│   │   ├── GQA_200_Test.json
│   │   └── images
│   │       └── *.png
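Before running any script, it can help to confirm that the layout above is in place. The following is a small, optional sketch (not part of the repository); the `EXPECTED` list simply mirrors the tree, and `missing_paths` is a hypothetical helper name.

```python
from pathlib import Path
from typing import List

# Files and directories taken from the directory tree in this README.
EXPECTED = [
    "dataset/COCO/captions_train2017.json",
    "dataset/COCO/captions_val2017.json",
    "dataset/COCO/COCO_triplet_labels.npy",
    "dataset/COCO/images",
    "dataset/VG/image_data.json",
    "dataset/VG/VG-SGG-with-attri.h5",
    "dataset/VG/VG-SGG-dicts-with-attri.json",
    "dataset/VG/VG_100K",
    "dataset/GQA/GQA_200_ID_Info.json",
    "dataset/GQA/GQA_200_Train.json",
    "dataset/GQA/GQA_200_Test.json",
    "dataset/GQA/images",
]


def missing_paths(root: str) -> List[str]:
    """Return the expected entries that do not exist under `root`, in listing order."""
    base = Path(root)
    return [rel for rel in EXPECTED if not (base / rel).exists()]


if __name__ == "__main__":
    # Run from the repository root; prints one line per missing entry.
    for rel in missing_paths("."):
        print("missing:", rel)
```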

To train the SGG model, we use the image captions and their corresponding images from the COCO dataset. Please download the COCO dataset and put the corresponding files into the dataset/COCO directory. The files to download are:

2017 Train images [118K/18GB]

2017 Val images [5K/1GB]

2017 Train/Val annotations [241MB]

Note that after downloading the raw images, please combine them into the dataset/COCO/images directory. For a fair comparison, we use 64K images, following the previous studies (SGNLS, Li et al, MM'22). Please download the file containing the image IDs of these 64K images.
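As a rough illustration of restricting the captions to a subset of images, the sketch below groups COCO captions by image ID and keeps only the requested IDs. It relies on the standard COCO caption annotation layout (an `annotations` list whose entries carry `image_id` and `caption`); the format of the 64K ID file is not described here, so the IDs are assumed to be already loaded into a Python collection, and the function names are hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, List, Optional


def load_coco_captions(path: str) -> dict:
    """Load a COCO caption annotation file such as captions_train2017.json."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def captions_by_image(coco: dict, keep_ids: Optional[Iterable[int]] = None) -> Dict[int, List[str]]:
    """Group captions by image_id, optionally keeping only the IDs in `keep_ids`."""
    keep = set(keep_ids) if keep_ids is not None else None
    grouped = defaultdict(list)
    for ann in coco["annotations"]:
        if keep is None or ann["image_id"] in keep:
            grouped[ann["image_id"]].append(ann["caption"].strip())
    return dict(grouped)


# Toy data in the COCO caption layout (not real annotations).
toy = {
    "annotations": [
        {"image_id": 1, "id": 10, "caption": "A cat lying on a sofa. "},
        {"image_id": 1, "id": 11, "caption": "A cat on a couch"},
        {"image_id": 2, "id": 12, "caption": "An elephant carrying a log"},
    ]
}
print(captions_by_image(toy, keep_ids=[1]))
# {1: ['A cat lying on a sofa.', 'A cat on a couch']}
```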

For evaluation, we use Visual Genome (VG) and GQA datasets.

We follow the same pre-processing strategy as VS3_CVPR23. Please download the linked files to prepare the necessary files.

- Raw Images: part 1 (9GB), part 2 (5GB)

- Annotation Files: image_data.json, VG-SGG-dicts-with-attri.json, VG-SGG-with-attri.h5

After downloading the raw images and annotation files, please put them into the dataset/VG/VG_100K and dataset/VG directories, respectively.

We follow the same pre-processing strategy as SHA-GCL-for-SGG. Please download the linked files to prepare the necessary files.

- Raw Images: Full (20.3GB)

- Annotation Files: GQA_200_ID_Info.json, GQA_200_Test.json, GQA_200_Train.json

After downloading the raw images and annotation files, please put them into the dataset/GQA/images and dataset/GQA directories, respectively.

To use gpt-3.5-turbo in ChatGPT, please provide your OpenAI API key, which can be obtained from https://platform.openai.com/account/api-keys

Please follow the steps below in order to obtain localized triplets.

Since triplet extraction via the LLM relies on OpenAI's API, the code can be run in parallel. For example, 10,000 images can be split into 10 chunks of 1,000 images, each handled by a separate run of the script. To this end, please change the start and end variables in the .py code, and change the names of the saved files to avoid overwriting them.
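The chunking described above can be computed with a small helper. This is only a sketch: `chunk_ranges` is a hypothetical name, the output file name pattern is invented for illustration, and whether the scripts treat `end` as exclusive is an assumption that should be checked against the actual code.

```python
from typing import List, Tuple


def chunk_ranges(total: int, n_workers: int) -> List[Tuple[int, int]]:
    """Split [0, total) into at most n_workers contiguous (start, end) ranges, end exclusive."""
    if n_workers <= 0:
        raise ValueError("n_workers must be positive")
    if total <= 0:
        return []
    size = -(-total // n_workers)  # ceiling division
    return [(start, min(start + size, total)) for start in range(0, total, size)]


for start, end in chunk_ranges(10_000, 10):
    # Hypothetical per-chunk output name, so parallel runs do not overwrite each other.
    print(f"start={start} end={end} -> triplets_{start}_{end}.json")
```

Each printed pair corresponds to one run of the extraction script with its own start, end, and output file name.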

- Extract triplets from original captions

python triplet_extraction_process/extract_triplet_with_original_caption.py {API_KEY}

- Extract triplets from paraphrased captions

python triplet_extraction_process/extract_triplet_with_paraphrased_caption.py {API_KEY}

After Chain-1, the output files are located in the dataset/COCO directory. The files containing misaligned triplets are also available for download.

python triplet_extraction_process/alignment_classes_vg.py {API_KEY}

After Chain-2, the output files are located in the triplet_extraction_process/alignment_dict directory. The files containing aligned entity/predicate information can be downloaded as:

- triplet_extraction_process/alignment_dict/aligned_entity_dict_vg.pkl

- triplet_extraction_process/alignment_dict/aligned_predicate_dict_vg.pkl
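The internal structure of these .pkl files is not documented here, so it is worth inspecting them before relying on any particular layout. The helper below is a minimal, generic sketch (`preview_pickle` is a hypothetical name, not a repository function) that loads a pickle and returns its type together with a few entries if it turns out to be a dict. As usual, only unpickle files from a source you trust.

```python
import pickle
from typing import Any, Tuple


def preview_pickle(path: str, n: int = 5) -> Tuple[str, Any]:
    """Load a pickle file and return (type name, first n items if it is a dict, else the object)."""
    with open(path, "rb") as f:
        obj = pickle.load(f)
    if isinstance(obj, dict):
        return type(obj).__name__, list(obj.items())[:n]
    return type(obj).__name__, obj


# Example usage (path taken from the list above):
# kind, sample = preview_pickle("triplet_extraction_process/alignment_dict/aligned_entity_dict_vg.pkl")
# print(kind, sample)
```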

python triplet_extraction_process/final_preprocess_triplets_vg.py

After this final step, the output file is located in the dataset/VG directory. The file containing aligned triplets in VS3 format is also available for download.

We use the same code as VS3 to ground unlocalized triplets. A pre-trained GLIP model is required to ground them. Please put the pre-trained GLIP model in the MODEL directory.

# Download pre-trained GLIP models

mkdir MODEL

wget https://penzhanwu2bbs.blob.core.windows.net/data/GLIPv1_Open/models/glip_tiny_model_o365_goldg_cc_sbu.pth -O swin_tiny_patch4_window7_224.pth

wget https://penzhanwu2bbs.blob.core.windows.net/data/GLIPv1_Open/models/glip_large_model.pth -O swin_large_patch4_window12_384_22k.pth

# Grounding unlocalized triplets

python tools/data_preprocess/parse_SG_from_COCO_caption_LLM_VG.py

After grounding the unlocalized triplets, the output file named aligned_triplet_info_vg_grounded.json is located in the dataset/VG directory. The file of localized triplets is also available for download.

Based on the triplets extracted in Chain-1, please run the commands below, which mirror the process in Triplet Extraction Process via LLM - VG.

# Chain-2: Alignment of Classes in Triplets via LLM

python triplet_extraction_process/alignment_classes_gqa.py {API_KEY}

# Construction of aligned triplets in VS3 format

python triplet_extraction_process/final_preprocess_triplets_gqa.py

# Grounding Unlocalized Triplets

python tools/data_preprocess/parse_SG_from_COCO_caption_LLM_GQA.py

We provide files regarding the GQA dataset.

To change which LLM-constructed localized triplets are used for training, please change the cococaption_scene_graph path in the maskrcnn_benchmark/config/paths_catalog.py file.

Please change the variable in cococaption_scenegraph to dataset/VG/aligned_triplet_info_vg_grounded.json (localized triplets).

bash train_vg.sh

If you want to train the model with the reweighting strategy, please run the following code.

bash train_rwt_vg.sh

Please change the variable in cococaption_scenegraph to dataset/GQA/aligned_triplet_info_gqa_grounded.json (localized triplets). After changing the variable, please run the following code.

bash train_gqa.sh

# Please change model checkpoint in test.sh file

bash test.sh

We also provide pre-trained models.

- model_VG_VS3.pth, config.yml, evaluation_res.txt

- model_VG_VS3_Rwt.pth, config.yml, evaluation_res.txt

@misc{kim2023llm4sgg,

title={LLM4SGG: Large Language Model for Weakly Supervised Scene Graph Generation},

author={Kibum Kim and Kanghoon Yoon and Jaehyeong Jeon and Yeonjun In and Jinyoung Moon and Donghyun Kim and Chanyoung Park},

year={2023},

eprint={2310.10404},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

The code is developed on top of VS3.