Welcome to the PDF QA Application! This project lets you upload a PDF or text file and ask questions about its content. The application uses OpenAI language models together with embedding-based document search to answer from the text of the uploaded file.

Before running the application, ensure you have the following prerequisites installed:

- Python 3.x

- Chroma Database (see the Chroma documentation for installation instructions)

- Clone the repository to your local machine:

git clone https://github.com/alenjohn05/LLM-PDF-Chat.git

- Navigate to the project directory (git clone names it after the repository):

cd LLM-PDF-Chat

- Install the required Python dependencies:

poetry install

If you don't have Poetry installed, you can install it using:

pip install poetry

- Start a Poetry shell:

poetry shell

Once the application is installed, create a .env file from .env.sample:

cp .env.sample .env

Then edit the .env file with your OpenAI organization and OpenAI key.

- Start the application:

chainlit run app/app.py -w

- Access the application in your web browser (Chainlit serves on http://localhost:8000 by default and prints the exact URL in the terminal when it starts).

- You'll be greeted with a welcome message and instructions on how to use the application.

- Click the "Upload" button to select and upload a PDF or text file.

- After uploading a file, you can ask questions related to the content of the file.

- The application will use advanced natural language processing to provide answers based on the uploaded document.

You can configure the application by modifying the settings in the .env file. This includes setting the OpenAI API key, adjusting search engine parameters, and more. Please refer to the documentation for detailed configuration options.
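As an illustration of the configuration step, the sketch below parses a .env-style file and reports which required settings are still empty. It is a minimal stand-in written with the standard library only, not the application's own loader. The variable names `OPENAI_API_KEY` and `OPENAI_ORGANIZATION` are assumptions; check .env.sample for the names the app actually reads.

```python
import os

# Assumed variable names; .env.sample is the authority on the real ones.
REQUIRED_KEYS = ("OPENAI_API_KEY",)


def parse_env_text(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    settings = {}
    if os.path.exists(".env"):
        with open(".env", encoding="utf-8") as handle:
            settings = parse_env_text(handle.read())
    print("Missing settings:", missing_keys(settings))
```

Running a check like this before starting the app can catch a forgotten key earlier than a failed API call would.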

This application can be extended and customized for various use cases. You can explore advanced features such as customizing prompts, integrating with different document loaders, and optimizing the search engine. Detailed instructions and examples can be found in the documentation.

We welcome contributions to this project. If you have ideas for improvements, bug fixes, or new features, please open an issue or submit a pull request. Your contributions help make this application better for everyone.

This project is licensed under the MIT License. You are free to use, modify, and distribute the code for your own purposes.

This project was developed as part of a workshop series. Below are details about the workshops:

The first lab in the workshop series focuses on building a basic chat-with-your-data application using large language model (LLM) techniques. The application lets users interact with a chat interface, upload PDF files, and ask questions related to the content of the files.

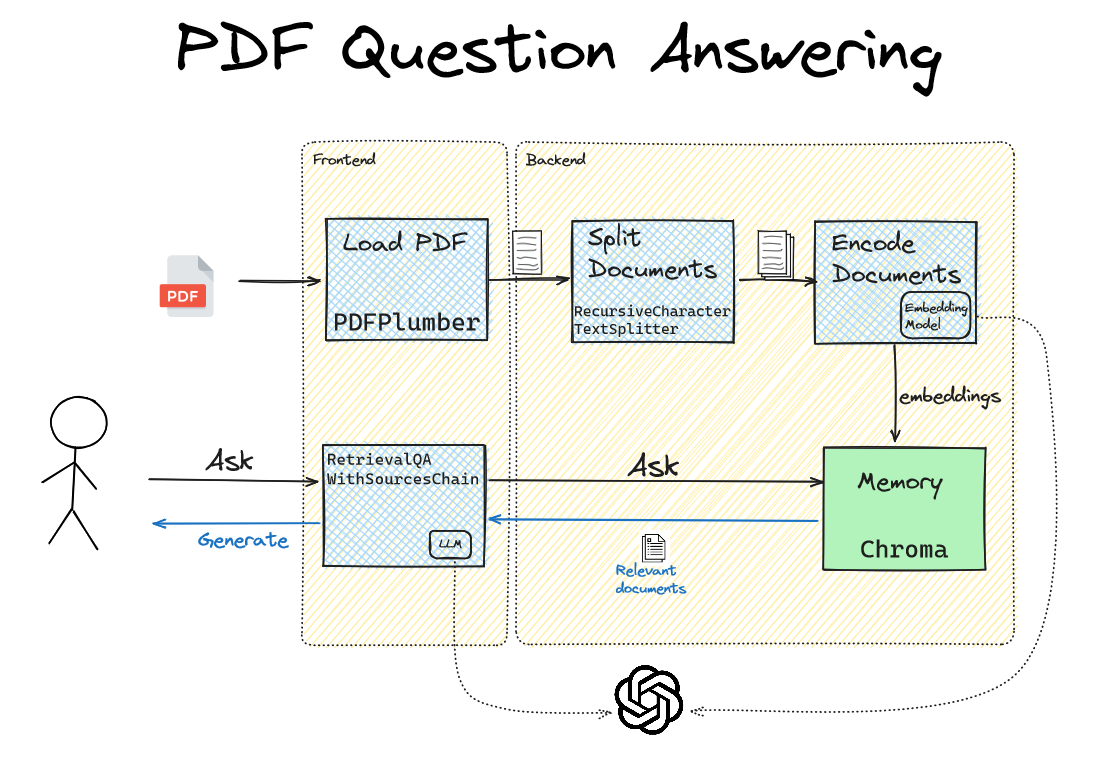

- User uploads a PDF file.

- App loads and decodes the PDF into plain text.

- App chunks the text into smaller documents to fit the input size limitations of embedding models.

- App computes an embedding for each chunk and stores the embeddings in memory.

- User asks a question.

- App retrieves relevant documents from memory and generates an answer based on the retrieved text.
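The steps above can be sketched in a few lines of plain Python. This is a toy version under stated assumptions, not the application's code: word counts stand in for OpenAI embeddings, a Python list stands in for Chroma, and the names `chunk_text`, `embed`, and `InMemoryStore` are hypothetical. It stops at retrieval; in the real flow the retrieved chunks are passed to the LLM to generate the answer.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping character windows."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    return [text[start:start + chunk_size] for start in range(0, len(text), step)]


def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryStore:
    """Keeps (chunk, vector) pairs in a list, standing in for a vector database."""

    def __init__(self):
        self.items = []

    def add(self, chunks):
        for chunk in chunks:
            self.items.append((chunk, embed(chunk)))

    def search(self, question, k=2):
        """Return the k chunks most similar to the question."""
        query = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(query, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


if __name__ == "__main__":
    store = InMemoryStore()
    store.add(chunk_text("Plain text decoded from the uploaded PDF goes here.", 30, 10))
    print(store.search("What was uploaded?", k=1))
```

The overlap between chunks is a common choice so that a sentence cut at a chunk boundary still appears whole in at least one chunk.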

In this lab, we used the following components to build the PDF QA Application:

- Langchain: A framework for developing LLM applications.

- Chainlit: A full-stack interface for building LLM applications.

- Chroma: A database for managing LLM embeddings.

- OpenAI: For advanced natural language processing.

The application's architecture is designed as follows:

To run the complete application, follow the instructions provided in the README.

The second lab in the workshop series focuses on improving the question answering capabilities of the application by addressing issues related to prompt engineering. In particular, we address the problem of "hallucination" where the model generates incorrect information in responses.

During testing, this showed up mainly on complex questions, where the model sometimes produced factually incorrect answers even though the relevant text had been retrieved from the document.

To resolve the hallucination problem, we conducted experiments with different prompts and prompt structures. We discovered that removing certain prompts and modifying the sources prompt improved the model's accuracy in generating answers.

To implement these changes, we traced the Langchain source code to identify where prompts were used in the application. We then extracted and customized the prompts in a separate file and initialized the RetrievalQAWithSourcesChain with the custom prompts.
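To make the idea of a separate prompts file concrete, here is a minimal sketch of what such a file might contain. The prompt wording is hypothetical; the prompts used in the workshop are not reproduced here. The placeholder names `{summaries}`, `{question}`, `{page_content}`, and `{source}` are chosen on the assumption that they match the variables LangChain's sources chain expects, which should be verified against the installed LangChain version. The `build_prompt` helper is only for previewing the rendered text and is not part of LangChain.

```python
# Hypothetical prompt text; the wording used in the workshop is not shown here.
QA_TEMPLATE = (
    "Answer the question using only the extracts below.\n"
    "If the extracts do not contain the answer, reply \"I don't know\".\n"
    "List the sources you used on a final line starting with SOURCES:.\n\n"
    "{summaries}\n\n"
    "QUESTION: {question}\n"
    "FINAL ANSWER:"
)

# How each retrieved document is rendered before being joined into {summaries}.
DOCUMENT_TEMPLATE = "Content: {page_content}\nSource: {source}"


def build_prompt(question, documents):
    """Render retrieved documents and the question into one prompt string."""
    summaries = "\n\n".join(
        DOCUMENT_TEMPLATE.format(page_content=doc["page_content"], source=doc["source"])
        for doc in documents
    )
    return QA_TEMPLATE.format(summaries=summaries, question=question)


if __name__ == "__main__":
    docs = [{"page_content": "The invoice is due in March.", "source": "0-pl"}]
    print(build_prompt("When is the invoice due?", docs))
```

Telling the model explicitly to answer only from the extracts, and to admit when they lack the answer, is one common way to reduce hallucination; whether it matches the exact change made in this lab is an assumption.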

The updated prompts improved the model's ability to generate accurate answers, enhancing the overall performance of the application.

To run the complete application with these prompt improvements, follow the instructions provided in the README.