Reference: https://arxiv.org/pdf/1507.06527.pdf

- PyTorch (1.5.0)

- OpenAI Gym (0.17.1)

- Tensorboard (2.1.0)

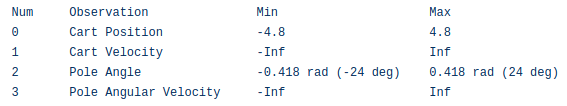

- The CartPole-v1 state consists of the cart position, cart velocity, pole angle, and pole angular velocity.

- I define the partially observed state as the cart position and the pole angle. The agent has no direct information about either velocity.
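As a rough illustration of the partial observation described above, the sketch below drops the two velocity entries from a full CartPole state. The index layout follows Gym's CartPole observation order; the function name `to_partial_observation` is a hypothetical name chosen for this example and is not necessarily what the repository uses.

```python
# Gym's CartPole observation layout:
# [cart position, cart velocity, pole angle, pole angular velocity]
CART_POSITION, POLE_ANGLE = 0, 2


def to_partial_observation(state):
    """Keep only cart position and pole angle, dropping both velocities.

    Hypothetical helper for illustration; `state` is any indexable
    sequence with the four CartPole entries.
    """
    return [state[CART_POSITION], state[POLE_ANGLE]]


full_state = [0.01, -0.20, 0.03, 0.45]
partial = to_partial_observation(full_state)
print(partial)  # [0.01, 0.03]
```

With a single frame like this the agent cannot tell which way the cart or pole is moving, which is why a recurrent network that accumulates information over time is useful here.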

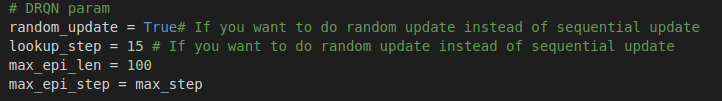

- Bootstrapped sequential updates: episodes are selected randomly from the replay memory, and updates begin at the start of the episode and proceed forward through time to its end. The targets at each timestep are generated from the target Q-network. The RNN's hidden state is carried forward throughout the episode.

- Bootstrapped random updates: episodes are selected randomly from the replay memory, and updates begin at a random point in the episode and proceed for only a fixed number of unrolled timesteps (lookup_step). The targets at each timestep are generated from the target Q-network. The RNN's initial state is zeroed at the start of the update.

- The above parameters configure DRQN. The random update parameter selects which of the two update methods is used.

- lookup_step is the number of consecutive timesteps unrolled in each random update. I found that a longer lookup_step works better.
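The difference between the two update methods can be sketched as a choice of which slice of a stored episode is fed to the network. This is a minimal sketch under stated assumptions, not the repository's actual code: the function name `sample_update_window`, the `rng` argument, and the handling of episodes shorter than `lookup_step` are all hypothetical.

```python
import random


def sample_update_window(episode, lookup_step, random_update, rng=random):
    """Return the transitions used for one update from a single episode.

    random_update=False: sequential update, the whole episode from its
        start (the RNN hidden state would be carried through all of it).
    random_update=True: random update, `lookup_step` consecutive
        transitions from a random start (the RNN hidden state would be
        zeroed at the first transition of the window).
    """
    if not random_update:
        return list(episode)
    if len(episode) <= lookup_step:
        # Assumption for this sketch: short episodes are used in full.
        return list(episode)
    # randint is inclusive on both ends, so the window always fits.
    start = rng.randint(0, len(episode) - lookup_step)
    return list(episode[start:start + lookup_step])


episode = list(range(20))  # stand-in for 20 stored transitions
rng = random.Random(0)     # seeded generator for reproducibility
window = sample_update_window(episode, lookup_step=5, random_update=True, rng=rng)
full = sample_update_window(episode, lookup_step=5, random_update=False)
print(len(window), len(full))  # 5 20
```

A longer `lookup_step` gives the recurrent network more timesteps to build up a useful hidden state from zero before its outputs are trained on, which is one plausible reason longer windows performed better.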

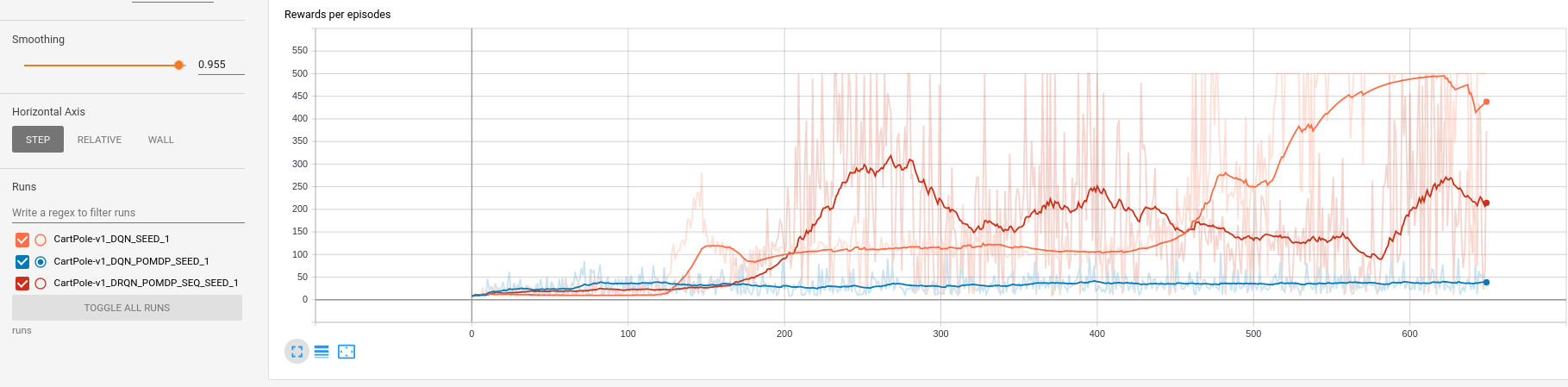

- (orange) DQN in the fully observed MDP setting reaches the highest reward.

- (blue) DQN in the POMDP setting never reaches a high reward.

- (red) DRQN in the POMDP setting reaches reasonable performance even though it observes only positions, not velocities.

- Random update of DRQN