This repository reimplements several convolution layer variants from state-of-the-art (SOTA) papers in TensorFlow 2.0.

This repository currently includes:

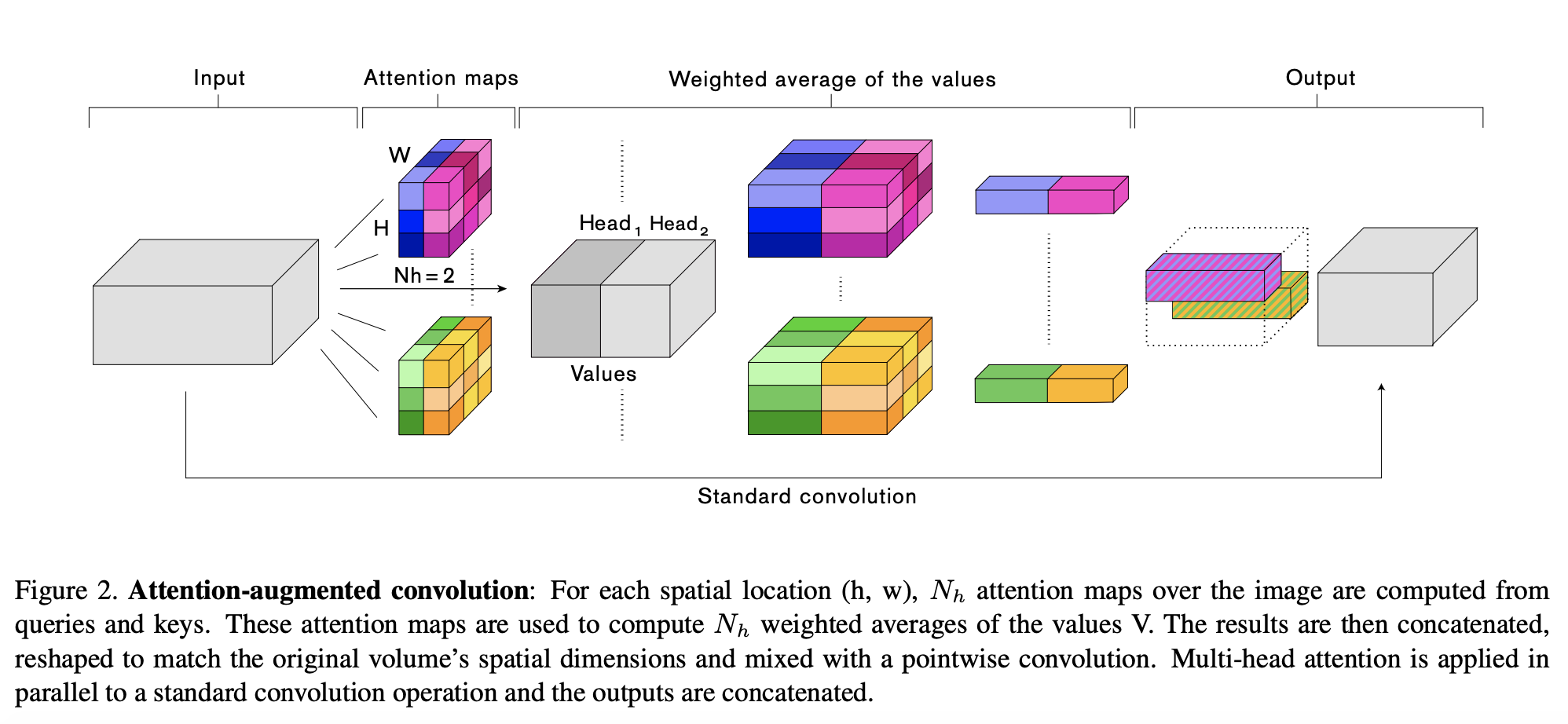

- Attention Augmented (AA) Convolution Layer

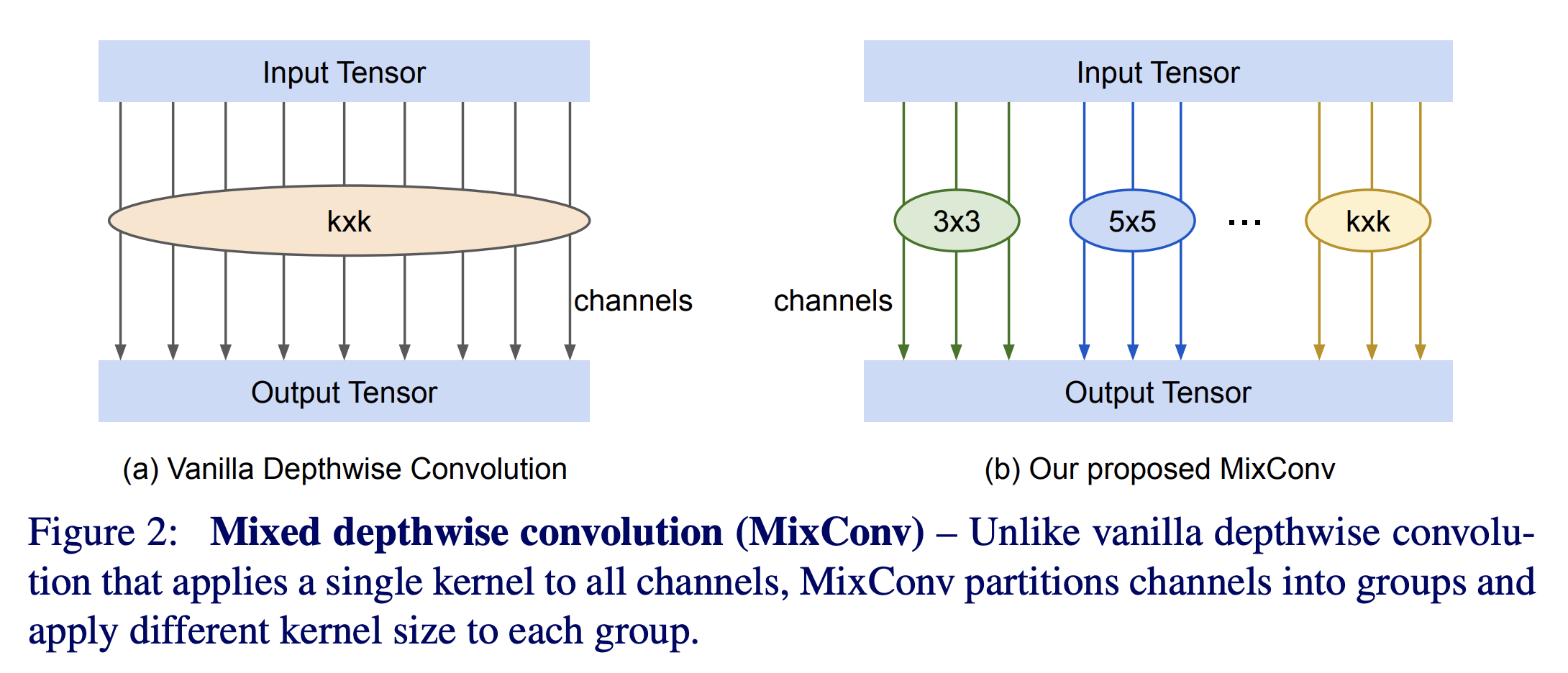

- Mixed Depthwise Convolution Layer

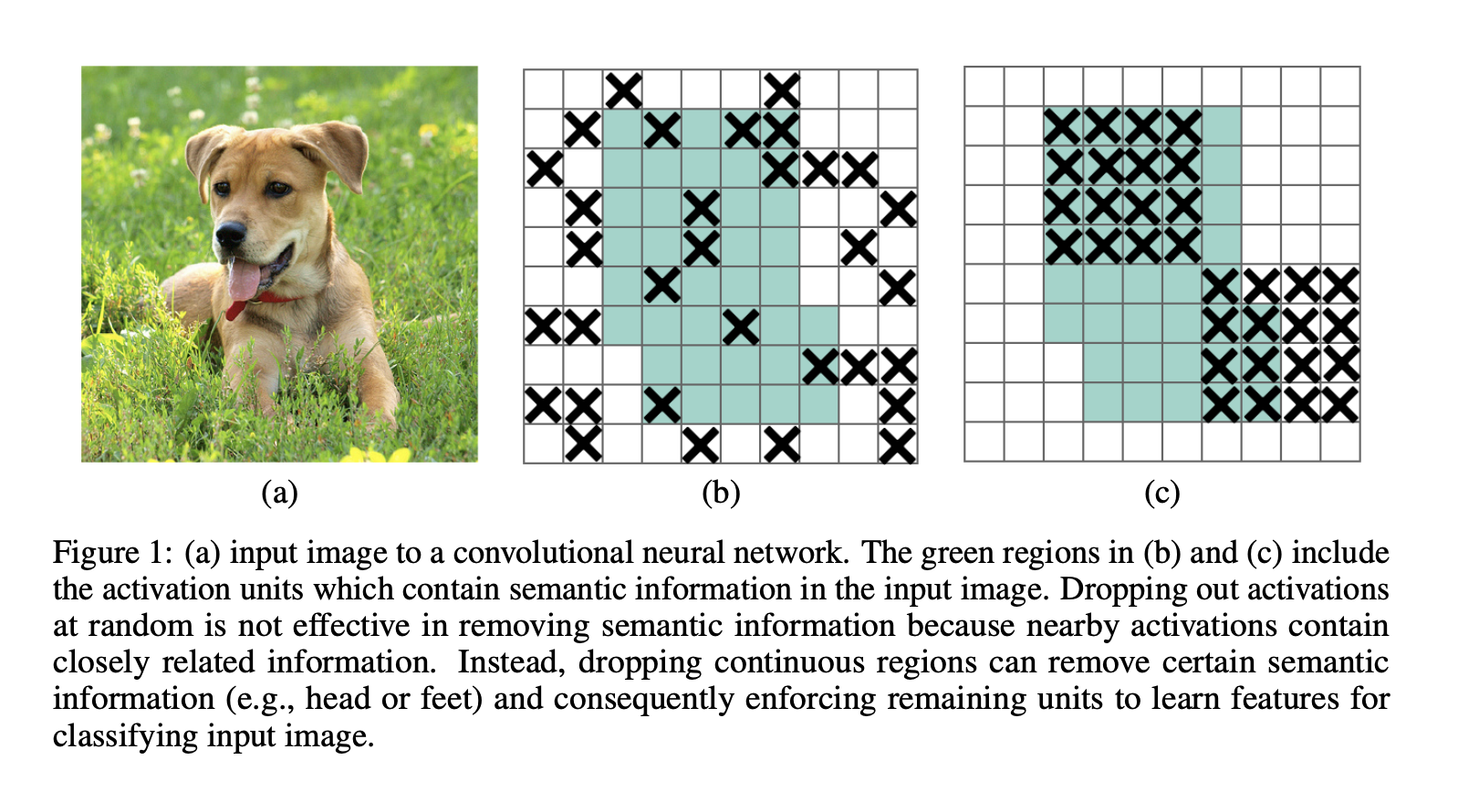

- Drop Block

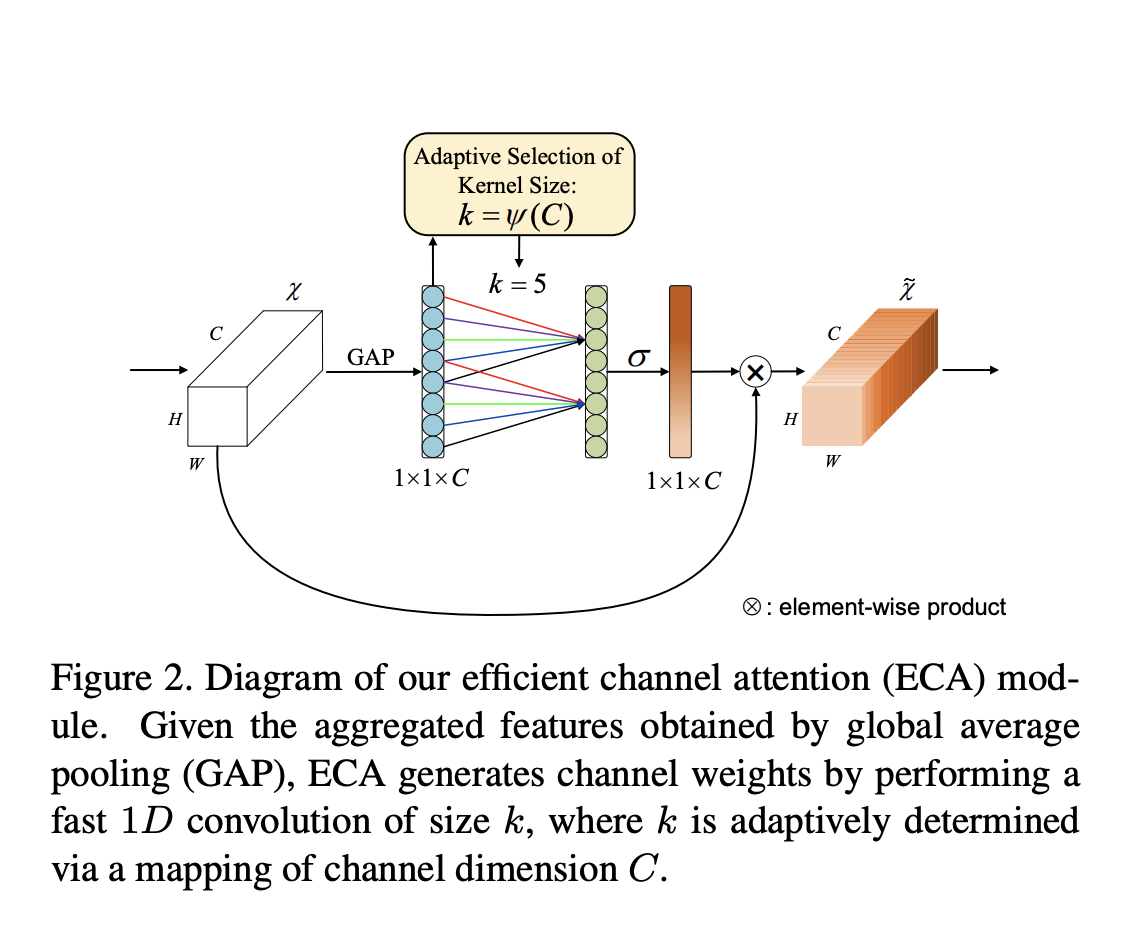

- Efficient Channel Attention (ECA) Layer

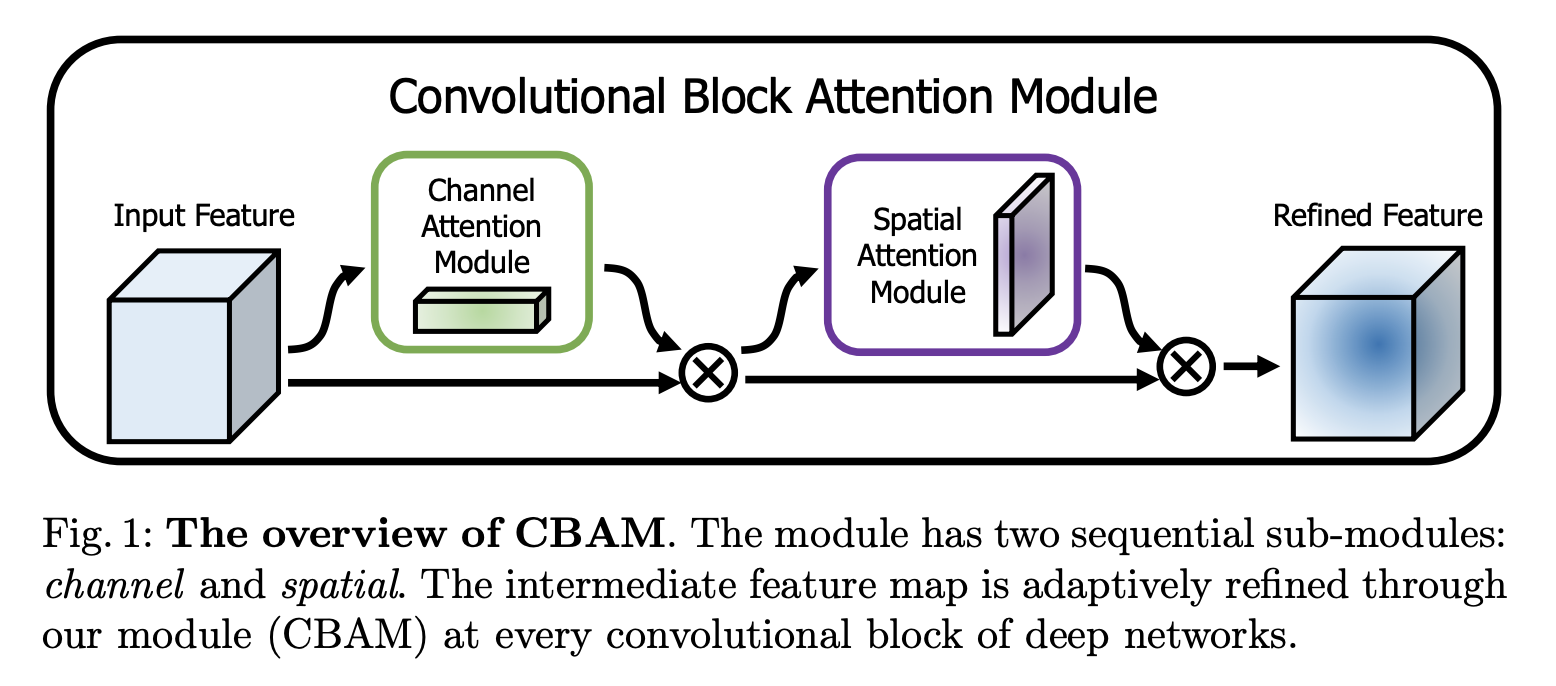

- Convolutional Block Attention Module (CBAM) Layer

Other implementations of the Attention Augmented (AA) Convolution Layer:

- PyTorch: leaderj1001

- Keras: titu1994

- TensorFlow 1.0: gan3sh500

Note: the AA convolution layer in this repository does not yet include relative positional encodings.

Other implementations of the Mixed Depthwise Convolution Layer:

- TensorFlow 1.0: TensorFlow

Note: this implementation combines depthwise convolution with pointwise convolution, whereas the original implementation used only depthwise convolutions.
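
The difference between the two operations can be illustrated with a small NumPy sketch. It is not the code used in this repository: it assumes one common arrangement (a depthwise convolution followed by a 1 x 1 pointwise convolution), and the helper names `depthwise_conv` and `pointwise_conv` are hypothetical.

```python
import numpy as np


def depthwise_conv(x, kernels):
    """Apply one k x k kernel per channel (valid padding, stride 1).

    x: (C, H, W) input, kernels: (C, k, k). This computes cross-correlation,
    which is what deep learning frameworks call convolution.
    """
    c, h, w = x.shape
    k = kernels.shape[-1]
    out = np.zeros((c, h - k + 1, w - k + 1), dtype=x.dtype)
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            patch = x[:, i:i + k, j:j + k]  # (C, k, k) window
            # Each channel is reduced independently: no mixing across channels.
            out[:, i, j] = np.sum(patch * kernels, axis=(1, 2))
    return out


def pointwise_conv(x, weights):
    """Mix channels with a 1 x 1 convolution. weights: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weights, x)


x = np.ones((4, 5, 5), dtype=np.float32)
dw = depthwise_conv(x, np.ones((4, 3, 3), dtype=np.float32))
pw = pointwise_conv(dw, np.ones((2, 4), dtype=np.float32))
print(dw.shape, pw.shape)
```

The depthwise step filters each channel spatially on its own, and the pointwise step is the only place where information moves between channels.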

Other implementations of Drop Block:

- TensorFlow 1.0: TensorFlow

- PyTorch: miguelvr

Other implementations of the Efficient Channel Attention (ECA) Layer:

- PyTorch: BangguWu

Other implementations of the Convolutional Block Attention Module (CBAM) Layer:

- PyTorch: Jongchan

Here is an example of how to use one of the layers:

    import tensorflow as tf
    from convVariants import AAConv

    aaConv = AAConv(
        channels_out=32,
        kernel_size=3,
        depth_k=8,
        depth_v=8,
        num_heads=4)

The layer can be treated like any other tf.keras.layers class.

    model = tf.keras.models.Sequential([
        aaConv,
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10, activation='softmax')
    ])

    model.compile(
        optimizer='adam',
        loss='sparse_categorical_crossentropy',
        metrics=['accuracy'])

    # x_train and y_train are not defined in this snippet: supply NCHW inputs
    # and integer class labels.
    model.fit(x_train, y_train, epochs=5)
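
The example above does not define `x_train` or `y_train`. As a minimal sketch, placeholder arrays with compatible shapes could be built as follows. The batch size, image size, and random values are assumptions chosen purely for illustration; real data would come from your own dataset.

```python
import numpy as np

# Hypothetical placeholder data: 64 single-channel 28 x 28 images in NCHW layout.
rng = np.random.default_rng(0)
x_train = rng.random((64, 1, 28, 28), dtype=np.float32)

# Integer class labels in [0, 10), as expected by sparse_categorical_crossentropy
# with a 10-unit softmax output.
y_train = rng.integers(0, 10, size=64)

print(x_train.shape, y_train.shape)
```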

Test cases are located in `tests.py`.

To run tests:

    cd Convolution_Variants
    python tests.py

- TensorFlow 2.0.0 with GPU support is required.

- These layers have only been tested with inputs in NCHW (channels-first) format.
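
Many image pipelines produce NHWC (channels-last) batches, so a conversion step may be needed before data reaches these layers. A minimal NumPy sketch of that reordering is shown below. The helper name `nhwc_to_nchw` is hypothetical and not part of this repository.

```python
import numpy as np


def nhwc_to_nchw(batch):
    """Reorder a (N, H, W, C) batch to (N, C, H, W)."""
    return np.transpose(batch, (0, 3, 1, 2))


images_nhwc = np.zeros((8, 32, 32, 3), dtype=np.float32)
images_nchw = nhwc_to_nchw(images_nhwc)
print(images_nchw.shape)  # (8, 3, 32, 32)
```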

Links to the original papers: