This repository is the official implementation of the paper:

Residual Policy Learning for Vehicle Control of Autonomous Racing Cars

The paper will be presented at the IEEE Intelligent Vehicles Symposium (IV) 2023. If you find our work useful, please consider citing it.

The development of vehicle controllers for autonomous racing is challenging because racing cars operate at their physical driving limit. Prompted by the demand for improved performance, autonomous racing research has seen the proliferation of machine learning-based controllers. While these approaches show competitive performance, their practical applicability is often limited. Residual policy learning promises to mitigate this by combining classical controllers with learned residual controllers. The critical advantage of residual controllers is their high adaptability parallel to the classical controller's stable behavior. We propose a residual vehicle controller for autonomous racing cars that learns to amend a classical controller for the path-following of racing lines. In an extensive study, performance gains of our approach are evaluated for a simulated car of the F1TENTH autonomous racing series. The evaluation for twelve replicated real-world racetracks shows that the residual controller reduces lap times by an average of 4.55 % compared to a classical controller and zero-shot generalizes to new racetracks.

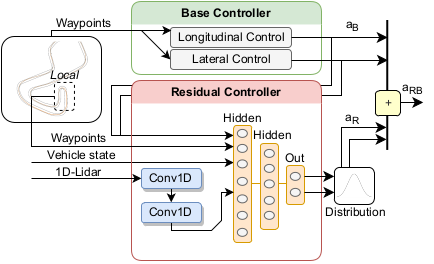

Proposed control architecture consisting of a base controller that outputs control action
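The idea behind the architecture can be illustrated in a few lines: the action sent to the car is the base controller's action plus a learned correction. The following is a minimal sketch and not the repository's implementation; the function name `combine_actions`, the action layout (steering angle, velocity), the residual scaling factor, and the action limits are all assumptions chosen for illustration.

```python
from typing import Sequence, Tuple


def combine_actions(
    base_action: Sequence[float],
    residual_action: Sequence[float],
    residual_scale: float = 0.1,              # hypothetical weight of the learned correction
    low: Sequence[float] = (-0.4, 0.0),       # hypothetical lower limits (steering, velocity)
    high: Sequence[float] = (0.4, 8.0),       # hypothetical upper limits (steering, velocity)
) -> Tuple[float, ...]:
    """Add a scaled residual to the base action and clip the result to the limits."""
    combined = []
    for base, residual, lo, hi in zip(base_action, residual_action, low, high):
        value = base + residual_scale * residual
        combined.append(min(max(value, lo), hi))
    return tuple(combined)


if __name__ == "__main__":
    # Base controller proposes (steering, velocity); the residual policy nudges both.
    print(combine_actions((0.10, 5.0), (0.5, 1.0)))
```

Scaling and clipping the residual keeps the learned part from overriding the base controller entirely, which is one common way to retain the base controller's stable behavior.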

This repository uses an adapted version of the F1TENTH gym as the simulator. The map data of the replicated real-world racetracks are taken from the F1TENTH maps repository.

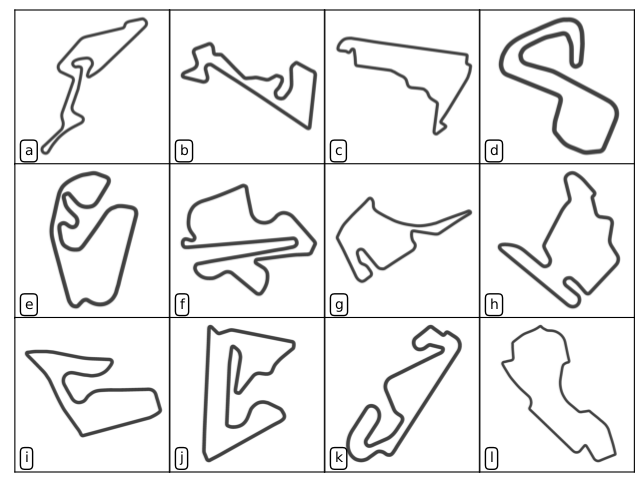

Racetracks for training and testing: (a) Nurburgring, (b) Moscow Raceway, (c) Mexico City, (d) Brands Hatch, (e) Sao Paulo, (f) Sepang, (g) Hockenheim, (h) Budapest, (i) Spielberg, (j) Sakhir, (k) Catalunya, and (l) Melbourne.

| Track | Baseline in s | Residual in s | Improvement in s | Improvement in % |
|---|---|---|---|---|
| Nuerburgring | 60.84 | 58.07 | 2.77 | 4.55 |
| Moscow | 46.75 | 43.45 | 3.30 | 7.06 |
| Mexico City | 49.12 | 46.76 | 2.36 | 4.80 |
| Brands Hatch | 45.92 | 44.97 | 0.95 | 2.07 |
| Sao Paulo | 47.92 | 44.92 | 3.00 | 6.26 |
| Sepang | 66.24 | 63.18 | 3.06 | 4.62 |
| Hockenheim | 49.96 | 47.35 | 2.61 | 5.22 |
| Budapest | 54.33 | 51.67 | 2.66 | 4.90 |
| Spielberg | 45.33 | 43.93 | 1.40 | 3.09 |

| Track | Baseline in s | Residual in s | Improvement in s | Improvement in % |
|---|---|---|---|---|
| Sakhir | 60.34 | 57.72 | 2.62 | 4.34 |
| Catalunya | 56.50 | 53.54 | 2.96 | 5.24 |
| Melbourne | 61.03 | 59.54 | 1.49 | 2.44 |

| Track | Baseline in s | Residual in s | Improvement in s | Improvement in % |
|---|---|---|---|---|
| Average | 53.69 | 50.85 | 2.43 | 4.55 |
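The improvement columns follow directly from the two lap-time columns: the absolute improvement is the baseline lap time minus the residual lap time, and the relative improvement is that difference divided by the baseline. The short sketch below recomputes both for three rows of the table; the helper name `improvement` is ours and is not part of the repository.

```python
from typing import Tuple


def improvement(baseline_s: float, residual_s: float) -> Tuple[float, float]:
    """Return the lap-time improvement in seconds and in percent of the baseline."""
    delta_s = baseline_s - residual_s
    return delta_s, 100.0 * delta_s / baseline_s


# (baseline lap time in s, residual lap time in s), copied from the table above.
lap_times = {
    "Nuerburgring": (60.84, 58.07),
    "Moscow": (46.75, 43.45),
    "Brands Hatch": (45.92, 44.97),
}

for track, (baseline_s, residual_s) in lap_times.items():
    delta_s, delta_pct = improvement(baseline_s, residual_s)
    print(f"{track}: {delta_s:.2f} s ({delta_pct:.2f} %)")
```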

- We recommend using a virtual environment for the installation:

      python -m venv rpl4f110_env
      source rpl4f110_env/bin/activate

- Activate the environment and install the following packages:

      pip install torch
      pip install gymnasium
      pip install tensorboard
      pip install hydra-core
      pip install tqdm
      pip install flatdict
      pip install torchinfo
      pip install torchrl
      pip install numba
      pip install scipy
      pip install pyglet
      pip install pillow
      pip install pyglet==1.5

- The simulator should be installed as a module:

      cd simulator
      pip install -e .
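After the installation, it can be useful to confirm that every package is importable from the active environment. The following is a small optional check, not part of the repository; it only uses the standard library. Note that two import names differ from their pip names (`hydra-core` is imported as `hydra`, `pillow` as `PIL`). The simulator module is left out because its import name is not stated here.

```python
import importlib.util
from typing import Iterable, List

# Import names of the packages installed above.
REQUIRED_MODULES = [
    "torch", "gymnasium", "tensorboard", "hydra", "tqdm", "flatdict",
    "torchinfo", "torchrl", "numba", "scipy", "pyglet", "PIL",
]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```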

After setting your desired configuration in the `config.yaml` file, you can start the training by running:

    python main.py

A specific experiment name can be set by running:

    python main.py +exp_name=your_experiment_name

The use of your GPU can be avoided by running:

    python main.py +cuda=False

The training results are stored in the `outputs` folder. The training progress can be monitored with tensorboard:

    tensorboard --logdir outputs

The baseline controller can be evaluated by running:

    python main.py +bench_baseline=True

Most of the code is documented with automatically generated docstrings; please use them with caution.
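When several runs are launched from a script, the command-line overrides listed above can be assembled programmatically. The helper below is a hypothetical convenience sketch, not part of the repository; `build_command` is our own name, and it only uses the three overrides documented in this README (`+exp_name`, `+cuda`, `+bench_baseline`).

```python
from typing import List, Optional


def build_command(
    exp_name: Optional[str] = None,
    cuda: Optional[bool] = None,
    bench_baseline: Optional[bool] = None,
    python: str = "python",
) -> List[str]:
    """Build the argument list for main.py; overrides left as None are omitted."""
    cmd = [python, "main.py"]
    if exp_name is not None:
        cmd.append(f"+exp_name={exp_name}")
    if cuda is not None:
        cmd.append(f"+cuda={cuda}")
    if bench_baseline is not None:
        cmd.append(f"+bench_baseline={bench_baseline}")
    return cmd


if __name__ == "__main__":
    # Example: a named training run that does not use the GPU.
    print(" ".join(build_command(exp_name="cpu_run", cuda=False)))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root to start the run.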

If you find our work useful, please consider citing our paper:

    @inproceedings{trumpp2023residual,
      author="Raphael Trumpp and Denis Hoornaert and Marco Caccamo",
      title="Residual Policy Learning for Vehicle Control of Autonomous Racing Cars",
      booktitle="2023 IEEE Intelligent Vehicles Symposium (IV) (IEEE IV 2023)",
      address="Anchorage, USA",
      days="4",
      month=jun,
      year=2023,
      keywords="Residual Policy Learning; Autonomous Racing; Vehicle Control; F1TENTH"
    }

GNU General Public License v3.0 only (GPL-3.0) © raphajaner