Our goal is to draw insights from existing BlueBike trip data and to build an application that helps analyze, and give feedback on, live feed data from BlueBike stations. We analyze trip data, station status data, and station location data in order to build a comprehensive understanding of BlueBike usage.

We're not live atm (we deployed to GCP but were running out of credit RIP) but you can run it locally.

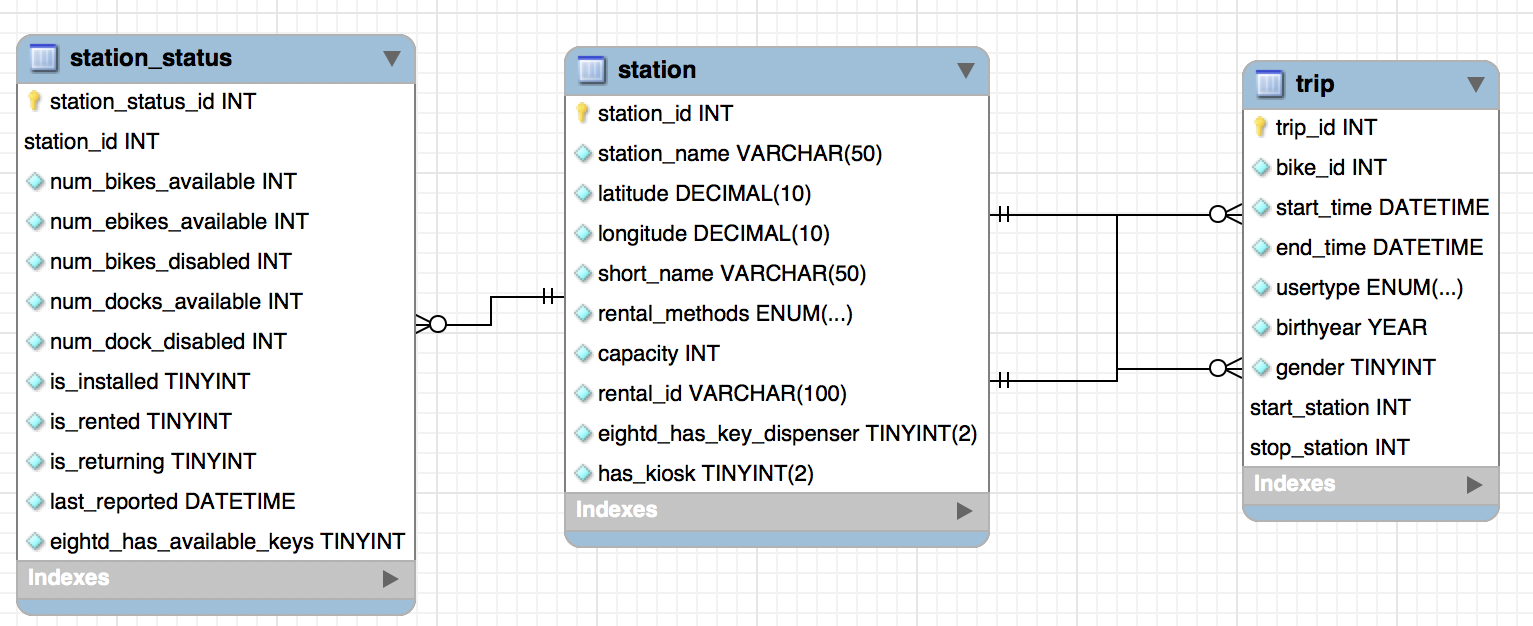

This project provides a bare-bones application, not connected to any MySQL database. Provided that you have your own database you'd like to aggregate data in, we give you the means to define a database (see our schema in the docs directory), an API to access that database, services that use the API to insert data in the database, and finally a front end service with an interactive module for data visualization.

- The Backend:

  - An API Service:
    - A container running the API of our application. Abstracts away connecting to the DB and sending queries via the following API calls:
      - GET Request Path: `/<entity>`
      - POST Request Path: `/<entity>`
    - See the `docs/api` folder for more information

  - An Auth Service:
    - A container that gives DB credentials to the API service.
    - Based on what type of user is calling the API, it will provision a different DB connection
      - If we are calling it from the front-end server, then we assume the unauthenticated, default role `default`
      - If we are calling it via our data service, then we assume the authenticated role `data-creator`

  - A Live Data Service:
    - A container that calls the API to upload live data from GBFS to our DB
      - Will always be running, polling https://gbfs.bluebikes.com/gbfs/gbfs.json for data, and adding that to the DB
      - Will also have an additional method to upload data from CSV files

  - A CSV Data Service:
    - A container that calls the API to upload CSV data provided by users to our DB
      - Will always be running, polling https://gbfs.bluebikes.com/gbfs/gbfs.json for data, and adding that to the DB
      - Will also have an additional method to upload data from CSV files

- The Frontend:

  - An Nginx Service:
    - Hosts HTML and CSS with an embedded Tableau module
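To make the Live Data Service flow a bit more concrete, here is a minimal sketch of how a poller might locate a feed in `gbfs.json` and prepare a POST to the API's `/<entity>` path. It is not the project's actual code. The API address (`API_BASE`), the entity name `station_status`, and the JSON request body are all assumptions made for illustration, and the feed lookup assumes the GBFS v1/v2 auto-discovery layout (`data` → language → `feeds`).

```python
import json
import urllib.request

GBFS_ROOT = "https://gbfs.bluebikes.com/gbfs/gbfs.json"
API_BASE = "http://localhost:8000"  # assumption: hypothetical local API address


def find_feed_url(gbfs_index, feed_name, language="en"):
    """Return the URL of a named feed from a GBFS auto-discovery document."""
    for feed in gbfs_index["data"][language]["feeds"]:
        if feed["name"] == feed_name:
            return feed["url"]
    raise KeyError(f"feed {feed_name!r} is not listed in gbfs.json")


def build_post(entity, rows):
    """Build (but do not send) a POST request to /<entity> on the API service.

    The JSON body format is an assumption; see docs/api for the real contract.
    """
    return urllib.request.Request(
        url=f"{API_BASE}/{entity}",
        data=json.dumps(rows).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def fetch_json(url):
    """Download and decode a JSON document (used for gbfs.json and its feeds)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)
```

A polling loop would then call `fetch_json(GBFS_ROOT)`, pass the result to `find_feed_url(..., "station_status")`, fetch that feed, and send the request returned by `build_post` with `urllib.request.urlopen`.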

Since we're not currently up, here are some fun visuals that show what kind of questions we can answer with the data we've aggregated from Bluebike.

A map of Boston, with BlueBike stations represented as dots. The darker the dot, the more bikes the station has at the moment.

A plot of the average bike availability and the average dock availability for the given station(s) at different hours during the day.
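The visuals themselves are built in Tableau, but the aggregation behind the hourly plot can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each snapshot is a dict using the GBFS `station_status` field names (`last_reported`, `num_bikes_available`, `num_docks_available`), and hours are bucketed in UTC rather than Boston local time.

```python
from collections import defaultdict
from datetime import datetime, timezone


def hourly_availability(snapshots):
    """Average bikes and docks available per hour of day (UTC).

    Returns {hour: (avg_bikes, avg_docks)} with hours in ascending order.
    """
    totals = defaultdict(lambda: [0, 0, 0])  # hour -> [bike sum, dock sum, count]
    for snap in snapshots:
        hour = datetime.fromtimestamp(snap["last_reported"], tz=timezone.utc).hour
        bucket = totals[hour]
        bucket[0] += snap["num_bikes_available"]
        bucket[1] += snap["num_docks_available"]
        bucket[2] += 1
    return {
        hour: (bikes / count, docks / count)
        for hour, (bikes, docks, count) in sorted(totals.items())
    }
```

Filtering the input to one or more station IDs before calling the function would give the per-station view described above.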

- Install MySQL Server on your device, or create one on Google Cloud Platform's Cloud SQL.
  - Recommended: Create two users (beyond just the root connection):
    - one with only read permission (`SELECT`)
    - the other with both read and write permissions (`SELECT` and `INSERT`)

- Install Python 3.x: https://www.python.org/downloads/

- Install `pip`: https://pip.pypa.io/en/stable/installing/

- Install Docker Community Edition: https://docs.docker.com/install/

- Clone this repo: Run `git clone https://github.com/raghavp96/bluebikedata.git` in your terminal

- Use the script in the `docs` folder to create the database in your MySQL server (with a root connection)

- Install the Python `docker` package: Run `pip install docker` in your terminal (make sure your `pip` is for Python 3)

- Go to the `auth` folder and rename each `template-*.json` file to `role-*.json`, then place the appropriate information in each JSON file

- In your terminal, ensure you are in the root directory of the project (where this README is). Run `make start`

- In your browser, visit:

  where you should see JSON displayed on the page

- Run `make stop` to stop all containers
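If you would rather script the renaming step in the `auth` folder than do it by hand, something along these lines should work. It is a small convenience sketch, not part of the repo: the function name `create_role_files` is hypothetical, and you still need to fill in each resulting JSON file yourself.

```python
from pathlib import Path


def create_role_files(auth_dir):
    """Rename each template-*.json in auth_dir to role-*.json.

    Existing role-*.json files are left untouched. Returns the new file names.
    """
    renamed = []
    # sorted() materializes the glob first, so renaming does not disturb iteration.
    for template in sorted(Path(auth_dir).glob("template-*.json")):
        target = template.with_name(template.name.replace("template-", "role-", 1))
        if target.exists():
            continue  # do not overwrite credentials that were already filled in
        template.rename(target)
        renamed.append(target.name)
    return renamed
```

From the project root, `create_role_files("auth")` would perform the rename in one go.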

All of the files in `gcp/` are irrelevant for the purposes of running locally. They are used purely for deployment.

The Deployment Process:

- We build and push the Docker images for all our services into a pre-created project on Google Container Registry.

- Our API service, Auth service, and Data services are then bundled into one Kubernetes Deployment, which we've exposed as a Kubernetes Service.
  - We did this to allow each container to be on the same host network, to mirror our local Docker inter-container communication configuration.

- Our Front End Service is then bundled into its own Kubernetes Deployment, which we've exposed as a separate Kubernetes Service.
  - For security reasons, we whitelist the IPs of machines with access to our Cloud SQL instance.

- Roles - We mention that different services/components will be hitting the API, and that they will assume different roles. This lets us tighten security around which calls a user can make to our API. The roles are:
  - Default - The front end will assume the `default` role, allowing it to make only GET requests
  - Data Creator - The data service will need to be able to query and mutate the DB, and so assumes the `data-creator` role. It will be able to make both GET and POST requests

- Containers - We use containers here, adopting a microservice architecture. This allows for true process isolation, and allows us to increase security.

- `container_manager.py` and `config.json` - Cloned from https://github.com/raghavp96/dockernetes, these enable us to run each service in its own container and attach them all to the same Docker network, so they can still communicate. `config.json` allows us to specify what ports the services are running on.
  - Ex: The API service needs to get DB credentials from the Auth service.
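The role rules described above boil down to a small lookup from role to permitted HTTP methods. The sketch below illustrates that idea only. In this project the restriction is tied to the DB connection the Auth service hands out, so the table and the `is_allowed` helper here are hypothetical names, not code from the repo.

```python
# Assumption: an in-memory table mirroring the two roles described above.
ROLE_METHODS = {
    "default": {"GET"},
    "data-creator": {"GET", "POST"},
}


def is_allowed(role, method):
    """Return True if the given role may issue the given HTTP method."""
    return method.upper() in ROLE_METHODS.get(role, set())
```

Unknown roles fall through to an empty set, so anything not explicitly listed is denied by default.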