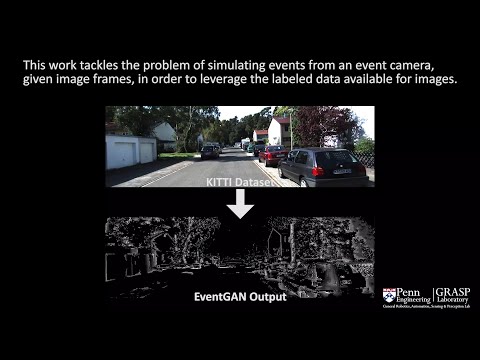

This repo contains the PyTorch code associated with EventGAN: Leveraging Large Scale Image Datasets for Event Cameras.

For additional information, please see the video at https://www.youtube.com/watch?v=Vcm4Iox4H2w.

In this work, we present EventGAN, a method that uses a convolutional neural network to simulate events from a pair of grayscale images. The network takes as input a temporal pair of grayscale images and regresses an event volume that could plausibly have been generated in the time between the two images. Supervision is provided by a combination of an adversarial loss, which classifies event volumes as either real (from the data) or generated by the network, and a pair of cycle consistency losses. The cycle consistency losses are formed through a pair of self-supervised, pre-trained networks that regress optical flow and reconstruct images from events, respectively. When training EventGAN, the weights of these pre-trained networks are frozen, and their self-supervised losses are applied to the generator through them. This constrains the event volumes generated by the network to encode the optical flow and image appearance information contained between the two images.
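The README does not define the event volume itself. As a rough illustration only, the sketch below bins a list of events `(x, y, t, polarity)` into a volume with `num_bins` temporal bins per polarity, splitting each event between its two nearest bins with linear weights. The layout (separate positive and negative channels, linear temporal interpolation) is an assumption and may differ from the representation used in this repo; `events_to_volume` is a hypothetical name.

```python
from typing import List, Sequence, Tuple

Event = Tuple[int, int, float, int]  # (x, y, timestamp, polarity in {-1, +1})


def events_to_volume(
    events: Sequence[Event], num_bins: int, height: int, width: int
) -> List[List[List[float]]]:
    """Hypothetical sketch: bin events into a (2 * num_bins, H, W) volume.

    Channels [0, num_bins) hold positive events and channels
    [num_bins, 2 * num_bins) hold negative events. Each event is split
    between its two nearest temporal bins with linear weights.
    """
    volume = [[[0.0] * width for _ in range(height)] for _ in range(2 * num_bins)]
    if not events:
        return volume
    t_start = min(e[2] for e in events)
    t_end = max(e[2] for e in events)
    span = max(t_end - t_start, 1e-9)  # avoid division by zero for a single timestamp
    for x, y, t, p in events:
        # Map the timestamp onto the continuous bin axis [0, num_bins - 1].
        t_norm = (t - t_start) / span * (num_bins - 1)
        offset = 0 if p > 0 else num_bins
        lower = int(t_norm)
        frac = t_norm - lower
        volume[offset + lower][y][x] += 1.0 - frac
        if lower + 1 < num_bins:
            volume[offset + lower + 1][y][x] += frac
    return volume
```

Because each event contributes a total weight of 1, the volume preserves the event count while still retaining some timing information below the bin resolution.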

Compared to existing model-based simulators, our method is able to simulate arbitrary motion between a pair of frames, while also learning a distribution for event noise. We evaluate our method on two downstream tasks: 2D human pose estimation and object detection. The code for these networks is coming soon.

If you use this work in an academic publication, please cite the following work:

Zhu, Alex Zihao, et al. "EventGAN: Leveraging Large Scale Image Datasets for Event Cameras." arXiv preprint arXiv:1912.01584 (2019).

```bibtex
@article{zhu2019eventgan,
  title={EventGAN: Leveraging Large Scale Image Datasets for Event Cameras},
  author={Zhu, Alex Zihao and Wang, Ziyun and Khant, Kaung and Daniilidis, Kostas},
  journal={arXiv preprint arXiv:1912.01584},
  year={2019}
}
```

For training, we use data from the MVSEC dataset, as well as a newly collected dataset containing event and image data captured in a variety of scenes. Both datasets are stored in HDF5 format. The HDF5 files for MVSEC can be found here: https://drive.google.com/open?id=1gDy2PwVOu_FPOsEZjojdWEB2ZHmpio8D, and the new data can be found here: https://drive.google.com/drive/folders/1Ik7bhQu2BPPuy8o3XqlKXInS7L3olreE?usp=sharing.

By default, the code expects the data to be downloaded into EventGAN/data, e.g. EventGAN/data/event_gan_data/ and EventGAN/data/mvsec/hdf5/.

Pre-trained weights for the final EventGAN model can be found here: https://drive.google.com/drive/folders/1Ik7bhQu2BPPuy8o3XqlKXInS7L3olreE?usp=sharing. By default, the EventGAN model is expected in logs, e.g. logs/EventGAN.
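Before running the demo or training, it can help to confirm that the downloads ended up in the default locations given in this README. The sketch below is a hypothetical helper (`check_layout` is not part of the repo); it assumes the commands are run from the directory that contains the EventGAN checkout, so that both EventGAN/data and logs are resolved relative to that directory.

```python
from pathlib import Path
from typing import Dict

# Default locations from this README, relative to the directory from which
# `python3 EventGAN/...` is run (assumption). Adjust if you store data elsewhere.
EXPECTED_PATHS = {
    "new EventGAN data": Path("EventGAN/data/event_gan_data"),
    "MVSEC hdf5 files": Path("EventGAN/data/mvsec/hdf5"),
    "pre-trained EventGAN model": Path("logs/EventGAN"),
}


def check_layout(root: Path) -> Dict[str, bool]:
    """Hypothetical helper: report which default directories exist under root."""
    return {name: (root / rel).is_dir() for name, rel in EXPECTED_PATHS.items()}


if __name__ == "__main__":
    for name, ok in check_layout(Path(".")).items():
        print(f"{'ok     ' if ok else 'MISSING'}  {name}")
```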

The model can be tested using demo.py. The basic syntax is:

```
python3 EventGAN/demo.py --name EventGAN --model EventGAN
```

This will evaluate the model on the KITTI images stored in EventGAN/example_figs.

Feel free to modify the images read in demo.py, or to further modify the code for another dataset.

To train a new model from scratch, you must first train the optical flow and image reconstruction networks:

```
python3 EventGAN/train.py --name flow --model flow --batch_size 8 --num_workers 8
python3 EventGAN/train.py --name recons --model recons --batch_size 8 --num_workers 8
```

Upon completion, copy the resulting model pickle files into EventGAN/pretrained_models/flow and EventGAN/pretrained_models/recons, respectively.

The EventGAN model can then be trained with the following command:

```
python3 EventGAN/train.py --name EventGAN --model EventGAN --batch_size 8 --num_workers 8 --test_steps 1000 --lr_decay 1. --cycle_recons --cycle_recons_model recons --cycle_flow --cycle_flow_model flow
```

To experiment with different losses, you may omit either of the --cycle_flow and --cycle_recons options, or use the --no_train_gan option to turn off the adversarial loss.
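When running several loss ablations, it can be convenient to generate the commands programmatically. The sketch below is a hypothetical helper that only uses the flags shown in this README; dropping the matching --cycle_*_model flag together with its --cycle_* flag is an assumption, as is giving each run its own --name (presumably so that runs do not share a log directory).

```python
from typing import List


def eventgan_train_command(
    name: str = "EventGAN",
    cycle_flow: bool = True,
    cycle_recons: bool = True,
    train_gan: bool = True,
) -> List[str]:
    """Hypothetical helper: build the EventGAN training command for one ablation."""
    cmd = [
        "python3", "EventGAN/train.py",
        "--name", name, "--model", "EventGAN",
        "--batch_size", "8", "--num_workers", "8",
        "--test_steps", "1000", "--lr_decay", "1.",
    ]
    if cycle_recons:
        # Image reconstruction cycle loss through the frozen recons network.
        cmd += ["--cycle_recons", "--cycle_recons_model", "recons"]
    if cycle_flow:
        # Optical flow cycle loss through the frozen flow network.
        cmd += ["--cycle_flow", "--cycle_flow_model", "flow"]
    if not train_gan:
        # Turn off the adversarial loss.
        cmd.append("--no_train_gan")
    return cmd


# Example: cycle consistency losses only, without the adversarial loss.
print(" ".join(eventgan_train_command(name="EventGAN_no_gan", train_gan=False)))
```

With the default arguments the helper reproduces the full training command above; each list can be passed to `subprocess.run(cmd, check=True)` to launch the run.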

A description of all available parameters can be printed by running:

```
python3 EventGAN/train.py -h
```

To change the training data, edit EventGAN/data/comb_train_files.txt, which lists one sequence and its starting timestamp per line.
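The README only states that the file holds one sequence and a starting timestamp per line. The sketch below assumes each non-empty line is `<sequence> <timestamp>` separated by whitespace, so check an existing line of the file before relying on it. `read_train_list` and `write_train_list` are hypothetical helpers for restricting training to a subset of sequences; timestamps are kept as their original text so that rewriting the file does not change their formatting.

```python
from typing import Iterable, List, Tuple


def read_train_list(text: str) -> List[Tuple[str, str]]:
    """Parse '<sequence> <timestamp>' lines (assumed format); skip blank lines."""
    entries = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        fields = line.split()
        if not fields:
            continue  # ignore blank lines
        if len(fields) != 2:
            raise ValueError(f"line {lineno}: expected 2 fields, got {len(fields)}")
        sequence, timestamp = fields
        float(timestamp)  # validate that the timestamp is numeric; keep the original text
        entries.append((sequence, timestamp))
    return entries


def write_train_list(entries: List[Tuple[str, str]], keep: Iterable[str]) -> str:
    """Return file text containing only the entries whose sequence is in keep."""
    keep_set = set(keep)
    return "".join(f"{seq} {ts}\n" for seq, ts in entries if seq in keep_set)
```

For example, reading the existing file, calling `write_train_list(entries, {"some_sequence"})`, and saving the result as a new list would train on a single sequence (the sequence name here is a placeholder).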

Alex Zihao Zhu, Ziyun Wang, Kaung Khant and Kostas Daniilidis.