Welcome!

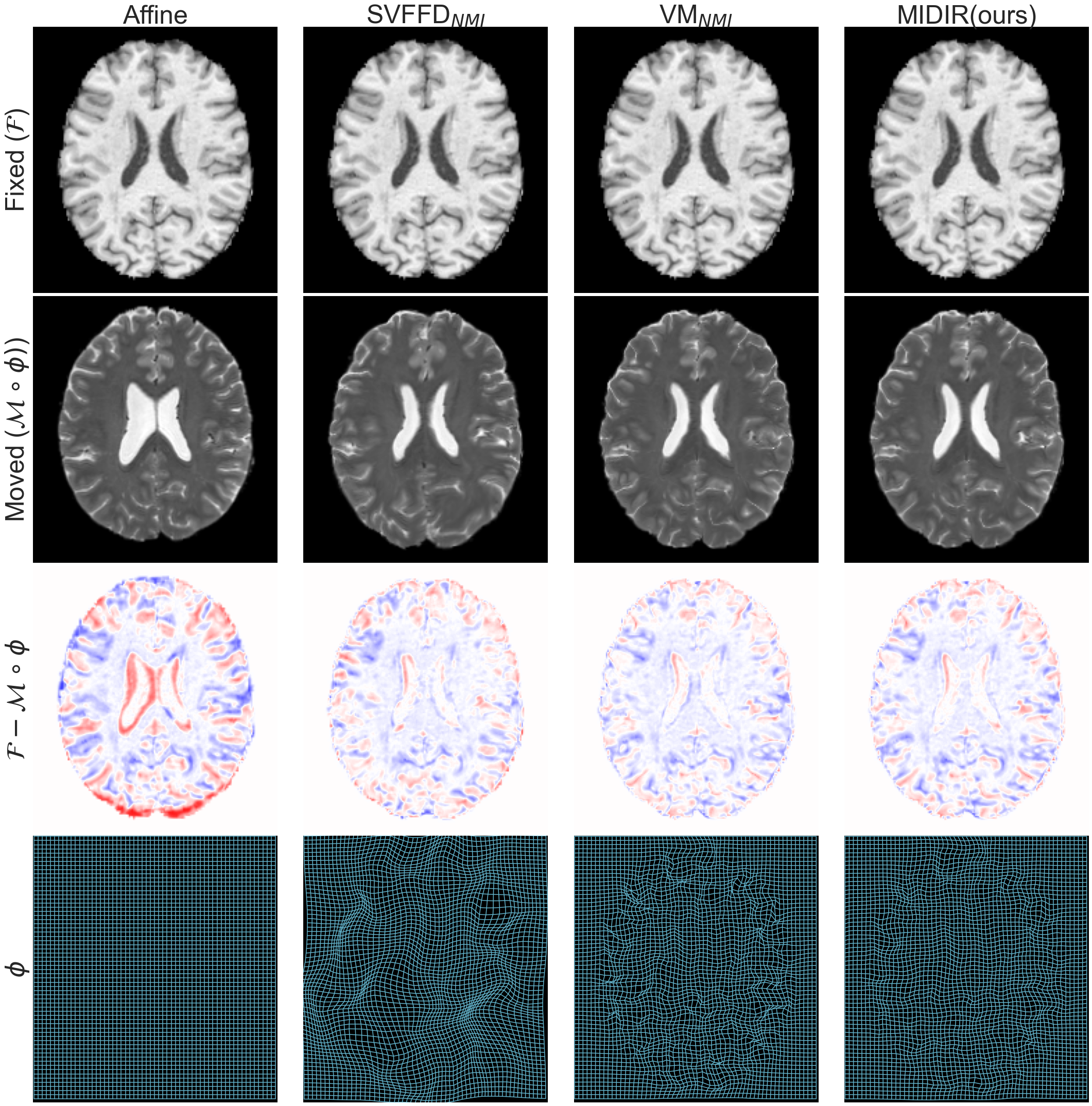

This repository contains code for the Modality-Invariant Diffeomorphic Deep Learning Image Registration (MIDIR) framework presented in the paper (MIDL 2021):

    @inproceedings{qiu2021learning,
      title={Learning Diffeomorphic and Modality-invariant Registration using B-splines},
      author={Huaqi Qiu and Chen Qin and Andreas Schuh and Kerstin Hammernik and Daniel Rueckert},
      booktitle={Medical Imaging with Deep Learning},
      year={2021},
      url={https://openreview.net/forum?id=eSI9Qh2DJhN}
    }

Please consider citing the paper if you use the code in this repository.

- Clone this repository.
- In a fresh Python 3.7.x virtual environment, install the dependencies by running:

      pip install -r <path_to_cloned_repository>/requirements.txt

If you want to run the code on a GPU (recommended), please check your CUDA and cuDNN installations. This code has been tested with CUDA 10.1 and cuDNN 7.6.5. Later versions should be backward-compatible, but this is not guaranteed.

To install the PyTorch build we used, which ships with the matching CUDA and cuDNN versions:

    pip install torch==1.5.1+cu101 torchvision==0.6.1+cu101 -f https://download.pytorch.org/whl/torch_stable.html
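Before training, it can help to confirm which PyTorch build is installed and whether it can see a GPU. The helper below is a minimal sketch of our own (the name `describe_gpu_setup` is hypothetical and not part of this repository); it relies only on the standard `torch.version.cuda`, `torch.backends.cudnn.version()` and `torch.cuda.is_available()` calls and reports a missing PyTorch installation instead of crashing.

```python
import importlib.util


def describe_gpu_setup():
    """Summarise the PyTorch/CUDA/cuDNN setup, or report that PyTorch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"torch": None, "cuda_build": None, "cudnn": None, "gpu_available": False}
    import torch

    return {
        "torch": torch.__version__,                # e.g. "1.5.1+cu101" for the build above
        "cuda_build": torch.version.cuda,          # CUDA version PyTorch was built with (None on CPU builds)
        "cudnn": torch.backends.cudnn.version(),   # cuDNN version as an int, or None if unavailable
        "gpu_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in describe_gpu_setup().items():
        print(f"{key}: {value}")
```

If `gpu_available` is `False` on a machine with a GPU, the installed wheel and the local driver are the first things to compare.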

The repository is organised as follows:

- `conf`: Hydra configuration for training deep learning models
- `conf_inference`: Hydra configuration for inference
- `data`: data loading
- `model`:
  - `lightning.py`: the LightningModule which puts everything together
  - `loss.py`: image similarity loss and transformation regularity loss
  - `network.py`: dense and B-spline model networks
  - `transformation.py`: dense and B-spline parameterised SVF transformation modules
  - `utils.py`: main configuration parsing is in here
- `utils`: some handy utility functions
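`transformation.py` parameterises a stationary velocity field (SVF) either densely or with B-splines. To illustrate the idea, here is a 1D sketch under our own assumptions: cubic B-spline control points are evaluated into a dense velocity field, which is then integrated with scaling and squaring, a standard way to turn an SVF into a diffeomorphic displacement. All function names, the control-point padding convention and the number of squaring steps are hypothetical; the repository works on 2D/3D tensors, so check `transformation.py` for what it actually does.

```python
import math


def cubic_bspline_weights(t):
    """Uniform cubic B-spline basis weights for a fractional offset t in [0, 1)."""
    return (
        (1 - t) ** 3 / 6,
        (3 * t**3 - 6 * t**2 + 4) / 6,
        (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6,
        t**3 / 6,
    )


def bspline_to_dense(control_points, spacing, size):
    """Evaluate a 1D cubic B-spline at the integer positions 0..size-1.

    Assumed convention: control point k sits at position (k - 1) * spacing,
    i.e. one padding point before the origin, so at least
    (size - 1) // spacing + 4 control points are required.
    """
    needed = (size - 1) // spacing + 4
    if len(control_points) < needed:
        raise ValueError(f"need at least {needed} control points, got {len(control_points)}")
    dense = []
    for x in range(size):
        # x lies in [j * spacing, (j + 1) * spacing), influenced by control points j..j+3
        j, r = divmod(x, spacing)
        w = cubic_bspline_weights(r / spacing)
        dense.append(sum(w[l] * control_points[j + l] for l in range(4)))
    return dense


def sample_linear(field, pos):
    """Linearly interpolate a 1D field at a real-valued position, clamped to the grid."""
    pos = min(max(pos, 0.0), len(field) - 1.0)
    i = min(int(math.floor(pos)), len(field) - 2)
    frac = pos - i
    return (1 - frac) * field[i] + frac * field[i + 1]


def scaling_and_squaring(velocity, steps=6):
    """Integrate a stationary velocity field into a displacement field.

    Starts from u = v / 2**steps and applies u(x) <- u(x) + u(x + u(x))
    `steps` times, i.e. composes the small deformation with itself.
    """
    disp = [v / 2**steps for v in velocity]
    for _ in range(steps):
        # The right-hand side reads the previous `disp` before it is rebound.
        disp = [d + sample_linear(disp, x + d) for x, d in enumerate(disp)]
    return disp


if __name__ == "__main__":
    size, spacing = 32, 4
    n_cp = (size - 1) // spacing + 4
    # Hypothetical smooth control-point velocities
    cps = [0.5 * math.sin(2 * math.pi * k / n_cp) for k in range(n_cp)]
    disp = scaling_and_squaring(bspline_to_dense(cps, spacing, size))
    warped = [x + d for x, d in enumerate(disp)]
    # In 1D, a folding-free deformation maps positions in strictly increasing order.
    print("strictly increasing:", all(b > a for a, b in zip(warped, warped[1:])))
```

Larger control point spacing gives fewer parameters and smoother fields, which is why it appears among the hyper-parameters worth tuning.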

We use Hydra for structured configurations.

Each directory in conf/ is a config group which contains alternative configurations for that group.

The final configuration is the composition of all config groups.

The default options for the groups are set in conf/config.yaml.
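The composition idea can be pictured with plain dictionaries. This is a toy model, not Hydra's API: the option tables stand in for the YAML files under `conf/<group>/`, and every key and value in them is a hypothetical placeholder rather than the repository's actual setting.

```python
import copy

# Hypothetical stand-ins for conf/<group>/<option>.yaml; keys and values are
# placeholders, not the repository's actual settings.
CONFIG_GROUPS = {
    "loss": {
        "lncc": {"sim_loss": "lncc", "reg_loss": "diffusion", "reg_weight": 1.0},
        "mse": {"sim_loss": "mse", "reg_loss": "diffusion", "reg_weight": 1.0},
        "nmi": {"sim_loss": "nmi", "reg_loss": "diffusion", "reg_weight": 1.0},
    },
    "transformation": {
        "dense": {"type": "dense"},
        "bspline": {"type": "bspline", "cp_spacing": 4},
    },
}

# Hypothetical default choice per group, playing the role of conf/config.yaml.
DEFAULTS = {"loss": "lncc", "transformation": "bspline"}


def compose(defaults, groups, choices=None):
    """Select one option per config group and nest it under the group name."""
    selected = {**defaults, **(choices or {})}
    unknown = set(selected) - set(groups)
    if unknown:
        raise KeyError(f"unknown config groups: {sorted(unknown)}")
    # Deep copies keep the composed config independent of the option tables.
    return {group: copy.deepcopy(groups[group][option]) for group, option in selected.items()}


if __name__ == "__main__":
    # Roughly what `python train.py loss=mse` selects.
    print(compose(DEFAULTS, CONFIG_GROUPS, {"loss": "mse"}))
```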

To use a different configuration for a group, for example the loss function:

    python train.py loss=<lncc/mse/nmi> ...

Any configuration in this structure can be overwritten from the command line at runtime. For example, to change the regularisation weight:

    python train.py loss.reg_weight=<your_reg_weight> ...
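Dotted overrides such as `loss.reg_weight=<your_reg_weight>` address a nested key in the composed config. The sketch below mimics that behaviour on a plain dictionary; it is our own simplification, not Hydra's override grammar (which also supports lists, ranges and `+`/`~` prefixes). Like Hydra by default, it rejects keys that do not already exist. The names `apply_overrides` and `parse_value` and the example config are hypothetical.

```python
import ast
import copy


def parse_value(text):
    """Interpret an override value as a Python literal if possible, else keep it as a string."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return text


def apply_overrides(config, overrides):
    """Apply 'a.b.c=value' style overrides to a nested dict and return a modified copy."""
    result = copy.deepcopy(config)
    for item in overrides:
        key, sep, raw = item.partition("=")
        if not sep:
            raise ValueError(f"override must look like key=value: {item!r}")
        *parents, leaf = key.split(".")
        node = result
        for name in parents:
            node = node[name]  # KeyError for unknown groups
        if leaf not in node:
            raise KeyError(f"unknown config key: {key}")
        node[leaf] = parse_value(raw)
    return result


if __name__ == "__main__":
    base = {"loss": {"reg_loss": "diffusion", "reg_weight": 0.1}, "meta": {"gpu": 0}}
    print(apply_overrides(base, ["loss.reg_weight=0.5", "meta.gpu=1"]))
```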

See Hydra documentation for more details.

- Create your own data configuration file `conf/data/your_data.yaml`, specifying the paths to your training and validation data as well as other configurations. Your data should be organised as one subject per directory.
- The configurations are parsed to construct the `Dataset` in `model/utils.py` and the `DataLoader` in `model/lightning.py`.
- For 3D brain image inter-subject registration, see `conf/data/brain_camcan.yaml` for reference.
- For 2D cardiac image intra-subject (frame-to-frame) registration, see `conf/data/cardiac_ukbb.yaml` for reference.
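With one subject per directory, building registration pairs comes down to listing subject directories and choosing which images to align. The sketch below is a hypothetical illustration of the two settings mentioned above (inter-subject pairs of different subjects, and frame-to-frame pairs within one subject); it is not the `Dataset` in `model/utils.py`, and the frame-pairing scheme in particular is only one possible choice.

```python
import os
import random


def list_subjects(data_dir):
    """Return the sorted names of subject directories (one subject per directory)."""
    return sorted(
        name for name in os.listdir(data_dir)
        if os.path.isdir(os.path.join(data_dir, name))
    )


def sample_inter_subject_pair(subjects, rng):
    """Pick a (target, source) pair of two different subjects for inter-subject registration."""
    if len(subjects) < 2:
        raise ValueError("inter-subject registration needs at least two subjects")
    target, source = rng.sample(subjects, 2)
    return target, source


def frame_pairs(num_frames, target_frame=0):
    """One possible intra-subject scheme: register every other frame to a fixed target frame."""
    return [(target_frame, f) for f in range(num_frames) if f != target_frame]
```

For example, `sample_inter_subject_pair(list_subjects("<your_train_dir>"), random.Random(0))` returns two distinct subject names; which image files are read inside each directory is set by your data configuration.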

The code in this repository provides generic building blocks for deep learning registration, so you can build your own registration model by combining configurations from the following groups:

- `conf/loss`: image similarity loss function configurations
- `conf/network`: CNN-based network configurations
- `conf/transformation`: transformation model configurations
- `conf/training`: training configurations

If you don't have anatomical segmentations for validation, you can switch off the related metric evaluation by removing `"image_metrics"` from `meta.metric_groups` and `"mean_dice"` from `meta.hparam_metrics` in `config.yaml`.
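The `conf/loss` group pairs an image similarity loss with a transformation regularity loss weighted by `loss.reg_weight`. The pure-Python sketch below shows that weighted sum, assuming MSE for similarity and a diffusion regulariser (the mean squared forward difference of each displacement component). The function names are hypothetical, and the repository's LNCC/NMI losses, regulariser choices and normalisation may differ from this sketch.

```python
def mse(a, b):
    """Mean squared intensity difference between two equally sized 2D images (nested lists)."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def diffusion_regulariser(disp):
    """Mean squared forward-difference gradient of a 2D displacement field.

    `disp` is a list of components (e.g. [u_x, u_y]), each an H x W nested list.
    """
    total, count = 0.0, 0
    for comp in disp:
        h, w = len(comp), len(comp[0])
        for i in range(h):
            for j in range(w):
                if i + 1 < h:  # vertical difference
                    total += (comp[i + 1][j] - comp[i][j]) ** 2
                    count += 1
                if j + 1 < w:  # horizontal difference
                    total += (comp[i][j + 1] - comp[i][j]) ** 2
                    count += 1
    return total / count if count else 0.0


def registration_loss(warped, target, disp, reg_weight):
    """Similarity term plus regularity term scaled by reg_weight."""
    return mse(warped, target) + reg_weight * diffusion_regulariser(disp)
```

A larger `reg_weight` trades image alignment for smoother deformations, which is why it is the first hyper-parameter to sweep.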

To train the default model on your own data:

    python train.py hydra.run.dir=<model_dir> \
      data=<your_data> \
      meta.gpu=<gpu_num>

Training logs and outputs will be saved in `model_dir`.

With the default settings, a checkpoint of the model will be saved at `model_dir/checkpoints/last.ckpt`.

A copy of the configurations will be saved to model_dir/.hydra automatically.
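After a run, a quick check that the expected files exist can save a failed inference call later. In this sketch the checkpoint path follows the default described above, while the `.hydra/config.yaml` and `.hydra/overrides.yaml` names are the files Hydra usually writes; the helper name `run_artifacts` is hypothetical.

```python
from pathlib import Path


def run_artifacts(model_dir):
    """Map each expected output of a training run to its path, or None if it is missing."""
    model_dir = Path(model_dir)
    expected = {
        "checkpoint": model_dir / "checkpoints" / "last.ckpt",  # default checkpoint location
        "config": model_dir / ".hydra" / "config.yaml",         # composed configuration
        "overrides": model_dir / ".hydra" / "overrides.yaml",   # command-line overrides
    }
    return {name: (path if path.is_file() else None) for name, path in expected.items()}
```

The `checkpoint` entry is the value to pass as `model.ckpt_path` at inference time.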

As mentioned above, you can overwrite any configuration from the command line at runtime, or change the default values in `conf/`.

To tune hyper-parameters such as the regularisation loss weight, the B-spline control point spacing or the number of network channels, you can use Hydra's sweep (multirun) feature. For example, to tune the regularisation weight:

    python train.py \
      -m hydra.sweep.dir=<sweep_parent_dir> \
      hydra.sweep.subdir=\${loss.reg_loss}_\${loss.reg_weight} \
      loss.reg_weight=<weight1,weight2,weight3,...> \
      meta.gpu=<gpu_num>
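In multirun mode, Hydra's basic sweeper launches one run per combination of the comma-separated values, and the `\$` escapes stop the shell from expanding `${...}` so that Hydra can fill in the per-run subdirectory name itself. The sketch below imitates both steps for illustration only; the helpers are hypothetical and `diffusion` is a placeholder value for `loss.reg_loss`.

```python
import itertools


def expand_sweep(sweep_params):
    """Expand {'key': 'v1,v2'} into one override list per run (cartesian product)."""
    keys = list(sweep_params)
    value_lists = [sweep_params[k].split(",") for k in keys]
    return [
        [f"{k}={v}" for k, v in zip(keys, combo)]
        for combo in itertools.product(*value_lists)
    ]


def sweep_subdir(overrides, base):
    """Mimic hydra.sweep.subdir=${loss.reg_loss}_${loss.reg_weight} for one run."""
    values = dict(item.split("=", 1) for item in overrides)
    merged = {**base, **values}
    return f"{merged['loss.reg_loss']}_{merged['loss.reg_weight']}"


if __name__ == "__main__":
    for run in expand_sweep({"loss.reg_weight": "0.1,0.5,1.0"}):
        print(run, "->", sweep_subdir(run, {"loss.reg_loss": "diffusion"}))
```

Sweeping several keys at once multiplies the number of runs, so it is worth starting with one hyper-parameter at a time.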

To run inference with a trained model:

    python inference.py hydra.run.dir=<output_dir> \
      model=dl \
      data=<inference_data> \
      model.ckpt_path=<path_to_model_checkpoint> \
      gpu=<gpu_num>

A different set of configuration files is used for inference (see `conf_inference/`).
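When inference is scripted, for example over several checkpoints, it can be convenient to build the command as an argument list. This is a hypothetical helper that reproduces the overrides of the inference command shown above; the `.ckpt` suffix check is our own assumption based on the default checkpoint name.

```python
import sys
from pathlib import Path


def inference_command(output_dir, inference_data, ckpt_path, gpu):
    """Build the argv for inference.py using the overrides from the inference command."""
    ckpt = Path(ckpt_path)
    if ckpt.suffix != ".ckpt":
        raise ValueError(f"expected a .ckpt checkpoint, got {ckpt}")
    return [
        sys.executable, "inference.py",
        f"hydra.run.dir={output_dir}",
        "model=dl",
        f"data={inference_data}",
        f"model.ckpt_path={ckpt}",
        f"gpu={gpu}",
    ]
```

From the repository root, the list can be passed to `subprocess.run(cmd, check=True)`; passing a list rather than a shell string avoids quoting problems with paths.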

We believe in reproducibility and open datasets in research. Unfortunately, we are not allowed to share the original or processed data that we used in the paper directly due to limitations on redistribution put in place by the original data distributor. But you can apply to download these data at:

We also encourage you to try our framework on other applicable and accessible open datasets, and share your findings!

If you have any questions or need help running the code, feel free to open an issue or email us at: huaqi.qiu15@imperial.ac.uk