Code for the paper Bilevel Optimization: Nonasymptotic Analysis and Faster Algorithms.

Our hyperparameter optimization implementation is built on HyperTorch. On top of it, we propose the stoc-BiO algorithm, which performs better than other bilevel algorithms.

The implementation of stoc-BiO is located in two experiment scripts, l2reg_on_twentynews.py and mnist_exp.py. We will soon implement stoc-BiO as a class for independent use!

Our meta-learning part is built on learn2learn, where we implement the bilevel optimizer ITD-BiO and show that it converges faster than MAML and ANIL. Note that we also implement first-order ITD-BiO (FO-ITD-BiO), which does not compute the derivative of the inner-loop output with respect to the feature parameters, i.e., it removes all Jacobian-vector and Hessian-vector calculations. It turns out that FO-ITD-BiO is even faster without sacrificing overall prediction accuracy.
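To make the difference between ITD-BiO and FO-ITD-BiO concrete, here is a minimal sketch on a toy scalar problem. It is not the learn2learn-based implementation in this repo. It assumes a single feature parameter `phi`, a single inner-loop weight `w`, squared losses, and derivatives worked out by hand; every function name and constant (`inner_loop`, `hypergradients`, `x_tr`, `y_val`, ...) is hypothetical and chosen only for illustration.

```python
def inner_loop(phi, w0=0.0, steps=5, lr=0.1, x_tr=1.5, y_tr=2.0):
    """Run gradient steps on g(phi, w) = 0.5 * (phi * x_tr * w - y_tr)**2.

    Returns the final w together with dw/dphi, which is propagated by hand
    through every inner step (the quantity ITD needs and first-order skips).
    """
    w, dw_dphi = w0, 0.0
    for _ in range(steps):
        residual = phi * x_tr * w - y_tr
        grad_w = residual * phi * x_tr
        # Total derivative of grad_w with respect to phi, including w's dependence on phi.
        d_residual = x_tr * w + phi * x_tr * dw_dphi
        d_grad_w = d_residual * phi * x_tr + residual * x_tr
        w, dw_dphi = w - lr * grad_w, dw_dphi - lr * d_grad_w
    return w, dw_dphi


def outer_loss(phi, w, x_val=0.8, y_val=1.0):
    """Outer (validation) loss f(phi, w) = 0.5 * (phi * x_val * w - y_val)**2."""
    return 0.5 * (phi * x_val * w - y_val) ** 2


def hypergradients(phi, x_val=0.8, y_val=1.0):
    """Return (ITD-style gradient, first-order gradient) of the outer loss in phi."""
    w, dw_dphi = inner_loop(phi)
    residual = phi * x_val * w - y_val
    direct = residual * x_val * w                 # partial f / partial phi, w held fixed
    indirect = residual * phi * x_val * dw_dphi   # (partial f / partial w) * dw/dphi
    return direct + indirect, direct
```

Calling `hypergradients(0.7)` returns both quantities. The first includes the term that flows back through the inner loop; the second keeps only the direct partial derivative and treats the inner-loop output as a constant, which is why the first-order variant avoids the second-order computations.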

Below, we present experiments that demonstrate the improved performance of the proposed stoc-BiO algorithm.

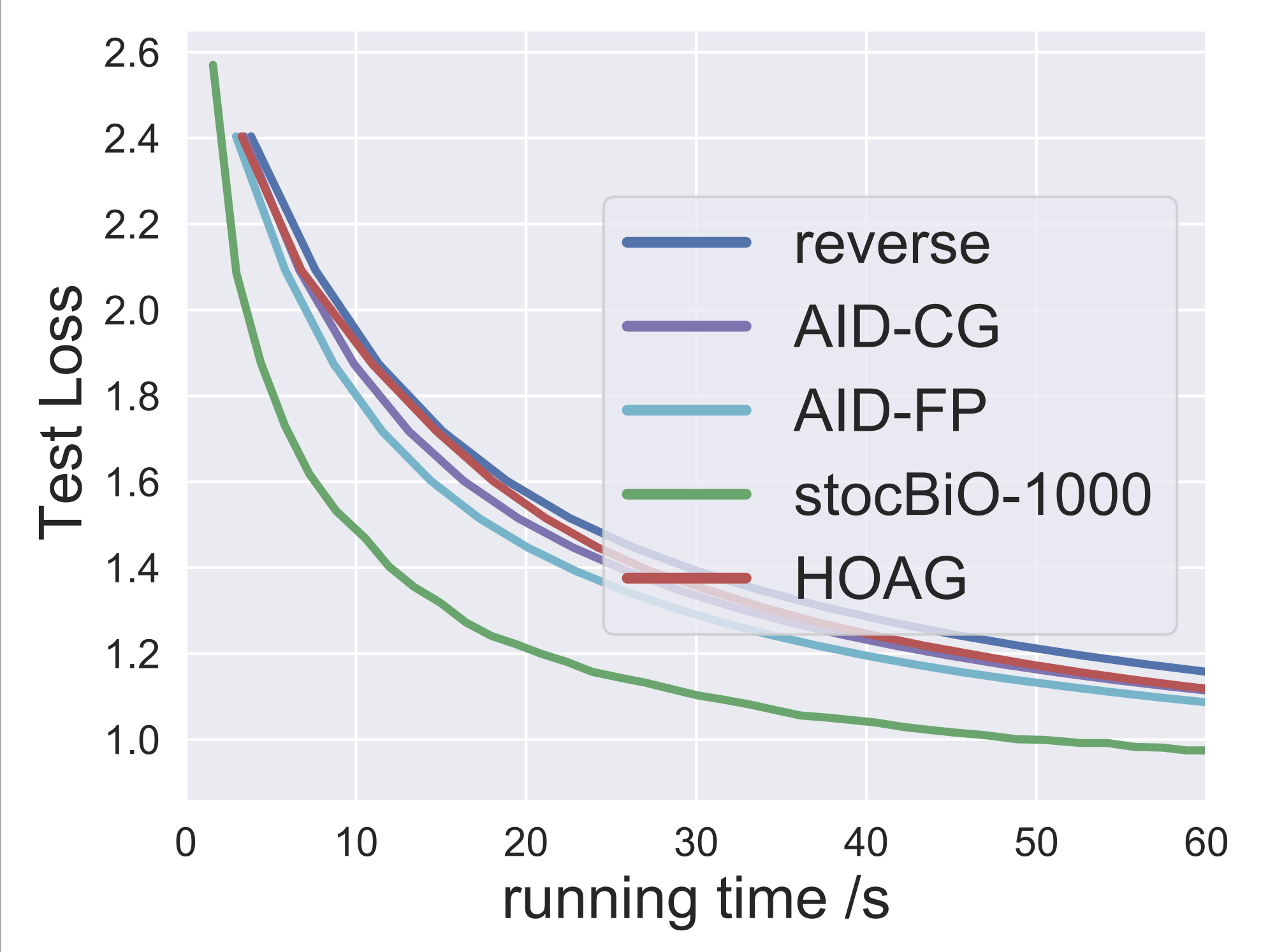

We compare our algorithm with several baseline hyperparameter optimization algorithms on the 20 Newsgroups dataset:

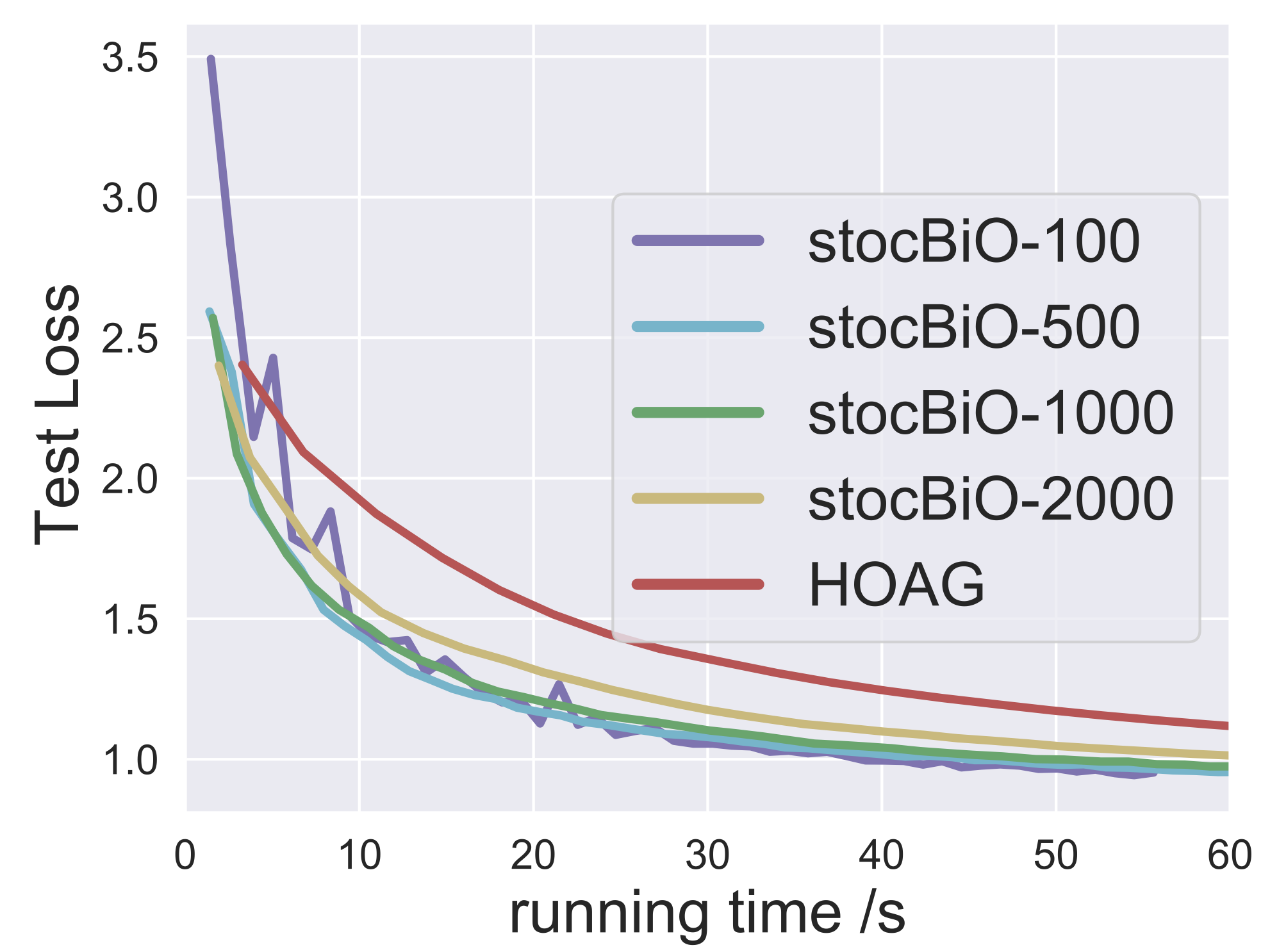

We evaluate the performance of our algorithm with respect to different batch sizes:

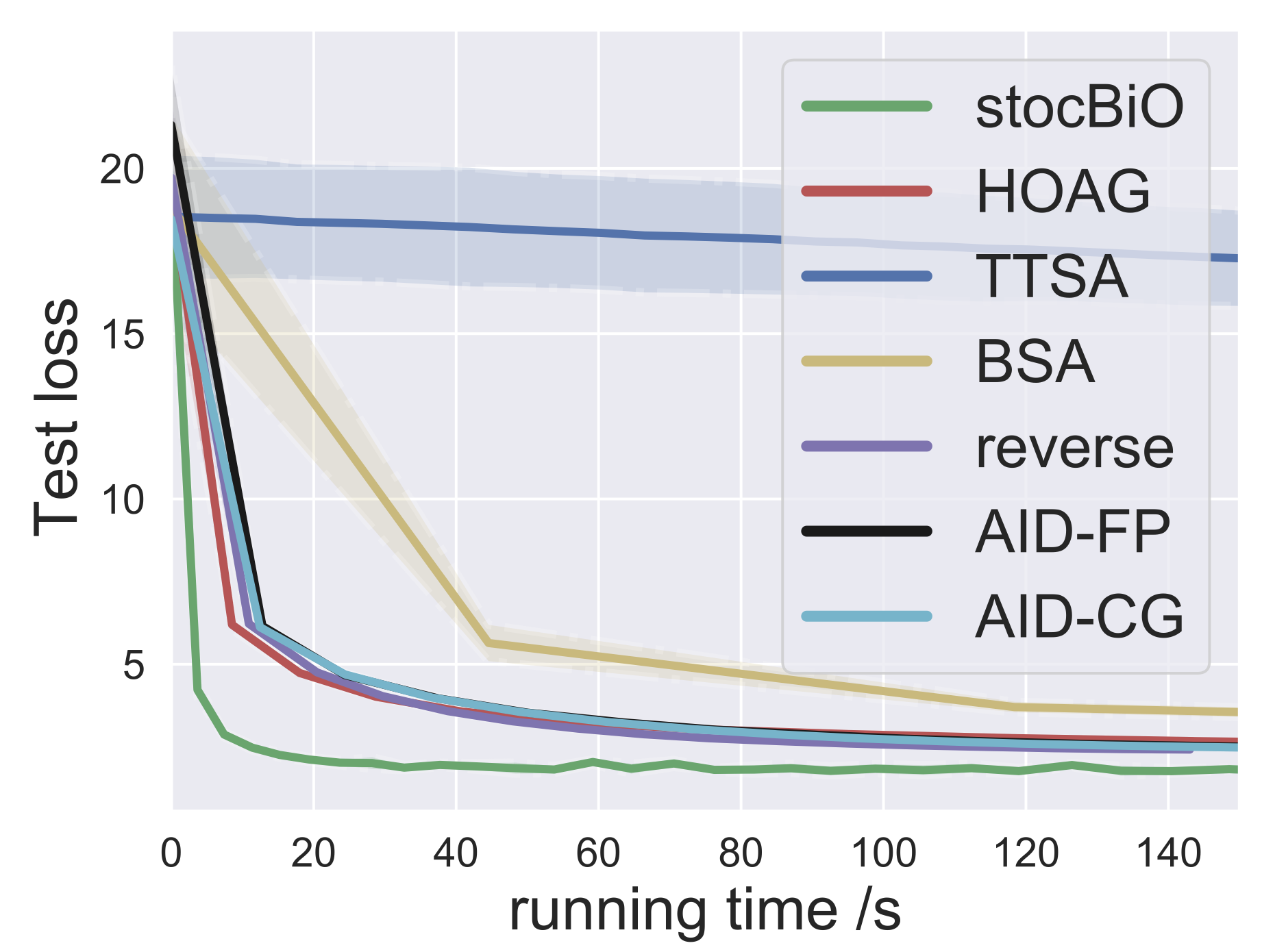

Comparison results on the MNIST dataset:

This repo is still under construction, and any comments are welcome!