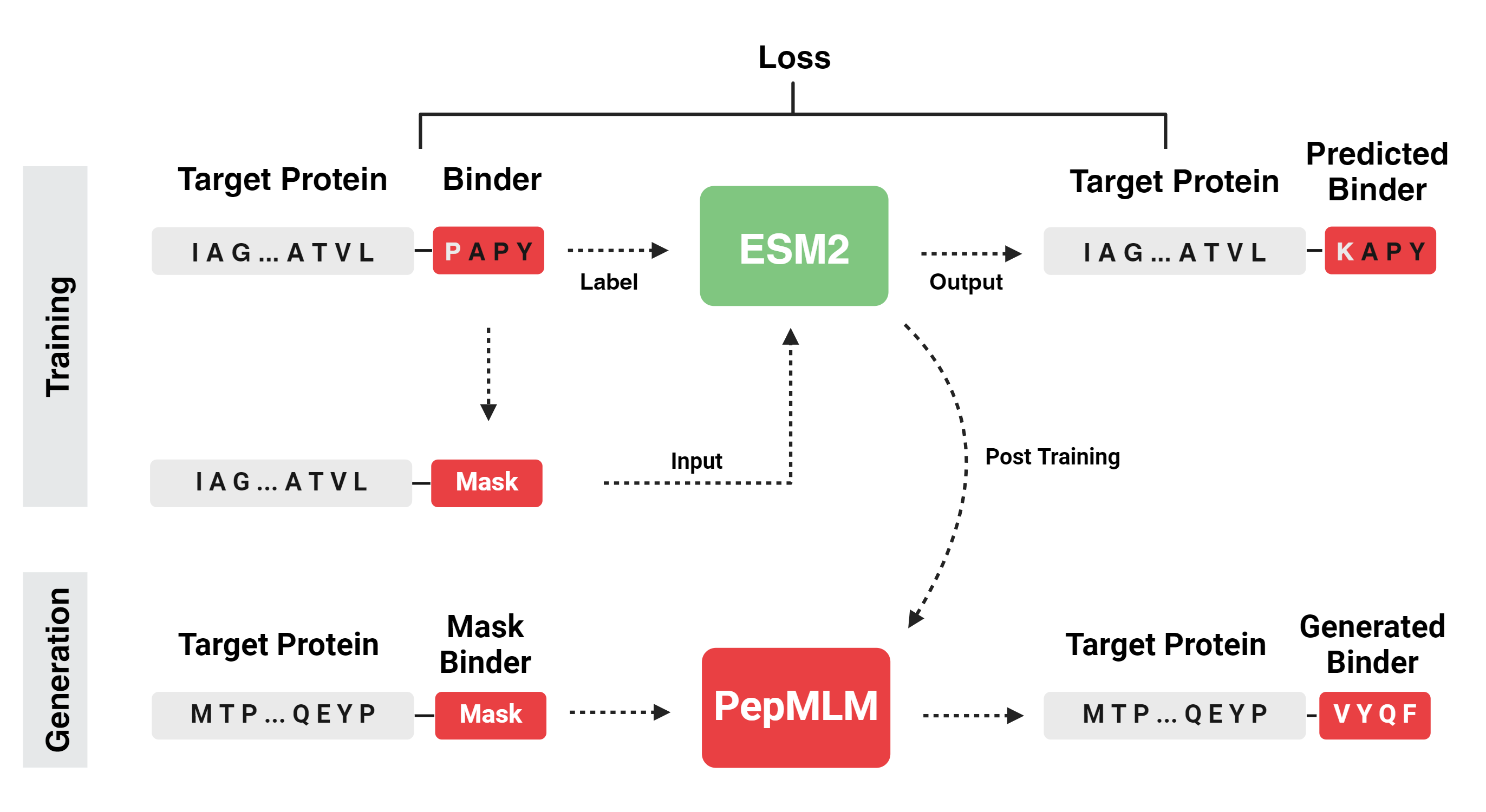

In this work, we introduce PepMLM, a purely target sequence-conditioned de novo generator of linear peptide binders. By employing a novel masking strategy that uniquely positions cognate peptide sequences at the terminus of target protein sequences, PepMLM tasks the state-of-the-art ESM-2 pLM to fully reconstruct the binder region, achieving low perplexities matching or improving upon previously-validated peptide-protein sequence pairs. After successful in silico benchmarking with AlphaFold-Multimer, we experimentally verify PepMLM's efficacy via fusion of model-derived peptides to E3 ubiquitin ligase domains, demonstrating endogenous degradation of target substrates in cellular models. In total, PepMLM enables the generative design of candidate binders to any target protein, without the requirement of target structure, empowering downstream programmable proteome editing applications.
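The masking strategy described above can be sketched in a few lines. This is an illustrative sketch rather than the released implementation: it assumes the binder region is appended directly after the target sequence and that the tokenizer uses the ESM-2 mask token `<mask>`. The helper names `build_masked_input` and `perplexity` are hypothetical.

```python
import math

# Assumption: ESM-2 tokenizers represent a masked position as "<mask>".
MASK_TOKEN = "<mask>"


def build_masked_input(target_sequence: str, peptide_length: int) -> str:
    """Append `peptide_length` mask tokens to the end of the target sequence.

    The masked region at the terminus is what the model is asked to
    reconstruct as a candidate binder.
    """
    if peptide_length <= 0:
        raise ValueError("peptide_length must be a positive integer")
    return target_sequence + MASK_TOKEN * peptide_length


def perplexity(token_log_probs: list[float]) -> float:
    """Perplexity of a binder given natural-log probabilities per residue.

    Computed as exp of the negative mean log-probability; lower values
    mean the model finds the binder more plausible for the target.
    """
    if not token_log_probs:
        raise ValueError("token_log_probs must not be empty")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))
```

For example, `build_masked_input("MKTAYIAK", 3)` produces a string with three masked positions after the target, which can then be tokenized and passed to the masked language model.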

Check out our manuscript on the arXiv!

As of February 2024, the model is gated on HuggingFace. To use it, please visit our model page on HuggingFace and submit an access request there. An active HuggingFace account is required both to apply and to use the model afterwards. Approval may take a few days, as we are a small lab with a manual approval process.

Once your request is approved, you will need your personal access token to begin using this notebook. We appreciate your understanding.

- How to find your access token: https://huggingface.co/docs/hub/en/security-tokens

```python
# Load model directly
from transformers import AutoTokenizer, AutoModelForMaskedLM

tokenizer = AutoTokenizer.from_pretrained("TianlaiChen/PepMLM-650M")
model = AutoModelForMaskedLM.from_pretrained("TianlaiChen/PepMLM-650M")
```
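Because the model is gated, the load call needs to be authenticated. One way to do this, sketched below, is to pass the access token explicitly through the `token` argument of `from_pretrained`. The environment variable name `HF_TOKEN` and the helpers `get_hf_token` and `load_pepmlm` are assumptions made for illustration, not part of the original notebook.

```python
import os


def get_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Read the personal access token from an environment variable.

    Assumption: the token was exported beforehand, e.g. `export HF_TOKEN=hf_...`.
    """
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(f"Set {env_var} to your HuggingFace access token")
    return token


def load_pepmlm(token: str, model_id: str = "TianlaiChen/PepMLM-650M"):
    """Load the gated tokenizer and model using an explicit access token."""
    # Imported here so the helpers above work without transformers installed.
    from transformers import AutoTokenizer, AutoModelForMaskedLM

    tokenizer = AutoTokenizer.from_pretrained(model_id, token=token)
    model = AutoModelForMaskedLM.from_pretrained(model_id, token=token)
    return tokenizer, model
```

Usage would be `tokenizer, model = load_pepmlm(get_hf_token())` once the access request has been approved. Reading the token from the environment keeps it out of the notebook itself.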

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Contact: pranam.chatterjee@duke.edu

@article{chen2023pepmlm,
  title={PepMLM: Target Sequence-Conditioned Generation of Peptide Binders via Masked Language Modeling},
  author={Chen, Tianlai and Pertsemlidis, Sarah and Kavirayuni, Venkata Srikar and Vure, Pranay and Pulugurta, Rishab and Hsu, Ashley and Vincoff, Sophia and Yudistyra, Vivian and Hong, Lauren and Wang, Tian and others},
  journal={ArXiv},
  year={2023},
  publisher={arXiv}
}