This project is part of the Udacity Azure ML Nanodegree. In this project, we build and optimize an Azure ML pipeline using the Python SDK and a provided Scikit-learn model. This model is then compared to an Azure AutoML run.

Explain the problem statement:

I worked with the Bank Marketing dataset, which contains information about a previous marketing campaign run by a Portuguese banking institution: attributes of the people the campaign contacted, and whether each client subscribed to a term deposit product (a binary outcome).

We seek to predict that outcome. This can be helpful in developing future marketing campaigns.

Explain the solution: I obtained 91.454% accuracy with Hyperdrive and logistic regression. The best model that AutoML found was a VotingEnsemble with an accuracy of 91.587%, about 0.13 percentage points higher, making VotingEnsemble the best performing model.

Explain the pipeline architecture, including data, hyperparameter tuning, and classification algorithm.

- I start by defining the training script for the estimator required by Hyperdrive. This is done in a separate script called `train.py`, which uses Logistic Regression from Scikit-learn. The script works as follows:

  - A `clean_data` function is defined, which cleans missing data and one-hot encodes categorical data.

  - I created a TabularDataset using TabularDatasetFactory.

  - I cleaned that data using the `clean_data` function.

  - Then I split the data into training and test sets.

  - Then I train the Logistic Regression model from Scikit-learn.

  - Finally, I calculate the primary metric to be optimised, i.e. Accuracy.

- This was followed by defining the sampling method over the search space using RandomParameterSampling.

- I then defined my early termination policy using BanditPolicy.

- Keeping Accuracy as my primary metric I increased the max_total_runs to 20 and defined my HyperDriveConfig.

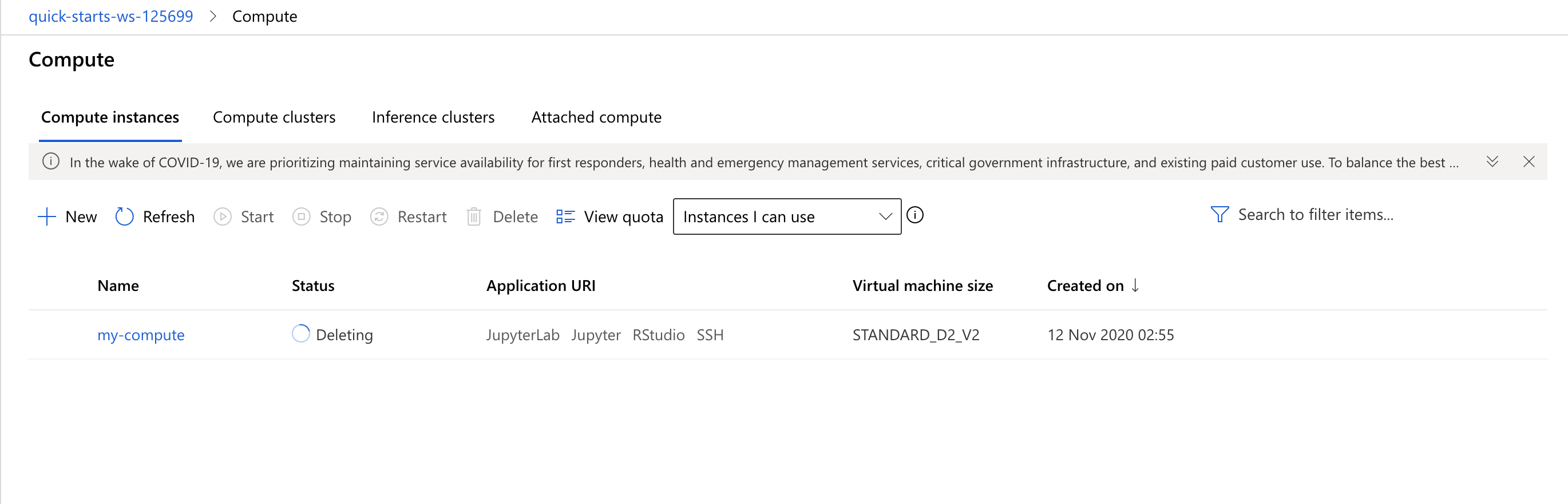

- I then run Hyperdrive.

It gave me the following hyperparameters for my Logistic Regression model:

- Regularization Strength - 0.04488915687178386

- Max Iterations - 900
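The data-preparation step of the training script (dropping missing data, then one-hot encoding categorical columns) can be illustrated with a small dependency-free sketch. This is not the project's actual `clean_data`, which works on the Azure dataset. The function signature, the column names `age`, `marital` and `y`, and the sample rows are all hypothetical.

```python
def clean_data(rows, categorical_columns):
    """Drop rows with missing values, then one-hot encode the given columns.

    Illustrative stand-in only: `rows` is a list of dicts, one per client.
    """
    # Keep only rows in which every field has a value.
    complete = [r for r in rows if all(v is not None for v in r.values())]

    # Collect the categories seen in each categorical column, sorted so the
    # generated column names are deterministic.
    categories = {
        col: sorted({r[col] for r in complete}) for col in categorical_columns
    }

    encoded = []
    for r in complete:
        # Copy the non-categorical fields unchanged.
        out = {k: v for k, v in r.items() if k not in categorical_columns}
        # Add one 0/1 indicator column per category value.
        for col, values in categories.items():
            for value in values:
                out[f"{col}_{value}"] = 1 if r[col] == value else 0
        encoded.append(out)
    return encoded


# Hypothetical sample rows, not taken from the real dataset.
sample = [
    {"age": 35, "marital": "married", "y": 1},
    {"age": 41, "marital": "single", "y": 0},
    {"age": None, "marital": "married", "y": 0},  # dropped: missing age
]
cleaned = clean_data(sample, ["marital"])
```

After cleaning, each remaining row carries one indicator column per category, which is the form a logistic regression model can consume directly.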

What are the benefits of the parameter sampler you chose? Random sampling supports both discrete and continuous hyperparameters. Hyperparameter values are randomly selected from the defined search space, and low-performing runs can be terminated early. Unlike grid sampling, which only covers discrete values, this gives us a wide exploratory range, which is useful when we don't have much idea about good parameter values. It can also be used to do an initial search, after which the search space is refined to improve results.
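To make the idea of random sampling concrete, here is a minimal stdlib sketch of drawing configurations from a mixed continuous and discrete search space. It does not use the Azure ML SDK. The parameter names `C` and `max_iter` and the ranges below are assumptions chosen for illustration, since the actual search space is not listed in this write-up.

```python
import random

# Hypothetical search space: a continuous range for the regularization
# parameter and a discrete set of iteration limits.
search_space = {
    "C": ("uniform", 0.01, 1.0),
    "max_iter": ("choice", [100, 300, 500, 700, 900]),
}


def sample_configuration(space, rng):
    """Draw one random hyperparameter configuration from the search space."""
    config = {}
    for name, spec in space.items():
        kind = spec[0]
        if kind == "uniform":
            _, low, high = spec
            config[name] = rng.uniform(low, high)  # continuous value
        elif kind == "choice":
            config[name] = rng.choice(spec[1])  # discrete value
        else:
            raise ValueError(f"unknown distribution: {kind}")
    return config


rng = random.Random(0)  # fixed seed so the sketch is reproducible
# 20 draws, mirroring the max_total_runs setting described above.
configs = [sample_configuration(search_space, rng) for _ in range(20)]
```

Each draw is independent of the others, which is why the runs can be executed in parallel and stopped individually by an early termination policy.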

What are the benefits of the early stopping policy you chose? Bandit policy is based on a slack factor or slack amount and an evaluation interval. It terminates runs whose primary metric is not within the specified slack factor or slack amount of the best performing run. Unlike the Truncation selection policy, it doesn't rank all runs only to cancel a fixed percentage of them; it terminates a run as soon as its primary metric falls outside the slack, avoiding unnecessary computation. It also removes the need to compute running averages and their median, making it less computationally cumbersome than MedianStoppingPolicy.
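The termination rule can be sketched in a few lines. This is a simplified model of the check for a metric that is being maximized, not the SDK's implementation. The function name and the slack values in the example are hypothetical, because the values used in this project are not stated in the text.

```python
def bandit_should_terminate(metric, best_metric, slack_factor=None, slack_amount=None):
    """Return True if a run should be stopped (goal: maximize the metric).

    Exactly one of slack_factor (a ratio) or slack_amount (an absolute
    distance) must be given.
    """
    if (slack_factor is None) == (slack_amount is None):
        raise ValueError("specify exactly one of slack_factor or slack_amount")
    if slack_factor is not None:
        # Ratio-based slack: the run must reach best / (1 + slack_factor).
        threshold = best_metric / (1 + slack_factor)
    else:
        # Absolute slack: the run must reach best - slack_amount.
        threshold = best_metric - slack_amount
    return metric < threshold


# Hypothetical example: the best run so far reports 0.91 accuracy.
stop_weak_run = bandit_should_terminate(0.80, 0.91, slack_factor=0.1)
stop_good_run = bandit_should_terminate(0.85, 0.91, slack_factor=0.1)
```

With a slack factor of 0.1 and a best accuracy of 0.91, the threshold is roughly 0.827, so the run at 0.80 is stopped while the run at 0.85 continues.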

Describe the model and hyperparameters generated by AutoML.

- Model - The Voting Ensemble

- Hyperparameters: The ensembled models appear to be arranged as follows:

  - "ensembled_iterations": "[1, 0, 13, 10, 9, 11, 12]"

  - "ensembled_algorithms": "['XGBoostClassifier', 'LightGBM', 'SGD', 'SGD', 'SGD', 'SGD', 'ExtremeRandomTrees']"

  - "ensemble_weights": "[0.2, 0.4, 0.06666666666666667, 0.06666666666666667, 0.06666666666666667, 0.06666666666666667, 0.13333333333333333]"
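As a rough illustration of how such weights combine the member models, the sketch below computes a weighted average of per-model probabilities (soft voting). It assumes the ensemble uses soft voting. The per-model probabilities are made up for the example, and the function name is hypothetical.

```python
# Weights reported by the AutoML run, in the order of the ensembled algorithms.
ensemble_weights = [
    0.2, 0.4,
    0.06666666666666667, 0.06666666666666667,
    0.06666666666666667, 0.06666666666666667,
    0.13333333333333333,
]


def weighted_soft_vote(probabilities, weights):
    """Weighted average of each model's predicted positive-class probability."""
    if len(probabilities) != len(weights):
        raise ValueError("need one probability per weight")
    total = sum(weights)
    return sum(p * w for p, w in zip(probabilities, weights)) / total


# Hypothetical positive-class probabilities from the seven member models
# for a single client.
member_probabilities = [0.9, 0.8, 0.3, 0.3, 0.3, 0.3, 0.6]
score = weighted_soft_vote(member_probabilities, ensemble_weights)
prediction = 1 if score >= 0.5 else 0
```

The two gradient-boosted models carry 60% of the total weight, so in this example their confident votes outweigh the four lower-weighted SGD models.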

Compare the two models and their performance. What are the differences in accuracy? In architecture? If there was a difference, why do you think there was one?

- There isn't a huge difference in accuracy (91.587% vs. 91.454%), but AutoML has a slight edge, which might become more pronounced with a bigger dataset.

- There is a significant difference in the architecture of the models: Hyperdrive tuned a single Logistic Regression model, while AutoML had a wide range of algorithms to choose from and combined several of them in an ensemble.

- The best-suited models may not be obvious at the start, so AutoML can give valuable insights to get on the right track.

What are some areas of improvement for future experiments? Why might these improvements help the model?

- We may spend more time exploring the data and gathering a more balanced dataset. The following columns have a broad gap in the number of instances per category:

  - There are far more married people than single and divorced.

  - There are far more people without a loan than with one.

- The campaign may be spread across the year to better understand how customers' mood varies with the time of year. Better data is likely to yield better results.

- We can analyse and report on how strongly each factor affects the likelihood of a client subscribing, and develop marketing campaigns around those factors.

- We may notice that an AutoML pipeline using MaxAbsScaler and XGBoostClassifier, with an accuracy of 91.494%, performed marginally better than the logistic regression model; its parameters could therefore also be optimised with HyperDrive alongside Logistic Regression.

- The AutoML experiment could also be run for longer, which might find more effective models.

- Different settings can be tried in the AutoML config in order to produce more effective models.
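The category imbalance mentioned in the first improvement item can be quantified before any retraining. The following is a minimal sketch using only the standard library. The helper name is hypothetical and the counts are invented for illustration, not measured from the dataset.

```python
from collections import Counter


def category_shares(values):
    """Return the fraction of rows falling into each category."""
    counts = Counter(values)
    total = sum(counts.values())
    return {category: count / total for category, count in counts.items()}


# Hypothetical marital-status column with a dominant "married" category.
marital = ["married"] * 6 + ["single"] * 3 + ["divorced"] * 1
shares = category_shares(marital)
```

A column whose largest share is far above the others is a candidate for resampling or for collecting more data on the under-represented categories.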